
How to understand query, key and value in transformer

2022-06-28 01:33:00 【coast_ s】

-------------------------------------

Reprinted; original author: yafee123

-------------------------------------

The Transformer comes from "Attention Is All You Need", a landmark 2017 paper from Google Brain, and it has since sparked a new wave of research in both NLP and CV.

One of the Transformer's most important contributions is self-attention, which builds an attention model from the relationships among the input samples.

Self-attention introduces three very important elements: Query, Key, and Value.

Suppose $X \in \mathbb{R}^{n \times d}$ is the feature matrix of an input sample sequence, where $n$ is the number of input samples (the sequence length) and $d$ is the dimension of a single sample.

Query, Key, and Value are defined as follows:

Query: $Q = X W^Q$, where $W^Q \in \mathbb{R}^{d \times d_q}$. This matrix can be regarded as a spatial transformation matrix; the same applies below.

Key: $K = X W^K$, where $W^K \in \mathbb{R}^{d \times d_k}$.

Value: $V = X W^V$, where $W^V \in \mathbb{R}^{d \times d_v}$.

(The dot product between queries and keys used later requires $d_q = d_k$.)
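As a minimal NumPy sketch of these definitions (not the original author's code), the three projections can be written as plain matrix products. The helper name `project_qkv`, the sizes, and the random weights are assumptions chosen only for illustration; in a trained model the weight matrices are learned.

```python
import numpy as np

def project_qkv(X, W_q, W_k, W_v):
    """Return Q = X W^Q, K = X W^K, V = X W^V, with one sample per row."""
    return X @ W_q, X @ W_k, X @ W_v

# Hypothetical sizes: n samples of dimension d, projected to d_k / d_v.
rng = np.random.default_rng(0)
n, d, d_k, d_v = 4, 8, 3, 5
X = rng.normal(size=(n, d))        # each row is one input sample
W_q = rng.normal(size=(d, d_k))    # random stand-ins for learned weights
W_k = rng.normal(size=(d, d_k))
W_v = rng.normal(size=(d, d_v))

Q, K, V = project_qkv(X, W_q, W_k, W_v)
print(Q.shape, K.shape, V.shape)   # (4, 3) (4, 3) (4, 5)
```

Because every row is a sample, row `i` of `Q` depends only on row `i` of `X`: `Q[i]` equals `X[i] @ W_q`.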

Many people are confused when they first see these three concepts. How do they relate to self-attention, and why were these names chosen?

【Note: here each row of X, Q, K, and V represents one input sample. This differs from the convention in which each column of a sample matrix is a sample, and it is very important for understanding what follows.】

This post briefly explains the reasons behind the three names.

To understand what the three concepts mean, first ask: what is self-attention ultimately trying to achieve?

The answer is: given the current input sample $x_i \in \mathbb{R}^{1 \times d}$ (for ease of understanding, we take the input apart row by row), produce an output that is a weighted sum of all samples in the sequence. The assumption is that this output can see the information of every input sample and then chooses where to focus its attention through the different weights.

If you accept this answer, the rest is easy to explain.

The concepts of query, key, and value actually come from recommendation systems. The basic principle is: given a query, compute the relevance between the query and each key, and then use that relevance to find the most appropriate value. For example, in movie recommendation, the query is a person's preference profile (points of interest, age, gender, and so on), the key is the type of a film (comedy, era, and so on), and the value is the movie to be recommended. In this example, the attributes of query, key, and value each live in a different space, yet they have a latent relationship: through some transformation, the attributes of all three can be mapped into a similar space.
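The retrieval process in this analogy might be sketched as below. This is a toy illustration, not a real recommender: the function name `retrieve`, the two-dimensional feature space, and the movie labels are all hypothetical, and the query and keys are assumed to have already been mapped into the same space.

```python
import numpy as np

def retrieve(query, keys, values):
    """Score every key against the query and return the best-matching value."""
    scores = keys @ query              # one dot-product relevance score per key
    best = int(np.argmax(scores))      # hard retrieval: pick a single winner
    return values[best], scores

# Hypothetical shared feature space: (comedy, action).
keys = np.array([[1.0, 0.0],
                 [0.0, 1.0],
                 [0.7, 0.7]])
values = ["comedy film", "action film", "action comedy"]
query = np.array([0.9, 0.1])           # a viewer who mostly likes comedy

movie, scores = retrieve(query, keys, values)
print(movie)                           # comedy film
```

This version picks exactly one value. Self-attention keeps the same scoring step but replaces the hard `argmax` with a weighted sum over all values.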

In self-attention, the current input sample $x_i$ becomes a query through a spatial transformation: $q_i = x_i W^Q$, with $q_i \in \mathbb{R}^{1 \times d_q}$. By analogy with retrieval in a recommendation system, we use the relevance between the query and the keys to retrieve the value we need. So why is $K = X W^K$ called the key?

Because, following the recommendation-system process, we need the relevance between the query and the keys, and the simplest way to get it is the dot product, which yields a relation vector between the current sample and the samples in the sequence. Self-attention performs the operation $r_i = q_i K^\top$, with $r_i \in \mathbb{R}^{1 \times n}$, so each element of $r_i$ can be regarded as the relation between the current sample $x_i$ and one sample in the sequence.
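A small worked example of the dot-product relevance, assuming one key per row as in the rest of this post. The helper name `relation_vector` and the numbers are made up for illustration.

```python
import numpy as np

def relation_vector(q_i, K):
    """Dot product of one query with every key (one key per row of K)."""
    return q_i @ K.T                   # shape (n,): one score per sample

# Hypothetical keys for a sequence of n = 3 samples, d_k = 2.
K = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
q_i = np.array([2.0, 1.0])             # query of the current sample

r_i = relation_vector(q_i, K)
print(r_i)                             # [2. 1. 3.]
```

The third key has the largest dot product with the query, so the third sample would receive the largest weight after normalization.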

Once the relations between samples are available, the next step is natural: normalize the relation vector $r_i = q_i K^\top$ and multiply it by the matrix $V$ to get the final weighted output of self-attention: $O_i = \mathrm{softmax}(r_i)\, V$, with $O_i \in \mathbb{R}^{1 \times d_v}$. (The original paper also scales $r_i$ by $1/\sqrt{d_k}$ before the softmax.)

Every row of $V$ corresponds to one sample of the sequence. Writing $v_j$ for row $j$ of $V$ and $a_{ij}$ for element $j$ of the normalized relation vector $\mathrm{softmax}(q_i K^\top)$, the output is $O_i = \sum_{j=1}^{n} a_{ij} v_j$. Each dimension of the output $O_i$ is therefore the weighted sum of the corresponding dimension of all samples in the sequence, and the weights are the normalized relation vector. (It helps to draw this matrix multiplication out yourself.)
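The full computation, from projection to weighted sum, might be sketched in NumPy as follows. This is a single-head sketch under stated assumptions rather than a reference implementation: the names `softmax` and `self_attention`, the sizes, and the random weights are illustrative, and the `scale` flag is an added option that applies the $1/\sqrt{d_k}$ factor from the original paper (off by default to match the formula in this post).

```python
import numpy as np

def softmax(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(X, W_q, W_k, W_v, scale=False):
    """Single-head self-attention with one sample per row of X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    R = Q @ K.T                        # row i is the relation vector r_i
    if scale:
        R = R / np.sqrt(K.shape[-1])   # scaling used in the original paper
    A = softmax(R)                     # each row of weights sums to 1
    return A @ V, A                    # outputs O and attention weights

# Hypothetical sizes and random stand-ins for learned weights.
rng = np.random.default_rng(0)
n, d, d_k, d_v = 4, 8, 3, 5
X = rng.normal(size=(n, d))
W_q = rng.normal(size=(d, d_k))
W_k = rng.normal(size=(d, d_k))
W_v = rng.normal(size=(d, d_v))

O, A = self_attention(X, W_q, W_k, W_v)

# Check the "weighted sum" reading for one sample: O_i = sum_j a_ij * v_j.
V = X @ W_v
i = 2
o_i = sum(A[i, j] * V[j] for j in range(n))
print(np.allclose(O[i], o_i))          # True
```

The explicit loop at the end is only there to confirm that the matrix product `A @ V` is the same as taking, for each sample, a weighted sum of the rows of `V`.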

From this, we can conclude:

1. Self-attention uses the three concepts of query, key, and value from recommendation systems because it follows a similar process. However, self-attention does not look up a single value for the query; it obtains a weighted sum of the values according to the current query. This is the task of self-attention: to find a better weighted output for the current input, one that contains the information of all visible input samples, with attention controlled by the weights.

2. In self-attention, key and value are both transformations of the input sequence itself, which may be another meaning of the "self" in self-attention: the input acts as both key and value. This is quite reasonable, because in a recommendation system the original feature spaces of the key and value attributes differ, yet they are strongly related, so after suitable spatial transformations they can be unified into one feature space. This is one of the reasons self-attention multiplies by the W matrices.

--

This post is revised and improved continuously; discussion and feedback are welcome.

---

References:

Attention Is All You Need: https://arxiv.org/pdf/1706.03762.pdf

Transformers in Vision: A Survey: https://arxiv.org/abs/2101.01169 [Note: in this article, the dimensions given for W^Q, W^K, and W^V are defined incorrectly; do not be misled.]

A Survey on Visual Transformer: https://arxiv.org/abs/2012.12556

边栏推荐

- Installation and use of Zotero document management tool

- How to add live chat in your Shopify store?

- 免费、好用、强大的开源笔记软件综合评测

- Is it reliable to invest in exchange traded ETF funds? Is it safe to invest in exchange traded ETF funds

- Acwing game 57 [unfinished]

- Message Oriented Middleware for girlfriends

- Efficient supplier management in supply chain

- 章凡:飞猪基于因果推断技术的广告投后归因

- How to build an e-commerce platform at low cost

- Latest MySQL advanced SQL statement Encyclopedia

猜你喜欢

如何在您的Shopify商店中添加实时聊天功能?

Why are cloud vendors targeting this KPI?

美团动态线程池实践思路已开源

【说明】Jmeter乱码的解决方法

How to build dual channel memory for Lenovo Savior r720

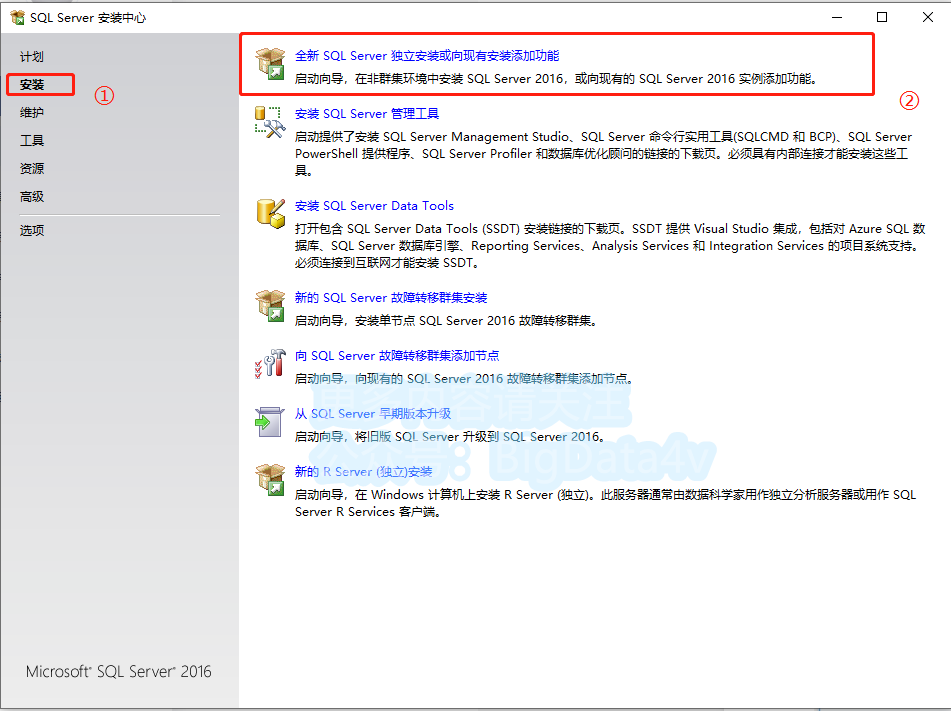

SQL Server 2016 detailed installation tutorial (with registration code and resources)

![完全二叉树的节点个数[非O(n)求法 -> 抽象二分]](/img/56/768f8be9f70bf751f176e40cbb1df2.png)

完全二叉树的节点个数[非O(n)求法 -> 抽象二分]

独立站卖家都在用的五大电子邮件营销技巧,你知道吗?

Class文件结构和字节码指令集

What is the e-commerce conversion rate so abstract?

随机推荐

现在炒股网上开户安全吗?新手刚上路,求答案

美团动态线程池实践思路已开源

为什么要选择不锈钢旋转接头

What is a better and safer app for securities companies to buy stocks

GFS 分布式文件系统概述与部署

什麼是數字化?什麼是數字化轉型?為什麼企業選擇數字化轉型?

golang 猴子吃桃子,求第一天桃子的数量

什么是过孔式导电滑环?

Latest MySQL advanced SQL statement Encyclopedia

【说明】Jmeter乱码的解决方法

Cloud assisted privacy collection intersection (server assisted psi) protocol introduction: Learning

#796 Div.2 F. Sanae and Giant Robot set *

模块化开发

Solon 1.8.3 发布,云原生微服务开发框架

Ceiling scheme 1

Is it safe to open a new bond registration account? Is there any risk?

Deepmind | pre training of molecular property prediction through noise removal

Set集合用法

[untitled]

AI+临床试验患者招募|Massive Bio完成900万美元A轮融资