
Reinforcement learning series (I) -- basic concepts

2022-06-23 17:51:00 【languageX】

I have recently been learning about reinforcement learning and plan to organize and summarize what I have learned. This post first introduces some basic concepts in reinforcement learning.

Reinforcement learning

Reinforcement learning, supervised learning, and unsupervised learning are the three main learning paradigms of machine learning.

In reinforcement learning, an agent learns by trial and error, both exploring the unknown and exploiting the knowledge it already has about the environment. It then follows the learned policy (which action to take in which state) to carry out a sequence of actions that maximizes the cumulative long-term reward.

Differences between supervised learning, unsupervised learning, and reinforcement learning

Supervised learning requires training data with inputs and labels, and learns the expected output for each input from the labels. Reinforcement learning has no labels, only rewards and penalties; the agent must interact with the environment continually and learn the best policy through trial and error.

Unsupervised learning has neither labels nor rewards; it learns hidden structure in the data and is typically used for clustering. Reinforcement learning, by contrast, requires a feedback signal.

Supervised and unsupervised learning have no sequential dependency between samples. In reinforcement learning, the reward is sequence-dependent: it is a delayed return.

Markov decision process (MDP)

Let's first look at the MDP, the theoretical foundation of reinforcement learning. Understanding it gives a clearer picture of how an agent makes decisions and of concepts such as the value function.

An MDP is a four-tuple $M=(S,A,P,R)$, where:

S: the set of states; $s_i$ denotes the state at time step $i$, and $s \in S$.

A: the set of actions; $a_i$ denotes the action at time step $i$, and $a \in A$.

P: the state transition probability. $P_{sa}$ is the probability distribution over next states after taking action $a$ in state $s$.

R: the reward function, giving the reward for taking action $a$ in state $s$. Note that this reward is immediate. A mapping from states $S$ to actions $A$ is a behavioral policy, written $\pi: S \rightarrow A$.

The dynamics of an MDP are shown in the figure below: the agent starts in state $s_1$, takes action $a_1$, moves to state $s_2$, and receives reward $r_1$; it then takes action $a_2$ in state $s_2$, moves to state $s_3$, receives reward $r_2$, and so on.

An MDP has the Markov property: the next state depends only on the current state, with no aftereffect from earlier history. With actions included, the MDP assumes that the probability of transitioning from the current state to the next state depends only on the current state and the action taken, not on any earlier states.
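To make the $(S, A, P, R)$ tuple and the $s_1, a_1, r_1, s_2, \dots$ process concrete, here is a minimal Python sketch. The three-state MDP, its probabilities and rewards, and the names `MDP`, `step`, and `rollout` are hypothetical, chosen only for illustration. Note that `step` samples the next state from `P[(s, a)]` alone, which is the Markov property: earlier states play no role.

```python
import random
from dataclasses import dataclass


@dataclass
class MDP:
    """Hypothetical tabular MDP M = (S, A, P, R)."""
    states: list
    actions: list
    P: dict         # P[(s, a)] -> {next_state: probability}
    R: dict         # R[(s, a)] -> immediate reward for taking a in s
    terminal: set


def step(mdp, s, a, rng):
    """Sample s' ~ P(. | s, a). Only (s, a) is used: the Markov property."""
    dist = mdp.P[(s, a)]
    s_next = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
    return s_next, mdp.R[(s, a)]


def rollout(mdp, policy, s0, rng, max_steps=100):
    """Follow a deterministic policy pi: S -> A and record (s_i, a_i, r_i)."""
    trajectory, s = [], s0
    for _ in range(max_steps):
        if s in mdp.terminal:
            break
        a = policy[s]
        s_next, r = step(mdp, s, a, rng)
        trajectory.append((s, a, r))
        s = s_next
    return trajectory, s


# Hypothetical example: s3 is terminal; "go" from s2 earns reward 1.
mdp = MDP(
    states=["s1", "s2", "s3"],
    actions=["stay", "go"],
    P={
        ("s1", "stay"): {"s1": 1.0},
        ("s1", "go"): {"s2": 0.9, "s1": 0.1},
        ("s2", "stay"): {"s2": 1.0},
        ("s2", "go"): {"s3": 1.0},
    },
    R={("s1", "stay"): 0.0, ("s1", "go"): 0.0,
       ("s2", "stay"): 0.0, ("s2", "go"): 1.0},
    terminal={"s3"},
)
policy = {"s1": "go", "s2": "go"}
trajectory, final_state = rollout(mdp, policy, "s1", random.Random(0))
for s, a, r in trajectory:
    print(f"state={s}, action={a}, reward={r}")
print("final state:", final_state)
```

Storing `P` as a dictionary of next-state distributions keeps the sketch close to the $P_{sa}$ notation; real problems with large state spaces would need a different representation.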

Value function (value function)

As mentioned above, the reward function of an MDP gives an immediate reward. In reinforcement learning, however, what we care about is not the immediate reward but the long-term return of a state under a policy.

Setting actions aside for now, we define a value function to represent the long-term effect of following policy $\pi$ from the current state.

Define $G_t$ as the sum of all discounted future rewards starting from time $t$:

$$G_t = r_{t+1} + \gamma r_{t+2} + \gamma^2 r_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k r_{t+k+1}$$

where $\gamma \in [0,1]$ is called the discount factor and expresses how important future rewards are relative to the current reward. With $\gamma=0$, only the immediate reward counts and long-term return is ignored; with $\gamma=1$, long-term rewards count as much as the immediate reward.
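A short sketch of computing $G_t$ for a finite reward sequence; the function name `discounted_return` and the reward values are illustrative assumptions. It works backwards with the recursion $G_t = r_{t+1} + \gamma G_{t+1}$, which gives the same result as the sum above for a finite episode.

```python
def discounted_return(rewards, gamma):
    """G_t for rewards = [r_{t+1}, r_{t+2}, ...] of a finite episode."""
    g = 0.0
    # Work backwards: G_t = r_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rewards = [1.0, 0.0, 2.0]  # hypothetical r_{t+1}, r_{t+2}, r_{t+3}
print(discounted_return(rewards, 0.0))  # 1.0: only the immediate reward counts
print(discounted_return(rewards, 0.5))  # 1.5 = 1 + 0.5*0 + 0.25*2
print(discounted_return(rewards, 1.0))  # 3.0: later rewards weigh as much as the first
```

With $\gamma = 1$ the sum is only guaranteed to be finite when the episode terminates, which is why tasks without a terminal state usually use $\gamma < 1$.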

The value function $V(s)$ is defined as the long-term value of state $s$, i.e., the expectation of $G_t$:

$$V(s) = \mathbb{E}[G_t \mid S_t = s]$$

Now bring the policy and actions of the MDP back in. Given a policy $\pi$ and an initial state $s$, the action is $a = \pi(s)$, and we can define:

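State-value function:

$$v_\pi(s) = \mathbb{E}_\pi[G_t \mid S_t = s]$$

Action-value function (given the current state $s$ and current action $a$, then following policy $\pi$ afterwards):

$$q_\pi(s,a) = \mathbb{E}_\pi[G_t \mid S_t = s, A_t = a]$$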

In $q_\pi(s,a)$, not only the policy $\pi$ and the initial state $s$ are given, but also the current action $a$. This is the main difference between $q_\pi(s,a)$ and $v_\pi(s)$.
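The difference between $q_\pi$ and $v_\pi$ can be checked on a tiny example. The sketch below assumes a hypothetical deterministic chain A → B → T (T terminal, reward 1 for entering T) and a policy that always chooses `go`; the $v_\pi$ values were worked out by hand for $\gamma = 0.9$. It computes $q_\pi(s,a)$ by one-step lookahead, $q_\pi(s,a) = \sum_{s',r} p(s',r \mid s,a)[r + \gamma v_\pi(s')]$, a standard identity that the post does not state explicitly.

```python
import math

GAMMA = 0.9
# Hypothetical deterministic chain A -> B -> T; model[(s, a)] -> [(prob, s_next, r)]
model = {
    ("A", "stay"): [(1.0, "A", 0.0)],
    ("A", "go"): [(1.0, "B", 0.0)],
    ("B", "stay"): [(1.0, "B", 0.0)],
    ("B", "go"): [(1.0, "T", 1.0)],
}
pi = {"A": "go", "B": "go"}              # deterministic policy, a = pi(s)
v_pi = {"A": 0.9, "B": 1.0, "T": 0.0}    # worked out by hand for this pi, gamma = 0.9


def q_from_v(s, a, v, gamma=GAMMA):
    """q_pi(s, a): take the given action a now, then follow pi (whose values are v)."""
    return sum(p * (r + gamma * v[s_next]) for p, s_next, r in model[(s, a)])


for s in pi:
    for a in ("stay", "go"):
        print(f"q_pi({s}, {a}) = {q_from_v(s, a, v_pi):.2f}")
    # For a deterministic policy, v_pi(s) equals q_pi(s, pi(s)).
    assert math.isclose(v_pi[s], q_from_v(s, pi[s], v_pi))
```

For example, $q_\pi(A, \text{stay}) \approx 0.81$ is lower than $v_\pi(A) = 0.9$: forcing one `stay` first delays the reward by a step. $q_\pi(A, \text{go})$ coincides with $v_\pi(A)$ because `go` is what $\pi$ would choose anyway.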

The optimal state-value function of an MDP is $v_*(s) = \max_{\pi} v_{\pi}(s)$: in state $s$, choose the policy that maximizes the value. A policy that attains $v_*$ is an optimal policy.

Solving for the optimal policy

As stated above, our goal is to find a policy that maximizes the value function, which is an optimization problem.

The main methods are:

- Dynamic programming (dynamic programming methods)

Dynamic programming (DP) computes the value function as:

$$V(S_t) \leftarrow \mathbb{E}_\pi[r_{t+1} + \gamma V(S_{t+1})] = \sum_a \pi(a \mid S_t) \sum_{s',r} p(s',r \mid S_t,a)\,[r + \gamma V(s')]$$

As the formula shows, DP computes the value of the current state $s$ from the values of all its successor states $s'$. This is bootstrapping: the current value is estimated from the values of subsequent states. The successor states and rewards come from the model $p(s',r \mid S_t,a)$.

DP therefore keeps a table (Q table) holding the value of each state and obtains the best policy from it by iteration. Because the table is stored, the current value can be estimated from the values of successor states, so single-step updates are possible, which improves learning efficiency.

Advantage: once the table has been obtained in advance, no further interaction with the environment is needed; the optimal policy is obtained directly by the iterative algorithm.

Disadvantage: in practical applications, the state transition probabilities and the value function are difficult to obtain.
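A minimal sketch of the DP update (iterative policy evaluation), assuming a hypothetical chain A → B → T (T terminal, reward 1 for entering T) and an equiprobable random policy. The dictionary `model[(s, a)]` lists `(probability, next_state, reward)` triples and plays the role of $p(s', r \mid S_t, a)$; the name `policy_evaluation` and the threshold `theta` are illustrative choices. This version stores state values $V(s)$; a Q table would be keyed by `(s, a)` instead.

```python
# Hypothetical chain A -> B -> T (T terminal). model[(s, a)] plays the role of
# p(s', r | s, a) as a list of (probability, next_state, reward) triples.
states = ["A", "B", "T"]
model = {
    ("A", "stay"): [(1.0, "A", 0.0)],
    ("A", "go"): [(1.0, "B", 0.0)],
    ("B", "stay"): [(1.0, "B", 0.0)],
    ("B", "go"): [(1.0, "T", 1.0)],
}
# Equiprobable random policy pi(a | s); terminal T has no entry.
random_policy = {s: {"stay": 0.5, "go": 0.5} for s in ("A", "B")}


def policy_evaluation(states, model, policy, gamma=0.9, theta=1e-10):
    """Sweep V(s) <- sum_a pi(a|s) sum_{s',r} p(s',r|s,a) [r + gamma V(s')] until stable."""
    V = {s: 0.0 for s in states}  # the value table; terminal states stay at 0
    while True:
        delta = 0.0
        for s, action_probs in policy.items():
            v_new = sum(
                pi_a * p * (r + gamma * V[s_next])
                for a, pi_a in action_probs.items()
                for p, s_next, r in model[(s, a)]
            )
            delta = max(delta, abs(v_new - V[s]))
            V[s] = v_new  # bootstrapping: reuses current estimates of successor states
        if delta < theta:
            return V


V = policy_evaluation(states, model, random_policy)
print({s: round(v, 4) for s, v in V.items()})
```

For this policy the fixed point can also be solved by hand: $V(B) = 10/11 \approx 0.909$ and $V(A) = 90/121 \approx 0.744$. The sweep never samples anything; it only needs the model, which is exactly the requirement named in the disadvantage above.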

- Monte Carlo methods

The state-value update rule of the Monte Carlo (MC) method is:

$$V(S_t) \leftarrow V(S_t) + \alpha\,[G_t - V(S_t)]$$

where $G_t$ is the cumulative return observed in each episode and $\alpha$ is the learning rate. The difference between the return $G_t$ from $S_t$ and the current estimate $V(S_t)$ is multiplied by the learning rate and added to $V(S_t)$ to give the new estimate. Because there is no model providing the state transition probabilities, MC estimates state values from the empirical mean of observed returns.

Monte Carlo is a sampling method (trial and error through interaction with the environment) for estimating the expected value of a state. After sampling, the rewards given by the environment are reflected in the value function.

Advantage: the state transition probabilities need not be known; the expected value is estimated from experience (sampled trial-and-error episodes). With enough samples, so that every possible state-action pair is sampled, the estimate can approach the true expectation as closely as desired.

Disadvantage: because a state's value is the reward accumulated from state $s$ up to the terminal state, every episode must run all the way to the terminal state before the value of $s$ can be computed, so learning is very inefficient.
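The following sketch applies the MC update to the same kind of hypothetical chain A → B → T, this time without giving the learner the model: it only sees sampled episodes. With `alpha=None` the step size is $1/N(s)$, which makes $V(s)$ the running empirical mean of observed returns; passing a constant `alpha` gives the fixed-learning-rate form of the formula. Every-visit updates, the function names, and the seed are illustrative assumptions.

```python
import random

GAMMA = 0.9


def env_step(s, a):
    """Hypothetical chain A -> B -> T: 'go' advances (reward 1 on reaching T), 'stay' stays."""
    if a == "stay":
        return s, 0.0
    return ("B", 0.0) if s == "A" else ("T", 1.0)


def generate_episode(rng, start="A"):
    """Follow the equiprobable random policy until T; return [(S_t, r_{t+1}), ...]."""
    episode, s = [], start
    while s != "T":
        a = rng.choice(["stay", "go"])
        s_next, r = env_step(s, a)
        episode.append((s, r))
        s = s_next
    return episode


def mc_prediction(num_episodes=5000, gamma=GAMMA, alpha=None, seed=0):
    """Every-visit MC: V(S_t) <- V(S_t) + alpha * (G_t - V(S_t))."""
    rng = random.Random(seed)
    V = {"A": 0.0, "B": 0.0, "T": 0.0}
    N = {s: 0 for s in V}  # visit counts, used when alpha is None
    for _ in range(num_episodes):
        G = 0.0
        # Walk the finished episode backwards so G_t = r_{t+1} + gamma * G_{t+1}.
        for s, r in reversed(generate_episode(rng)):
            G = r + gamma * G
            N[s] += 1
            step_size = alpha if alpha is not None else 1.0 / N[s]
            V[s] += step_size * (G - V[s])
    return V


V = mc_prediction()
print({s: round(v, 3) for s, v in V.items()})
```

With enough episodes the estimates approach the exact values for this policy ($V(A) = 90/121 \approx 0.744$, $V(B) = 10/11 \approx 0.909$). Each episode must reach T before any update happens, which is the inefficiency described above.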

- Temporal-difference methods (temporal difference)

Finally, here is the value-function update rule of the temporal-difference (TD) algorithm:

$$V(S_t) \leftarrow V(S_t) + \alpha\,[r_{t+1} + \gamma V(S_{t+1}) - V(S_t)]$$

Here $r_{t+1} + \gamma V(S_{t+1})$ takes the place of $G_t$ in MC. It does not require a model of the state transition function, nor does it need to run to the terminal state to obtain the cumulative return; instead it uses the immediate reward and the value function at the next time step, similar to bootstrapping in DP.

The TD method combines the idea of dynamic programming with Monte Carlo sampling. Like Monte Carlo, it avoids depending on state transition probabilities: it estimates state values by sampling through interaction with the environment and learns directly from experience. Like dynamic programming, it estimates the current value from the values of subsequent states before the terminal state is reached, enabling one-step updates that improve efficiency.
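A TD(0) sketch on the same kind of hypothetical chain A → B → T with an equiprobable random policy. Unlike the MC sketch, `V[s]` is updated right after every step using $r_{t+1} + \gamma V(S_{t+1})$ as the target, so no episode needs to finish first. The constant step size `alpha=0.01`, the episode count, and all names are assumptions chosen for illustration.

```python
import random


def env_step(s, a):
    """Hypothetical chain A -> B -> T: 'go' advances (reward 1 on reaching T), 'stay' stays."""
    if a == "stay":
        return s, 0.0
    return ("B", 0.0) if s == "A" else ("T", 1.0)


def td0_prediction(num_episodes=20000, gamma=0.9, alpha=0.01, seed=0):
    """TD(0): V(S_t) <- V(S_t) + alpha * (r_{t+1} + gamma * V(S_{t+1}) - V(S_t))."""
    rng = random.Random(seed)
    V = {"A": 0.0, "B": 0.0, "T": 0.0}  # V(T) is never updated, so it stays 0
    for _ in range(num_episodes):
        s = "A"
        while s != "T":
            a = rng.choice(["stay", "go"])  # equiprobable random policy
            s_next, r = env_step(s, a)
            td_target = r + gamma * V[s_next]
            V[s] += alpha * (td_target - V[s])  # update now; no need to wait for episode end
            s = s_next
    return V


V = td0_prediction()
print({s: round(v, 3) for s, v in V.items()})
```

Because the step size is constant, the estimates keep fluctuating slightly around the true values ($\approx 0.744$ for A, $\approx 0.909$ for B) instead of settling exactly; a decaying step size is a common alternative.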

Elements of reinforcement learning

With the basics above in place, let's return to the framework of reinforcement learning:

Agent: the decision maker.

Environment: whatever the agent interacts with.

State: the situation the agent is currently in within the environment.

Action: what the agent performs at each step, determined by the policy from the current state and the reward received at the previous step.

Reward: the feedback the environment returns for an action.

Policy: the agent's behavior, a mapping from states to actions.

To solve a problem with reinforcement learning, we first abstract an environment and an agent that interacts with it, and extract from the environment the states (S), the actions (A), and the immediate reward for performing an action (R). The agent then uses an algorithm to determine an optimal policy; following that policy, it performs the best action in the environment so that the accumulated reward is maximized.
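The workflow above can be sketched as an agent-environment loop. Everything here is hypothetical: `ChainEnvironment` (with a simplified `reset`/`step` interface that is an assumption, not tied to any particular library), `TabularPolicyAgent`, and `run_episode`. The agent's policy is just a state-to-action table, and the loop accumulates the rewards the environment returns.

```python
from typing import Dict, Tuple


class ChainEnvironment:
    """Hypothetical environment: states A -> B -> T, actions 'stay'/'go'."""

    def reset(self) -> str:
        self.state = "A"
        return self.state

    def step(self, action: str) -> Tuple[str, float, bool]:
        """Apply an action; return (next_state, reward, done)."""
        if action == "go" and self.state == "A":
            self.state, reward = "B", 0.0
        elif action == "go" and self.state == "B":
            self.state, reward = "T", 1.0  # reaching T is the only rewarded transition
        else:
            reward = 0.0  # "stay" leaves the state unchanged
        return self.state, reward, self.state == "T"


class TabularPolicyAgent:
    """Agent whose policy is a lookup table pi: S -> A."""

    def __init__(self, policy: Dict[str, str]):
        self.policy = policy

    def act(self, state: str) -> str:
        return self.policy[state]


def run_episode(env: ChainEnvironment, agent: TabularPolicyAgent, max_steps: int = 50) -> float:
    """Agent-environment loop: observe the state, act, collect the reward, until done."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = agent.act(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


go_agent = TabularPolicyAgent({"A": "go", "B": "go"})
lazy_agent = TabularPolicyAgent({"A": "stay", "B": "stay"})
print(run_episode(ChainEnvironment(), go_agent))    # 1.0
print(run_episode(ChainEnvironment(), lazy_agent))  # 0.0
```

Comparing the two agents shows what the optimization is after: the policy that always chooses `go` collects the reward, while the one that always stays collects nothing within the step limit.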

If I have misunderstood anything, please point it out ~

Reference material :

边栏推荐

猜你喜欢

![[qsetting and.Ini configuration files] and [create resources.qrc] in QT](/img/67/85a5e7f6ad4220600acd377248ef46.png)

[qsetting and.Ini configuration files] and [create resources.qrc] in QT

Interface ownership dispute

ctfshow php的特性

![[untitled] Application of laser welding in medical treatment](/img/c5/9c9edf1c931dfdd995570fa20cf7fd.png)

[untitled] Application of laser welding in medical treatment

How to make sales management more efficient?

【网络通信 -- WebRTC】WebRTC 源码分析 -- 接收端带宽估计

FPN characteristic pyramid network

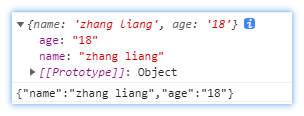

JSON - learning notes (message converter, etc.)

Wechat applet: time selector for the estimated arrival date of the hotel

内网渗透令牌窃取

随机推荐

【网络通信 -- WebRTC】WebRTC 源码分析 -- PacingController 相关知识点补充

What is the mobile account opening process? Is it safe to open an account online now?

解答02:Smith圆为什么能“上感下容 左串右并”?

Best practices cloud development cloudbase content audit capability

Ctfshow PHP features

12 initialization of beautifulsoup class

浅析3种电池容量监测方案

Hapoxy cluster service setup

Explanation of the principle and code implementation analysis of rainbow docking istio

Another breakthrough! Alibaba cloud enters the Gartner cloud AI developer service Challenger quadrant

Answer 03: why can Smith circle "allow left string and right parallel"?

Innovative technology leader! Huawei cloud gaussdb won the 2022 authoritative award in the field of cloud native database

Installation, configuration, désinstallation de MySQL

The principle of MySQL index algorithm and the use of common indexes

[qsetting and.Ini configuration files] and [create resources.qrc] in QT

内网渗透令牌窃取

How to make sales management more efficient?

Discussion on five kinds of zero crossing detection circuit

Intranet penetration token stealing

Drawing black technology - easy to build a "real twin" 2D scene