Common Spark interview questions

2022-07-23 11:14:00 【I'm a girl, I don't program yuan】

Data skew

- What is data skew

In a parallel big-data processing system, data skew occurs when some partitions hold significantly more data than the others, so the processing speed of those partitions becomes the bottleneck for the whole dataset.

- What causes data skew

Tasks in the same stage process significantly different amounts of data: some tasks handle far more data than the rest.

- How to resolve data skew

① Increase the parallelism of shuffle operations

When there are too few tasks, many keys are assigned to the same task and the load is uneven. Raising the number of tasks appropriately spreads the keys more evenly. (This does not help when a single key has significantly more data than the other keys.)
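The effect of raising parallelism can be sketched with a small simulation. This is plain Python, not Spark code: `partition_of` is a hypothetical stand-in for a hash partitioner (it uses CRC32, not Spark's actual hash function), and the record set is invented for illustration.

```python
import zlib
from collections import Counter


def partition_of(key: str, num_partitions: int) -> int:
    # Deterministic stand-in for a hash partitioner (an assumption, not Spark's hash).
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions):
    # Count how many records land in each partition.
    sizes = Counter(partition_of(k, num_partitions) for k in keys)
    return [sizes.get(p, 0) for p in range(num_partitions)]


# Hypothetical data: one hot key plus 1,000 ordinary keys with one record each.
records = ["hello"] * 100_000 + [f"key_{i}" for i in range(1_000)]

few = partition_sizes(records, 4)
many = partition_sizes(records, 64)

print("largest partition with 4 tasks: ", max(few))
print("largest partition with 64 tasks:", max(many))
```

With more partitions the ordinary keys are spread more thinly, but every record of the hot key still hashes to the same partition, so the largest partition never drops below 100,000 records. That is the limitation noted in parentheses above.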

② Two-stage aggregation (add a random prefix or suffix to the key)

If one key has too much data, for example the key 'hello', add a random prefix so that it becomes 0_hello, 1_hello, 2_hello, ...

The prefixed keys are distributed across different tasks and aggregated locally; the prefix is then removed and a global aggregation is performed. This resolves the skew caused by a single oversized key.
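The two stages can be sketched as follows. This is a plain-Python simulation of a count aggregation, not Spark code; the function name `two_stage_count`, the number of salts, and the sample keys are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def two_stage_count(keys, num_salts, seed=0):
    rng = random.Random(seed)

    # Stage 1: prefix each key with a random salt and aggregate per salted key.
    # In a cluster, each salted key could be handled by a different task.
    partial = defaultdict(int)
    for key in keys:
        salted = f"{rng.randrange(num_salts)}_{key}"
        partial[salted] += 1

    # Stage 2: strip the salt and aggregate the partial results globally.
    total = defaultdict(int)
    for salted, count in partial.items():
        original = salted.split("_", 1)[1]
        total[original] += count

    return dict(partial), dict(total)


# Hypothetical skewed input: "hello" dominates.
keys = ["hello"] * 10_000 + ["world"] * 10
partial, total = two_stage_count(keys, num_salts=4)

print(partial)  # the "hello" records are split across 0_hello ... 3_hello
print(total)    # the final counts match a direct aggregation
```

The global stage only has to combine at most `num_salts` partial results per key, so it stays cheap even when the original key is very large. Note that this works for aggregations whose partial results can be merged, such as counts and sums.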

③ Convert a reduce join into a map join to eliminate the skew introduced by the shuffle

reduce join: suited to joining two large tables. The map phase keeps all the data of both tables and uses the join key as the key; after the data is distributed, the join itself is performed in the shuffle and reduce phases.

Because the map phase of a reduce join does not slim down the data and the reduce phase has to combine the rows of both tables, memory consumption is high.

map join: suited to joining a small table with a large table. All the data of the small table is loaded into the memory of each node, and the join is completed without a reduce phase.

A map join requires one table to be small enough for the join to be done in memory.
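The idea behind a map join can be sketched in plain Python: build an in-memory lookup from the small table, then stream the large table past it. The function `map_side_join` and the sample tables are hypothetical; in Spark the small table would be broadcast to every executor rather than held in a local dictionary.

```python
from collections import defaultdict


def map_side_join(large_rows, small_rows):
    # Build an in-memory lookup from the small table (the "broadcast" copy).
    lookup = defaultdict(list)
    for key, value in small_rows:
        lookup[key].append(value)

    # Stream the large table; each row is joined locally, so the large table
    # never has to be regrouped by key (no shuffle, no reduce phase).
    for key, value in large_rows:
        for small_value in lookup.get(key, []):
            yield key, value, small_value


# Hypothetical tables: (company_id, amount) and (company_id, name).
orders = [(0, 10.0), (0, 12.5), (1, 7.0), (2, 3.0)]
companies = [(0, "Acme"), (1, "Globex")]

joined = list(map_side_join(orders, companies))
print(joined)
```

Because the large table is processed row by row wherever it already lives, a hot join key no longer concentrates records on a single reducer. The cost is that the whole small table must fit in memory on each node.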

④ Remove the keys that carry too much data. For example, if company_id=0 corresponds to hundreds of millions of records while every other value corresponds to only a handful, the node that receives company_id=0 will run out of memory and crash the program. Filtering out this outlier lets the job finish quickly.
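A minimal sketch of spotting and filtering such an outlier key, in plain Python. The helper `find_hot_keys`, the 50% threshold, and the sample data are assumptions for illustration; dropping records is only appropriate when the outlier key (often a placeholder such as 0 or null) is not needed in the result.

```python
from collections import Counter


def find_hot_keys(keys, max_share):
    # Return the keys whose share of all records exceeds max_share.
    counts = Counter(keys)
    total = sum(counts.values())
    return {k for k, c in counts.items() if c / total > max_share}


# Hypothetical data: company_id=0 dominates, every other id appears once.
company_ids = [0] * 9_000 + list(range(1, 1_001))

hot = find_hot_keys(company_ids, max_share=0.5)
kept = [cid for cid in company_ids if cid not in hot]

print(hot)        # the outlier key(s)
print(len(kept))  # records remaining after the filter
```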

Spark runtime architecture

- Spark's components include the driver and the executors (both are processes), which are responsible for using resources.

Driver:

The process that runs the main() method and creates the SparkContext object. It divides the user program into multiple stages (run in sequence), decomposes each stage into multiple tasks (run in parallel), assigns the tasks to the executors, and schedules their execution.

Once the driver terminates, the Spark application ends.

Executor:

Runs tasks, caches RDDs in the executor process, and returns results to the driver process.

Executors are independent of each other. If one executor crashes, the Spark application can continue to run.

- The master node and the worker nodes (physical nodes) are responsible for resource management and allocation.

One machine can act as both a master and a worker node.

Master node:

Manages the worker nodes. The application requests resources for its executors from the master.

Worker node:

Manages the executor processes. By default a worker is assigned one executor; it can also be configured to run several.

Wide and narrow dependencies

1. Narrow dependencies

In a narrow dependency, each partition of the parent RDD is used by at most one partition of the child RDD. A child RDD partition usually depends on a constant number of parent RDD partitions.

2. Wide dependencies

In a wide dependency, each partition of the parent RDD may be used by multiple partitions of the child RDD. A child RDD partition usually depends on all partitions of the parent RDD.

3. Differences between wide and narrow dependencies

A wide dependency usually corresponds to a shuffle: the data of a single parent RDD partition has to be sent to different child RDD partitions, which may involve transferring data between nodes. In a narrow dependency, each parent RDD partition is passed to a single child RDD partition, which can usually be done within one node.

When an RDD partition is lost under a narrow dependency, each parent partition corresponds to only one child partition, so only the parent partitions of the lost partition need to be recomputed. All of the recomputed data is used (100% utilization).

When an RDD partition is lost under a wide dependency, only part of the data in each recomputed parent partition belongs to the lost child partition; the rest is redundant computation. A child partition in a wide dependency usually draws on multiple parent partitions, so in the extreme case every parent partition has to be recomputed.
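The recovery cost of the two dependency types can be compared with a toy model in plain Python. Everything here is hypothetical: the three parent partitions, the partitioner `child_partition_of`, and the helper names. It models a map-style narrow dependency and a groupByKey-style wide dependency, and ignores Spark details such as reusing shuffle files that still exist.

```python
# Toy parent RDD: three partitions of (key, value) records (hypothetical data).
parent_partitions = [
    [("a", 1), ("b", 2)],
    [("a", 3), ("c", 4)],
    [("b", 5), ("c", 6)],
]
NUM_CHILD_PARTITIONS = 3


def child_partition_of(key: str) -> int:
    # Toy partitioner for the shuffle: "a" -> 0, "b" -> 1, "c" -> 2.
    return (ord(key) - ord("a")) % NUM_CHILD_PARTITIONS


def recompute_narrow(lost: int):
    # map-style dependency: child partition i is built from parent partition i only.
    needed = {lost}
    read = len(parent_partitions[lost])
    used = read  # every recomputed record ends up in the lost partition
    return needed, used / read


def recompute_wide(lost: int):
    # groupByKey-style dependency: any parent partition may hold records
    # that belong to the lost child partition.
    needed = {
        i for i, part in enumerate(parent_partitions)
        if any(child_partition_of(k) == lost for k, _ in part)
    }
    read = sum(len(parent_partitions[i]) for i in needed)
    used = sum(
        1 for i in needed for k, _ in parent_partitions[i]
        if child_partition_of(k) == lost
    )
    return needed, used / read


narrow_needed, narrow_utilization = recompute_narrow(0)
wide_needed, wide_utilization = recompute_wide(0)
print(narrow_needed, narrow_utilization)
print(wide_needed, wide_utilization)
```

In this toy data, recovering child partition 0 under the narrow dependency touches one parent partition and uses all of its records, while under the wide dependency it touches two parent partitions and uses only half of the records it reads.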

4. Corresponding functions

Functions that produce narrow dependencies:

map, filter, union, join (parent RDDs are hash-partitioned), mapPartitions, mapValues

Functions that produce wide dependencies:

groupByKey, join (parent RDDs are not hash-partitioned), partitionBy

References:

Spark Interview Questions (Part 1). Zhihu column. runzhliu

Spark Wide and Narrow Dependencies. Jianshu. Out of round stone

边栏推荐

- Concepts et différences de bits, bits, octets et mots

- Leetcode daily question (1946. largest number after varying substring)

- shell/sh/bash的区别和基本操作

- 十年架构五年生活-02第一份工作

- 视、音频分开的网站内容如何合并?批量下载代码又该如何编写?

- 超级简单的人脸识别api 只需几行代码就可以实现人脸识别

- 遇到了ANR错误

- js中拼接字符串,注意传参,若为字符串,则需要加转义字符

- wps中的word中公式复制完后是图片

- 【文献调研】在Pubmed上搜索特定影响因子期刊上的论文

猜你喜欢

Install enterprise pycharm and Anaconda

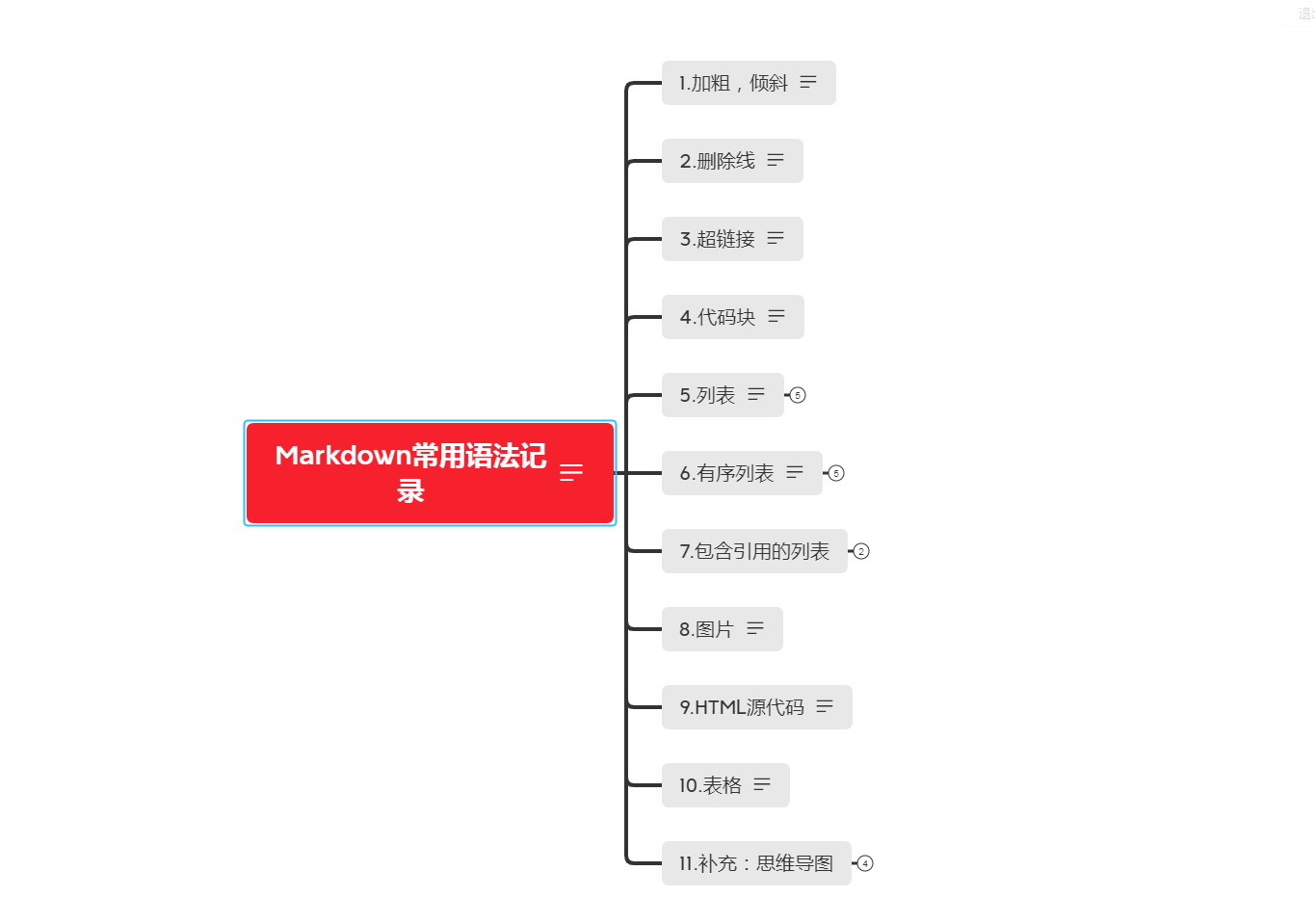

Markdown常用语法记录

9. Ray tracing

字典创建与复制

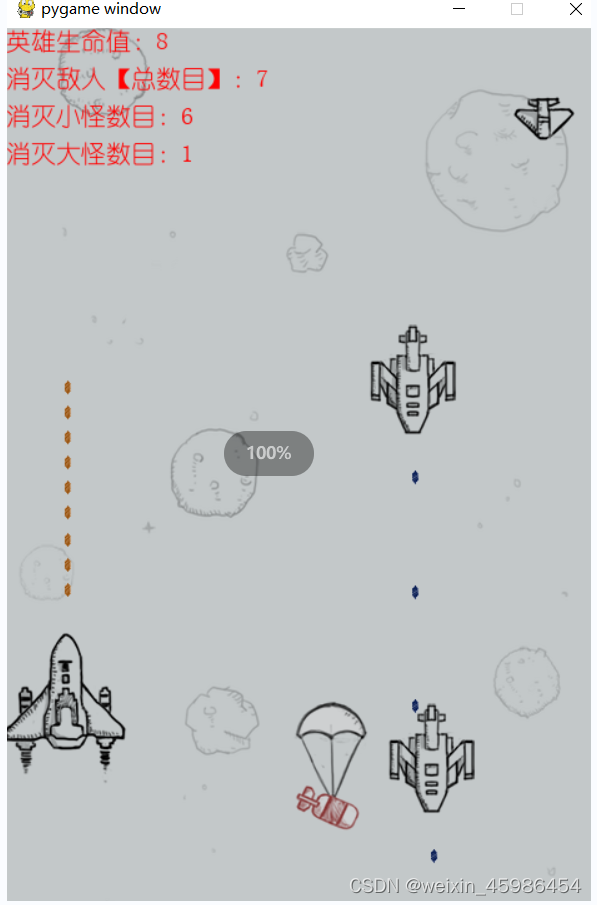

pygame实现飞机大战游戏

C1 -- vivado configuration vs code text editor environment 2022-07-21

plsql创建Oracle数据库报错:使用Database Control配置数据库时,要求在当前Oracle主目录中配置监听程序 必须运行Netca以配置监听程序,然后才能继续。或者

When using cache in sprintboot, the data cannot be loaded

With only 5000 lines of code, AI renders 100 million landscape paintings on v853

cuda10.0配置pytorch1.7.0+monai0.9.0

随机推荐

频谱聚类|拉普拉斯矩阵

遇到了ANR错误

Usage of countdownlatch

MySQL syntax (pure syntax)

【pyradiomics】bugFix:GLCM特征时:IndexError: arrays used as indices must be of integer (or boolean) type

img标签设置height和width无效

Spark常见面试问题整理

EntityManagerFactory和EntityManager的一个用法探究

安装企业版pycharm以及anaconda

Data Lake: viewing data lake from data warehouse

Fun code rain, share it online~-

FFmpeg 音频编码

使用pytorch实现基于VGG 19预训练模型的鲜花识别分类器,准确度达到97%

MySQL statement queries all child nodes of a level node

初识Flask

Basic concepts of software testing

IMG tag settings height and width are invalid

比特,比特,字節,字的概念與區別

mysql invalid conn排查

视、音频分开的网站内容如何合并?批量下载代码又该如何编写?