当前位置:网站首页>Gpt/gpt2/dialogpt detailed explanation comparison and application - text generation and dialogue

Gpt/gpt2/dialogpt detailed explanation comparison and application - text generation and dialogue

2022-06-24 04:02:00 【Goose】

1. background

GPT The full name of :Generative Pre-Training, The title of his thesis is Improving Language Understanding by Generative Pre-Training.

I believe everyone has seen GPT The series has made record breaking achievements in text generation tasks and other tasks, as shown in the figure below , This article is mainly about GPT And GPT What is the structure and task of .

2. GPT

GPT The underlying architecture of is transformer, By pre-training and fine-tuning It's made up of two parts

Pre training data GPT Use BooksCorpus Data sets , It contains 7000 This book , total 5GB written words . Such a huge data scale , yes GPT One of the keys to success .Elmo What is used 1B Word Benchmark The data set is the same size , But it was reorganized into a single sentence , Thus a long sequence of samples is lost , It is that it is not GPT Reasons for selection .

2.1 pre-training

pre-training Is to use transformer The framework of the process , But for transformer Changed a little .transformer I mentioned in my previous blog , See https://cloud.tencent.com/developer/article/1868051

We know transformer Are there in encoder Layer and the decoder layer , and GPT It mainly uses decoder layer , But I made a little change , Removed the middle Encoder-Decoder Attention layer ( Because no encoder layer , So there's no need to Encoder-Decoder Attention layer ) Here's the picture

The whole process is shown in the figure above , The word vector (token embedding) And the position vector (position embedding) And as input , after 12 Layer of Masked Multi-Head Attention and Feed Forward( Of course, the middle also includes Layer Norm), Get the predicted vector and the vector of the last word , The word vector of the last word will be used as a follow-up fine-tuning The input of .

GPT It's essentially an autoregressive model , Means to return from , Every time a new word is generated , Add the new word to the original input sentence , As a new input sentence .

The model will input statements into the structure shown in the figure above , Predict the next word , Then add the new words , As a new input , Continue to predict . The loss function calculates the deviation between the predicted value and the actual value .

Training objectives :

In the pre training phase , Only take the language model as the training goal , That is... In the picture Text Prediction.

The formula 1 Describes this typical left to right language model : Predict the next word given the above conditions .

In the formula 2 in ,h0 Represents the first hidden layer of the network ,U Represents the input sequence ,We Express token embedding matrix ,Wp Express position embedding.h1 To hn Yes, each floor Transformer block Output hidden layer of . With a final sotfmax Calculate the probability of the next word .

problem : What are the termination conditions for unsupervised training ? When can the training stop ? For example, clustering stops when the classification is stable

We can evaluate when the training stops by accuracy . The text generated during training is compared with the original text , Get accuracy , Whether to stop training is determined by whether the accuracy reaches the expected value or whether the accuracy fluctuates all the time .

2.2 Supervised fine-tuning

stay Fine-Tune Stage , The input sample is ( Sequence x1...xm, label y). Given a sequence , The probability of predicting a tag is as follows .

Task Classifier The training goal of is to maximize the probability of all supervised training samples , This is the picture 1 Medium Task Classifier.

At this stage , The training objectives of the language model are also enabled .

Review the network structure and Fine-Tune The formula of the stage , You will find , Parameters introduced , Only Wy.

First, most of the parameters are trained through unsupervised pre training , Then fine tune to determine the last parameter w Value , To adapt to different tasks . Use the vector of the last word of unsupervised as the input of fine-tuning ( I think I can actually use the word vector of the whole sentence as input , But there's no need to ).

The figure above shows the different NLP The fine-tuning process of the task :

Classification task : Input is text , The vector of the last word is directly used as the input of fine-tuning , Get the final classification result ( It can be divided into several categories )

Reasoning task : Input is transcendental + Separator + hypothesis , The vector of the last word is directly used as the input of fine-tuning , Get the final classification result , namely : Is it true

Sentence similarity : Input is The two sentences are reversed , Then add the vectors of the last word , Then proceed Linear, Get the final classification result , namely : Whether it is similar to

Q & A tasks : Input is Context and issues Put together with multiple answer , The middle is also separated by a separator , For each answer, the vector of the last word of the sentence is used as the input of fine-tuning , Then proceed Linear, Will be multiple Linear The result of softmax, Get the one with the greatest probability

problem : For Q & A tasks , The last many Linear How the results of softmax?

For Q & A tasks , One question corresponds to multiple answers , And finally, I want to take the most accurate answer ( The highest score ) As a result , I do this by answering many pairs of questions transformer after , Then do it separately linear, The dimensions can be unified , And then for multiple linear Conduct softmax~ Before, it was all for one linear do softmax, Directly take the one with the largest probability value , But now there are more linear How to do softmax Well ?

That's all GPT A general description of , Using unsupervised pre training and supervised fine tuning can achieve most of the NLP Mission , And the effect is remarkable , But it's not as good as Bert The effect is good . however GPT Using one-way transformer Can solve Bert Unresolved generate text task , See the following summary for specific reasons .

2.3 effect

3. GPT-2

GPT-2 Want to model without understanding words at all , So that the model can handle any coded language .GPT-2 Mainly aimed at zero-shot problem . It has greatly improved in solving a variety of unsupervised problems , But it is worse for supervised learning .

GPT-2 Still use GPT A one-way transformer The pattern of , Just made some improvements and changes . that GPT-2 be relative to GPT What are the differences ? Look at the following :

- GPT-2 Removed fine-tuning layer : No more fine tuning modeling for different tasks , It doesn't define what the model should do , The model will automatically identify what needs to be done . This is like a person reading a lot , What kind of questions do you ask him , He can pick it up easily ,GPT-2 It is such a well read model .

- stay Pretrain Some basic and GPT In the same way , stay Fine-tune Part of the second stage Fine-tuning There is specific supervision training NLP Mission , Replaced by unsupervised training of specific tasks , This makes pre training and Fine-tuning The structure of is exactly the same . When the input and output of the question are text , Only need to use specific methods to organize different types of labeled data can be substituted into the model , For example, for questions and answers, use “ problem + answer + file ” The organizational form of , For translation use “ english + French ” form . Use the preceding passage to predict the following passage , Instead of using dimension data to adjust model parameters . In this way, a unified structure is used for training , It can also adapt to different types of tasks . Although the learning speed is slow , But it can also achieve relatively good results .

- Add data set : This is a larger and larger data set .GPT-2 A new data set is constructed ,WebText. These unsupervised samples , All from Reddit The outer chain of , And those who get at least three likes of the outer chain , total 4500 Million links . Use Dragnet and Newspaper Two tools to extract web content . Filter 2017 year 12 Pages after months . Delete from Wikipedia The web page of . duplicate removal . Finally, I did some trivial and unspoken heuristic cleaning work . So get 8 Million pages in total 40GB Text data .WebText Data sets are characterized by being comprehensive and clean . Comprehensiveness comes from Reddit It is a social media website with a wide range of categories . Cleanliness comes from the author's intentional screening , At least three likes mean that the obtained outer chain is meaningful or interesting .

- Add network parameters :GPT-2 take Transformer The number of layers stacked increases to 48 layer , The dimension of the hidden layer is 1600, The parameter quantity has reached 15 Billion .(Bert The parameter quantity of is only 3 Billion )

- adjustment transformer: take layer normalization Put it in each sub-block Before , And in the last Self-attention Then add another one layer normalization.

It also adds a clue word , such as :“TL;DR:”,GPT-2 The model will know that it is doing summary work . The input format is Text +TL;DR:,

Model | Layers | d_size | ff_size | Heads | Parameters |

|---|---|---|---|---|---|

GPT2-base | 12 | 768 | 3072 | 12 | 117M |

GPT2-medium | 24 | 1024 | 4096 | 16 | 345M |

GPT2-large | 36 | 1280 | 5120 | 20 | 774M |

GPT2-xl | 48 | 1600 | 6400 | 25 | 1558M |

3.1 effect

be aware , The standard model 1542M stay 8 Test sets 7 A record , And the smallest model 117M Can also be in it 4 A test set . and GPT-2 No, Fine-Tune

4. DialoGPT

DialoGPT Expanded GPT-2 To deal with the generation of conversational neural responses (conversational neural response generation model) Challenges encountered . Neural response generation is a sub problem of text generation , The task is to quickly generate natural text ( Inconsistent with training text ). Human dialogue contains the competing goals of two interlocutors , Potential response ( reply ) More diverse . therefore , Compared to other text generation tasks , The dialogue model presents a larger one to many task . And human conversation is usually informal , Often contains abbreviations or errors , These are the challenges of dialogue generation .

Be similar to GPT-2,DialoGPT Also expressed as an autoregressive (autoregressive, AR) Language model , Using multiple layers transformer Model architecture . But it's different from GPT-2,DialoGPT In from Reddit The large-scale conversation extracted from the discussion chain is trained on . The author's assumption is that this allows DialoGPT Capture the joint probability distribution in a more fine-grained conversation flow P(Target, Source). It is observed in practice that ,DialoGPT The resulting sentences are diverse , And it contains information about the source sentence . The author puts the pre trained model in the open benchmark data set DSTC-7 Assessment was carried out on , Again from Reddit Extracted new 6000+ As reference test data . Whether in automatic assessment or human assessment ,DialoGPT All show the most advanced results , Raise performance to a level close to human recovery .

4.1 Model architecture

The author in GTP-2 Training on the basis of architecture DialoGPT Model . Reference resources OpenAI GPT-2 Use multiple rounds of conversation as long text , Take the build task as a language model .

First , Merge all conversations in a session into one long text , End with a text Terminator . Source sentence (source sentence) Expressed as S, The target sentence (target sentence) Expressed as T, The conditional probability can be written as

For a multi round conversation ,(1) The formula can be written as , It's essentially the product of conditional probabilities . therefore , Optimizing a single goal can be considered all “ Source - The goal is ” Yes .

The author has trained the parameter size of 117M、345M、761M Model of , The model uses 50257 A word (entry).

Used Noam Learning rate , The learning rate is chosen based on the loss of validation . To speed up training , The author compresses all training data into lazy load database In file , Data is only loaded when it is needed , Train with independent asynchronous data processing .

The author further adopts the dynamic batch strategy , Grouping sessions of similar length into the same batch , Thus, the training throughput is improved . After the above adjustment , Training time goes with GPU The increase in the number decreases approximately linearly .

4.2 Maximizing mutual information

The author maximizes mutual information (maximum mutual information,MMI) The scoring equation .MMI A pre training feedback model is used to predict the source sentence of a given response , namely P(Source|target). The author first uses top-K Sampling generates some assumptions , And then use probability P(Source|Hypothesis) To re rank all the assumptions . Intuitively , Maximizing the probability of the feedback model penalizes those mild assumptions , This is because frequent and repetitive assumptions can be associated with many possible queries , So the probability is low .

The author tries to use the strategy gradient method of reinforcement learning to optimize reward, Its handle reward Defined as P(Source|Hypothesis). Verification set of reward It's improving steadily , But I don't like RNN Model architecture training is like that . It is observed that reinforcement learning training can easily converge to degenerate local optimal solution , In this case, suppose you are repeating the source sentence ( repeat the words of others like a parrot ) And maximize mutual information . The author thinks that transformers Because of the powerful representation ability of the model , Therefore, it will fall into the local optimal solution . So the author put RL Standardized training for the future .

4.3 The evaluation index :DSTC-7 Dialogue system technology competition

DSTC(Dialog System Technology Challenges) There is an end-to-end dialogue modeling task in , The goal of the task is to generate beyond chitchat by injecting information based on external knowledge (chitchat) The dialogue . This task is different from the goal oriented task 、 A task oriented or task completed dialogue , Because it has no specific or predefined goals ( For example, booking flights 、 Restaurant reservation, table reservation, etc ). contrary , It's about human like conversations , In this conversation , Potential targets are usually unclear or unknown in advance , It's like in a work and production environment ( Like brainstorming sessions ) That's what people see when they're sharing information .

DSTC-7 The test data contains Reddit Data dialogue . To create a multiple reference (multi-reference) Test set , The author uses the inclusion of 6 More than one conversation . After filtering under other conditions , Got the size of 2208 Of “5- quote ” Test set .

DialoGPT And Team B、PERSONALITYCHAT contrast

The author makes use of the standard Machine translation evaluation BLEU、METEOR、NIST For automatic assessment , And use Entropy and Dist-n To assess lexical diversity .

The author will DialoGPT And “Team B”( The winning model of the competition )、GPT、PERSONALITYCHAT( A kind of seq2seq Model , Has been applied to the production environment as Microsoft Azure Cognitive services for ) OK, comparison , Results such as table 1 Shown , In all aspects, it is the most advanced level at present .

5. GPT-2 application - The text generated

5.1 Byte pair encoding

GPT-2 The model uses byte pair encoding in data preprocessing (Byte Pair Encoding, abbreviation BPE) Method ,BPE It can solve the problem of unlisted words , And reduce the size of the dictionary . It makes comprehensive use of the advantages of word level coding and character level coding , for instance , We need to encode the following string ,

aaabdaaabac

Byte pairs aa Most of the times , So we replace it with a character that has not been used in the string Z ,

ZabdZabac Z=aa

Then we repeat the process , use Y Replace ab ,

ZYdZYac Y=ab Z=aa

continue , use X Replace ZY ,

XdXac X=ZY Y=ab Z=aa

This process is repeated , Until no byte pair appears more than once . When decoding is needed , Reverse the above replacement process .

PT-2 It's a language model , Be able to predict the next word according to the above , So it can use the knowledge learned in pre training to generate text , Such as generating news . You can also use other data to fine tune , Generate text with a specific format or theme , Such as poetry 、 drama . So next , We will use GPT-2 The model performs a text generation .

5.2 The pre training model generates news

Want to run a pre trained GPT-2 Model , The easiest way is to let it work freely , Randomly generated text . let me put it another way , At the beginning , Let's give it a hint , That is, a predetermined starting word , Then let it randomly generate subsequent text .

But sometimes there may be problems , For example, the model falls into a loop , Keep generating the same word . To avoid that , GPT-2 Set up a top-k Parameters , In this way, the model will start from the front of probability k Choose a word randomly from the big words , As the next word . Here is the choice top-k Implementation of the function ,

import random

def select_top_k(predictions, k=10):

predicted_index = random.choice(

predictions[0, -1, :].sort(descending=True)[1][:10]).item()

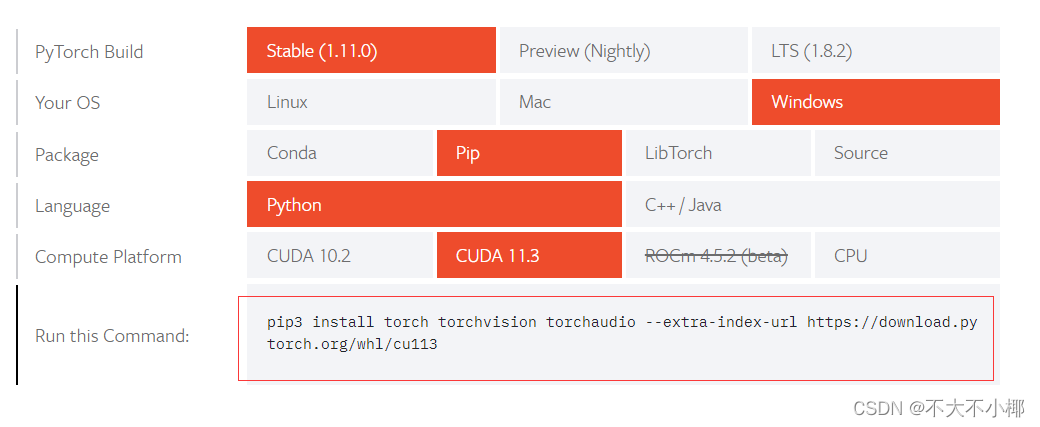

return predicted_index Let's introduce GPT-2 Model , We will use it in PyTorch-Transformers Encapsulated in the model library GPT2Tokenizer() and GPT2LMHeadModel() Class to actually see GPT-2 Ability to predict the next word after pre training . First , Need to install PyTorch-Transformers.

!pip install pytorch_transformers==1.0 # install PyTorch-Transformers

Use PyTorch-Transformers model base , Set up the example of preparing the input model first , Use GPT2Tokenizer() Create a word breaker object to encode the original sentence .

import torch

from pytorch_transformers import GPT2Tokenizer

import logging

logging.basicConfig(level=logging.INFO)

# Load the word breaker of the pre training model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# Use GPT2Tokenizer Encode the input

text = "Yesterday, a man named Jack said he saw an alien,"

indexed_tokens = tokenizer.encode(text)

tokens_tensor = torch.tensor([indexed_tokens])

tokens_tensor.shape Next use GPT2LMHeadModel() Build a model , And set the model mode as the verification mode . Due to the large volume of pre training model parameters , And hosted on the Internet , So this time, download the pre training model from the online disk , This step is not required locally .

from pytorch_transformers import GPT2LMHeadModel

# Read GPT-2 Pre training model

model = GPT2LMHeadModel.from_pretrained("./")

model.eval()

total_predicted_text = text

n = 100 # Predict the number of cycles in the process

for _ in range(n):

with torch.no_grad():

outputs = model(tokens_tensor)

predictions = outputs[0]

predicted_index = select_top_k(predictions, k=10)

predicted_text = tokenizer.decode(indexed_tokens + [predicted_index])

total_predicted_text += tokenizer.decode(predicted_index)

if '<|endoftext|>' in total_predicted_text:

# If the end of text flag appears , End text generation

break

indexed_tokens += [predicted_index]

tokens_tensor = torch.tensor([indexed_tokens])

print(total_predicted_text)At the end of the run , Let's look at the text generated by the model , You can see , In general, this seems to be a normal text , however , If you look carefully, you will find the logic problems in the sentence , This is also a problem that researchers will continue to tackle later .

In addition to directly using the pre training model to generate text , We can also use fine tuning to make GPT-2 The model generates text with a specific style and format .

5.3 Fine tune to generate drama text

Next , We will use some drama scripts for GPT-2 Fine tuning . because OpenAI Team open source GPT-2 The pre training parameters of the model are obtained after pre training with English data set , Although Chinese data sets can be used in fine-tuning , But it takes a lot of data and time to have good results , So here we use the English data set for fine tuning , So as to better show GPT-2 The power of the model .

First , Download training dataset , Shakespeare's plays are used here 《 Romeo and Juliet 》 As a training sample . The data set has been downloaded in advance and placed in the cloud disk , link :https://pan.baidu.com/s/1LiTgiake1KC8qptjRncJ5w Extraction code :km06

with open('./romeo_and_juliet.txt', 'r') as f:

dataset = f.read()

len(dataset)Preprocessing training set , Encode the training set 、 piecewise .

indexed_text = tokenizer.encode(dataset)

del(dataset)

dataset_cut = []

for i in range(len(indexed_text)//512):

# Segment the string to a length of 512

dataset_cut.append(indexed_text[i*512:i*512+512])

del(indexed_text)

dataset_tensor = torch.tensor(dataset_cut)

dataset_tensor.shape Use here PyTorch Provided DataLoader() Construct training set data set representation , Use TensorDataset() Build a training set data iterator .

from torch.utils.data import DataLoader, TensorDataset

# Building datasets and data iterators , Set up batch_size The size is 2

train_set = TensorDataset(dataset_tensor,

dataset_tensor) # The label is the same as the sample data

train_loader = DataLoader(dataset=train_set,

batch_size=2,

shuffle=False)

train_loaderCheck if the machine has GPU, If so, it's in GPU function , Otherwise, it will be CPU function .

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

deviceStart training .

from torch import nn

from torch.autograd import Variable

import time

pre = time.time()

epoch = 30 # Circular learning 30 Time

model.to(device)

model.train()

optimizer = torch.optim.Adam(model.parameters(), lr=1e-5) # Define optimizer

for i in range(epoch):

total_loss = 0

for batch_idx, (data, target) in enumerate(train_loader):

data, target = Variable(data).to(device), Variable(

target).to(device)

optimizer.zero_grad()

loss, logits, _ = model(data, labels=target)

total_loss += loss

loss.backward()

optimizer.step()

if batch_idx == len(train_loader)-1:

# At every Epoch The final output of

print('average loss:', total_loss/len(train_loader))

print(' Training time :', time.time()-pre)After training , You can make the model generate text , Observe the output .

text = "From fairest creatures we desire" # You can also enter different English texts here

indexed_tokens = tokenizer.encode(text)

tokens_tensor = torch.tensor([indexed_tokens])

model.eval()

total_predicted_text = text

# Make the model after training 500 Second prediction

for _ in range(500):

tokens_tensor = tokens_tensor.to('cuda')

with torch.no_grad():

outputs = model(tokens_tensor)

predictions = outputs[0]

predicted_index = select_top_k(predictions, k=10)

predicted_text = tokenizer.decode(indexed_tokens + [predicted_index])

total_predicted_text += tokenizer.decode(predicted_index)

if '<|endoftext|>' in total_predicted_text:

# If the end of text flag appears , End text generation

break

indexed_tokens += [predicted_index]

if len(indexed_tokens) > 1023:

# The maximum input length of the model is 1024, If the length is too long, it will be truncated

indexed_tokens = indexed_tokens[-1023:]

tokens_tensor = torch.tensor([indexed_tokens])

print(total_predicted_text)You can see from the generated results , The model has learned the text structure of the play . But when you read it carefully, you will find that it lacks logic and relevance , This is because of time and equipment limitations , The training of the model is limited . If possible, you can use more data , Train longer , In this way, the model will perform better .

5.4 The evaluation index

https://zhuanlan.zhihu.com/p/88502676

Model A:

Fusion model , There are two main goals : Growth text , Increase the relevance of the generated stories and prompts . Threshold self attention mechanism and fusion mechanism are used ((Sriram et al., 2018), The meaning of integration mechanism : first seq2seq Model training , the second seq2seq Use the hidden layer of the first model during training .

Model B:

GPT-2 Model , use 117M Pre training model of . The author explains why 117M Of , instead of 340M What about . When doing experiments , A larger pre training model has not been released yet . gpt-2 Detailed introduction , From simple . There is a lot of information on the Internet , such as :

Decoding algorithm :

An invention that originated in machinetranslation , Most build tasks use beam search Algorithm .(Shang et al., 2015; Serbanet al., 2016). But research shows that ,beam search Application of algorithm in text generation in open domain , Prone to repetition , General vocabulary . see (Holtzman et al., 2019).

top-k sampling , At every step of decoding , First, take the probability distribution of the whole vocabulary k individual token, Then re normalize , Then select one of them as the decoding token.

top-k Sampling ,k The impact of value :

In the depth model of unconditionally generating long texts , Big k The value represents the higher entropy . Smaller k value , Generated text , Often simpler , High repetition . Simultaneous discovery , Smaller k value ,GPT2-117 Easier to copy from the guide , Contains more verbs and pronouns , Fewer nouns and adjectives , The noun is more specific , A smaller range of sentences .

If you will k Value set to large , For example, the size of the vocabulary . Similar to human indicators in most indicators , But in common sense reasoning , World knowledge , Poor coherence between sentences .

5.4.1 Confusion

ppl Degree of bewilderment at the word level , Is in WritingPrompts-1024( The leading words shall not exceed 1024 Word ) Data subset .

Confusion (perplexity) The basic idea of : It is better to assign higher probability values to the sentences in the test set , When the language model is trained , The sentences in the test set are all normal sentences , So the trained model is the higher the probability on the test set, the better , The formula is as follows :

It can be seen from the formula that , The greater the probability of sentence , The better the language model , The less confused . Confusion p It can be understood as , If every time step randomly selects words according to the probability distribution calculated by the language model , So on average , How many words can I pick to get the right one

5.4.2 Prompt ranking accuracy

The definition and evaluation method of this index , come from 《Hierarchical Neural Story Generation》. It mainly focuses on the correlation between the leading words and the generated stories . The specific way is : Select a pair in the test set (p,g),p Indicates the leading words ,g Represents the generated story , In random selection of other leading words p1-p9, And then calculate p and g Of likelihood. Conditions for a :(p,g) The similarity ratio of (p1,g) The similarity is great . Then take it 10000 In a test set (p,g), The proportion of the part meeting condition one , It's called Prompt ranking accuracy.

GPT2-117 Score of :80.16%

Fusion Model Of 39.8%

5.4.3 Sentence embedding similarity

Calculate the sentence embeddedness of the lead words and the generated stories ( use GloVe Take the average embedded value of each word ) Cosine similarity of .

stay top-k In the algorithm, ,k Take different values , Calculate each model separately , give the result as follows :

Use of named entities : It mainly looks at the number of times the named entity in the guide language appears in the generated story .

5.4.4 Evaluate consistency

Evaluation method of consistency , come from 《Modeling local coherence: An entity-based approach》, The main idea is , In the test dataset , For a story s0, Select front 15 A sentence , Out of order , Generate 14 A disorderly story s1-s14. And then use the language model to calculate s0-s14 The possibility of . about s1-s14, If the probability is greater than s0, It is called a counterexample . The error rate is defined as the proportion of counterexamples .

GPT2-117 Score of :2.17%

Fusion Model Of 3.44%

5.4.5 Evaluate word repetition and rareness

GPT2-117 Than Fusion Model The repeatability should be small ,rareness To be high . But the gap is very small .

6. Dialogue task / chatbot

You can participate in the training process

https://cloud.tencent.com/developer/article/1877398

https://github.com/yangjianxin1/GPT2-chitchat

For details, see https://blog.csdn.net/g534441921/article/details/104312983

6.1 Common scenarios and processing

Chat robot is the most widely used scene in semantic matching . At present, there are five kinds of chat robots :

- Based on question and answer pairs . Enter the user's question , In by ( problem : answer ) Search for similar problems in the knowledge base , Finally, the answer to the user's similar question is returned as the result ;

- Based on machine reading comprehension : Enter the user question , Retrieve relevant documents from the knowledge base , Then return the answer in the form of machine reading comprehension , This also involves retrieval , But the most critical step is to extract from the document in the form of a pointer network Span As an answer , Prone to instability ;

- Based on Knowledge Map : Enter the user question , Through semantic analysis, it is transformed into the corresponding Cypher grammar , Search the problem from the established knowledge map , The main difficulty lies in the fact that the establishment of the knowledge map requires a lot of manpower ;

- Task based conversations : For limited actual business scenarios , For example, Ctrip's chat robot , Enter the user question , Identify by intention 、 Extract word slots , Convert to the corresponding “ make a flight reservation ”、“ Check the hotel ” Etc ;

- gossip : Similar to Microsoft Xiaobing , There are few practical application scenarios .

Q1: The standard of knowledge base asks how to arrange ?

A: Although it is a relatively simple chat robot solution based on question and answer pairs , But in practice , We must first consider how extensive this scenario is , Is to solve a medical question and answer 、 It is also a financial Q & A 、 Or an encyclopedic question and answer . Be sure to comb your scene first , If the scene is too big , It also needs hierarchical management , If you are in a hurry to go online , Then you should do high-frequency Q & a first , As the saying goes, the law of 28 ,20% The questions covered 80% Frequently asked questions about .

Q2: How to deal with cold start ?

A: Sometimes it's a new need , No previous data accumulation , At this time, you can use the search engine , For example, Baidu knows to search some relevant questions raised by some netizens as a knowledge base , At least these questions are true , If the scene is very narrow , Can't find out , Only let the customer provide some common problems before divergence .

Q3: How to make a training set ?

A: It is also easy to encounter cold start , If you have sorted out the knowledge base , How to generate our training set ?

Can pass , As a standard question of the same meaning as a pair of positive samples , The standard questions with different meanings are a pair of negative samples , But this easy training set is not rich enough . Here is a recommended practice , Take the label to Baidu know search , Usually No. 1 All the problems found on the page are of the same semantics, which can be used as positive samples ,10 Multi page problems with high similarity but different semantics can be used as negative samples , Of course, it needs to be reviewed manually , The advantage of this approach is that it greatly enriches the training set .

Q4: Semantic matching needs to be done in detail ?

A: Think first , What is a sentence with the same meaning ? Such as “ How to become a network Celebrity ”、“ How to become a network Celebrity ”, There is no doubt that it belongs to the same semantic sentence pair ,

But if it is " How to become a network Celebrity "、“ How easy is it for a woman to become an Internet Celebrity ”. Do you think these two sentences have the same meaning ?

In essence, it is not strictly the same , But if in your business scenario , These two questions correspond to the same answer , Whether these two sentences can be regarded as similar to 1 A positive sample of the model to train ? The answer lies in , How thick and how thin do you want it to be , If it is very fine grained , Then these two sentences are not sentences with the same meaning , Or their similarity is not 1, yes 0.6 or 0.8, But if it is done very carefully , You need to define a lot of criteria to ask . If we do it widely , For example, the same answer is defined as the similarity of 1 Sentences , Now , You have to take these samples you think are similar to and train the model , Let the model learn , Because this is not strictly the same meaning , The advantage of expanding is that you don't have to define too many problems , But it is very easy to be unstable .

Q5: How to do Retrieval ?

A: The common practice is to search out a batch of similar problems before fine sorting . Among them, the retrieval can use bm25、SBERT Wait for the model , Fine discharge can be used Cross-Encoders Isostructure .

Q6: Users' problems have no similar problems in the knowledge base ?

A: You can set ,

- If there is a similarity between standard questions and user questions 0.8 The above , Then directly return the answer corresponding to the standard question with the highest similarity ;

- If the highest similarity is 0.4~0.8 Between , We can return the user “ Do you want to ask ...”;

- If the similarity is 0.4 following , We can return the three questions that are most similar to the user's questions , Output “ Do you want to ask these questions ...”;

6.2 The evaluation index

6.2.1 Retrieval evaluation index

Evaluation methods in information retrieval , The most common ones are [email protected], Given a query, choice k The most likely response, Look at the right response Is it here k A Li .

6.2.2 Generate evaluation indicators

Word overlap evaluation index

There are mainly BLEU,ROUGE and METEOR, Originally used to measure the effect of machinetranslation .BLEU It mainly depends on people / The words in the sentences tested overlap ( Machine generated sentences to be evaluated ngram Match the artificially generated reference sentences correctly ngram And all the sentences produced by the machine ngram Ratio of occurrence times ), Join in BP(Brevity Penalty) Penalty factor can evaluate the integrity of sentences . However BLEU Don't care about grammar , Only care about content distribution , It is suitable for measuring the performance of data set , Poor performance at the sentence level .

“BLEU is designed to approximate human judgement at a corpus level, and performs badly if used to evaluate the quality of individual sentences.”——wikipedia

ROUGE It is a similarity measurement method based on recall rate , And BLEU similar , But the calculation is ngram The co occurrence probability of the reference sentence and the sentence to be evaluated , contain ROUGE-N, ROUGE-L( Longest common clause , Fmeasure), ROUGE-W( Longest public clause with weight , Fmeasure), ROUGE-S( Discontinuous binary , Fmeasure) Four kinds of , Don't say more about it .

METEOR Improved BLEU, The alignment between the reference sentence and the sentence to be evaluated is considered , It has a higher correlation with the results of manual judgment .

Word vector evaluation index

Focus on comparing the semantic similarity between the generated sentences and the real samples , But only on the basis of word vectors , It is difficult to capture long-distance semantics

- Embedding average score Average the word vector of each word in the sentence as the feature of the sentence , Calculate the characteristics of the generated sentence and the real sentence cosine similarity

- Greedy matching score Find the most similar pair of words between the generated sentence and the real sentence , The similarity of the two words is approximated as the distance between sentences

- Vector extrema score Extract the maximum for each dimension of the word vector in the sentence ( Small ) Value as the value of the dimension corresponding to the sentence vector , And then calculate cosine similarity

6.2.3 Evaluation index based on learning

Use machine learning / Neural network method to learn a good evaluation index , Make the model scoring closer to manual scoring . Like all kinds of GANs、ADEM、Dual Encoder(DE) wait .

6.2.4 Artificial metering

“We find that all metrics show either weak or no correlation with human judgements, despite the fact that word overlap metrics have been used extensively in the literature for evaluating dialogue response models”

stay How NOT To Evaluate Your Dialogue System In this paper , Claim compared with manual judgment , all metric It's all rubbish

- On a chatty dataset , Above metric There is a weak correlation with manual judgment (only a small positive correlation on chitchat oriented Twitter dataset)

- On the technical data set , Above metric It has nothing to do with human judgment (no correlation at all on the technical UDC)

- When confined to a specific area ,BLEU Will have a good performance

Sum up , In fact, the evaluation index of dialogue system should be a research hotspot , Especially the evaluation index based on learning . In addition, if you want to evaluate multiple rounds of dialogue , That's even more complicated , Also introduce evaluation timing , For example, whether each round of dialogue is evaluated , Are you referring to a concept (slot-value pair) Time to evaluate ……

6.3 Data sets

tianchi :“ public welfare AI Star of the year ” challenge round - COVID-19 similar sentence pair judgment contest

This competition is the scene mentioned above 1, And it is a very detailed chat scene , Focus on respiratory problems related to the epidemic . The more subdivided the field , Relatively speaking, it is better to do , More accurate .

tianchi :「 Xiaobu assistant dialogue short text semantic matching 」

Use semantic matching to do intention recognition , Instead of directly retrieving the problem . This broadens our thinking , The task-based robot in the chat robot mentioned above , The first step is intention recognition , The traditional practice is to do it as a classification task , But the disadvantage of classification is that it is difficult to expand ( As defined at the beginning 10 An intentional analogy , In the future, it will be expanded , To retrain the model ), But it is not necessary to use semantic matching , To add an intention, you only need to add related questions to the corresponding library .

2019 Law research cup :

Calculate and judge the similarity of several legal documents . say concretely , For each instrument, we provide the title and factual description of the instrument , The contestant needs to find a document more similar to the inquiry document from the two candidate documents . Similar to the scenario I mentioned above 2, Search by semantic matching , The retrieved cases or work orders are used for staff reference .

CCF:“ Technical requirements ” And “ Technical achievements ” Calculation model of correlation degree between projects

The application scenario has a platform , Regularly collect technical requirements and technical achievements , Regularly update the technical requirements database and technical achievements database , There are two sources of data :(1) Member units publish ;(2) The official website of non member units . The amount of new data each month is about 3000 A project .

According to the text meaning of the project information , Provide the supplier and the demander with highly relevant corresponding information ( demand —— Results intelligent matching service ), It is a functional requirement of the platform . The correlation between technical requirements and technical achievements is divided into four levels : Strong correlation 、 Strong correlation 、 Weak correlation 、 No relevant .

Baidu Qianyan data set :

- LCQMC(A Large-scale Chinese Question Matching Corpus), Baidu knows the Chinese problem matching data set in the field ;

- BQ Corpus(Bank Question Corpus), Problem matching data in the banking and financial field ;

- PAWS-X ( chinese ): Data sets with high difficulty in semantic matching . The data set contains defined pairs and non - defined pairs , That is, to identify whether a pair of sentences have the same interpretation ( meaning ), It is characterized by highly overlapping words , It is very helpful to further improve the judgment of the model for strong negative cases .

sohu :2021 Sohu campus text matching algorithm contest

Each pair of text determines whether the two paragraphs in the text pair match on two granularity . among , A particle size is relatively wide , Two paragraphs of text belonging to one topic can be regarded as matching ; Another particle size is more strict , Two paragraphs of text must be the same event to be considered a match . Such as the following questions , They all say English football , Belong to the same topic , But the two events are not the same .

summary

because GPT Is an autoregressive language model , The setting of the experiment is zero-shot forecast , Not specifically designed and tuned for downstream tasks , So in all kinds of NLP Tasks such as reading comprehension 、 Question answering system 、 Text in this paper, 、 The translation system can also achieve certain effects , But compared to BERT This kind of fine-tuning supervision model will have gaps .

Why? GPT The model compares BERT More suitable for text generation ?

At present, the mainstream text generation is from left to right , Generation page N individual token when , You can only use the 1~N-1 individual token Information about , You can't use the following token Information , and GPT/GPT-2 The same left to right generation task is used for pre training ( That is the so-called one-way ), Pre training is consistent with downstream tasks ;

and BERT Use MLM Task pre training , That is, give the context before and after , It is predicted that mask The one that fell token, This kind of pre training makes BERT Can take full advantage of two-way context information ( Before and after ), But in the text generation task , Naturally, the model can only use the previous information , And BERT There is a big problem in the pre training task gap, therefore BERT It is not suitable for direct use in text generation tasks .

The author claims that , Even if GPT-2 Ten times as many parameters as GPT, Five times Bert, But still WebText The data set is under fitted .

The network becomes larger and larger , Maybe there is no end , And before finding a more efficient network , Our best choice is still Transformer.

Ref

- https://zhuanlan.zhihu.com/p/96791725 GPT/GPT2 Introduce

- https://github.com/Morizeyao/GPT2-Chinese

- https://cloud.tencent.com/developer/article/1748379 GPT2

- https://cloud.tencent.com/developer/article/1877398 GPT2 chatbot

- http://jalammar.github.io/illustrated-gpt2/ GPT2 Detailed explanation

- http://blog.zhangcheng.ai/2019/04/28/%E6%B1%9D%E6%9E%9C%E6%AC%B2%E5%AD%A6%E8%AF%97%EF%BC%8C%E5%8A%9F%E5%A4%AB%E5%9C%A8%E8%AF%97%E5%A4%96-%E8%AE%BAgpt-2%E6%97%A0%E7%9B%91%E7%9D%A3%E9%A2%84%E8%AE%AD%E7%BB%83%E7%9A%84/

- https://www.cnblogs.com/wwj99/p/12503545.html pytorch GPT2

- https://zhuanlan.zhihu.com/p/88502676 GPT2 Training

- https://cloud.tencent.com/developer/article/1877398 GPT2 Chat robot training

- https://blog.csdn.net/g534441921/article/details/104312983 GPT2 Dialogue task Overview

- https://cloud.tencent.com/developer/article/1783241 DialoGPT

- https://mp.weixin.qq.com/s/43yfUIyp7L4lCRVFKms3Og Semantic matching and dialogue

边栏推荐

- web渗透测试----5、暴力破解漏洞--(1)SSH密码破解

- halcon知识:区域(Region)上的轮廓算子(2)

- Browser rendering mechanism

- How to be a web server and what are the advantages of a web server

- 多任务视频推荐方案,百度工程师实战经验分享

- Getlocationinwindow source code

- Use the fluxbox desktop as your window manager

- Record a programming contest

- web rdp Myrtille

- After 20 years of development, is im still standing still?

猜你喜欢

随机推荐

Psexec right raising

【代码随想录-动态规划】T392.判断子序列

Common content of pine script script

halcon知识:区域(Region)上的轮廓算子(2)

Gaussian beam and its matlab simulation

golang clean a slice

TCP three handshakes and four waves

Cross platform RDP protocol, RDP like protocol and non RDP protocol remote software

Building RPM packages - spec Basics

How to spell the iframe address of the video channel in easycvr?

3. go deep into tidb: perform optimization explanation

Idea 1 of SQL injection bypassing the security dog

Hprof information in koom shark with memory leak

hprofStringCache

在pycharm中pytorch的安装

Analysis of grafana SSO authentication process based on keyloak

内存泄漏之KOOM

C language - number of bytes occupied by structure

How to restore the default route for Tencent cloud single network card machine

Pits encountered in refactoring code (1)

![[Numpy] Numpy对于NaN值的判断](/img/aa/dc75a86bbb9f5a235b1baf5f3495ff.png)

![[code Capriccio - dynamic planning] t392 Judgement subsequence](/img/59/9da6d70195ce64b70ada8687a07488.png)