
TKE cluster pods cannot access self-built containers on a CVM outside the cluster

2022-06-24 03:51:00 【Nieweixing】

1. Problem description

A TKE customer ran into the following issue: in a cluster using the GlobalRouter network mode, pods could not reach Docker container services self-built on a CVM outside the cluster, and that CVM could not reach pods in the cluster either. Clusters using the VPC-CNI network mode do not have this problem.

2. Problem phenomenon

To reproduce the problem, we run an nginx container on a CVM outside the cluster and map its port to port 8082 on the CVM. From inside a pod in the TKE cluster, ping or telnet to the self-built container service on the CVM fails, while the cluster nodes themselves can reach it. In the other direction, the CVM outside the cluster cannot reach pods in the cluster.

The test results are as follows:

- A TKE cluster node accesses the nginx service on the CVM: succeeds

- A pod in the cluster accesses the nginx service on the CVM: fails

- The CVM outside the cluster accesses a pod in the cluster: fails

3. Analysis

Why can't cluster pods and the self-built containers on a CVM outside the cluster reach each other? Let's capture some packets and take a look.

While a pod accesses the nginx service on the CVM, we capture packets on the CVM and inside the pod at the same time. The capture shows that the CVM receives the packets sent by the pod but never replies, so the problem lies on the CVM. Why doesn't the CVM send its reply back to the pod?

Earlier tests showed that the CVM cannot ping pods in the cluster. Here we can use traceroute to see which path the packets take and find out what is wrong.

The traceroute output shows that packets destined for the pod go to 172.18.0.1, which is the IP of the docker0 bridge on the CVM itself. Checking the routing table confirms that all traffic to the 172.18.0.0 range is routed through the docker0 interface. At this point the cause is essentially clear.

The cause is that the network range used by the self-built Docker on the CVM conflicts with the TKE cluster's container network range. Reply packets addressed to the 172.18.0.0 range are routed to the docker0 interface instead of leaving through eth0, so they never reach their destination and the connection fails.
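The routing decision that breaks the replies can be illustrated with a longest-prefix-match lookup. This is a minimal sketch under assumptions: the routing table below is a hypothetical reconstruction of the CVM's table (default route and VPC range 10.0.0.0/16 via eth0, docker0 owning 172.18.0.0/16), and the pod IP 172.18.0.130 is invented for illustration. It ignores route metrics and policy routing.

```python
import ipaddress

# Hypothetical CVM routing table, modelled on the article's description:
# the VPC range and the default route leave via eth0, while the docker0
# bridge owns 172.18.0.0/16, which overlaps the TKE container range.
ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("10.0.0.0/16", "eth0"),
    ("172.18.0.0/16", "docker0"),
]


def pick_interface(dst: str, routes=ROUTES) -> str:
    """Return the outgoing interface chosen by longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(cidr), dev)
        for cidr, dev in routes
        if addr in ipaddress.ip_network(cidr)
    ]
    # The most specific (longest) matching prefix wins.
    _net, dev = max(matches, key=lambda m: m[0].prefixlen)
    return dev


if __name__ == "__main__":
    # Reply to a (hypothetical) pod IP inside the overlapping range.
    print(pick_interface("172.18.0.130"))  # docker0: the reply never reaches the pod
    # Reply to the node IP that the CVM would see after SNAT.
    print(pick_interface("10.0.17.16"))    # eth0
```

Because 172.18.0.0/16 is more specific than the default route, any reply addressed to a pod IP in that range stays on the local bridge.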

4. Solutions

Now that we know the cause, is there a fix? Or does this mean pods in the cluster can never access container services outside the cluster?

Of course there is a fix. There are two ways to solve this problem:

4.1 Modify the cluster's ip-masq-agent configuration

Observant readers may have noticed that the source IP of the packets received on the CVM is the pod's real IP, which is why the replies are routed to docker0. Normally, when a pod in Kubernetes accesses an external service, the pod IP is SNATed to the node IP. That does not happen here because the cluster runs an ip-masq-agent component. For a detailed description of ip-masq-agent, see https://cloud.tencent.com/developer/article/1688838

In a TKE cluster in GlobalRouter mode, the default ip-masq-agent configuration does not apply SNAT to traffic destined for the VPC network range or the container network range, so when a pod accesses the CVM, the CVM sees the real pod IP.

If you want pod traffic to the CVM to carry the node IP instead of the real pod IP, edit the ip-masq-agent configuration and remove the VPC network range from it:
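The SNAT decision described above can be modelled in a few lines. This is a simplified sketch, not ip-masq-agent's actual code: it only models the nonMasqueradeCIDRs rule (destinations inside a listed range keep the pod IP, everything else is masqueraded to the node IP) and ignores details such as link-local handling (masqLinkLocal). The container range 172.18.0.0/16, the pod IP and the CVM IP are hypothetical; the VPC range 10.0.0.0/16 and node IP 10.0.17.16 come from the article.

```python
import ipaddress
from typing import Iterable


def source_ip_seen_by_peer(pod_ip: str, node_ip: str, dst: str,
                           non_masquerade_cidrs: Iterable[str]) -> str:
    """Return the source IP the destination sees for a pod's packet.

    Destinations inside any nonMasqueradeCIDRs entry keep the pod IP;
    everything else is masqueraded (SNAT) to the node IP.
    """
    addr = ipaddress.ip_address(dst)
    for cidr in non_masquerade_cidrs:
        if addr in ipaddress.ip_network(cidr):
            return pod_ip  # no SNAT: the peer sees the real pod IP
    return node_ip  # SNAT to the node's address


if __name__ == "__main__":
    POD_IP = "172.18.0.130"  # hypothetical pod IP
    NODE_IP = "10.0.17.16"   # node IP from the article's capture
    CVM_IP = "10.0.0.10"     # hypothetical address of the external CVM

    default_cfg = ["172.18.0.0/16", "10.0.0.0/16"]  # container range + VPC range
    edited_cfg = ["172.18.0.0/16"]                  # VPC range removed

    print(source_ip_seen_by_peer(POD_IP, NODE_IP, CVM_IP, default_cfg))  # 172.18.0.130
    print(source_ip_seen_by_peer(POD_IP, NODE_IP, CVM_IP, edited_cfg))   # 10.0.17.16
```

With the VPC range listed, the CVM sees the pod IP and replies into docker0; once the range is removed, it sees the node IP and replies through eth0.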

kubectl edit cm ip-masq-agent-config -n kube-system

In the ConfigMap, remove the VPC range 10.0.0.0/16, then wait about a minute for the ip-masq-agent pods to reload the configuration. Then capture packets and test again.

After the change, the pod can access the nginx container service on the CVM. The packet capture shows that the source IP seen on the CVM has become 10.0.17.16, the IP of the node the pod runs on. Because the reply is now addressed to the node IP, the CVM matches its second routing rule and sends the packet out through eth0 back to the pod, so access works normally.

4.2 Modify the Docker container network range on the CVM

Besides changing the cluster's ip-masq-agent configuration, the other option is to change the Docker container network range on the CVM so that it no longer conflicts with the TKE cluster network range. This requires restarting all containers on the CVM, so use it with care.

Edit the /etc/docker/daemon.json configuration file (create it if it does not exist; if it already exists, add the key to the existing JSON object) with the following content, which moves the bridge to the 192.168.0.0/24 range. Save the file and restart Docker.

{
  "bip": "192.168.0.1/24"
}
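Before restarting Docker, it can help to check that the new bridge range really avoids the cluster's ranges. The sketch below is a hypothetical helper, not a Docker tool: it derives the bridge network from a "bip" value (an address plus prefix) and reports every overlapping range. The reserved ranges listed are assumptions standing in for your actual TKE container range and VPC range.

```python
import ipaddress


def bip_conflicts(bip: str, reserved_cidrs: list[str]) -> list[str]:
    """Return every CIDR in reserved_cidrs that overlaps the bridge network
    implied by a Docker "bip" value such as "192.168.0.1/24"."""
    bridge_net = ipaddress.ip_interface(bip).network  # 192.168.0.1/24 -> 192.168.0.0/24
    return [cidr for cidr in reserved_cidrs
            if bridge_net.overlaps(ipaddress.ip_network(cidr))]


if __name__ == "__main__":
    # Hypothetical ranges: TKE container range and VPC range.
    reserved = ["172.18.0.0/16", "10.0.0.0/16"]
    print(bip_conflicts("172.18.0.1/16", reserved))   # ['172.18.0.0/16']
    print(bip_conflicts("192.168.0.1/24", reserved))  # []
```

An empty result means the candidate bridge range does not collide with any of the listed ranges.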

To test this, add the VPC network range back to the ip-masq-agent configuration, then access the nginx service on the CVM from inside the pod again.

After the CVM's container network range is changed, the pod can successfully access the nginx service on the CVM.

5. Further thoughts

Recall that we said this problem does not occur in VPC-CNI mode. After the analysis above, the reason should be clear, and it is simple.

In VPC-CNI mode, all container IPs are allocated from subnets in the VPC, so the container network sits at the same level as the VPC network. When a pod in VPC-CNI mode accesses Docker on the CVM, the CVM's reply follows its local route for the VPC range and leaves through the primary NIC eth0, so the problem does not arise.

边栏推荐

- Grpc: how to make grpc provide swagger UI?

- Web penetration test - 5. Brute force cracking vulnerability - (4) telnet password cracking

- How to select a high-performance amd virtual machine? AWS, Google cloud, ucloud, Tencent cloud test big PK

- web rdp Myrtille

- 【代码随想录-动态规划】T392.判断子序列

- Understand Devops from the perspective of leader

- Use the fluxbox desktop as your window manager

- Black hat SEO actual combat directory wheel chain generates millions of pages in batch

- An open source monitoring data collector that can monitor everything

- Brief ideas and simple cases of JVM tuning - how to tune

猜你喜欢

Idea 1 of SQL injection bypassing the security dog

Flutter series: offstage in flutter

Modstartcms enterprise content site building system (supporting laravel9) v4.2.0

On game safety (I)

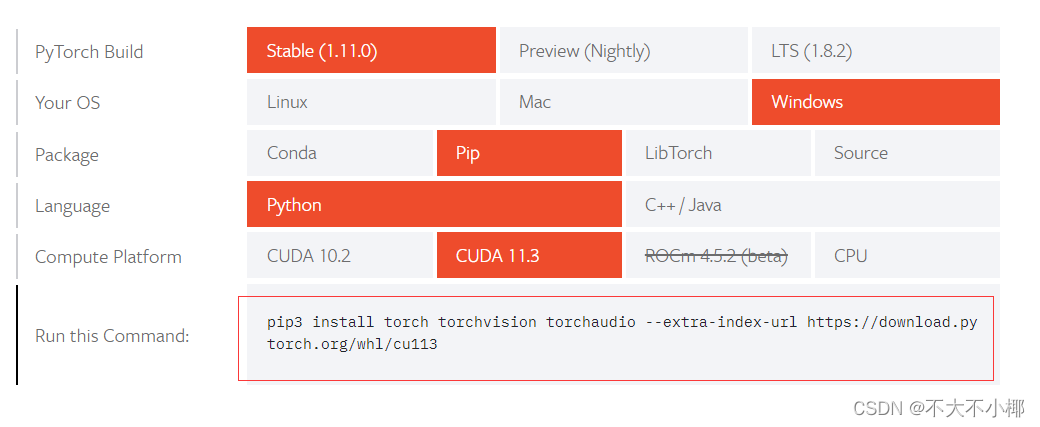

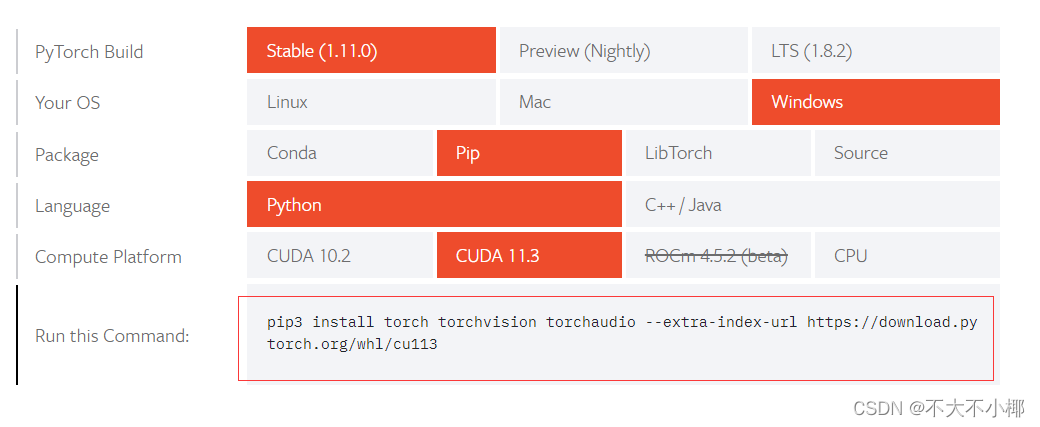

在pycharm中pytorch的安装

Installation of pytorch in pycharm

Black hat SEO actual combat directory wheel chain generates millions of pages in batch

【代码随想录-动态规划】T392.判断子序列

Do you understand TLS protocol?

Old popup explorer Exe has stopped working due to problems. What should I do?

随机推荐

Recording a summary of frequently asked questions

Why install code signing certificate to scan and eliminate virus software from security

Spirit breath development log (17)

take the crown! Tencent security won the 2021 national network security week outstanding innovation achievement award

在pycharm中pytorch的安装

High quality travel on national day, visual start of smart Tourism

618大促:手机品牌“神仙打架”,高端市场“谁主沉浮”?

Self built DNS to realize the automatic intranet resolution of tke cluster apiserver domain name

Life reopens simulation / synthetic big watermelon / small air conditioner Inventory of 2021 popular open source projects

Hprof information in koom shark with memory leak

Web penetration test - 5. Brute force cracking vulnerability - (1) SSH password cracking

2021-10-02: word search. Given an M x n two-dimensional character grid boa

Using RDM (Remote Desktop Manager) to import CSV batch remote

How to use elastic scaling in cloud computing? What are the functions?

Tencent cloud ASR product -php realizes the authentication request of the extremely fast version of recording file identification

高斯光束及其MATLAB仿真

Live broadcast Reservation: cloud hosting or cloud function, how can the business do a good job in technology selection?

How do websites use CDN? What are the benefits of using it?

Troubleshooting and resolution of errors in easycvr calling batch deletion interface

SQL注入绕过安全狗思路一