
Evaluation Metrics and Code Implementation (NDCG)

2022-06-22 21:04:00 【Weiyaner】

Common evaluation metrics for ranking, with their calculation principles and code implementations.

Ranking Evaluation Metrics

NDCG

1 Principle

NDCG, short for Normalized Discounted Cumulative Gain, is commonly used in search ranking tasks. Such a task usually returns a list as the ranked search result, and to judge how reasonable that list is, we need to evaluate the ranking within it. This is where NDCG comes from.

Gain (G)

In a ranked list, the gain of an item is its relevance score; rel(i) denotes the relevance of the item at position i. The model's predicted scores determine the order of the list, while the relevance itself comes from the labels.
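As a minimal sketch (the function name `relevance_in_ranked_order` is hypothetical, and the toy data is chosen to match the torch example later in the post), the gains rel(i) can be obtained by ordering items by predicted score and then reading off each item's label:

```python
def relevance_in_ranked_order(scores, labels):
    """Return rel(i) for i = 1..n: labels read in descending order of predicted score."""
    # Indices of items sorted by predicted score, highest first
    order = sorted(range(len(scores)), key=lambda idx: scores[idx], reverse=True)
    # The gain at each position is the ground-truth label of the item placed there
    return [labels[idx] for idx in order]


scores = [0.0, 0.1, 0.3, 0.4, 0.5]  # model predictions for items 0..4
labels = [0, 1, 1, 0, 1]            # implicit 0/1 click labels
print(relevance_in_ranked_order(scores, labels))  # [1, 0, 1, 1, 0]
```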

Cumulative Gain (CG)

CG sums the first k values of rel(i), regardless of their positions.
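A minimal sketch (the name `cg_at_k` is hypothetical) illustrating that CG ignores order: two orderings of the same items give the same CG@5.

```python
def cg_at_k(rels, k):
    """Cumulative gain: plain sum of the first k relevance scores, position ignored."""
    return sum(rels[:k])


ranked = [1, 0, 1, 1, 0]
shuffled = [0, 1, 1, 0, 1]  # same items, different order
print(cg_at_k(ranked, 5), cg_at_k(shuffled, 5))  # 3 3
```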

$$CG_k=\sum_{i=1}^{k} rel(i)$$

Discounted Cumulative Gain (DCG)

DCG takes position into account: items ranked near the top receive a higher gain, while lower-ranked items are discounted. DCG assumes that top positions contribute more and lower positions contribute less, so it is a weighted sum of the gains in which each weight is determined by position.

$$DCG_k=\sum_{i=1}^{k}\frac{rel(i)}{\log_2(i+1)}$$
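A minimal sketch of the linear-gain DCG formula (the name `dcg_at_k` is hypothetical). Unlike CG, moving relevant items toward the top raises the score:

```python
import math


def dcg_at_k(rels, k):
    """DCG with linear gain: sum of rel(i) / log2(i + 1) over positions i = 1..k."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))


print(round(dcg_at_k([1, 0, 1, 1, 0], 5), 4))  # 1.9307
print(round(dcg_at_k([0, 1, 1, 0, 1], 5), 4))  # 1.5178
```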

Alternatively, DCG is often written with an exponential gain:

$$DCG_k=\sum_{i=1}^{k}\frac{2^{rel(i)}-1}{\log_2(i+1)}$$
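The following sketch compares the two gain functions. It assumes the common exponential form with gain $2^{rel(i)}-1$ (the form used by the numpy `getDCG` in this post). The function names and the graded relevance values are hypothetical. For 0/1 relevance the two forms agree, while for graded relevance the exponential gain weights highly relevant items more heavily:

```python
import math


def dcg_exp_at_k(rels, k):
    """DCG with exponential gain: sum of (2**rel(i) - 1) / log2(i + 1) for i = 1..k."""
    return sum((2 ** rel - 1) / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))


def dcg_lin_at_k(rels, k):
    """DCG with linear gain, for comparison."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))


binary = [1, 0, 1, 1, 0]
graded = [3, 2, 3, 0, 1]
# For 0/1 relevance the two gains coincide, since 2**1 - 1 == 1 and 2**0 - 1 == 0
print(math.isclose(dcg_exp_at_k(binary, 5), dcg_lin_at_k(binary, 5)))  # True
# For graded relevance the exponential gain rewards highly relevant items more strongly
print(dcg_exp_at_k(graded, 5) > dcg_lin_at_k(graded, 5))  # True
```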

In other words, the larger i is, the further down the list the item is ranked. The larger $\log_2(i+1)$ becomes, the more its gain is discounted.

iDCG: Ideal DCG

Sort the items by rel(i) in descending order and compute DCG on that ordering. This is the best achievable DCG, called iDCG. In the calculation, the relevance scores come from the labels (implicit feedback gives 0/1; explicit ratings are scores from 1 to 5).
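A minimal sketch of iDCG under the linear-gain formula (function names are hypothetical): sort the labels in descending order, then apply the usual DCG computation.

```python
import math


def dcg_at_k(rels, k):
    """DCG with linear gain over positions i = 1..k."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))


def idcg_at_k(labels, k):
    """Ideal DCG: DCG of the labels sorted by relevance in descending order."""
    return dcg_at_k(sorted(labels, reverse=True), k)


labels = [0, 1, 1, 0, 1]  # implicit 0/1 labels of all candidate items
print(round(idcg_at_k(labels, 5), 4))  # 2.1309
```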

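For implicit (0/1) scores, the ideal ordering simply places every relevant item first, so iDCG equals the sum of the first min(n, k) position weights, where n is the number of relevant items.

NDCG: Normalized Discounted Cumulative Gain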

Because different searches return lists of different lengths, DCG is an absolute value that cannot be compared directly across queries. NDCG therefore divides DCG by iDCG, turning it into a relative score between 0 and 1.

$$NDCG = \frac{DCG}{iDCG}$$
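A minimal sketch of the full formula (function names are hypothetical). It assumes that `ranked_rels` already contains every relevant item, so iDCG can be computed by re-sorting the same values. If some relevant items never appear in the list, iDCG should be computed from the full label set instead. A query with no relevant items is mapped to 0 here rather than dividing by zero; that choice is an assumption, not part of the definition.

```python
import math


def dcg_at_k(rels, k):
    """DCG with linear gain over positions i = 1..k."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))


def ndcg_at_k(ranked_rels, k):
    """NDCG@k = DCG@k / iDCG@k, where iDCG re-sorts the same relevance values."""
    ideal = dcg_at_k(sorted(ranked_rels, reverse=True), k)
    # A query with no relevant items has iDCG = 0; return 0 instead of dividing by zero
    if ideal == 0:
        return 0.0
    return dcg_at_k(ranked_rels, k) / ideal


print(round(ndcg_at_k([1, 0, 1, 1, 0], 5), 4))  # 0.906
print(ndcg_at_k([1, 1, 1, 0, 0], 5))            # 1.0 (already ideal)
```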

2 Code Implementation

At first glance the theory above looks simple, but applying it turns out to be quite involved. Many questions have to be settled, such as how the similarity scores are defined and which score the list is sorted by, and the code is easy to get tangled up in. Below are two implementations, a torch version and a numpy version. Both handle only implicit (0/1) scores.

torch

import torch

# scores: predicted score of each item(i); labels: ground-truth label of item(i).
# Because this is implicit-feedback data, labels are only 0/1 click values.
scores = torch.tensor([[0, 0.1, 0.3, 0.4, 0.5]])
labels = torch.tensor([[0, 1, 1, 0, 1]])
k = 5

# Sort by predicted score in descending order to get the recommended item ids
rank = (-scores).argsort(dim=1)
cut = rank[:, :k]

# Relevance (0/1) of each ranked item, i.e. whether it is a hit
hits = labels.gather(1, cut).float()

# Ranked positions i = 1..k
position = torch.arange(1, k + 1, dtype=torch.float32)

# Position weight 1 / log2(i + 1), so the top position has weight 1
weights = 1 / torch.log2(position + 1)

# DCG: weighted sum of the gains
dcg = (hits * weights).sum(1)

# iDCG: with 0/1 relevance the ideal list puts all relevant items first,
# so iDCG is the sum of the first min(n, k) weights (n = number of relevant items)
idcg = torch.stack([weights[:min(int(n), k)].sum() for n in labels.sum(1)])

ndcg = dcg / idcg
print(ndcg)

numpy

import numpy as np


def getDCG(scores):
    # Discount log2(i + 1) for positions i = 1..n
    discounts = np.log2(np.arange(scores.shape[0], dtype=np.float32) + 2)
    # Linear-gain variant: np.sum(np.divide(scores, discounts), dtype=np.float32)
    # Exponential gain 2^rel - 1
    return np.sum(np.divide(np.power(2, scores) - 1, discounts), dtype=np.float32)


def getNDCG(rank_list, pos_items):
    # Implicit feedback: every clicked (positive) item has relevance 1
    relevance = np.ones_like(pos_items)
    it2rel = {it: r for it, r in zip(pos_items, relevance)}
    # Relevance of each item in the recommended order (0 if it was not clicked)
    rank_scores = np.asarray([it2rel.get(it, 0.0) for it in rank_list], dtype=np.float32)
    # Ideal DCG: all positives ranked first, truncated to the length of the list
    idcg = getDCG(relevance[:len(rank_list)])
    dcg = getDCG(rank_scores)
    if dcg == 0.0:
        return 0.0
    return dcg / idcg


# l1 is the recommended ranking, l2 is the list of actually clicked items
l1 = [4, 3, 2, 1, 0]
l2 = [4, 2, 1]
a = getNDCG(l1, l2)
print(a)
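Both implementations above handle only implicit 0/1 scores. Below is a minimal sketch of how the same idea might extend to explicit 1-5 ratings. It assumes a hypothetical `ratings` dict mapping item id to rating, uses the exponential gain $2^{rel}-1$, and gives unrated items relevance 0. The function names are illustrative, not from the original code.

```python
import math


def dcg(gains):
    """DCG with exponential gain 2**rel - 1 and discount log2(i + 1), i = 1..n."""
    return sum((2 ** rel - 1) / math.log2(i + 1) for i, rel in enumerate(gains, start=1))


def ndcg_graded(rank_list, ratings, k):
    """NDCG@k for explicit ratings; items without a rating get relevance 0."""
    ranked = [ratings.get(item, 0) for item in rank_list[:k]]
    # Ideal list: the highest ratings first, truncated to k
    ideal = sorted(ratings.values(), reverse=True)[:k]
    idcg = dcg(ideal)
    return dcg(ranked) / idcg if idcg > 0 else 0.0


ratings = {4: 5, 2: 3, 1: 1}  # hypothetical 1-5 ratings for the clicked items
print(round(ndcg_graded([4, 3, 2, 1, 0], ratings, 5), 4))
print(ndcg_graded([4, 2, 1, 3, 0], ratings, 5))  # 1.0 (ideal order)
```

Using the exponential gain means that placing a 5-rated item first matters far more than the order of low-rated items. This is usually the reason for choosing it over the linear gain when ratings are graded.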

边栏推荐

- 已解决:一个表中可以有多个自增列吗

- 密码学系列之:PKI的证书格式表示X.509

- A Dynamic Near-Optimal Algorithm for Online Linear Programming

- Flutter System Architecture(Flutter系统架构图)

- pytorch的模型保存加载和继续训练

- Huawei cloud releases Latin American Internet strategy

- [proteus simulation] 74LS138 decoder water lamp

- MySQL中如何计算同比和环比

- 程序员必看的学习网站

- [proteus simulation] H-bridge drive DC motor composed of triode + key forward and reverse control

猜你喜欢

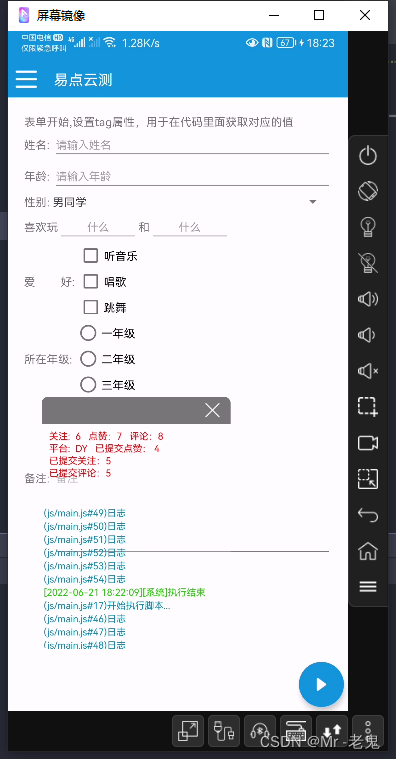

EasyClick 固定状态日志窗口

Introduction of neural networks for Intelligent Computing (Hopfield network DHNN, CHNN)

Xunrui CMS custom data interface PHP executable code

从感知机到Transformer,一文概述深度学习简史

深度学习常用损失函数总览:基本形式、原理、特点

底部菜单添加的链接无法跳转到二级页面的问题

R语言organdata 数据集可视化

【513. 找树左下角的值】

MySQL中如何计算同比和环比

一行代码为特定状态绑定SwiftUI视图动画

随机推荐

Kotlin1.6.20 new features context receivers tips

R语言 co2数据集 可视化

软件测试——测试用例设计&测试分类详解

Code to image converter

Notes d'apprentissage de golang - structure

Stochastic Adaptive Dynamics of a Simple Market as a Non-Stationary Multi-Armed Bandit Problem

79-不要看到有order by xxx desc就创建desc降序索引-文末有赠书福利

85-这些SQL调优小'技巧',你学废了吗?

Easyclick fixed status log window

程序员必看的学习网站

what? You can't be separated by wechat

R 语言 wine 数据集可视化

R 语言nutrient数据集的可视化

R语言organdata 数据集可视化

Moke 5. Service discovery -nacos

R language airpassengers dataset visualization

How to use feign to construct multi parameter requests

R language CO2 dataset visualization

R language usarrests dataset visualization

云服务器中安装mysql(2022版)