
Developing an Operator with kubebuilder (Getting Started)

2022-06-26 16:15:00 【chenxy02】

Original article: Using kubebuilder to understand k8s CRDs (Zhihu)

To understand k8s CRDs, you first need to understand the k8s controller pattern.

- For example, the deployment controller in kube-controller-manager is given three informer objects during initialization, which watch Deployments, ReplicaSets, and Pods respectively.

- Each informer first lists these objects and caches them locally, then watches them for changes, which amounts to incremental updates.
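To make the list-then-watch behaviour concrete, here is a toy, stdlib-only Go model of what an informer does: an initial list fills a local cache, and later watch events update only the affected entries. It is an illustration under simplifying assumptions, not client-go's API; the types `EventType`, `Event` and `Cache` are hypothetical, and real controllers get this behaviour from client-go informers (for example a `SharedInformerFactory`).

```go
package main

import (
	"fmt"
	"sort"
)

// EventType is a hypothetical stand-in for the kinds of watch events.
type EventType string

const (
	Added    EventType = "ADDED"
	Modified EventType = "MODIFIED"
	Deleted  EventType = "DELETED"
)

// Event is a hypothetical watch event: an object key plus its replica count.
type Event struct {
	Type     EventType
	Key      string // "namespace/name"
	Replicas int
}

// Cache is a toy local store keyed by "namespace/name".
type Cache struct {
	items map[string]int
}

// NewCacheFromList models the initial "list": every existing object is copied into the cache.
func NewCacheFromList(initial map[string]int) *Cache {
	c := &Cache{items: make(map[string]int, len(initial))}
	for k, v := range initial {
		c.items[k] = v
	}
	return c
}

// Apply models one "watch" event: only the affected key changes (an incremental update).
func (c *Cache) Apply(e Event) {
	switch e.Type {
	case Added, Modified:
		c.items[e.Key] = e.Replicas
	case Deleted:
		delete(c.items, e.Key)
	}
}

// Keys returns the cached keys in sorted order so output is deterministic.
func (c *Cache) Keys() []string {
	keys := make([]string, 0, len(c.items))
	for k := range c.items {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	cache := NewCacheFromList(map[string]int{"default/web": 3, "default/api": 2})
	for _, e := range []Event{
		{Type: Modified, Key: "default/web", Replicas: 5},
		{Type: Added, Key: "default/db", Replicas: 1},
		{Type: Deleted, Key: "default/api"},
	} {
		cache.Apply(e)
	}
	fmt.Println(cache.Keys(), cache.items["default/web"]) // [default/db default/web] 5
}
```

After the list, the controller never re-reads everything: the deleted key disappears, the added one appears, and the modified one carries its new value.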

```go
// Excerpt from kube-controller-manager: the deployment controller is built
// from the Deployment, ReplicaSet and Pod informers plus an API client.
func startDeploymentController(ctx ControllerContext) (controller.Interface, bool, error) {
	dc, err := deployment.NewDeploymentController(
		ctx.InformerFactory.Apps().V1().Deployments(),
		ctx.InformerFactory.Apps().V1().ReplicaSets(),
		ctx.InformerFactory.Core().V1().Pods(),
		ctx.ClientBuilder.ClientOrDie("deployment-controller"),
	)
	if err != nil {
		return nil, true, fmt.Errorf("error creating Deployment controller: %v", err)
	}
	// Start the sync workers; they run until ctx.Stop is closed.
	go dc.Run(int(ctx.ComponentConfig.DeploymentController.ConcurrentDeploymentSyncs), ctx.Stop)
	return nil, true, nil
}
```

- Internally, the controller then runs sync, which is the Reconcile loop: put simply, it compares the object's actualState with its expectState and performs the corresponding create/delete operations, such as scaling a Deployment up or down.

- To compute the difference, subtract the sum of the replica counts of all active ReplicaSets from the number of Pods allowed to be created.

- If the difference is positive, the Deployment needs to scale up, and the ReplicaSets are sorted with ReplicaSetsBySizeNewer (larger first, newer first on ties).

- If the difference is negative, it needs to scale down, and the ReplicaSets are sorted with ReplicaSetsBySizeOlder (larger first, older first on ties).

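The difference is calculated as follows:

```go
// allowedSize: Pods the Deployment may have; allRSsReplicas: sum of all ReplicaSets' replicas
deploymentReplicasToAdd := allowedSize - allRSsReplicas

var scalingOperation string
switch {
case deploymentReplicasToAdd > 0:
	// Scale up: among equally sized ReplicaSets, newer ones come first
	sort.Sort(controller.ReplicaSetsBySizeNewer(allRSs))
	scalingOperation = "up"
case deploymentReplicasToAdd < 0:
	// Scale down: among equally sized ReplicaSets, older ones come first
	sort.Sort(controller.ReplicaSetsBySizeOlder(allRSs))
	scalingOperation = "down"
}
```

Once you understand the k8s controller pattern, a CRD simply comes with a controller that you write yourself: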

- It watches and processes the resources you defined yourself.

- The following diagram illustrates this vividly.

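Install Kubebuilder

```shell
wget https://github.com/kubernetes-sigs/kubebuilder/releases/download/v3.1.0/kubebuilder_linux_amd64
chmod +x kubebuilder_linux_amd64  # the downloaded binary is not executable by default
mv kubebuilder_linux_amd64 /usr/local/bin/kubebuilder
```

Create the scaffolding project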

- Create a project directory and initialize the project in it; --repo sets the Go module path of the code repository:

```shell
mkdir -p ~/projects/guestbook
cd ~/projects/guestbook
kubebuilder init --domain my.domain --repo my.domain/guestbook
```

- View the files generated in this directory:

```text
$ tree
.
├── config
│   ├── default
│   │   ├── kustomization.yaml
│   │   ├── manager_auth_proxy_patch.yaml
│   │   └── manager_config_patch.yaml
│   ├── manager
│   │   ├── controller_manager_config.yaml
│   │   ├── kustomization.yaml
│   │   └── manager.yaml
│   ├── prometheus
│   │   ├── kustomization.yaml
│   │   └── monitor.yaml
│   └── rbac
│       ├── auth_proxy_client_clusterrole.yaml
│       ├── auth_proxy_role_binding.yaml
│       ├── auth_proxy_role.yaml
│       ├── auth_proxy_service.yaml
│       ├── kustomization.yaml
│       ├── leader_election_role_binding.yaml
│       ├── leader_election_role.yaml
│       ├── role_binding.yaml
│       └── service_account.yaml
├── Dockerfile
├── go.mod
├── go.sum
├── hack
│   └── boilerplate.go.txt
├── main.go
├── Makefile
└── PROJECT

6 directories, 24 files
```

Create an API

- Create an API with group/version webapp/v1

- Create a new Kind named Guestbook

```shell
kubebuilder create api --group webapp --version v1 --kind Guestbook
```

- Looking at the result, many more files have been generated. Among them, api/v1/guestbook_types.go is where the API is defined, and controllers/guestbook_controller.go holds the reconcile logic.

```text
$ tree
.
├── api
│   └── v1
│       ├── groupversion_info.go
│       ├── guestbook_types.go
│       └── zz_generated.deepcopy.go
├── bin
│   └── controller-gen
├── config
│   ├── crd
│   │   ├── kustomization.yaml
│   │   ├── kustomizeconfig.yaml
│   │   └── patches
│   │       ├── cainjection_in_guestbooks.yaml
│   │       └── webhook_in_guestbooks.yaml
│   ├── default
│   │   ├── kustomization.yaml
│   │   ├── manager_auth_proxy_patch.yaml
│   │   └── manager_config_patch.yaml
│   ├── manager
│   │   ├── controller_manager_config.yaml
│   │   ├── kustomization.yaml
│   │   └── manager.yaml
│   ├── prometheus
│   │   ├── kustomization.yaml
│   │   └── monitor.yaml
│   ├── rbac
│   │   ├── auth_proxy_client_clusterrole.yaml
│   │   ├── auth_proxy_role_binding.yaml
│   │   ├── auth_proxy_role.yaml
│   │   ├── auth_proxy_service.yaml
│   │   ├── guestbook_editor_role.yaml
│   │   ├── guestbook_viewer_role.yaml
│   │   ├── kustomization.yaml
│   │   ├── leader_election_role_binding.yaml
│   │   ├── leader_election_role.yaml
│   │   ├── role_binding.yaml
│   │   └── service_account.yaml
│   └── samples
│       └── webapp_v1_guestbook.yaml
├── controllers
│   ├── guestbook_controller.go
│   └── suite_test.go
├── Dockerfile
├── go.mod
├── go.sum
├── hack
│   └── boilerplate.go.txt
├── main.go
├── Makefile
└── PROJECT

13 directories, 37 files
```

- Add a log statement to the reconcile function in controllers/guestbook_controller.go:

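```go
// ctrl and log (sigs.k8s.io/controller-runtime/pkg/log) are already imported by the scaffold.
func (r *GuestbookReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	logger := log.FromContext(ctx)

	// your logic here
	// req.String() prints the object's "namespace/name".
	logger.Info("print_req", "req", req.String())

	return ctrl.Result{}, nil
}
```

Deploy to the k8s cluster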

- Build the image

```shell
make docker-build IMG=guestbook:v1.0  # essentially runs docker build -t guestbook:v1.0 .
```

- You usually need to modify the Dockerfile to set a Go module proxy (GOPROXY), so that go mod download does not time out when it cannot reach the default source.

- Next, load the image into the node's local image store manually. If the underlying container runtime is containerd (operated with ctr), export the image with docker save and then import it:

```shell
docker save guestbook:v1.0 > a.tar
ctr --namespace k8s.io images import a.tar
```

- Because the project uses kube-rbac-proxy, whose image may fail to download, that image may also need to be handled manually.

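- Now deploy the CRD and the controller:

```shell
make deploy IMG=guestbook:v1.0  # essentially runs kustomize build config/default | kubectl apply -f -
```

- Check the objects deployed in the guestbook-system namespace (for example with kubectl get all -n guestbook-system)

- Check the api-resources (for example with kubectl api-resources | grep guestbook)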

- Deploy a Guestbook instance:

```shell
kubectl apply -f config/samples/webapp_v1_guestbook.yaml
```

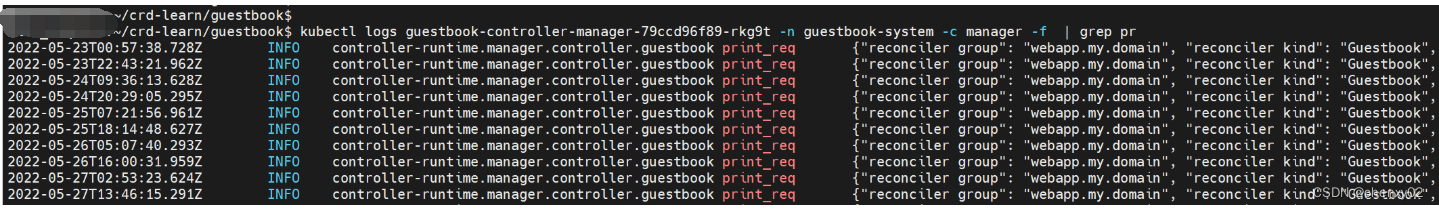

```text
guestbook.webapp.my.domain/guestbook-cxy created
```

- Check the CRD controller's logs: the log line printed by the reconcile function shows that the
