
PaddleNLP's UIE relation extraction model (with executive relation extraction as an example)

2022-07-25 14:22:00 [Ting]

Previous projects:

PaddleNLP and UIE in practice: entity extraction tasks [taxi data, express waybills]

Application practice: classification model integrator [PaddleHub, Finetune, prompt]

Link to this project: just fork it and it can be reproduced directly.

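0. Background

This project demonstrates how to fine-tune the model with a small number of samples to perform relation extraction.

Dataset:

Sample of the executive dataset (translated from the original Chinese; the character offsets in the annotations count characters of the Chinese originals):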

Ma Yun is from Hangzhou, Zhejiang Province, and is one of the main founders of Alibaba Group. He is currently chairman and CEO of Alibaba Group. He was the first mainland entrepreneur to appear on the cover of Forbes magazine in the more than 50 years since its founding, and was once named a future global leader.

Ren Zhengfei is the founder and president of Huawei, a private telecommunications equipment company in mainland China. His theory and practice of corporate "crisis management" have had a wide influence both inside and outside the industry.

Ma Huateng is one of the main founders of Tencent and currently serves as chairman of the board and CEO of the holding company. A native Shenzhen entrepreneur, he majored in computers and applications at Shenzhen University and obtained a Bachelor of Science degree there in 1993.

Robin Li is the founder, chairman and CEO of Baidu, fully responsible for the company's strategic planning and operational management. After years of development, Baidu has firmly held more than 70% of China's search engine market.

Lei Jun: in August 2012, Xiaomi, the company he invested in and founded, officially launched the Xiaomi phone.

Liu Qiangdong, from Suyu District, Suqian City, Jiangsu Province, is CEO of 360buy. He graduated from the Department of Sociology of Renmin University of China in 1996.

Mr. Liu, a well-known Chinese entrepreneur and investor, is former chairman of Lenovo Holdings Limited and chairman of the board of directors of Lenovo Group Co., Ltd.

```json
{"id":1845,"text":"Ma Yun is from Hangzhou, Zhejiang Province, and is one of the main founders of Alibaba Group. He is currently chairman and CEO of Alibaba Group. He was the first mainland entrepreneur to appear on the cover of Forbes magazine in the more than 50 years since its founding, and was once named a future global leader.","entities":[{"id":945,"label":"person name","start_offset":0,"end_offset":2},{"id":946,"label":"company","start_offset":10,"end_offset":16}],"relations":[{"id":11,"from_id":945,"to_id":946,"type":"senior executive"}]}
{"id":1846,"text":"Ren Zhengfei is the founder and president of Huawei, a private telecommunications equipment company in mainland China. His theory and practice of corporate \"crisis management\" have had a wide influence both inside and outside the industry.","entities":[{"id":949,"label":"person name","start_offset":0,"end_offset":3},{"id":950,"label":"company","start_offset":19,"end_offset":23}],"relations":[{"id":13,"from_id":949,"to_id":950,"type":"senior executive"}]}
{"id":1847,"text":"Ma Huateng is one of the main founders of Tencent and currently serves as chairman of the board and CEO of the holding company. A native Shenzhen entrepreneur, he majored in computers and applications at Shenzhen University and obtained a Bachelor of Science degree there in 1993.","entities":[{"id":954,"label":"person name","start_offset":0,"end_offset":3},{"id":955,"label":"company","start_offset":5,"end_offset":7}],"relations":[{"id":16,"from_id":954,"to_id":955,"type":"senior executive"}]}
{"id":1848,"text":"Robin Li is the founder, chairman and CEO of Baidu, fully responsible for the company's strategic planning and operational management. After years of development, Baidu has firmly held more than 70% of China's search engine market.","entities":[{"id":932,"label":"person name","start_offset":0,"end_offset":3},{"id":933,"label":"company","start_offset":4,"end_offset":8},{"id":934,"label":"company","start_offset":25,"end_offset":29}],"relations":[{"id":6,"from_id":932,"to_id":933,"type":"senior executive"}]}
{"id":1849,"text":"Lei Jun: in August 2012, Xiaomi, the company he invested in and founded, officially launched the Xiaomi phone.","entities":[{"id":941,"label":"person name","start_offset":0,"end_offset":2},{"id":942,"label":"company","start_offset":17,"end_offset":21}],"relations":[{"id":9,"from_id":941,"to_id":942,"type":"senior executive"}]}
```
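To make the record structure concrete, here is a small parsing sketch. It assumes the doccano relation-export layout shown above (`text`; `entities` with `start_offset`/`end_offset`, end-exclusive; `relations` with `from_id`/`to_id`/`type`). The function name `relation_triples` is hypothetical and not part of PaddleNLP. Because the offsets in the real dataset count characters of the original Chinese sentences, the example record at the bottom is a made-up English one with offsets computed for its own text.

```python
import json


def relation_triples(line):
    """Turn one doccano relation-export line into (subject, relation, object) triples."""
    record = json.loads(line)
    text = record["text"]
    # Map entity id -> the substring covered by its [start_offset, end_offset) span.
    spans = {
        ent["id"]: text[ent["start_offset"]:ent["end_offset"]]
        for ent in record["entities"]
    }
    # Each relation links two entity ids; resolve both ends to their surface text.
    return [
        (spans[rel["from_id"]], rel["type"], spans[rel["to_id"]])
        for rel in record["relations"]
    ]


# Hypothetical English record, offsets counted on this English string.
example = json.dumps({
    "text": "Lei Jun founded Xiaomi.",
    "entities": [
        {"id": 1, "label": "person name", "start_offset": 0, "end_offset": 7},
        {"id": 2, "label": "company", "start_offset": 16, "end_offset": 22},
    ],
    "relations": [{"id": 1, "from_id": 1, "to_id": 2, "type": "senior executive"}],
})
print(relation_triples(example))  # [('Lei Jun', 'senior executive', 'Xiaomi')]
```

Running such a check over the exported file is a quick way to catch the mislabeled relation directions warned about below before training.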

1. Data loading

When annotating the data, take care not to get the relation annotations wrong; see the annotation tutorial in the previous article for details.

The conversion parameters are listed below. task_type, options, prompt_prefix and separator come from PaddleNLP's full doccano conversion script; the simplified preprocess.py below only handles extraction and does not define them.

doccano_file: The annotation file exported from doccano (passed as --input_file to the preprocess.py script below).

save_dir: Directory where the training data is stored; defaults to the data directory.

negative_ratio: Maximum ratio of negative examples. This parameter only applies to extraction tasks; constructing a suitable number of negative examples can improve the model. The number of negative examples depends on the actual number of labels: maximum number of negative examples = negative_ratio * number of positive examples. It only affects the training set and defaults to 5. To keep the evaluation metrics accurate, the validation and test sets are by default built with all negative examples.

splits: Proportions of the training, validation and test sets when splitting the dataset. The default [0.8, 0.1, 0.1] splits the data 8:1:1 into training, validation and test sets.

task_type: Task type; extraction and classification tasks are supported.

options: Category labels for the classification task; only applies to classification tasks. Defaults to ["positive", "negative"].

prompt_prefix: Prompt prefix for the classification task; only applies to classification tasks. Defaults to "sentiment orientation".

is_shuffle: Whether to shuffle the dataset randomly; defaults to True.

seed: Random seed; defaults to 1000.

separator: Separator between the entity category / evaluation dimension and the classification label; only applies to entity-level / dimension-level classification tasks. Defaults to "##".
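The negative_ratio rule (at most negative_ratio * number of positive examples) can be sketched as follows. This is a hypothetical illustration, not PaddleNLP's `convert_ext_examples`: it assumes a "negative prompt" is a schema label with no answer in the current document, and the function name `sample_negative_prompts` is made up.

```python
import random


def sample_negative_prompts(positive_prompts, candidate_prompts, negative_ratio, rng=None):
    """Hypothetical sketch: choose negative prompts for one document.

    Negatives are candidate labels with no answer in this document; at most
    negative_ratio * len(positive_prompts) of them are kept, sampled without
    replacement when there are more than that.
    """
    rng = rng or random.Random()
    positives = set(positive_prompts)
    negatives = [p for p in candidate_prompts if p not in positives]
    max_negatives = negative_ratio * len(positive_prompts)
    if len(negatives) <= max_negatives:
        return negatives
    return rng.sample(negatives, max_negatives)


candidates = ["person name", "company", "product", "city", "school"]
# One positive label and ratio 2 -> at most 2 negatives out of the 4 available.
print(sample_negative_prompts(["company"], candidates, 2, random.Random(0)))
```

Capping by a ratio keeps the training set from being dominated by "no answer" prompts when the schema has many labels.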

```python
import os
import time
import argparse
import json

import numpy as np

from utils_1 import set_seed, convert_ext_examples


def do_convert():
    set_seed(args.seed)

    tic_time = time.time()
    if not os.path.exists(args.input_file):
        raise ValueError("Please input the correct path of doccano file.")

    if not os.path.exists(args.save_dir):
        os.makedirs(args.save_dir)

    if len(args.splits) != 0 and len(args.splits) != 3:
        raise ValueError("Only []/ len(splits)==3 accepted for splits.")

    # Compare with a tolerance: float ratios may not sum to exactly 1
    if args.splits and abs(sum(args.splits) - 1) > 1e-6:
        raise ValueError(
            "Please set correct splits, sum of elements in splits should be equal to 1."
        )

    with open(args.input_file, "r", encoding="utf-8") as f:
        raw_examples = f.readlines()

    def _create_ext_examples(examples, negative_ratio=0, shuffle=False):
        # Per document: first-stage (entity) prompts + second-stage (relation) prompts
        entities, relations = convert_ext_examples(examples, negative_ratio)
        examples = [e + r for e, r in zip(entities, relations)]
        if shuffle:
            indexes = np.random.permutation(len(examples))
            examples = [examples[i] for i in indexes]
        return examples

    def _save_examples(save_dir, file_name, examples):
        count = 0
        save_path = os.path.join(save_dir, file_name)
        with open(save_path, "w", encoding="utf-8") as f:
            for example in examples:
                # Write one JSON object per prompt example
                for x in example:
                    f.write(json.dumps(x, ensure_ascii=False) + "\n")
                    count += 1
        print("\nSave %d examples to %s." % (count, save_path))

    if len(args.splits) == 0:
        examples = _create_ext_examples(raw_examples, args.negative_ratio,
                                        args.is_shuffle)
        _save_examples(args.save_dir, "train.txt", examples)
    else:
        if args.is_shuffle:
            indexes = np.random.permutation(len(raw_examples))
            raw_examples = [raw_examples[i] for i in indexes]

        i1, i2, _ = args.splits
        p1 = int(len(raw_examples) * i1)
        p2 = int(len(raw_examples) * (i1 + i2))

        # Only the training split is built with args.negative_ratio;
        # dev/test use the helper's default (0)
        train_examples = _create_ext_examples(
            raw_examples[:p1], args.negative_ratio, args.is_shuffle)
        dev_examples = _create_ext_examples(raw_examples[p1:p2])
        test_examples = _create_ext_examples(raw_examples[p2:])

        _save_examples(args.save_dir, "train.txt", train_examples)
        _save_examples(args.save_dir, "dev.txt", dev_examples)
        _save_examples(args.save_dir, "test.txt", test_examples)

    print('Finished! It takes %.2f seconds' % (time.time() - tic_time))


if __name__ == "__main__":
    # yapf: disable
    parser = argparse.ArgumentParser()

    parser.add_argument("--input_file", default="./data/data.json", type=str, help="The data file exported from doccano platform.")
    parser.add_argument("--save_dir", default="./data", type=str, help="The path to save processed data.")
    parser.add_argument("--negative_ratio", default=5, type=int, help="Used only for the extraction task, the ratio of positive and negative samples, number of negative samples = negative_ratio * number of positive samples")
    # "%%" is required: argparse %-formats help strings, so a bare "%" breaks --help
    parser.add_argument("--splits", default=[0.8, 0.1, 0.1], type=float, nargs="*", help="The ratio of samples in datasets. [0.6, 0.2, 0.2] means 60%% samples used for training, 20%% for evaluation and 20%% for test.")
    # type=bool would turn any non-empty string (even "False") into True, so parse the text explicitly
    parser.add_argument("--is_shuffle", default=True, type=lambda s: s.lower() in ("true", "1", "yes"), help="Whether to shuffle the labeled dataset, defaults to True.")
    parser.add_argument("--seed", type=int, default=1000, help="random seed for initialization")

    args = parser.parse_args()
    # yapf: enable

    do_convert()
```

```
!python preprocess.py --input_file ./data/gaoguan.jsonl \
    --save_dir ./data/ \
    --negative_ratio 5 \
    --splits 0.85 0.15 0 \
    --seed 1000
```

```
Converting doccano data...
100%|██████████████████████████████████████████| 8/8 [00:00<00:00, 21358.65it/s]
Adding negative samples for first stage prompt...
100%|██████████████████████████████████████████| 8/8 [00:00<00:00, 88534.12it/s]
Constructing relation prompts...
Adding negative samples for second stage prompt...
100%|██████████████████████████████████████████| 8/8 [00:00<00:00, 24070.61it/s]
Converting doccano data...
100%|██████████████████████████████████████████| 2/2 [00:00<00:00, 25420.02it/s]
Adding negative samples for first stage prompt...
100%|██████████████████████████████████████████| 2/2 [00:00<00:00, 45839.39it/s]
Constructing relation prompts...
Adding negative samples for second stage prompt...
100%|██████████████████████████████████████████| 2/2 [00:00<00:00, 30066.70it/s]
Converting doccano data...
0it [00:00, ?it/s]
Adding negative samples for first stage prompt...
0it [00:00, ?it/s]

Save 64 examples to ./data/train.txt.

Save 6 examples to ./data/dev.txt.
```
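The progress bars show 8 documents for training, 2 for validation and 0 for testing, i.e. 10 annotated documents split with `--splits 0.85 0.15 0`. The slicing in `do_convert` truncates with `int()`, which can be checked with the small helper below; `split_sizes` is a hypothetical name that only restates the script's arithmetic.

```python
def split_sizes(num_docs, splits):
    """Restate do_convert()'s slicing: return (train, dev, test) document counts."""
    i1, i2, _ = splits
    p1 = int(num_docs * i1)          # end of the training slice (truncated)
    p2 = int(num_docs * (i1 + i2))   # end of the dev slice (truncated)
    return p1, p2 - p1, num_docs - p2


print(split_sizes(10, [0.85, 0.15, 0]))  # (8, 2, 0)
```

Because of the truncation, any rounding remainder ends up in the later splits, which matters for very small datasets like this one.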

Part of the output (translated; the start/end offsets index characters of the original Chinese text):

```json
{"content": "NetEase chief architect Ding Lei founded NetEase in June 1997 and has grown it from a private company of a dozen or so people into a well-known Internet technology company with nearly 3,000 employees, publicly listed in the United States.", "result_list": [{"text": "Ding Lei", "start": 12, "end": 14}], "prompt": "person name"}
{"content": "NetEase chief architect Ding Lei founded NetEase in June 1997 and has grown it from a private company of a dozen or so people into a well-known Internet technology company with nearly 3,000 employees, publicly listed in the United States.", "result_list": [{"text": "NetEase", "start": 0, "end": 4}, {"text": "NetEase", "start": 23, "end": 27}], "prompt": "company"}
{"content": "NetEase chief architect Ding Lei founded NetEase in June 1997 and has grown it from a private company of a dozen or so people into a well-known Internet technology company with nearly 3,000 employees, publicly listed in the United States.", "result_list": [{"text": "Ding Lei", "start": 12, "end": 14}, {"text": "Ding Lei", "start": 12, "end": 14}], "prompt": "senior executive of NetEase"}
{"content": "Robin Li is the founder, chairman and CEO of Baidu, fully responsible for the company's strategic planning and operational management. After years of development, Baidu has firmly held more than 70% of China's search engine market.", "result_list": [{"text": "Robin Li", "start": 0, "end": 3}], "prompt": "person name"}
{"content": "Robin Li is the founder, chairman and CEO of Baidu, fully responsible for the company's strategic planning and operational management. After years of development, Baidu has firmly held more than 70% of China's search engine market.", "result_list": [{"text": "Baidu", "start": 4, "end": 8}], "prompt": "company"}
{"content": "Robin Li is the founder, chairman and CEO of Baidu, fully responsible for the company's strategic planning and operational management. After years of development, Baidu has firmly held more than 70% of China's search engine market.", "result_list": [{"text": "Robin Li", "start": 0, "end": 3}], "prompt": "senior executive of Baidu"}
```
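Each line above (prompt, content, result_list) becomes a pair of binary target vectors: the training loop below casts `start_ids`/`end_ids` to float32 and fits them with `BCELoss`. The sketch shows the idea at character level, marking the first character of each span in `start_ids` and its last character (`end - 1`) in `end_ids`. It is a simplified, hypothetical illustration: the real `convert_example` works on token positions after tokenizing the prompt and content together, which this sketch ignores.

```python
def span_pointer_labels(content, result_list):
    """Character-level sketch of UIE-style start/end pointer labels.

    Each answer span [start, end) puts a 1 at its first character in
    start_ids and at its last character (end - 1) in end_ids.
    """
    start_ids = [0.0] * len(content)
    end_ids = [0.0] * len(content)
    for span in result_list:
        start_ids[span["start"]] = 1.0
        end_ids[span["end"] - 1] = 1.0
    return start_ids, end_ids


start, end = span_pointer_labels(
    "Robin Li founded Baidu.", [{"text": "Robin Li", "start": 0, "end": 8}])
print(start.index(1.0), end.index(1.0))  # 0 7
```

Duplicate spans such as the repeated "Ding Lei" entry simply set the same positions twice, so they do not change the targets.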

2. Model training

```python
import argparse
import time
import os
from functools import partial

import paddle
from paddle.utils.download import get_path_from_url
from paddlenlp.datasets import load_dataset
from paddlenlp.transformers import ErnieTokenizer
from visualdl import LogWriter

from model import UIE
from utils_1 import set_seed, convert_example, reader, evaluate, create_dataloader, SpanEvaluator


def do_train():
    paddle.set_device(args.device)
    rank = paddle.distributed.get_rank()
    if paddle.distributed.get_world_size() > 1:
        paddle.distributed.init_parallel_env()

    set_seed(args.seed)

    # Note: encoder, hidden size and weight URL are hard-coded to uie-base;
    # --model only decides the directory the weights are downloaded into
    hidden_size = 768
    url = "https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base/model_state.pdparams"

    tokenizer = ErnieTokenizer.from_pretrained('ernie-3.0-base-zh')
    model = UIE('ernie-3.0-base-zh', hidden_size)

    if args.init_from_ckpt is not None:
        pretrained_model_path = args.init_from_ckpt
    else:
        pretrained_model_path = os.path.join(args.model, "model_state.pdparams")
        if not os.path.exists(pretrained_model_path):
            get_path_from_url(url, args.model)
    state_dict = paddle.load(pretrained_model_path)
    model.set_dict(state_dict)
    print("Init from: {}".format(pretrained_model_path))

    if paddle.distributed.get_world_size() > 1:
        model = paddle.DataParallel(model)

    train_ds = load_dataset(reader, data_path=args.train_path,
                            max_seq_len=args.max_seq_len, lazy=False)
    dev_ds = load_dataset(reader, data_path=args.dev_path,
                          max_seq_len=args.max_seq_len, lazy=False)

    trans_func = partial(convert_example, tokenizer=tokenizer,
                         max_seq_len=args.max_seq_len)

    train_data_loader = create_dataloader(dataset=train_ds, mode='train',
                                          batch_size=args.batch_size,
                                          trans_fn=trans_func)
    dev_data_loader = create_dataloader(dataset=dev_ds, mode='dev',
                                        batch_size=args.batch_size,
                                        trans_fn=trans_func)

    optimizer = paddle.optimizer.AdamW(
        learning_rate=args.learning_rate, parameters=model.parameters())

    criterion = paddle.nn.BCELoss()
    metric = SpanEvaluator()

    # Initialize the VisualDL loggers: training loss and dev metrics
    writer = LogWriter("./log/scalar_test")
    writer1 = LogWriter("./log/scalar_test1")

    loss_list = []
    global_step = 0
    best_step = 0
    best_f1 = 0
    tic_train = time.time()
    for epoch in range(1, args.num_epochs + 1):
        for batch in train_data_loader:
            input_ids, token_type_ids, att_mask, pos_ids, start_ids, end_ids = batch
            start_prob, end_prob = model(input_ids, token_type_ids, att_mask,
                                         pos_ids)
            start_ids = paddle.cast(start_ids, 'float32')
            end_ids = paddle.cast(end_ids, 'float32')
            # Average the binary cross-entropy of the start and end pointers
            loss_start = criterion(start_prob, start_ids)
            loss_end = criterion(end_prob, end_ids)
            loss = (loss_start + loss_end) / 2.0
            loss.backward()
            optimizer.step()
            optimizer.clear_grad()
            loss_list.append(float(loss))

            global_step += 1
            if global_step % args.logging_steps == 0 and rank == 0:
                time_diff = time.time() - tic_train
                loss_avg = sum(loss_list) / len(loss_list)
                writer.add_scalar(tag="train/loss", step=global_step, value=loss_avg)  # record loss
                print("global step %d, epoch: %d, loss: %.5f, speed: %.2f step/s"
                      % (global_step, epoch, loss_avg,
                         args.logging_steps / time_diff))
                tic_train = time.time()

            if global_step % args.valid_steps == 0 and rank == 0:
                # save_dir = os.path.join(args.save_dir, "model_%d" % global_step)
                # if not os.path.exists(save_dir):
                #     os.makedirs(save_dir)
                # save_param_path = os.path.join(save_dir, "model_state.pdparams")
                # paddle.save(model.state_dict(), save_param_path)

                precision, recall, f1 = evaluate(model, metric, dev_data_loader)
                writer1.add_scalar(tag="train/precision", step=global_step, value=precision)
                writer1.add_scalar(tag="train/recall", step=global_step, value=recall)
                writer1.add_scalar(tag="train/f1", step=global_step, value=f1)
                print("Evaluation precision: %.5f, recall: %.5f, F1: %.5f" %
                      (precision, recall, f1))
                if f1 > best_f1:
                    print(f"best F1 performance has been updated: {best_f1:.5f} --> {f1:.5f}")
                    best_f1 = f1
                    save_dir = os.path.join(args.save_dir, "model_best")
                    os.makedirs(save_dir, exist_ok=True)
                    save_best_param_path = os.path.join(save_dir,
                                                        "model_state.pdparams")
                    paddle.save(model.state_dict(), save_best_param_path)
                tic_train = time.time()


if __name__ == "__main__":
    # yapf: disable
    parser = argparse.ArgumentParser()

    parser.add_argument("--batch_size", default=2, type=int, help="Batch size per GPU/CPU for training.")
    # parser.add_argument("--batch_size", default=16, type=int, help="Batch size per GPU/CPU for training.")
    parser.add_argument("--learning_rate", default=1e-5, type=float, help="The initial learning rate for Adam.")
    parser.add_argument("--train_path", default="./data/train.txt", type=str, help="The path of train set.")
    parser.add_argument("--dev_path", default="./data/dev.txt", type=str, help="The path of dev set.")
    parser.add_argument("--save_dir", default='./checkpoint', type=str, help="The output directory where the model checkpoints will be written.")
    parser.add_argument("--max_seq_len", default=512, type=int, help="The maximum input sequence length. "
        "Sequences longer than this will be truncated, sequences shorter will be padded.")
    # These parameters determine how much training is done
    parser.add_argument("--num_epochs", default=1, type=int, help="Total number of training epochs to perform.")
    # parser.add_argument("--num_epochs", default=100, type=int, help="Total number of training epochs to perform.")
    parser.add_argument("--seed", default=1000, type=int, help="Random seed for initialization")
    parser.add_argument("--logging_steps", default=1, type=int, help="The interval steps to logging.")
    parser.add_argument("--valid_steps", default=2, type=int, help="The interval steps to evaluate model performance.")
    parser.add_argument('--device', choices=['cpu', 'gpu'], default="cpu", help="Select which device to train model, defaults to cpu.")
    parser.add_argument("--model", choices=["uie-base", "uie-tiny"], default="uie-tiny", type=str, help="Select the pretrained model for few-shot learning.")
    # Path of the model parameters used for initialization
    parser.add_argument("--init_from_ckpt", default=None, type=str, help="The path of model parameters for initialization.")

    args = parser.parse_args()
    # yapf: enable

    # Note: this overrides --device with the device Paddle detects (e.g. "gpu:0" when a GPU is available)
    args.device = paddle.device.get_device()
    do_train()
```
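The training loop treats every position as two independent yes/no decisions (is this the start of an answer span? the end?), scores each with `paddle.nn.BCELoss` (mean reduction) and averages the two losses. The pure-Python sketch below restates that arithmetic on plain lists so the logged values are easier to read; the function names are hypothetical, and the `eps` clamp is added here only to avoid `log(0)`, not claimed to match Paddle's internals.

```python
import math


def bce(probs, labels, eps=1e-12):
    """Mean binary cross-entropy over positions."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)  # clamp so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def uie_pointer_loss(start_prob, end_prob, start_ids, end_ids):
    """Average of the start-pointer and end-pointer BCE, as in the loop above."""
    return (bce(start_prob, start_ids) + bce(end_prob, end_ids)) / 2.0


# Uninformative 0.5 predictions give ln 2; confident correct ones approach 0.
print(round(uie_pointer_loss([0.5, 0.5], [0.5, 0.5], [1, 0], [0, 1]), 4))  # 0.6931
```

A logged loss such as 0.00002 therefore means the start/end probabilities on the training batches are almost exactly matching the 0/1 targets.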

```
!python finetune.py \
    --train_path "./data/train.txt" \
    --dev_path "./data/dev.txt" \
    --save_dir "./checkpoint" \
    --learning_rate 1e-5 \
    --batch_size 8 \
    --max_seq_len 512 \
    --num_epochs 50 \
    --model "uie-base" \
    --seed 1000 \
    --logging_steps 10 \
    --valid_steps 50 \
    --device "gpu"
```

Part of the training output (the full log is collapsed):

```
global step 640, epoch: 80, loss: 0.00002, speed: 3.99 step/s
global step 650, epoch: 82, loss: 0.00002, speed: 3.87 step/s
global step 660, epoch: 83, loss: 0.00002, speed: 3.99 step/s
global step 670, epoch: 84, loss: 0.00002, speed: 4.03 step/s
global step 680, epoch: 85, loss: 0.00002, speed: 4.00 step/s
global step 690, epoch: 87, loss: 0.00002, speed: 3.88 step/s
global step 700, epoch: 88, loss: 0.00002, speed: 3.99 step/s
Evaluation precision: 1.00000, recall: 0.85714, F1: 0.92308
global step 710, epoch: 89, loss: 0.00002, speed: 3.99 step/s
global step 720, epoch: 90, loss: 0.00002, speed: 4.00 step/s
global step 730, epoch: 92, loss: 0.00002, speed: 3.86 step/s
global step 740, epoch: 93, loss: 0.00002, speed: 3.97 step/s
global step 750, epoch: 94, loss: 0.00002, speed: 3.99 step/s
global step 760, epoch: 95, loss: 0.00002, speed: 3.99 step/s
global step 770, epoch: 97, loss: 0.00002, speed: 3.86 step/s
global step 780, epoch: 98, loss: 0.00002, speed: 3.98 step/s
global step 790, epoch: 99, loss: 0.00002, speed: 4.00 step/s
global step 800, epoch: 100, loss: 0.00001, speed: 4.00 step/s
Evaluation precision: 1.00000, recall: 0.85714, F1: 0.92308
```

A GPU environment is recommended; otherwise you may run out of memory. In a CPU environment, you can set model to uie-tiny and lower batch_size accordingly (note that the finetune.py above hard-codes the uie-base encoder, hidden size and weight URL).

To improve accuracy, train for more epochs by setting a larger --num_epochs.
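How long a given --num_epochs takes can be estimated from the number of training examples. Assuming the training loader keeps a final partial batch (drop_last=False, an assumption about `utils_1.create_dataloader`), one epoch has ceil(num_examples / batch_size) steps. With the 64 training examples written by preprocess.py and `--batch_size 8`, that is 8 steps per epoch, which matches the log excerpt above (step 640 at epoch 80, step 650 at epoch 82, step 800 at epoch 100); by the same arithmetic the excerpt appears to come from a run of at least 100 epochs rather than the 50 set in the command. The helper names are hypothetical.

```python
import math


def steps_per_epoch(num_examples, batch_size):
    # Assumes the last partial batch is kept (drop_last=False).
    return math.ceil(num_examples / batch_size)


def epoch_of_step(global_step, num_examples, batch_size):
    """1-based epoch in which a 1-based global step falls."""
    return math.ceil(global_step / steps_per_epoch(num_examples, batch_size))


print(steps_per_epoch(64, 8))         # 8
print(epoch_of_step(650, 64, 8))      # 82
```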

Configurable parameters (the defaults listed are those of PaddleNLP's finetune.py; the modified script above uses smaller defaults, namely batch_size 2, num_epochs 1, logging_steps 1, valid_steps 2 and model uie-tiny, all of which the command above overrides):

train_path: Path of the training set file.

dev_path: Path of the validation set file.

save_dir: Directory where the model is saved; defaults to ./checkpoint.

learning_rate: Learning rate; defaults to 1e-5.

batch_size: Batch size. Adjust it to the available GPU memory and lower it if memory is insufficient; defaults to 16.

max_seq_len: Maximum text length per segment; input longer than this is automatically split into segments. Defaults to 512.

num_epochs: Number of training epochs; defaults to 100.

model: The pretrained model to fine-tune from, either uie-base or uie-tiny; defaults to uie-base.

seed: Random seed; defaults to 1000.

logging_steps: Number of steps between log prints; defaults to 10.

valid_steps: Number of steps between evaluations; defaults to 100.

device: Device used for training, cpu or gpu.

3. Model evaluation

```
!python evaluate.py \
    --model_path ./checkpoint/model_best \
    --test_path ./data/dev.txt \
    --batch_size 8 \
    --max_seq_len 512
```

```
[2022-07-25 00:17:12,509] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.tokenizer.ErnieTokenizer'> to load './checkpoint/model_best'.
W0725 00:17:12.535902 1512 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1
W0725 00:17:12.540369 1512 gpu_resources.cc:91] device: 0, cuDNN Version: 7.6.
[2022-07-25 00:17:16,896] [ INFO] - -----------------------------
[2022-07-25 00:17:16,897] [ INFO] - Class Name: all_classes
[2022-07-25 00:17:16,897] [ INFO] - Evaluation Precision: 1.00000 | Recall: 0.85714 | F1: 0.92308
```

**model_path**: Path of the model directory to evaluate; it must contain the model weight file model_state.pdparams and the configuration file model_config.json.

**test_path**: Test set file used for evaluation. (The command above passes dev.txt because the 0.85/0.15/0 split leaves the test set empty.)

**batch_size**: Batch size; adjust it to your machine. Defaults to 16.

**max_seq_len**: Maximum text length per segment; input longer than this is automatically split into segments. Defaults to 512.

**model**: The model to use: uie-base, uie-medium, uie-mini, uie-micro or uie-nano. Defaults to uie-base.

**debug**: Whether to enable debug mode, in which each positive-example category is evaluated separately. This mode is only intended for model debugging and is off by default.
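SpanEvaluator reports span-level precision, recall and F1. Assuming it follows the usual definitions (a predicted span counts as correct only if it exactly matches a gold span), the metrics reduce to three counts. The reported numbers are, for example, consistent with 6 correct spans out of 6 predicted and 7 gold (P = 1.0, R = 6/7, F1 = 12/13); the log does not show the actual counts. `span_prf` is a hypothetical helper, not the SpanEvaluator API.

```python
def span_prf(num_correct, num_predicted, num_gold):
    """Span-level precision, recall and F1 from match counts (0.0 when undefined)."""
    precision = num_correct / num_predicted if num_predicted else 0.0
    recall = num_correct / num_gold if num_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print([round(x, 5) for x in span_prf(6, 6, 7)])  # [1.0, 0.85714, 0.92308]
```

Read this way, a precision of 1.0 with recall below 1.0 means the model produced no wrong spans on the dev set but missed some.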

4. Prediction

```python
# Relation extraction
import json
import os

from paddlenlp import Taskflow


def openreadtxt(file_name):
    """Read one input text per line, dropping trailing newlines and blank lines."""
    with open(file_name, 'r', encoding='UTF-8') as file:
        return [row.strip() for row in file if row.strip()]


data_input = openreadtxt('./input/test.txt')

# Extract each "company" first, then the "senior executive" of each company found
schema = {"company": "senior executive"}
few_ie = Taskflow('information_extraction', schema=schema, batch_size=16,
                  task_path='./checkpoint/model_best')
results = few_ie(data_input)

os.makedirs("./output", exist_ok=True)
# Mode "w" creates the file if needed and overwrites previous results
with open("./output/result.txt", "w", encoding='UTF-8') as f:
    for result in results:
        # ensure_ascii=False writes Chinese characters as-is instead of \u escapes
        line = json.dumps(result, ensure_ascii=False)
        f.write(line + "\n")
print("Data results have been exported")

for text, result in zip(data_input, results):
    print(text, result)
```
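The nested schema drives a two-stage extraction, matching the "first stage prompt" and "second stage prompt" steps in the preprocessing log: the top-level keys are the first-stage prompts, and each entity found in stage one is combined with the relation name into a second-stage prompt. In the original Chinese project (the data file is gaoguan.jsonl, from 高管, "senior executive") the schema is presumably `{"公司": "高管"}`, and PaddleNLP's Chinese relation prompts join entity and relation with 的 ("of"), e.g. "网易公司的高管", shown in translation above as "senior executive of NetEase". The sketch below is a hypothetical illustration of that prompt construction, not Taskflow's code; `second_stage_prompts` and its `joiner` parameter are made up.

```python
def second_stage_prompts(schema, stage1_hits, joiner="的"):
    """Build relation (second-stage) prompts from a nested schema.

    schema:      {first-stage label: relation name or list of relation names}
    stage1_hits: {first-stage label: [entity texts found in stage one]}
    Each prompt is entity text + joiner + relation name.
    """
    prompts = []
    for label, relations in schema.items():
        if isinstance(relations, str):
            relations = [relations]
        for entity_text in stage1_hits.get(label, []):
            for relation in relations:
                prompts.append(f"{entity_text}{joiner}{relation}")
    return prompts


schema = {"公司": "高管"}
print(list(schema))                                      # ['公司']
print(second_stage_prompts(schema, {"公司": ["拼多多"]}))  # ['拼多多的高管']
```

This is also why the labels at prediction time must match the annotation labels exactly: the fine-tuned model has only seen prompts built from those strings.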

Contents of the input file:

Huang Zheng, born in 1980 in Hangzhou, Zhejiang, is the founder of Pinduoduo. He graduated from Zhejiang University and holds a master's degree from the University of Wisconsin-Madison.

The founder of Bilibili is Xu Yi. Xu Yi is the earliest founder of Bilibili but has always stayed behind the scenes and kept a low profile. He used to be a member of the AcFun danmaku (bullet comment) community, then built his own website modeled on AcFun, and now serves as a director.

Output (translated; offsets index characters of the original Chinese text):

```
Huang Zheng, born in 1980 in Hangzhou, Zhejiang, is the founder of Pinduoduo. He graduated from Zhejiang University and holds a master's degree from the University of Wisconsin-Madison.
{'company': [{'text': 'Pinduoduo', 'start': 16, 'end': 19, 'probability': 0.935215170074585, 'relations': {'senior executive': [{'text': 'Huang Zheng', 'start': 0, 'end': 2, 'probability': 0.9996391253586268}]}}]}
The founder of Bilibili is Xu Yi. Xu Yi is the earliest founder of Bilibili but has always stayed behind the scenes and kept a low profile. He used to be a member of the AcFun danmaku (bullet comment) community, then built his own website modeled on AcFun, and now serves as a director. {'company': [{'text': 'Bilibili company', 'start': 0, 'end': 6, 'probability': 0.7246855227849665, 'relations': {'senior executive': [{'text': 'Xu Yi', 'start': 11, 'end': 13, 'probability': 0.9985462800938478}]}}]}
```
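Each result is a nested dict: the top-level key is the first-stage label, and every extracted entity carries a `relations` dict keyed by relation name. For storage or filtering it is often easier to flatten this into (subject, relation, object, probability) tuples. The sketch below assumes exactly the structure printed above; `to_triples` and its `min_prob` threshold are hypothetical additions, not part of Taskflow.

```python
def to_triples(result, subject_label="company", relation="senior executive", min_prob=0.0):
    """Flatten one Taskflow result dict into (subject, relation, object, probability) tuples.

    A pair is kept only if both the subject entity and the related object
    reach min_prob; the object's probability is reported.
    """
    triples = []
    for subject in result.get(subject_label, []):
        if subject.get("probability", 1.0) < min_prob:
            continue
        for obj in subject.get("relations", {}).get(relation, []):
            if obj.get("probability", 1.0) >= min_prob:
                triples.append((subject["text"], relation, obj["text"],
                                obj.get("probability", 1.0)))
    return triples


result = {'company': [{'text': 'Pinduoduo', 'start': 16, 'end': 19,
                       'probability': 0.935215170074585,
                       'relations': {'senior executive': [
                           {'text': 'Huang Zheng', 'start': 0, 'end': 2,
                            'probability': 0.9996391253586268}]}}]}
print(to_triples(result))
```

A threshold such as `min_prob=0.8` would, for instance, drop the Bilibili company span above (probability about 0.72) together with its executive.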

5. Summary

UIE (Universal Information Extraction): at ACL 2022, Yaojie Lu et al. proposed UIE, a unified framework for universal information extraction. The framework models entity extraction, relation extraction, event extraction, sentiment analysis and similar tasks in a unified way, giving the different tasks good transfer and generalization ability. Following the method of this paper, PaddleNLP trained and open-sourced the first Chinese universal information extraction model, UIE, based on the knowledge-enhanced pre-trained model ERNIE 3.0. The model can extract key information without restricting the industry domain or the extraction targets, supports fast zero-shot cold starts, and has strong few-shot fine-tuning ability for quickly adapting to specific extraction targets.

Advantages of UIE

Easy to use: users can define extraction targets in natural language and extract the corresponding information from the input text without any training. It works out of the box and covers a wide range of information extraction needs.

Lower cost, higher efficiency: earlier information extraction techniques needed large amounts of labeled data to achieve good results. Open-domain information extraction supports zero-shot and few-shot extraction, which greatly reduces the dependence on labeled data, saving development time and cost while also improving results.

Leading performance: open-domain information extraction performs well across many scenarios and tasks.

In this article I mainly used relation extraction to present the case and keep the demo project clear. Interested readers can also try cross-task extraction as well as multi-entity and multi-relation extraction.

So far, my evaluations on open-source datasets have reached F1 scores between 85% and 90%, which is roughly in line with expectations given how difficult those datasets are. Leave a comment if you run into problems.

My blog :https://blog.csdn.net/sinat_39620217?type=blog

边栏推荐

- 金鱼哥RHCA回忆录:CL210管理存储--对象存储

- 知名手写笔记软件 招 CTO·坐标深圳

- thymeleaf设置disabled

- 关于ROS2安装connext RMW的进度条卡在13%问题的解决办法

- 2271. 毯子覆盖的最多白色砖块数 ●●

- RuntimeError: CUDA out of memory(已解决)[通俗易懂]

- The practice of depth estimation self-monitoring model monodepth2 in its own data set -- single card / multi card training, reasoning, onnx transformation and quantitative index evaluation

- 依迅总经理孙峰:公司已完成股改,准备IPO

- Leetcode 205. isomorphic string (2022.07.24)

- Depth estimation self-monitoring model monodepth2 paper summary and source code analysis [theoretical part]

猜你喜欢

飞沃科技IPO过会:年营收11.3亿 湖南文旅与沅澧投资是股东

Save the image with gaussdb (for redis), and the recommended business can easily reduce the cost by 60%

Use of Bluetooth function of vs wireless vibrating wire acquisition instrument

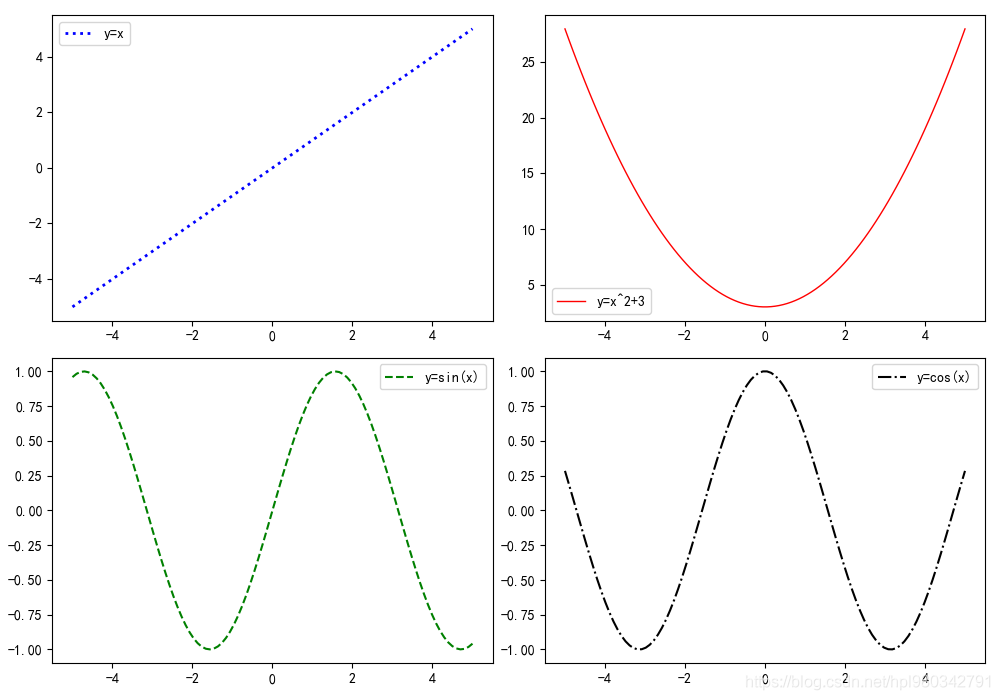

Matplotlib data visualization three minutes entry, half an hour enchanted?

sudo rosdep init Error ROS安装问题解决方案

![einsum(): operands do not broadcast with remapped shapes [original->remapped]: [1, 144, 20, 17]->[1,](/img/bb/0fd0fdb7537090829f3d8df25aa59b.png)

einsum(): operands do not broadcast with remapped shapes [original->remapped]: [1, 144, 20, 17]->[1,

The main function of component procurement system, digital procurement helps component enterprises develop rapidly

How to make a set of code fit all kinds of screens perfectly?

Mysql表的操作

数字孪生 - 认知篇

随机推荐

That day, I installed a database for my sister... Just help her sort out another shortcut

51单片机学习笔记(1)

It is predicted that 2021 will accelerate the achievement of super automation beyond RPA

What you must know about data engineering in mlops

Can the variable name be in Chinese? Directly fooled people

Melodic + Realsense D435i 配置及错误问题解决

金鱼哥RHCA回忆录:CL210管理存储--对象存储

From Anaconda to tensorflow to jupyter, step on the pit and fill it all the way

RuntimeError: CUDA out of memory(已解决)[通俗易懂]

知名手写笔记软件 招 CTO·坐标深圳

[original] nine point calibration tool for robot head camera calibration

IDEA设置提交SVN时忽略文件配置

实现一个家庭安防与环境监测系统(一)

Famous handwritten note taking software recruit CTO · coordinate Shenzhen

Save the image with gaussdb (for redis), and the recommended business can easily reduce the cost by 60%

轻松入门自然语言处理系列 12 隐马尔可夫模型

苹果手机端同步不成功,退出登录,结果再也登录不了

CTS测试介绍(面试怎么介绍接口测试)

jqgrid全选取消单行点击取消事件

结构体大小