当前位置:网站首页>Chapter 2 construction of self defined corpus

Chapter 2 construction of self defined corpus

2022-06-26 21:05:00 【Triumph19】

- As with all machine learning applications , The main challenge is to determine whether there is a signal in the noise , And where the signal is hidden . This is done through the feature analysis process , Determine what features the meaning and potential structure of the text are represented by coding 、 Attribute or dimension . In the previous chapter , We see , Despite the complexity and flexibility of natural languages , But if we can extract its structural features and contextual features , You can model .

- Most of the work in all subsequent chapters will revolve around " feature extraction " and " knowledge engineering " an , Including the recognition of individual words 、 Synonym set 、 Relationships between entities and semantic context . As we will see in this book , The representation of the underlying language structure we use largely determines the success of the processing . To determine how to express , We must first define the unit of language , To calculate 、 measurement 、 Analysis and learning .

- In a sense , Text analysis is the process of decomposing task subjects into small components ( Individual vocabulary words 、 Common phrases 、 syntactic pattern ), Then use the statistical mechanism to deal with . By learning these components , We can build language models , And give the application language the ability to predict . We will soon see , Analytical techniques can be applied at many levels , And all this revolves around a core text data set : corpus (Corpora).

What is a corpus ?

- A corpus is a collection of natural language related documents . The corpus can be large or small , Usually contains several GB、 Or even hundreds GB data . For example, the general email size is 2GB( As a reference ,Enron The complete version of the corporate email corpus was released about ten years ago , Include 118 Between users 1MB E-mail , The size is 160GB), The company has a general idea 200 Employees , The whole company will have about 0.5TB E-mail corpus . Some corpora are marked (annotated), It means that the text or document has been marked with the correct response of the supervised learning algorithm ( for example , Used to build a filter to detect spam ), Some are unmarked (unannotated) Of , It can be used for topic modeling and document clustering ( for example , Explore the potential themes of the text over time ).

- A corpus can be decomposed into documents or individual documents . The corpus contains documents of different sizes , From tweets to books , But they all contain text ( Or metadata ) And a set of related ideas . The document can be further divided into paragraphs and discourses (discourse) unit , Each discourse unit often expresses a single idea . Paragraphs can be further broken down into sentences , Sentences are also syntax (syntex) The basic unit of ; A complete sentence is a structurally reasonable expression . A sentence consists of words and punctuation marks , vocabulary (Lexical) Units are used to express basic meaning , Combination is more effective . Last , The word itself consists of syllables 、 factors 、 Affixes and characters make up , These units are meaningful only when they form words .

Specific corpora

- At the beginning, it is very common to test natural language models with general corpora . for example , There are many examples , Research papers often use off the shelf datasets , Such as brown corpus 、 Wikipedia corpus or Cornell film dialogue corpus, etc . however , The best language models tend to have limitations 、 Only suitable for specific applications .

- Why does a model trained in a specific domain or scene perform better than a model trained in a common language ? There are different terms in different fields ( vocabulary 、 abbreviation 、 Common phrases, etc ), therefore , Compared with corpora covering several different fields , The corpus with relatively pure domain can be better analyzed 、 modeling .

- such as bank The word , Probably in the economy 、 Financial or political fields , Means financial 、 Institutions related to monetary instruments ( Bank ), In the field of aviation or automobile , It is more likely to represent a form of motion that causes a change in the direction of a vehicle or aircraft ( Lean turn ). Fitting the model in a relatively narrow context , Smaller prediction space 、 More specifically , Therefore, it can better deal with the flexibility of language .

- Get domain specific corpus , It is very important to build high-performance language aware data products . therefore , The next question is " How to build data sets for learning language models ?" Whether by grabbing 、RSS extract , still API obtain , It is not easy to build an original text corpus that can support the construction of data products .

- Data scientists usually collect individual data from 、 Start with a static document , Then use conventional methods to analyze . however , If there is no regularization 、 Programmed data ingestion , Analysis can only be static , Unable to respond to the latest feedback , Nor can we cope with the dynamics of language .

- In this chapter , Our focus is not on how to get data , But how to build and manage data in a way that supports machine learning . In the next section , We will give a brief introduction to a system called Baleen Data ingestion engine framework , This framework is especially suitable for constructing domain specific corpus for text analysis .

Baleen Data ingestion engine

- Baleen Is an open source tool for building custom corpora . It works as a document for professional and amateur writers , Such as blogs and news , Ingest natural language data by classification .

- Given RSS The source of OPML file ( Common export formats for aggregated news readers ),Baleen Download all articles from these sources , And save them to MongoDB, Then export the text corpus that can be used for analysis . Although this task seems to be easily accomplished by a single function , But the actual ingestion process can be complicated ,API and RSS The source may change frequently . How to combine applications for best results , It can not only ingest by itself , It can also safely manage data , Obviously it takes a lot of thought .

- adopt RSS The complexity of conventional text ingestion is shown in Figure 2-1 Shown . Specify the sources to ingest and how to classify them , This information is stored in the disk read OPML In file . On the article 、 Feeds and other information to access and store MongoDB, Object document mapping required (ODM), Also define a single extraction task with a tool , Change the job to synchronize the entire feed , Then extract and integrate individual articles .

- Baleen Open the user program based on the above mechanism , On a regular basis ( for example , Every hour ) Run ingest task , Of course, some configuration is required , To specify database connection parameters and running frequency . Because this will be a long-term process ,Baleen A console is also provided to assist in scheduling 、 Logging and monitoring errors . Last ,Baleen The export tool of will export the corpus from the database .

- Whether it's a regular ingestion of documents or a partial acquisition of fixed collections , Must consider how to manage data , And prepare for analysis and model calculation . In the next section , We will discuss how to monitor the corpus , Because our ingestion process will continue , Data will grow and change .

Corpus data management

- Suppose the corpus to be processed is very large , May contain thousands of documents , The final size may be several GB. It is also assumed that , Language data will come from data sources whose data structures need to be cleaned and processed for analysis . The former assumption requires an extensible computing method ( In the 11 The chapter explores more comprehensively ), The latter means that we will convert the data irreversibly ( As we are in the 3 As Zhang saw ).

- Data products are usually written once 、 Multiple read (WORM) Storage facilities , As a transitional data management layer between ingestion and preprocessing , Such as 2-2 Shown .WORM Storage ( Sometimes called a data lake ) In repeatable 、 Scalable streaming access to raw data , Meet the requirements of high-performance computing . By saving the data in WORM In storage , Preprocessed data can be directly reanalyzed , No need to re ingest , This allows you to easily start a new exploration process on the raw data .

- Add... To the data extraction pipeline WORM Storage , It means keeping the data in two places ( Raw corpus and preprocessed corpus ), This raises a question : Where is the data ? When considering data management , We usually consider databases first . Database is undoubtedly a good tool for building language aware data products , Many databases provide full-text search and other class indexing functions . however , Most databases are designed to retrieve or update only a few rows of data per transaction . And access to text corpora , It mainly reads each complete document , The document will not be updated locally , Nor does it search or query individual documents . therefore , Using a database here tends to increase computational overhead , It doesn't actually do any good .

Relational database management system is very suitable for transactions that operate on a few rows of data at a time , Especially when these rows are updated frequently . The demand of machine learning on text corpus is different ; It mainly refers to multiple sequential reads of the entire data set . therefore , The priority is usually given to storing the corpus on disk ( Or document database ) in .

- To manage text data , The best choice is usually to save the data to NoSQL In the document database , This type of database can read documents with minimal overhead , Or write each document directly to disk . Even though NoSQL Databases are ideal for large applications , But the file - based approach also has its advantages : The compression technology on file directory is very suitable for text data , You can also use the file synchronization service to complete automatic replication . Building a corpus with data is beyond the scope of this book . Next , We will introduce ways to effectively organize data on disk , To support the system to access the corpus .

Corpus disk structure

- Organizing and managing a text-based corpus is the simplest 、 The most common method , A file system that stores separate documents on disk . By grouping the corpus into sub directories , The corpus can be classified or according to meta information ( Such as date ) Make meaningful divisions . Save each document as its own file , The corpus reader can quickly search different sub document sets , It can also be processed in parallel , Each process processes a different set of subdocuments .

In the next section, we will discuss how to use NLTK CopusReader Object from directory or Zip The data is read from the file .

- Text files are also the easiest format to compress , Compressed Zip Files can retain the directory structure on the disk , It is an ideal data distribution 、 Storage format . in addition , Corpora stored on disk are usually static , Can be treated as a whole , Meeting the requirements of the previous section WORD Storage requirements .

- however , Each document is saved as a separate file , It may bring some challenges . Too small a document ( E.g. e-mail or twitter ) It doesn't make sense to store as a single file . in addition , E-mail is usually in the form of MBox Format store , This is a way to separate the text with a separator 、HTML、 Images 、 Parts of the attachment HIME news , You can usually press e-mail service ( inbox 、 Plus star 、 Archiving, etc ) Include categories to organize . Tweets are usually small JSON data structure , Not only the text of the tweet , It also includes other metadata , Such as user or location information , A typical way to store multiple tweets , Are separated by newline characters JSON Content , Sometimes it's called JSON Line format . This format parses one line at a time , You get a tweet , You can also search the file to find different tweets . A single file containing tweets can be very large , So by user name 、 Position or date to organize tweets , You can reduce the size of a single file , Create multi file 、 More meaningful disk structure .

- Another way to store data in a logical structure , Is simply writing to a file with a maximum capacity limit . for example , We can write data continuously , Until a certain size limit is reached ( for example ,128M), Then open a new file and continue writing .

The corpus on disk must contain many files , Each file contains one or more documents in the corpus , Sometimes it is divided into subdirectories , These subdirectories represent meaningful groupings , Category . The corpus and document information must also be kept with the corresponding documents . The corpus on disk is organized according to the standard structure , Yes, make sure Python It is very important for the program to read these data effectively .

- Whether the document is aggregated into a multi document file , Or each document is saved as a separate file , Corpora represent many documents that need to be organized . If over time , The corpus takes in new data , A meaningful way to organize is by year 、 month 、 Daily organization subdirectory , Documents are placed in corresponding folders . If documents are categorized by emotion , Whether it's positive or negative , Each type of document can be grouped together , Put it in your own category subdirectory . If there are multiple users in the system , Generate their own specific writing subsets , Such as comments or tweets , Then each user can have its own subdirectory . All subdirectories need and exist in the root directory of the database . Another important point , Such as license 、 detailed list 、 Readme (README) Or reference to the corpus meta information , It must also be saved with the document , In this way, the corpus seems to be an independent whole .

Baleen File structure

- The choice of organization mode on disk is right CorpusReader The way objects read documents has a big impact , We will introduce in the next section .Baleen The corpus ingestion engine will HTML Corpus is written to disk , As shown below :

- There are a few caveats here . First , All documents are saved as HTML file , According to its MD5 Name the hash value ( To prevent repetition ), You can easily identify which files are documents , Which files are metafiles . Meta information ,citation.bib The document provides the properties of the corpus ,LICENSE.md File specifies the necessary permissions for other people to use this repository . Although these two pieces of information are usually reserved for public corpora , But include them , It is helpful to know how to use the corpus , This is the same as adding such information to the private software warehouse .feeds.json and manifest.json Are two corpus specific files , Used separately to identify information about categories and each specific file , Last ,README.md A document is a natural language description of a corpus .

- In these documents ,citation.bib,LICENSE.md and README.md It's a special document , It can be used citation() Method 、license() Methods and readme() Method in NLTK CorpusReader Object .

- A structured approach to corpus management and storage , It means that the application of text analysis follows the scientific repeatability process , This approach encourages the interpretability of the analysis , Enhance the credibility of analysis results . Besides , Build a corpus in this way , We can use CorpusReader Object for easy access , We will explain in detail in the next section .

- Modify these methods to handle Markdown Or reading a corpus specific file like a list is very simple :

import json

# In a custom corpus reader class

def manifest(self):

"""

Read and analyze the mainfest.json file ( If there is )

"""

return json.load(self.open('README.md'))

- These methods are open source , Allow the corpus to remain compressed while , The data is still readable , This minimizes the amount of storage required on the disk . in consideration of README.md Documents are essential to the composition of the account corpus , Not only for other users or developers of the corpus , Also for “ The future self ”, Maybe I don't remember the details 、 It is impossible to determine which models are trained on which corpus , And what other information these models have .

Corpus reader

- Once built on disk 、 Organize a corpus , It lays the foundation for two things : Access the corpus in the programming environment with systematic methods , And the monitoring and management of corpus changes . We will discuss the latter at the end of this chapter , Now let's discuss how to load the document for subsequent analysis .

- Most useful corpora contain thousands of documents , The total amount of text data may be GB. The original text string loaded from the document , It needs to be pretreated , And it is parsed into a representation suitable for analysis , This is an additional process for generating or copying data , Increased the amount of memory required for processing . From a computational point of view , This is an important consideration , Because if there is no proper way to stream and select documents from disk , Text analysis will soon be limited by the performance of a single machine , This limits our ability to generate interesting models . Fortunately, ,NLTK The library has taken this requirement into account , It also provides a tool for streaming access to the corpus from the disk , Can pass CorpusReader The object is Python Open the corpus in the program .

Hadoop Creation of equal distribution calculation framework , In response to the amount of text generated by search engine web crawlers (Hadoop Received two Google The inspiration of the paper , yes Nutch Subsequent search engine projects ). We will discuss cluster computing technology , In order to 11 Chapter use Spark(Hadoop The successor to distributed computing ) Expand .

- CorpusReader It's a programming interface , For reading 、 location 、 Streaming and filtering documents , You can also process the data , Such as programming and preprocessing , Open to code that needs access to corpus data .CorpusReader The initialization , You need to pass in the root path of the directory containing the corpus file 、 Used to find the signature of the document name and the file encoding ( By default UTF-8).

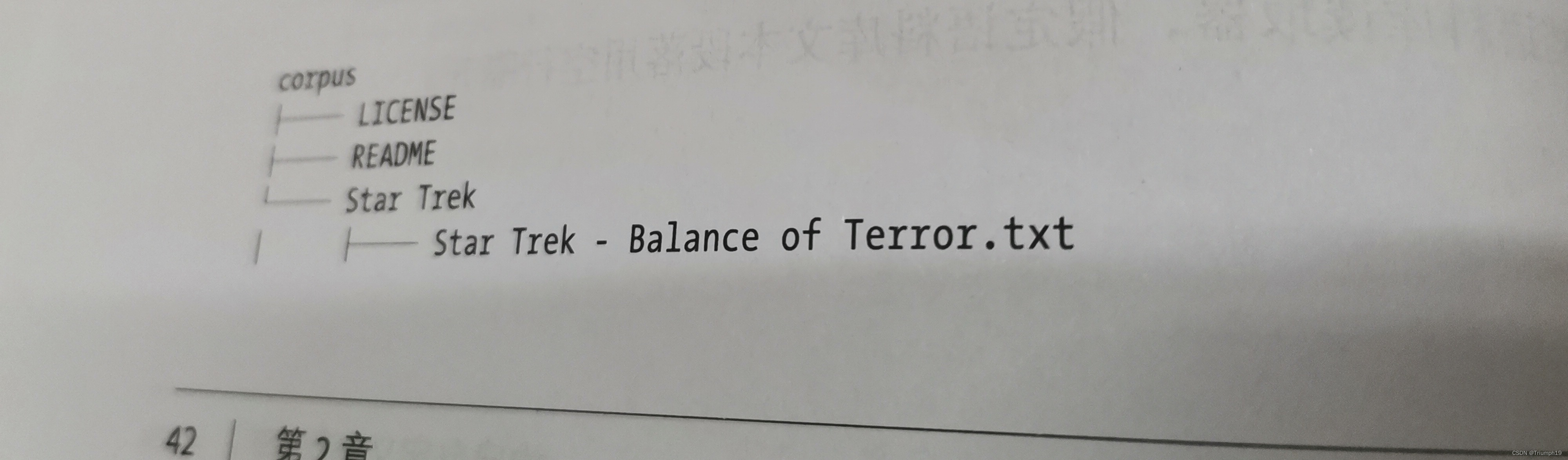

- Because the corpus contains more documents than can be used for analysis ( for example , Readme 、 quote 、 Permission, etc ), Therefore, the corresponding mechanism must be provided to the readers , To accurately identify which documents are part of the corpus . This mechanism is a parameter , You can explicitly specify... With a namelist , You can also implicitly specify with regular expressions , It will match all documents in the root directory ( for example ,\w +.txt), Match extension .txt The preceding file name is a file with one or more characters or numbers . for example , In the following directory , This regular expression pattern will match three voice files and scripts , But does not match the license 、README Or metadata file :

- then , These three simple parameters make CorpusReader It can list the absolute paths of all files in the corpus , Open each document with the correct encoding , And allow programmers to access metadata independently .

- By default ,NLTK CorpusReader Objects can even be accessed compressed to Zip Corpus of documents , Simple extensions also allow reading Gzip or Bzip Compressed files .

- In terms of itself ,CorpusReader The concept of may not seem obvious , But when dealing with a large number of documents , This interface allows the programmer to read one or more documents into memory , Locate a specific position in the corpus forward or backward 、 Without opening or reading unnecessary documents , Streaming data to an analysis process that saves only one document in memory at a time , Filter once or select only specific documents from the corpus . These techniques make it possible to analyze in memory texts in corpus , Because they can only handle a small number of documents loaded into memory at a time .

- therefore , To analyze your own text corpus in a specific domain , The corpus is entirely aimed at the model you are trying to build , You will need an application specific corpus reader . This is very important for an application of text analysis , So we'll focus most of this chapter on this ! In this section , We will discuss NLTK The attached corpus reader and the possibility of building a corpus , So that you can simply choose one of them . Last , We will discuss defining custom corpus readers that perform application specific work , That is to say, it is necessary to deal with the HTML file .

NLTK Streaming access

- NLTK Various corpus readers are attached , Dedicated to access NLTK Can download the text corpus and vocabulary resources . It also comes with more general tools CorpusReader object , The requirements for corpus structure are quite strict , But if you use it well, you can quickly create a corpus , And directly bind with the reader .NLTK It also provides customization of data application requirements CorpusReader The technique of . Here are a few interesting tool readers :

- PlaintextCorpusReader

— Plain text document corpus reader , Assume that the corpus text paragraphs are separated by blank lines . - TaggedCorpusReader

— Simple part of speech tagging corpus reader , The sentence is on a separate line , Entries are separated by corresponding part of speech markers . - BrackedCorpusReader

— Chunks formatted with parentheses ( Can be labeled ) Corpus reader - TwitterCorpusReader

— Twitter corpus reader , Data is delimited by serialized rows JSON Format - WordlistCorpusReader

— Word list reader , Each row of a , Empty lines will be ignored - XMLCorpusReader

— XML Document corpus reader - CategorizedCorpusReader

— Split hybrid document word library reader - Tagged 、 Parenthesized parsed , And the chunked corpus reader is a tagged corpus reader ; If you want to manually mark a specific field before machine learning , So the format open to readers is still very important for understanding .Twitter、XML And plain text corpus reading provide tips on how to deal with disk data with different parseable formats , Allow extensions to CSV corpus 、JSON Even other data sources such as databases . If your corpus has adopted one of these formats , Almost no additional work is required . for example , The format of the pure text script corpus for star wars and Star Trek Movies is as follows :

- CategorizedPlaintextCorpusReader Great for accessing data from movie scripts , Because the document is .txt file , And there are two categories :“ Star Wars: (Star Wars)” and “ Star Trek (Star Trek)”. In order to use CategorizedPlaintextCorpusReader, We need to specify a regular expression that allows the reader to automatically distinguish between file names and category names :

from nltk.corpus.reader.plaintext import CategorizedPlaintextCorpusReader

DOC_PATTERN = r'(?!\.)[\w_\s] + /[\w\s\d\-] + \.txt'

CAT_PATTERN = r'([\w_\s]+)/.*'

corpus = CategorizedPlaintextCorpusReader(

'/path/to/corpus/root',DOC_PATTERN,cat_pattern=CAT_PATTERN

)

- The document pattern regular expression specifies that the document is under the root directory of the language database , With one or more letters in the name 、 Numbers 、 Space or underline , Continuous type “/” character , Then one or more letters 、 Numbers 、 Space or “-”, And finally “.txt”. This will match the name shape as “Star Wars/Star Wars Episode 1.txt” Documents , But the file name will not match “episode.txt” Documents . Category pattern regular expression truncates the original regular expression with a capture group , The specified category is all directory names ( for example ,Star War/anything.txt Will capture Star Wars As a category ). Try to see how these names are captured , Start accessing disk data :

- Regular expressions can be a bit difficult , But they provide a powerful mechanism , It can specify exactly what the corpus reader should load , And how to load . You can also explicitly pass a list of categories and filegroups , But this will greatly reduce the flexibility of the corpus reader . Using regular expressions , You can add new categories by creating directories in the corpus , Add a new document by moving it to the correct directory .

- We get it NLTK Incidental CorpusReader How objects are used , Now let's take a look at the intake HTML How data is pipelined .

边栏推荐

- StringUtils判断字符串是否为空

- Leetcode question brushing: String 02 (reverse string II)

- 0基础学c语言(3)

- 基于QT开发的线性代数初学者的矩阵计算器设计

- The postgraduate entrance examination in these areas is crazy! Which area has the largest number of candidates?

- leetcode刷题:字符串05(剑指 Offer 58 - II. 左旋转字符串)

- Arduino uno + DS1302 uses 31 byte static RAM to store data and print through serial port

- 记录一次Redis大Key的排查

- SentinelResource注解详解

- 分布式ID生成系统

猜你喜欢

Muke 8. Service fault tolerance Sentinel

Dynamic planning 111

Android IO, a first-line Internet manufacturer, is a collection of real questions for senior Android interviews

Leetcode question brushing: String 05 (Sword finger offer 58 - ii. left rotation string)

基于SSH框架的学生信息管理系统

![[Bayesian classification 3] semi naive Bayesian classifier](/img/9c/070638c1a613be648466e4f2bc341e.png)

[Bayesian classification 3] semi naive Bayesian classifier

Dynamic parameter association using postman

分布式ID生成系统

Guomingyu: Apple's AR / MR head mounted display is the most complicated product in its history and will be released in January 2023

leetcode刷题:字符串04(颠倒字符串中的单词)

随机推荐

0 basic C language (3)

Leetcode: String 04 (reverse the words in the string)

MySQL中存储过程的详细详解

Muke 11. User authentication and authorization of microservices

Flutter TextField详解

基于Qt实现的“合成大西瓜”小游戏

Separate save file for debug symbols after strip

The source code that everyone can understand (I) the overall architecture of ahooks

Is it safe to open an online account in case of five-year exemption?

浏览器的垃圾回收机制

Two methods of QT to realize timer

windows系统下怎么安装mysql8.0数据库?(图文教程)

Bonne Recommandation: développer des outils de sécurité pour les terminaux mobiles

Is it safe to open a securities account? Is there any danger

Detailed explanation of shutter textfield

Is there any risk in opening a mobile stock registration account? Is it safe?

leetcode刷题:字符串02( 反转字符串II)

On the origin of the dispute between the tradition and the future of database -- AWS series column

710. random numbers in the blacklist

API管理之利剑 -- Eolink