
What is CIPU, Alibaba Cloud's new-generation cloud computing architecture?

2022-06-24 04:44:00 【Lingyun moment】

This article offers an in-depth technical interpretation of CIPU and tries to answer the questions readers care about most: What is CIPU? What problems does it mainly solve? Where did CIPU come from, and where is it headed?

Foreword

Recently, at the 2022 Alibaba Cloud Summit, Zhang Jianfeng, president of Alibaba Cloud Intelligence, released the cloud infrastructure processor CIPU (Cloud Infrastructure Processing Unit) and said it would replace the CPU as the control and acceleration center of cloud computing. In this new architecture, CIPU faces downward to cloudify and hardware-accelerate the data center's compute, storage, and network resources, and faces upward to connect to the Feitian cloud operating system, turning millions of servers around the world into a single supercomputer.

As everyone knows, in the traditional IT era the Microsoft Windows + Intel alliance ended the dominance of the IBM PC; in the mobile computing era, Google Android / Apple iOS + ARM jointly led the technical architecture of mobile devices. So in the cloud computing era, what value can the combination of Alibaba Cloud's Feitian operating system and CIPU deliver?

The status quo of cloud computing

In 2006, AWS, the originator of cloud computing, released S3 and EC2 in succession; in 2010, some at BAT still argued that cloud computing was "old wine in new bottles". Today, according to Gartner, global IaaS revenue reached about 90 billion US dollars in 2021, yet some pseudo cloud computing concepts remain on the market, such as reselling IDC hardware or reselling CDN. So we first need to be clear: what kind of cloud computing do we actually need?

Like water, electricity, and other public utilities and social infrastructure, the cloud has two core characteristics: "elasticity" and "multi-tenancy".

What is elasticity ?

Elasticity, in a broad sense, lets IT capability easily keep pace with the growth of the user's business; in a narrow sense, it gives users unmatched flexibility.

Let us first look at the value of elasticity in the broad sense. In short, it means ample supply capacity that can meet "unlimited demand". IT computing power has become a supporting capability for many businesses. When a business grows rapidly and computing power cannot keep up, the business is bound to be severely constrained.

But building computing power does not happen overnight. From land, power, and water to data center construction; from laying the data center network to Internet access; from server design, customization, and procurement to deployment, launch, and operations; from a single data center to multiple data centers, then across regions and even continents; then security, stability, disaster recovery, backup... And last comes the hardest part: recruiting, training, and retaining excellent people. All of these consume time, labor, and money, and are easier said than done. The emergence of elastic computing makes acquiring computing power simple.

The following figure shows how the computing power purchased by a public cloud user grew as its business expanded rapidly: in just 15 months, demand for computing power surged from zero to millions of cores. Elastic computing provides an abundant supply of computing power, so the user's business can grow unimpeded.

What is multi-tenancy?

A logically minded reader may already sense that "elasticity" and "multi-tenancy" are not strictly orthogonal, parallel concepts. So why does the author raise "multi-tenancy" to the same level as "elasticity"?

Strictly speaking, multi-tenancy is one of the necessary conditions for achieving ultimate elasticity and the highest efficiency of society's IT resources. Admittedly, private cloud does solve, to some extent, the problem of using IT resources flexibly and efficiently within an enterprise. But private cloud and public cloud differ in the core business characteristic of multi-tenancy, and that makes a huge difference between the two.

Once the business characteristics of cloud computing, "elasticity" and "multi-tenancy", are accurately defined, we can go on to discuss the technical implementation. How are elasticity and multi-tenancy achieved? We can discuss the evolution of cloud computing technology at the implementation level along four dimensions: ultimate security, ultimate stability, ultimate performance, and ultimate cost.

The Achilles' heel of IaaS

As everyone knows, IaaS is the public service of the three major IT resources: compute, storage, and network. PaaS mainly refers to data management platforms such as databases, big data, and AI, together with K8s cloud native and middleware. SaaS refers to software services such as Microsoft Office 365 and Salesforce. In the traditional sense, cloud computing mainly refers to IaaS cloud services, while PaaS and SaaS are cloud native products and services built on the IaaS cloud platform. Because CIPU, the subject of this article, sits mainly at the IaaS layer, the requirements that PaaS and SaaS place on CIPU are not a focus here. To supply compute, storage, network, and other IT resources elastically and on demand, IaaS has these core characteristics: resource pooling, multi-tenant service, elastic supply, and automated management and operations. The core technology behind it is virtualization. Let us briefly review the history of virtualization technology and public cloud services:

In 2003, the Xen team at the University of Cambridge (which later founded XenSource) published "Xen and the Art of Virtualization" at SOSP, raising the curtain on virtualization for the x86 platform.

In 2006, AWS released EC2 and S3, raising the curtain on public cloud services. The core of EC2 was built on Xen virtualization.

As we can see, virtualization technology and IaaS cloud services made each other: IaaS cloud services "discovered and unlocked" the business value of virtualization, making virtualization the cornerstone of IaaS cloud services; at the same time, the dividends of virtualization technology made IaaS cloud services possible. Xen virtualization appeared in 2003; in 2005 Intel began adding virtualization support to Xeon processors, introducing new instructions and changing the x86 architecture so that virtualization could be deployed at scale; in 2007 KVM virtualization was born. Across nearly 20 years of IaaS virtualization evolution, every step has revolved around the same four business goals: more secure, more stable, higher performance, and lower cost. This brief review of history clearly reveals the Achilles' heel of IaaS: the pain of virtualization.

First, cost. In the Xen era, the Xen hypervisor's Dom0 consumed half of a Xeon's CPU resources, so only half of the CPU resources could be sold. The "virtualization tax" on cloud computing was extremely heavy.

Second, performance. In the Xen era, the latency of kernel network virtualization reached the order of 150 us, network latency jitter was very large, and network forwarding pps became a key bottleneck for core enterprise workloads. The Xen virtualization architecture has insurmountable performance bottlenecks in storage and network IO virtualization.

Third, security. QEMU contains a large amount of device emulation code that is meaningless for IaaS cloud computing. This redundant code not only causes extra resource overhead but also means the attack surface cannot be fundamentally reduced. As everyone knows, one of the foundations of the public cloud is data security in a multi-tenant environment. Continuously strengthening hardware trust, and encrypting data as it flows through the compute, storage, and network subsystems, is an enormous technical challenge under Xen/KVM virtualization.

Fourth, stability. Improving the stability of cloud computing relies on two core technologies: white-boxing the underlying chips so that they output more RAS data, and big-data operations built on that stability data. To improve stability further, the virtualization system needs to go deeper into the implementation details of the compute, network, and storage chips in order to obtain more of the data that affects system stability.

Fifth, elastic bare metal support. Secure containers such as Kata and Firecracker, the PCIe switch P2P virtualization overhead of multi-GPU servers, the desire of top-tier customers to eliminate compute and memory virtualization overhead entirely, and support for VMware/OpenStack all call for elastic bare metal, and a Xen/KVM-based virtualization architecture cannot deliver elastic bare metal.

Sixth, the gap between IO and computing power keeps widening. Taking Intel Xeon 2-socket servers as an example, we analyze how storage and network IO and the Xeon CPU's PCIe bandwidth have scaled, and compare that with the growth of CPU computing power:

We then benchmark against the metrics of the 2018 Skylake 2S server (number of CPU HTs, theoretical whole-machine DDR bandwidth, and so on) and compare the trends of each metric side by side. Taking the number of CPU HTs as an example, the 96 HT Skylake is set as baseline 1, so Ice Lake is 128 HT / 96 HT = 1.3 and Sapphire Rapids is 192 HT / 96 HT = 2.0. This gives the following trend for Intel 2S Xeon servers across CPU vs. memory vs. PCIe/storage/network IO:

Comparing the four years of data from 2018 to 2022 above, we can draw the following conclusions:

1. Intel CPU computing power improved 2x (not counting IPC improvements) and DDR bandwidth improved 2.4x, so CPU and DDR bandwidth are well matched;

2. Single-NIC bandwidth (along with the Ethernet switching network the NIC connects to) improved 4x, single-NVMe bandwidth improved 3.7x, and whole-machine PCIe bandwidth improved 6.7x. The gap between network/storage/PCIe IO capability and Intel Xeon CPU computing power keeps growing;

3. The figure above does not analyze latency. Because Intel CPU frequency has stayed basically flat and IPC has not improved significantly, CPU data processing latency has improved only slightly; PCIe and NIC/network latency have also improved only slightly; storage media such as NVMe and AEP, however, show an order-of-magnitude drop in latency relative to older media such as HDD;

4. This unsynchronized development of compute, network, storage, and other infrastructure has a key impact on the architecture of PaaS-layer systems such as databases and big data, but that is not the focus of this article.
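The normalization behind these comparisons is plain ratio arithmetic. A minimal sketch in Python, using only the HT counts quoted in the text; the function name `normalize` is illustrative and not part of the original analysis:

```python
def normalize(values, baseline_key):
    """Express every value as a multiple of the chosen baseline."""
    base = values[baseline_key]
    return {name: value / base for name, value in values.items()}


# Hyper-thread counts of the 2-socket servers, as quoted in the text.
ht_counts = {"Skylake": 96, "Ice Lake": 128, "Sapphire Rapids": 192}

ratios = normalize(ht_counts, "Skylake")
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x")
```

The same function can be applied to any other metric (DDR bandwidth, NIC bandwidth, PCIe bandwidth) once the absolute numbers for each generation are filled in.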

Anyone with a virtualization background will feel uneasy on seeing this analysis. After Intel VT and other compute and memory hardware virtualization technologies were widely deployed, the overhead of compute and memory virtualization (including isolation, jitter, and so on) was resolved to a considerable extent. But with PCIe/NIC/NVMe/AEP and other IO technologies developing rapidly, if we keep using PV paravirtualization, the technical challenges in memory copies, VM exits, latency, and so on will become increasingly prominent.

Where did CIPU come from?

The sections above have largely explained the problems and challenges that IaaS cloud computing faces at the technical level. This chapter outlines the history of CIPU's technical development in order to answer one question: where did CIPU come from? After all, "if you don't understand history, you can't see the future".

Careful readers who have thought further about the "six pains of virtualization" above will see that the six pain points share a common feature: each, to a greater or lesser extent, concerns the cost, security, or performance of the IO virtualization subsystem. The logical technical solution is therefore to start with the IO virtualization subsystem, and the past 20 years of technical development confirm this reasoning. This article selects just two key technologies to illustrate where CIPU came from:

First, IO hardware virtualization: Intel VT-d

With such huge demand and such a large technical gap in the IO virtualization subsystem, Intel naturally focused on solving it. Improvements to DMA (direct memory access) and IRQ (interrupt requests) under virtualization, along with the corresponding follow-up from the PCIe standards body, were bound to happen.

From IOMMU address translation to interrupt remapping and posted interrupts, and from PCIe SR-IOV/MR-IOV to Scalable IOV, the specific implementation details are not covered in this article. Plenty of material is available online, and interested readers can look it up and study it themselves.

The author lists Intel VT-d IO hardware virtualization here to make one point only: the maturity of CPU IO hardware virtualization is a key prerequisite for the development of CIPU.

Second, network processors (NPU) and smart NICs

CIPU's other design lineage comes from the communications field (data communications in particular). People with a data communications background are certainly familiar with Ethernet switching chips, routing chips, and fabric chips, and the network processor (Network Processor Unit, NPU) is a key supporting technology in data communications. Note that NPU in this article means the network NPU, not the AI Neural Processing Unit.

Around 2012, driven by many good wishes from operators (whether these could land at scale is set aside for now, but one must always have dreams, otherwise "what's the difference from a salted fish"), NFV (network functions virtualization) became a key research and development direction in the communications field, both in the wireless core network and in the broadband access server (BRAS), as shown in the figure below.

In a word, NFV implements the network element functions of the communications field on standardized, virtualized IT infrastructure, namely standard x86 servers, standard Ethernet switching networks, and standard IT storage. The aim is to break away from the traditional siloed, vertically integrated, tightly coupled non-standard hardware and software systems of the communications industry, and thereby cut costs, raise efficiency, and improve business agility for operators.

(Image source: ETSI NFV Problem Statement and Solution Vision)

However, running NFV on standardized, virtualized IT infrastructure inevitably brings a number of technical difficulties. One of them is that NFV, as a network workload, places very different demands on network virtualization than typical IT workloads such as online transactions or offline big data. NFV naturally has stricter requirements for high-bandwidth throughput (line-rate processing by default), high pps processing capacity, and latency and jitter.

At this point the traditional NPU came into view for SDN/NFV requirements, except that this time the NPU was placed on the network card. A NIC equipped with an NPU is called a smart NIC.

As we can see, NFV and other communications workloads, in trying to deploy onto standardized, virtualized general-purpose IT infrastructure, ran into the performance bottleneck of network virtualization. At the same time, public cloud virtualization in the IT domain ran into the bottleneck of IO virtualization. Around 2012, the two met by chance. From then on, traditional communications technologies such as the network NPU and the smart NIC entered the IT domain's field of view.

Today, in addressing cloud computing IO virtualization, names such as smart NIC, DPU, and IPU are all still in use. One reason is that they really are closely related by lineage. Another is that the profusion of confusing names reflects the fact that engineers who crossed over from communications into IT, and many large US chip vendors, are not familiar with cloud business needs and scenarios.

CIPU positioning

Building on the prerequisite technologies described above, the author now gives the definition and positioning of CIPU. As the name suggests, CIPU (Cloud Infrastructure Processing Unit, cloud infrastructure processor) is a dedicated processor that cloudifies and hardware-accelerates the IDC's compute, storage, and network infrastructure. Once compute devices, storage resources, and network resources are connected to CIPU, they are cloudified into virtual computing power that is scheduled by the cloud platform and delivered to users as a high-quality elastic cloud computing cluster.

The CIPU architecture consists of the following parts:

IO hardware device virtualization

High-performance IO hardware device virtualization is achieved on top of VT-d as the prerequisite technology. Compatibility with the public cloud OS ecosystem must also be considered, so the device model should be as compatible as possible; implementing industry-standard IO device models such as virtio-net, virtio-blk, and NVMe becomes a must. IO device performance matters too, so optimization at the PCIe protocol level is crucial. How to reduce the number of PCIe TLPs, reduce the number of guest OS interrupts (while balancing latency requirements), implement flexible pooling of hardware queue resources, and provide programmability and configurability for new IO services determines how well IO hardware virtualization is implemented.
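The tension between fewer guest interrupts and bounded latency can be illustrated with generic interrupt coalescing. The sketch below is a simplified Python simulation of that general technique, not a description of how CIPU actually implements it; the function name and the `max_batch` and `max_delay_us` parameters are assumptions made for illustration:

```python
def coalesce_interrupts(arrivals_us, max_batch, max_delay_us):
    """Simulate interrupt coalescing for IO completions.

    An interrupt fires when `max_batch` completions are pending, or when
    `max_delay_us` has elapsed since the first pending completion.
    Returns a list of (interrupt_time_us, completions_delivered).
    """
    interrupts = []
    pending = 0
    deadline = None
    for t in sorted(arrivals_us):
        # The timer expired before this completion arrived: flush first.
        if pending and t > deadline:
            interrupts.append((deadline, pending))
            pending = 0
        # The first completion of a new batch starts the delay timer.
        if pending == 0:
            deadline = t + max_delay_us
        pending += 1
        # Batch is full: raise the interrupt immediately.
        if pending == max_batch:
            interrupts.append((t, pending))
            pending = 0
    # Whatever is left is delivered when its timer expires.
    if pending:
        interrupts.append((deadline, pending))
    return interrupts


# Hypothetical completion timestamps in microseconds.
events = coalesce_interrupts([0, 1, 2, 3, 50], max_batch=4, max_delay_us=10)
print(events)
```

A larger `max_batch` reduces the interrupt count under load, while `max_delay_us` caps the extra latency a lone completion can suffer, which is the balance the paragraph above refers to.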

VPC overlay network hardware acceleration

The business pain points of network virtualization were briefly analyzed above; here we expand on the business requirements:

- Requirement 1: bandwidth and line-rate processing capability

- Requirement 2: ultimate E2E low latency and low latency jitter

- Requirement 3: high-pps forwarding capability without packet loss

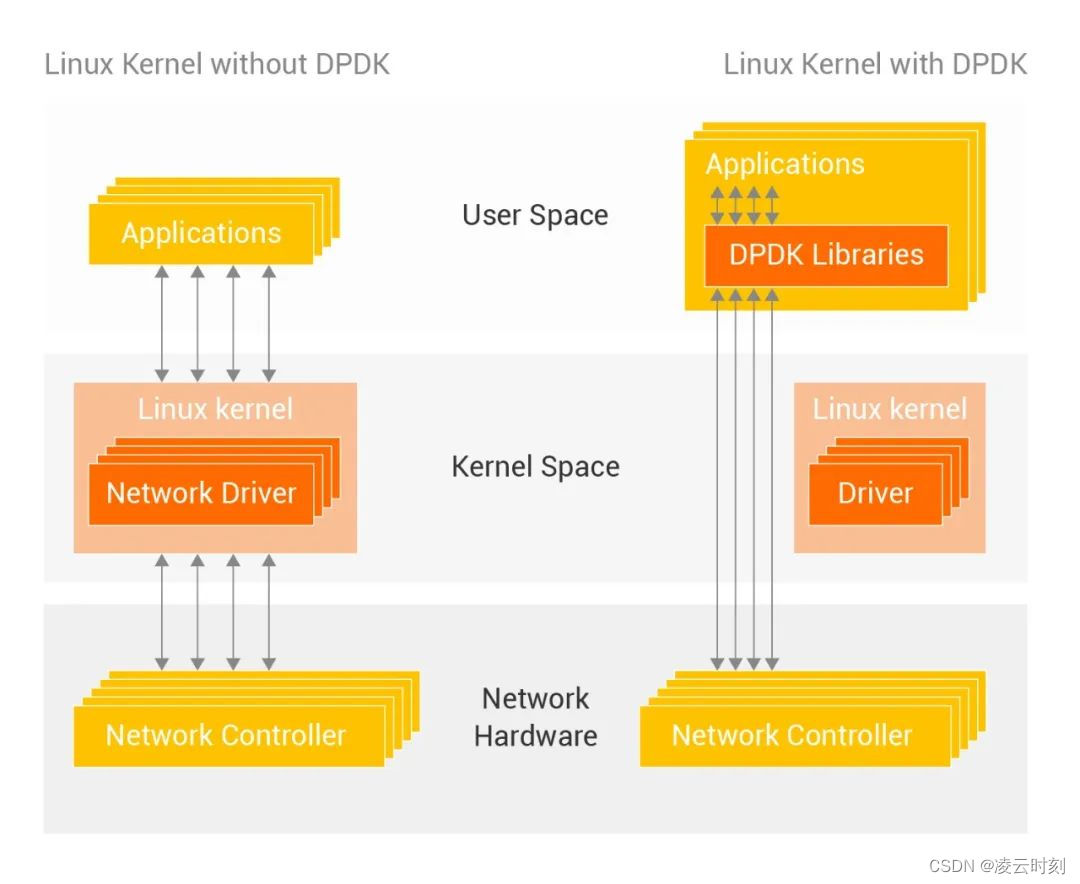

At the implementation level, from kernel network virtualization in the Xen era to DPDK vSwitch user-space network virtualization under KVM, the following problems arise:

1. The gap between network bandwidth and CPU processing capacity is widening

(Data source: Xilinx)

2. The bottleneck in optimizing pure-software DPDK network forwarding performance has become prominent

Analyzing these two problems further, we can see three fundamental difficulties:

- Moving data at 100 Gbps+ bandwidth makes the "von Neumann memory wall" problem prominent;

- When the CPU handles network virtualization with scalar processing, the parallelism bottleneck is obvious;

- With a software-based data path, latency jitter is hard to overcome.
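A back-of-the-envelope calculation shows why these difficulties bite at 100 Gbps. The Python sketch below computes the worst-case packet rate for minimum-size Ethernet frames and the resulting per-packet time budget. The 64-byte frame and 20 bytes of preamble and inter-frame gap are standard Ethernet figures; the 3 GHz core clock is an assumption chosen only for illustration:

```python
LINK_BPS = 100e9          # 100 Gbps link, as mentioned in the text
FRAME_BYTES = 64          # minimum Ethernet frame size
WIRE_OVERHEAD_BYTES = 20  # preamble + start delimiter (8) + inter-frame gap (12)
CPU_HZ = 3e9              # assumed core clock, for illustration only

bits_per_packet = (FRAME_BYTES + WIRE_OVERHEAD_BYTES) * 8
packets_per_second = LINK_BPS / bits_per_packet
ns_per_packet = 1e9 / packets_per_second
cycles_per_packet = CPU_HZ / packets_per_second

print(f"line rate: {packets_per_second / 1e6:.1f} Mpps")
print(f"budget per packet: {ns_per_packet:.2f} ns")
print(f"cycles per packet on one core: {cycles_per_packet:.1f}")
```

Under these assumptions a single core has only about 20 cycles per packet, less than the cost of one DRAM access, so pure software forwarding has to spread the load across many cores or give up line rate for small packets.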

Hence the business demand for hardware-accelerated forwarding was born. At the implementation level, the technologies fall into:

- Forwarding based on configurable ASICs, such as MLNX ASAP, Intel FXP, and Broadcom TruFlow

- NPU technology based on many-core processors

- Forwarding implemented in FPGA reconfigurable logic

Configurable-ASIC forwarding such as Intel FXP has the highest performance per watt and the lowest forwarding latency, but limited business flexibility. Many-core NPU technology offers some forwarding flexibility, but its PPA (power-performance-area) efficiency and forwarding latency cannot compete with a configurable ASIC. Forwarding implemented in FPGA reconfigurable logic has a big time-to-market advantage, but 400 Gbps/800 Gbps forwarding is a big challenge for it.

At this point, the technical implementation level is tradeoff principle : commercial IPU/DPU The chip needs to cover more target customers , Will tend to sacrifice certain PPA Efficiency and forwarding delay , To achieve a certain degree of versatility ; Cloud vendors CIPU It will carry out more in-depth vertical customization based on its own forwarding business , So as to achieve more perfection PPA Efficiency and more extreme forwarding delay .

EBS distributed storage access hardware acceleration

For public cloud storage to deliver "nine 9s" of data durability, and for compute and storage to meet elastic business needs, compute and storage must be separated. EBS (Alibaba Cloud block storage) must therefore provide high-performance, low-latency access from the compute node to the distributed storage cluster at the far end. The specific requirements are:

- As real-time storage, EBS must achieve extremely low E2E latency and extremely low P9999 latency jitter;

- Line-rate storage IO forwarding, for example 6M IOPS on a 200 Gbps network;

- Next-generation NVMe hardware IO virtualization that meets the needs of shared-disk workloads while solving the IO performance bottleneck of PV NVMe paravirtualization.
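The pairing of 6M IOPS with a 200 Gbps network can be sanity-checked with simple arithmetic. The Python sketch below assumes a 4 KiB IO size and ignores protocol headers; both are assumptions for illustration, since the text does not state the block size:

```python
LINK_GBPS = 200          # network bandwidth quoted in the text
TARGET_IOPS = 6_000_000  # IOPS figure quoted in the text
IO_SIZE_BYTES = 4096     # assumed 4 KiB block size, not stated in the text

# Bandwidth needed to carry the payload alone, ignoring protocol overhead.
required_gbps = TARGET_IOPS * IO_SIZE_BYTES * 8 / 1e9

# Payload bytes available per IO if the whole link carried nothing else.
bytes_per_io_at_line_rate = LINK_GBPS * 1e9 / 8 / TARGET_IOPS

print(f"bandwidth for 6M x 4 KiB IOPS: {required_gbps:.1f} Gbps")
print(f"payload budget per IO at line rate: {bytes_per_io_at_line_rate:.0f} bytes")
```

Under the 4 KiB assumption, 6M IOPS needs roughly 197 Gbps of payload bandwidth, so the figure corresponds to a nearly saturated 200 Gbps link, which is what "line-rate" storage forwarding implies.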

The storage protocol between the compute initiator and the distributed storage target is generally deeply optimized and vertically customized by each cloud vendor; this is the core of CIPU's hardware acceleration for EBS distributed storage access.

Local storage virtualization hardware acceleration

Local storage does not offer the nine 9s of data durability and reliability that EBS does, but it still has advantages in low cost, high performance, and low latency, and is a hard requirement for scenarios such as compute cache and big data. Virtualizing local disks with zero loss in bandwidth, IOPS, and latency, while also providing one-to-many virtualization, QoS isolation, and operability, is the core competitiveness of local storage virtualization hardware acceleration.

Elastic RDMA

RDMA networks play an increasingly important technical role in data-centric workloads such as HPC, AI, big data, databases, and storage. It is fair to say that RDMA networking has become key to differentiating data-centric workloads. Making RDMA capability universally available on the public cloud is a key business capability of CIPU. The specific requirements are:

- Large-scale deployment on the cloud overlay network: wherever the overlay network can reach, the RDMA network can reach;

- 100% compatibility with the RDMA verbs ecosystem, since zero code modification on IaaS is key to business success;

- Very-large-scale deployment: traditional RoCE relies on data center bridging technologies such as PFC, which is unsustainable in terms of network scale, switching network operations, and more. Cloud elastic RDMA needs to shed its dependence on PFC and lossless networks.

At the implementation level, elastic RDMA must first traverse the VPC's low-latency hardware forwarding path; then, with PFC and lossless networks abandoned, deep vertical customization of the transport protocol and congestion control algorithm becomes the inevitable choice for CIPU.

Security hardware acceleration

From the user's point of view, "security is the 1": without that 1, every other business capability is a 0. Therefore, continuously strengthening hardware trust technology, full encryption of VPC east-west traffic, full data encryption for EBS and local disk virtualization, hardware-based enclave technology, and so on is key for cloud vendors to keep improving the competitiveness of their cloud business.

Cloud O&M capability support

The core of cloud computing is "as a service", which frees users from operating and maintaining IT resources themselves. The core of O&M capability for IaaS elastic computing is lossless hot upgrade of all business components and lossless live migration of virtual machines. This involves extensive hardware/software co-design between CIPU and the cloud platform base.

Elastic bare metal support

By definition, elastic bare metal must deliver the following eight key business characteristics:

Meanwhile, the elasticity of cloud computing inevitably requires that elastic bare metal, virtual machines, security containers, and other compute resources be produced and scheduled from a shared pool.

CIPU pooling capability

General-purpose computing and AI computing differ greatly in their demands for network and storage capacity, so CIPU must support pooling. With CIPU pooling, general-purpose computing significantly improves CIPU resource utilization, strengthening core competitiveness on cost; at the same time, high-bandwidth workloads such as AI can be served within the same CIPU architecture.
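The utilization argument for pooling is essentially statistical multiplexing: a pool only has to cover the peak of the combined demand, not the sum of each host's individual peak. A minimal Python sketch of that general idea follows; the demand numbers are hypothetical and purely illustrative, and the function names are not from the original article:

```python
def dedicated_capacity(demands):
    """Capacity needed when every host is provisioned for its own peak."""
    return sum(max(series) for series in demands)


def pooled_capacity(demands):
    """Capacity needed when hosts share one pool sized for the combined peak."""
    return max(sum(slot) for slot in zip(*demands))


# Hypothetical per-host IO demand (arbitrary units) over three time slots.
demands = [
    [10, 80, 20],  # host A peaks in slot 2
    [70, 10, 30],  # host B peaks in slot 1
    [20, 20, 90],  # host C peaks in slot 3
]

dedicated = dedicated_capacity(demands)
pooled = pooled_capacity(demands)
print(f"dedicated: {dedicated}, pooled: {pooled}, saving: {1 - pooled / dedicated:.0%}")
```

The saving depends entirely on how uncorrelated the peaks are; workloads that peak together gain little from pooling.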

Computing virtualization support

Enhancing the business features of compute virtualization and memory virtualization is another area in which cloud vendors define many core requirements for CIPU.

Tracing the CIPU architecture to its roots

The previous chapter gave a complete business definition of CIPU; we now need to trace CIPU's computing architecture back to theory. Only by raising computer engineering practice to the perspective of computer science can we gain clearer insight into the essence of CIPU and point out the technical direction for the next round of engineering practice. This is necessarily a path of ascent from the spontaneous to the conscious.

We reached this conclusion earlier: "Single-NIC bandwidth (along with the Ethernet switching network the NIC connects to) improved 4x, single-NVMe bandwidth improved 3.7x, and whole-machine PCIe bandwidth improved 6.7x. The gap between network/storage/PCIe IO capability and Intel Xeon CPU computing power keeps growing."

Based on that conclusion alone, one would inevitably decide that CIPU hardware acceleration is simply compute offloading. But things are clearly not that simple.

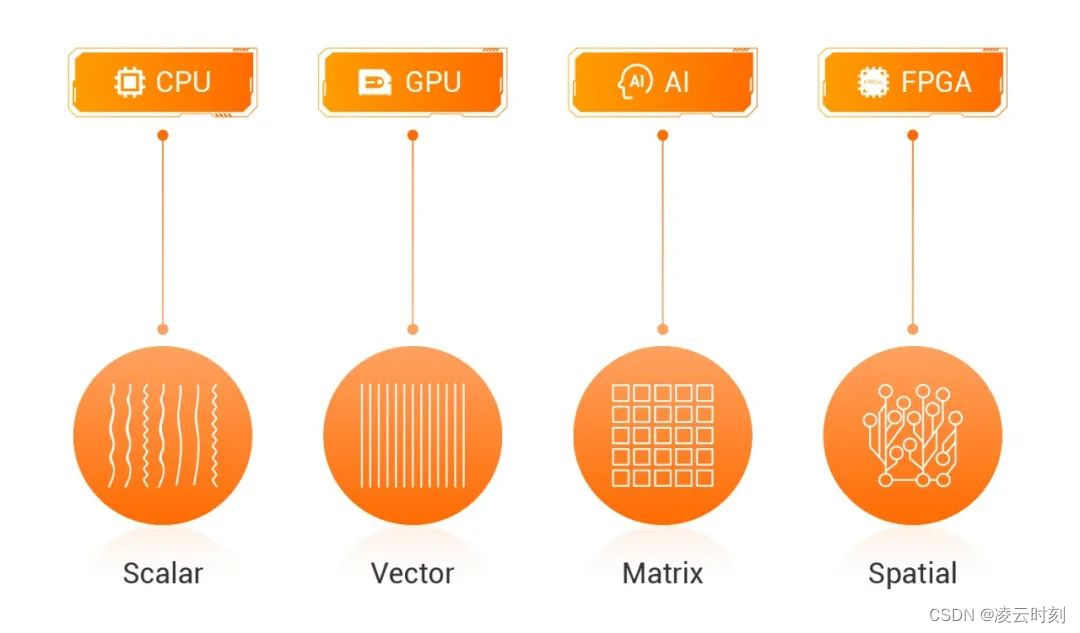

Xeon computing power can be simplified as: compute units such as ALUs + the data hierarchy of caches and memory access. For general-purpose (scalar) computing, Xeon's superscalar capability is excellent. For vector computing, Xeon's AVX-512 and SPR AMX deliver performance well beyond expectations for software specifically optimized for them, while heterogeneous computing on GPUs, AI TPUs, and the like is highly optimized for vector computing.

Therefore, in business areas such as general scalar computing and AI vector computing, CIPU is obviously not meant to offload Xeon ALU computing power or GPU stream processors.

As the figure below shows, Intel precisely defines the computing characteristics of workloads and the chips that best match them:

(Image source: Intel)

So the question is: what common characteristics do the workloads best suited to the CIPU socket share? An in-depth analysis of the 10 services above reveals their common business characteristic: while data is flowing (moving), deep vertical hardware/software co-design minimizes data movement and thereby improves computing efficiency. From a computer architecture perspective, then, CIPU's main job is to optimize access efficiency across the data hierarchy of cache, memory, and storage, both between and within cloud servers (as shown in the figure below).

To sum up at this point, here is an incisive remark from Nvidia chief scientist Bill Dally: "Locality is efficiency, Efficiency is power, Power is performance, Performance is king."

Since CIPU hardware acceleration is more than compute offloading, what is it? The answer first: CIPU is in-path heterogeneous computing.

Nvidia/Mellanox has advocated in-network computing (near-network computing) for many years. How does CIPU's in-path heterogeneous computing relate to it? The storage field has likewise had concepts such as computational storage, in-storage computing, and near-data computing for many years. How does CIPU's in-path heterogeneous computing relate to them?

The answer is simple: CIPU in-path heterogeneous computing = near-network computing + near-storage computing

A further comparison deepens the understanding of in-path heterogeneous computing: GPUs, Google TPU, Intel QAT, and the like can be classified as bypass heterogeneous computing; CIPU sits on the path that network and storage data must travel, so it is classified as in-path heterogeneous computing.

CIPU & IPU & DPU

DPU: Data Processing Unit. According to industry sources, the term appears to have originated with Fungible, but it truly took off and became famous thanks to Nvidia's vigorous promotion. After Nvidia's acquisition of Mellanox, Nvidia CEO Jensen Huang offered his core judgment on the industry trend: the data center of the future will rest on three pillars, the CPU, the GPU, and the DPU. He used this to build momentum for the Nvidia BlueField DPU.

As the figure above shows, China and the United States have set off a wave of investment in DPU/IPU technology. But my judgment is that this socket must be driven by the business needs of the cloud platform's base software (CloudOS) and requires deep hardware/software co-design of CloudOS + CIPU. Only cloud vendors can extract the maximum value from this socket.

In the IaaS field, cloud vendors pursue "standardized northbound interfaces, zero code modification for IaaS, and compatibility with the OS and application ecosystems, while going deep at the foundation and pursuing deep vertical integration of software and hardware". The technical logic behind this is "software-defined, hardware-accelerated".

Alibaba Cloud developed the Feitian cloud operating system and many core components of the data center in-house, and has deep technical accumulation. Building on the cloud platform base software, pursuing deep vertical integration of software and hardware, and launching CIPU is the natural path for Alibaba Cloud.

It is also worth mentioning that the business understanding and knowledge of the cloud platform operating system accumulated through long-term, large-scale R&D and operations, together with the vertically complete R&D team built along the way, are the real substance of CIPU. Chips and software are merely solidified forms of that knowledge. (End of the main text.)

边栏推荐

- Final summary of freshman semester (supplement knowledge loopholes)

- Cadence OrCAD Capture 批量修改网络名称的两种最实用的方法图文教程及视频演示

- 『渗透基础』Cobalt Strike基础使用入门_Cobalt Strike联动msfconsole

- Getattribute return value is null

- Opengauss version 3.0 source code compilation and installation guide

- Worthington木瓜蛋白酶化学性质和特异性

- Leetcode refers to offer II 089 House theft

- Facebook内部通告:将重新整合即时通讯功能

- Advanced authentication of uni app [Day12]

- 重新认识WorkPlus,不止IM即时通讯,是企业移动应用管理专家

猜你喜欢

Recognize workplus again, not only im but also enterprise mobile application management expert

15+ urban road element segmentation application, this segmentation model is enough

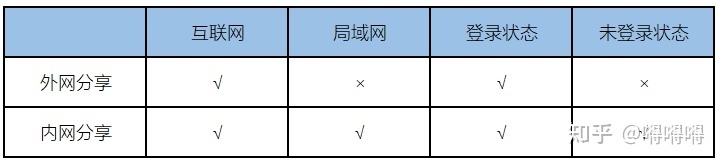

An interface testing software that supports offline document sharing in the Intranet

梯度下降法介绍-黑马程序员机器学习讲义

Idea creates a servlet and accesses the 404 message

Opengauss version 3.0 source code compilation and installation guide

Introduction à la méthode de descente par Gradient - document d'apprentissage automatique pour les programmeurs de chevaux noirs

大一下学期期末总结(补充知识漏洞)

uni-app进阶之认证【day12】

少儿编程教育在特定场景中的普及作用

随机推荐

少儿编程教育在特定场景中的普及作用

Worthington脱氧核糖核酸酶I特异性和相关研究

Cadence OrCAD Capture 批量修改网络名称的两种最实用的方法图文教程及视频演示

阿里云新一代云计算体系架构 CIPU 到底是啥?

Multi task video recommendation scheme, baidu engineers' actual combat experience sharing

web渗透测试----5、暴力破解漏洞--(6)VNC密码破解

Openeuler kernel technology sharing issue 20 - execution entity creation and switching

How does the compiler put the first instruction executed by the chip at the start address of the chip?

线性回归的损失和优化,机器学习预测房价

Idea创建Servlet 后访问报404问题

SAP mts/ato/mto/eto topic 10: ETO mode q+ empty mode unvalued inventory policy customization

Web penetration test - 5. Brute force cracking vulnerability - (9) MS-SQL password cracking

ServiceStack. Source code analysis of redis (connection and connection pool)

Summary of Android interview questions in 2020 (intermediate)

集成阿里云短信服务以及报签名不合法的原因

Web penetration test - 5. Brute force cracking vulnerability - (8) PostgreSQL password cracking

Abnova膜蛋白脂蛋白体解决方案

Activity recommendation | cloud native community meetup phase VII Shenzhen station begins to sign up!

Abnova荧光原位杂交(FISH)探针解决方案

Final summary of freshman semester (supplement knowledge loopholes)