71 - Analysis of a 2010 Alibaba Oracle DBA interview question

2022-06-22 21:21:00 【Tiger Liu】

Reportedly this is an Oracle DBA interview question asked at Alibaba in 2010. It comes from a WeChat official account, where the author described a personal experience of interviewing for an Oracle DBA position at Alibaba, under the title “Those SQL questions that keep people a thousand miles away”:

“There is an aggregation table with ID as its primary key, holding 200 million rows. Another table with the same structure, holding 60 million rows, must be merged into the first table.

Please design an update process. The first table may or may not already contain some of the data in the second table. Rows that do not exist should be inserted, and rows that match should be updated.”

This is a typical merge operation, only with a fairly large data volume. Whether the tables are partitioned, how much storage they occupy, how many indexes they have, and whether a business interruption is acceptable are all important conditions. None of them is mentioned, which shows that this is an open-ended question.

The author did not give a solution in the article, so here are my ideas:

Method 1: Whether or not the tables are partitioned, use MERGE directly with parallel DML enabled. With adequate hardware this data volume is not a problem, although compared with Methods 2 and 3 the efficiency is only average. The key to this method is enabling parallel DML. Knowing to use MERGE earns some points; also knowing to enable parallel DML would, I think, barely pass. It depends on what the interviewer was looking for with the question.
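The insert-or-update logic that MERGE performs can be sketched outside Oracle. The following is a minimal Python illustration using the standard `sqlite3` module. The table names `big` and `small` and the column `val` are assumptions made for the demo, and SQLite has neither Oracle's MERGE statement nor parallel DML, so only the matching semantics are shown, not the performance behavior.

```python
import sqlite3


def make_demo_db():
    """Build an in-memory database with a target table and a source table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE big (id INTEGER PRIMARY KEY, val TEXT)")
    conn.execute("CREATE TABLE small (id INTEGER PRIMARY KEY, val TEXT)")
    conn.executemany("INSERT INTO big VALUES (?, ?)",
                     [(1, "a"), (2, "b"), (3, "c")])
    conn.executemany("INSERT INTO small VALUES (?, ?)",
                     [(2, "B"), (4, "D")])
    conn.commit()
    return conn


def merge_small_into_big(conn):
    """Emulate MERGE: update matching ids, insert the ones that are missing."""
    # Matched rows: overwrite the target value with the source value.
    conn.execute("""
        UPDATE big
           SET val = (SELECT s.val FROM small s WHERE s.id = big.id)
         WHERE EXISTS (SELECT 1 FROM small s WHERE s.id = big.id)
    """)
    # Unmatched rows: insert source rows whose id is not in the target yet.
    conn.execute("""
        INSERT INTO big (id, val)
        SELECT s.id, s.val
          FROM small s
         WHERE NOT EXISTS (SELECT 1 FROM big b WHERE b.id = s.id)
    """)
    conn.commit()


if __name__ == "__main__":
    conn = make_demo_db()
    merge_small_into_big(conn)
    print(conn.execute("SELECT id, val FROM big ORDER BY id").fetchall())
```

In this toy data set, id 2 exists in both tables and is updated, while id 4 exists only in the source and is inserted. In Oracle the same effect comes from a single MERGE statement rather than two separate statements.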

Method 2: Still MERGE, but with both tables designed as hash-partitioned tables on id using the same partitioning, and with parallel DML enabled. Efficiency improves greatly because Oracle can then use a partition-wise join (PWJ). If the hardware (mainly storage throughput) is adequate, a parallel degree of 32 with no indexes on the table should finish the job in a minute or two. If a candidate in 2010 knew about PWJ, the answer should have passed the interview, because even now in 2019 not many people know it. (Some books show optimization experts implementing something similar to a partition-wise join by hand; I wonder whether that was to avoid using parallelism. For operations like this on large tables, parallelism is a must.)
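The idea behind a partition-wise join can be illustrated with a small Python sketch. It is only a model of the concept, not of Oracle's implementation: the tables are plain dictionaries keyed by id, the partitioning function `id % n_parts` stands in for Oracle's internal hash function, and the names `hash_partition`, `merge_pair`, and `partition_wise_merge` are hypothetical. Because both tables are partitioned the same way, a given id can only appear in the same-numbered partition of each table, so every pair of partitions can be merged independently and in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def hash_partition(rows, n_parts):
    """Split a {id: value} table into n_parts buckets by id (toy hash: id % n_parts)."""
    parts = [dict() for _ in range(n_parts)]
    for row_id, val in rows.items():
        parts[row_id % n_parts][row_id] = val
    return parts


def merge_pair(target_part, source_part):
    """Merge one source partition into the matching target partition."""
    merged = dict(target_part)
    merged.update(source_part)  # matched ids are updated, new ids are inserted
    return merged


def partition_wise_merge(target, source, n_parts=4):
    """Partition both tables identically, then merge partition pairs in parallel."""
    t_parts = hash_partition(target, n_parts)
    s_parts = hash_partition(source, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        merged_parts = list(pool.map(merge_pair, t_parts, s_parts))
    result = {}
    for part in merged_parts:
        result.update(part)
    return result


if __name__ == "__main__":
    big = {1: "a", 2: "b", 3: "c", 5: "e"}
    small = {2: "B", 4: "D", 5: "E"}
    print(partition_wise_merge(big, small))
```

Each worker touches only one partition of each table, which is why no data needs to be redistributed between workers in this model.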

Method 3: Create a new table with the same structure, do a full outer join of the two original tables, insert the result into the new table, and then rename it. This method is the most efficient. If the table has indexes, it is the preferred method, because you can insert the data first and create the indexes afterwards. Combined with partition-wise join, parallel DML, nologging, and compression, and given strong enough storage, finishing the job in about a minute is not a problem.
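The rebuild-and-rename flow can be sketched with Python's standard `sqlite3` module. This is an illustration under stated assumptions: the names `big`, `small`, `big_new`, and `val` are invented for the demo, and the full outer join is written as a LEFT JOIN plus a UNION ALL of the unmatched source rows so that the example does not depend on the SQLite version. Oracle-specific options such as nologging, compression, and parallelism have no counterpart here.

```python
import sqlite3


def make_demo_db():
    """Build an in-memory database with a target table and a source table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE big (id INTEGER PRIMARY KEY, val TEXT)")
    conn.execute("CREATE TABLE small (id INTEGER PRIMARY KEY, val TEXT)")
    conn.executemany("INSERT INTO big VALUES (?, ?)",
                     [(1, "a"), (2, "b"), (3, "c")])
    conn.executemany("INSERT INTO small VALUES (?, ?)",
                     [(2, "B"), (4, "D")])
    conn.commit()
    return conn


def rebuild_by_outer_join(conn):
    """Load the merged result into a new table, index it, then swap names."""
    # New table without indexes, so the bulk load does no index maintenance.
    conn.execute("CREATE TABLE big_new (id INTEGER, val TEXT)")
    conn.execute("""
        INSERT INTO big_new (id, val)
        -- Rows of the target: take the source value when the id matches.
        SELECT b.id,
               CASE WHEN s.id IS NOT NULL THEN s.val ELSE b.val END
          FROM big b
          LEFT JOIN small s ON s.id = b.id
        UNION ALL
        -- Rows that exist only in the source.
        SELECT s.id, s.val
          FROM small s
         WHERE NOT EXISTS (SELECT 1 FROM big b WHERE b.id = s.id)
    """)
    # Create the index only after the data is loaded.
    conn.execute("CREATE UNIQUE INDEX big_new_id_idx ON big_new (id)")
    # Swap the new table in under the original name.
    conn.execute("DROP TABLE big")
    conn.execute("ALTER TABLE big_new RENAME TO big")
    conn.commit()


if __name__ == "__main__":
    conn = make_demo_db()
    rebuild_by_outer_join(conn)
    print(conn.execute("SELECT id, val FROM big ORDER BY id").fetchall())
```

The original table is never updated in place; it is simply replaced, which is what makes the load-then-index order possible.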

Method 4: Oracle 11g (Release 2) provides a chunk-based parallel facility: DBMS_PARALLEL_EXECUTE. You can split the 60-million-row table into chunks by rowid or by some other method (in this problem the 200-million-row table is not split). With 60 chunks, each chunk only has to process 1 million rows. This method requires PL/SQL and is somewhat more complicated to operate. Each 1-million-row chunk is merged against the 200-million-row table: with a nested loop driven through the index, efficiency is not high; with a hash join, the 200-million-row table has to be scanned 60 times, which is not efficient either. The advantage is reduced rollback segment (undo) usage compared with Methods 1 and 2. This is probably also how many programmers would think: split the big table, open a cursor, and process the rows one by one, which really is inefficient.
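The chunking arithmetic and its cost can be made concrete with a short Python sketch. It does not call DBMS_PARALLEL_EXECUTE and is not PL/SQL; the tables are plain dictionaries, and the helper names `split_into_chunks`, `chunked_merge`, and `rows_read_with_hash_join` are hypothetical. The last function simply multiplies the number of chunks by the size of the large table, under the assumption that every chunk's hash join performs one full scan of that table.

```python
def split_into_chunks(ids, chunk_size):
    """Split the source ids into consecutive chunks of at most chunk_size ids."""
    ordered = sorted(ids)
    return [ordered[i:i + chunk_size] for i in range(0, len(ordered), chunk_size)]


def chunked_merge(target, source, chunk_size):
    """Merge source into target chunk by chunk; return the result and chunk count."""
    merged = dict(target)
    chunks = split_into_chunks(source.keys(), chunk_size)
    for chunk in chunks:
        # Each chunk would be one task; it inserts or updates only its own ids.
        for row_id in chunk:
            merged[row_id] = source[row_id]
    return merged, len(chunks)


def rows_read_with_hash_join(target_rows, source_rows, chunk_size):
    """Estimate target rows read if every chunk fully scans the target once."""
    n_chunks = -(-source_rows // chunk_size)  # ceiling division
    return n_chunks, n_chunks * target_rows


if __name__ == "__main__":
    merged, n = chunked_merge({1: "a", 2: "b", 3: "c"}, {2: "B", 4: "D", 5: "E"}, 2)
    print(merged, n)
    # The article's numbers: 200 million target rows, 60 million source rows,
    # chunks of 1 million rows each.
    print(rows_read_with_hash_join(200_000_000, 60_000_000, 1_000_000))
```

With the article's numbers this gives 60 chunks and 12 billion target rows read in total, compared with 200 million rows for a single scan.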

Summary:

Method 3 is the most efficient. Method 2 is simple and efficient, but requires matching partitioning on both tables. Method 1 has average efficiency. Method 4 is acceptable for DML on a single table, but is not appropriate in this case.

These are the methods I can think of. I don't know what the Alibaba interviewer had in mind; perhaps there are more advanced approaches.

(End)

边栏推荐

- Baijia forum Daqin rise (lower part)

- [redis]redis6 master-slave replication

- Visualization of R language penguins dataset

- 【CM11 链表分割】

- MySQL adds (appends) prefix and suffix to a column field

- [redis] cluster and common errors

- 程序员必看的学习网站

- Golang學習筆記—結構體

- [palindrome structure of or36 linked list]

- Huawei cloud releases Latin American Internet strategy

猜你喜欢

随机推荐

Numpy learning notes (6) -- sum() function

Easyclick fixed status log window

使用Charles抓包

云服务器中安装mysql(2022版)

[redis]配置文件

苹果CoreFoundation源代码

查看苹果产品保修状态

Baijia forum in the 13th year of Yongzheng (lower part)

超快变形金刚 | 用Res2Net思想和动态kernel-size再设计 ViT,超越MobileViT

Golang learning notes - structure

[redis]redis persistence

启牛送的券商账户是安全的吗?启牛提供的券商账户是真的?

Objective-C byte size occupied by different data types

C语言中int和char的对应关系

513. find the value in the lower left corner of the tree / Sword finger offer II 091 Paint the house

[160. cross linked list]

PHP image making

How to calculate the Gini coefficient in R (with examples)

Huawei cloud releases Latin American Internet strategy

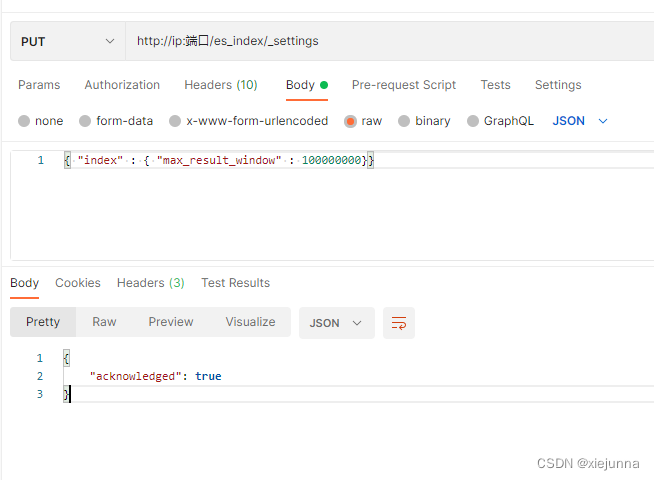

es 按条件查询数据总条数

![[redis]redis persistence](/img/83/9af9272bd485028062067ee2d7a158.png)

![[redis] three new data types](/img/ce/8a048bd36b21bfa562143dd2e47131.png)

![[160. cross linked list]](/img/79/177e2c86bd80d12f42b3edfa1974ec.png)

![[the penultimate node in the linked list]](/img/b2/be3b0611981dd0248b3526e8958386.png)