
Non-local means filtering / attention mechanism

2022-07-23 20:04:00 【xx_ xjm】

One: Non-local means

Mean filtering: take the target pixel x as the center, compute the weighted sum of the pixels within radius r of it, and use the average as the filtered value of x.

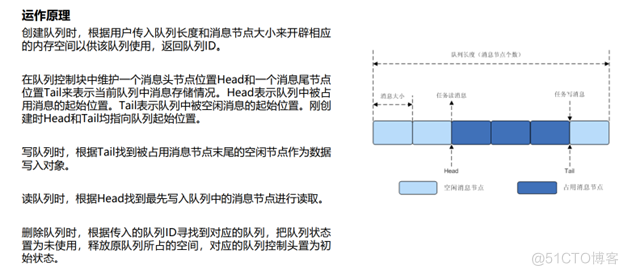
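
The plain mean filter described above can be sketched as follows. This is a minimal NumPy illustration, not code from the referenced article; the function name `mean_filter` and the choice of reflective padding at the image border are assumptions made for the example.

```python
import numpy as np

def mean_filter(image, r=1):
    """Box (mean) filter: each output pixel is the unweighted average of the
    (2r+1) x (2r+1) window centered on it, i.e. every weight equals 1."""
    # Assumption: borders are handled by reflecting the image.
    padded = np.pad(image.astype(np.float64), r, mode="reflect")
    height, width = image.shape
    size = 2 * r + 1
    out = np.zeros((height, width), dtype=np.float64)
    # Accumulate every shifted copy of the image, one per window offset.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + height, dx:dx + width]
    return out / size**2
```

Every pixel in the window contributes equally here, which is exactly the limitation that non-local means filtering addresses.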

Non-local means filtering: mean filtering takes a weighted sum of the pixels in a range around the target pixel x, but the weights are set by hand and are usually all 1. In other words, every pixel in the range is assumed to influence the center x equally, which is clearly not right. So how should the weight of each pixel with respect to the center x be set? Non-local means filtering computes an influence weight for each pixel position with respect to the center x, then takes the weighted sum and normalizes it. How is this weight computed?

First, refer to this post: "Non-local means filtering (1)", Shallow Yumo's blog, CSDN.

That article explains the idea clearly, but I think one part is not presented well: it sets the image size to 7*7 and the search window to 7*7 as well, which makes it hard to follow. Let me add to it here.

Suppose the image is 256*256, the search window is 7*7, and the neighborhood block (patch) is 3*3. We then need to compute, for each of the 49 pixels inside the 7*7 search window, its influence weight on the window's center x. The weight is obtained by comparing the 3*3 neighborhood block around each pixel in the search window with the 3*3 neighborhood block around the center pixel.
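
The weight computation for a single pixel can be sketched as below. This is a minimal illustration under stated assumptions, not the referenced article's code: the weight is taken to be the commonly used Gaussian form exp(-d² / h²), where d² is the mean squared difference between two 3*3 neighborhood blocks; the function name `nlm_pixel`, the smoothing parameter `h`, and the reflective border padding are all choices made for the example.

```python
import numpy as np

def nlm_pixel(image, y, x, search=7, patch=3, h=10.0):
    """Non-local means estimate for the pixel at (y, x).

    Returns the filtered value and the normalized search x search weight map.
    """
    s, p = search // 2, patch // 2
    # Pad so that every patch around every search-window pixel exists.
    padded = np.pad(image.astype(np.float64), s + p, mode="reflect")
    cy, cx = y + s + p, x + s + p              # center in padded coordinates
    ref = padded[cy - p:cy + p + 1, cx - p:cx + p + 1]

    weights = np.empty((search, search))
    values = np.empty((search, search))
    for i, dy in enumerate(range(-s, s + 1)):
        for j, dx in enumerate(range(-s, s + 1)):
            qy, qx = cy + dy, cx + dx
            cand = padded[qy - p:qy + p + 1, qx - p:qx + p + 1]
            d2 = np.mean((ref - cand) ** 2)    # patch dissimilarity
            weights[i, j] = np.exp(-d2 / h**2) # assumed Gaussian weighting
            values[i, j] = padded[qy, qx]
    weights /= weights.sum()                   # weights now sum to 1
    return float((weights * values).sum()), weights

# Usage on a synthetic 256*256 image (random data, for illustration only).
rng = np.random.default_rng(0)
demo = rng.random((256, 256)) * 255
value, weight_map = nlm_pixel(demo, 100, 100)
```

The center pixel is compared with itself, so its distance is zero and it always receives the largest weight; pixels whose surrounding blocks look different from the center's block receive smaller weights.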

Two: Attention mechanism

There are two kinds of attention mechanism: soft attention and hard attention. As a simple way to think about it, the attention learned by soft attention is a continuous probability value in [0,1], while the attention learned by hard attention can only be 0 or 1. Soft attention is what is generally used; hard attention usually requires reinforcement learning.
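
The difference between the two kinds of weights can be illustrated with a small sketch. The function names are hypothetical, and using softmax for the soft case and a deterministic argmax for the hard case are simplifying assumptions (hard attention in practice often samples a position rather than taking the argmax).

```python
import numpy as np

def soft_attention(scores):
    """Continuous weights in [0, 1] that sum to 1 (softmax)."""
    e = np.exp(scores - scores.max())   # subtract max for numerical stability
    return e / e.sum()

def hard_attention(scores):
    """Weights that are only 0 or 1: select a single position."""
    w = np.zeros(scores.shape, dtype=np.float64)
    w[np.argmax(scores)] = 1.0          # assumption: pick the top score
    return w

scores = np.array([1.0, 3.0, 2.0])
soft_w = soft_attention(scores)
hard_w = hard_attention(scores)
```

The selection step in the hard case has no useful gradient with respect to the scores, which is one reason it is usually trained with reinforcement learning rather than plain backpropagation.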

Reference: Attention mechanism - Jianshu (jianshu.io)

Soft attention mechanism: for example, computing the attention relationship between channels, represented by SENet, or computing attention between regions. Channel attention, spatial attention, and similar methods belong here.
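
Channel attention in the style of SENet can be sketched as follows. This is a simplified NumPy illustration rather than the official implementation: the function name `se_block` is hypothetical, the two weight matrices are random placeholders standing in for learned parameters, and bias terms are omitted.

```python
import numpy as np

def se_block(x, w1, w2):
    """Squeeze-and-excitation style channel attention.

    x:  feature map of shape (C, H, W)
    w1: reduction weights of shape (C // ratio, C)
    w2: expansion weights of shape (C, C // ratio)
    """
    z = x.mean(axis=(1, 2))                       # squeeze: one value per channel
    hidden = np.maximum(w1 @ z, 0.0)              # fully connected layer + ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # sigmoid: one weight per channel
    return x * gate[:, None, None], gate          # rescale each channel

rng = np.random.default_rng(0)
features = rng.standard_normal((8, 4, 4))         # C=8, H=W=4
w1 = rng.standard_normal((2, 8))                  # reduction ratio 4 (assumed)
w2 = rng.standard_normal((8, 2))
scaled, channel_weights = se_block(features, w1, w2)
```

Each channel receives a single continuous weight between 0 and 1, which matches the description of soft attention above.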

Hard attention mechanism: computes point-to-point attention. The representative work is the paper Non-local Neural Networks, a typical application of self-attention, whose idea is derived from the non-local means filtering described above.
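
The point-to-point computation in a non-local block can be sketched as below. This is a simplified NumPy illustration of the embedded-Gaussian variant, under stated assumptions: the feature map is already flattened to N positions, the function name `non_local_block` is hypothetical, and the projection matrices are random placeholders standing in for learned 1*1 convolutions.

```python
import numpy as np

def non_local_block(x, w_theta, w_phi, w_g, w_out):
    """x: (N, C) features at N positions; returns the output and the (N, N) weights."""
    theta = x @ w_theta                      # (N, C') query-like embedding
    phi = x @ w_phi                          # (N, C') key-like embedding
    g = x @ w_g                              # (N, C') value-like embedding
    scores = theta @ phi.T                   # similarity of every pair of positions
    scores = scores - scores.max(axis=1, keepdims=True)
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=1, keepdims=True)   # each row sums to 1
    y = attn @ g                             # weighted sum over all positions
    return x + y @ w_out, attn               # residual connection

rng = np.random.default_rng(0)
feats = rng.standard_normal((6, 4))          # N=6 positions, C=4 channels
w_theta, w_phi, w_g = (rng.standard_normal((4, 2)) for _ in range(3))
w_out = rng.standard_normal((2, 4))
out, attn = non_local_block(feats, w_theta, w_phi, w_g, w_out)
```

As in non-local means filtering, each position's output is a normalized weighted sum over all other positions; the difference is that the similarity is computed on learned embeddings instead of raw neighborhood blocks.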

One more point: attention can be understood as a way of computing the interaction factors between elements, and non-local is one such way of computing them, as mentioned, for example, in the paper "Camouflaged Object Segmentation with Distraction Mining".

边栏推荐

- Leetcode 151. 颠倒字符串中的单词

- 编译器LLVM-MLIR-Intrinics-llvm backend-instruction

- 项目实战第九讲--运营导入导出工具

- Paddle implementation, multidimensional time series data enhancement, mixup (using beta distribution to make continuous random numbers)

- [英雄星球七月集训LeetCode解题日报] 第23日 字典树

- 深入浅出边缘云 | 1. 概述

- Powercli management VMware vCenter one click batch deployment OVF

- 2022上半年中国十大收缩行业

- R language data The table package performs data grouping aggregation statistical transformations and calculates the grouping minimum value (min) of dataframe data

- dokcer镜像理解

猜你喜欢

梅科尔工作室-小熊派开发笔记3

【ASP.NET Core】选项模式的相关接口

![[激光器原理与应用-8]: 激光器电路的电磁兼容性EMC设计](/img/98/8b7a4fc3f9ef9b7e16c63a8c225b02.png)

[激光器原理与应用-8]: 激光器电路的电磁兼容性EMC设计

干货!神经网络中的隐性稀疏正则效应

使用多态时,判断能否向下转型的两种思路

Powercli moves virtual machines from host01 host to host02 host

The numerical sequence caused by the PostgreSQL sequence cache parameter is discontinuous with interval gap

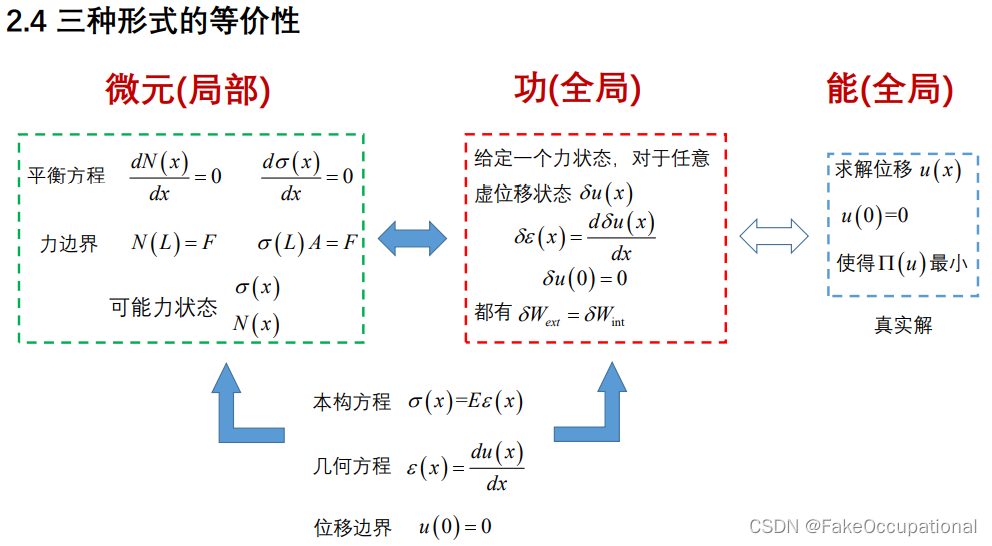

Energy principle and variational method note 14: summary + problem solving

【面试:并发篇22多线程:ReentrantLock】

梅科尔工作室-华为14天鸿蒙设备开发实战笔记四

随机推荐

The numerical sequence caused by the PostgreSQL sequence cache parameter is discontinuous with interval gap

Why do you get confused when you ask JVM?

[development experience] development project trample pit collection [continuous update]

能量原理与变分法笔记17:广义变分原理(识别因子方法)

搭建自己的目标检测环境,模型配置,数据配置 MMdetection

When does MySQL use table locks and row locks?

Meiker Studio - Huawei 14 day Hongmeng equipment development practical notes 4

I deliberately leave a loophole in the code. Is it illegal?

What is weak network testing? Why should weak network test be carried out? How to conduct weak network test? "Suggested collection"

AtCoder——Subtree K-th Max

重装系统后故障(报错:reboot and select proper boot deviceor insert boot media in selected boot device)

2022/7/21训练总结

Powercli imports licensekey to esxi

项目实战第九讲--运营导入导出工具

梅科尔工作室-华为14天鸿蒙设备开发实战笔记六

How important is 5g dual card and dual access?

R language uses the gather function of tidyr package to convert a wide table to a long table (wide table to long table), the first parameter specifies the name of the new data column generated from th

MySQL data recovery - using the data directory

2022第四届中国国际养老服务业展览会,9月26日在济南举办

R language filters the data columns specified in dataframe, and R language excludes (deletes) the specified data columns (variables) in dataframe