
XXIX - ORB-SLAM2 real-time 3D reconstruction using RealSense D435

2022-06-25 16:49:00 【goldqiu】

Summary of previous articles:

Multi-sensor fusion SLAM:

Open source framework testing

Twenty-four: Running the FAST-LIO2 framework from the MARS Lab at the University of Hong Kong on the official dataset (goldqiu's CSDN blog)

Twenty-seven: Running the VIO-SLAM open-source framework VINS-Mono on the EuRoC dataset (goldqiu's CSDN blog)

Real car test

Calibration

Open source framework learning

Starting with this post, the series moves on to the Localization and Navigation part.

Twenty-five: Mapping vs. localization in SLAM, differences and thoughts

Planning

Global Localization

RealSense D435 driver installation

Install the SDK

1. Download the source

git clone https://github.com/IntelRealSense/librealsense

cd librealsense

It is recommended to use a slightly older SDK release. I used librealsense-2.36.0; librealsense-2.45.0 is another option.
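Since `git clone` checks out the latest development state, one way to follow the advice above is to switch the clone to a release tag. This is a minimal sketch, assuming the upstream tags use the `v<version>` form (for example `v2.45.0`); the helper name `checkout_release` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: switch a librealsense clone to a tagged release instead of the default branch.
# Assumption: release tags are named "v<version>", e.g. v2.45.0.
checkout_release() {
  local repo_dir="$1"   # path to the cloned repository
  local version="$2"    # release number without the leading "v"
  git -C "$repo_dir" checkout --quiet "v${version}"
}

# Example, run from the directory where the clone was made:
#   checkout_release librealsense 2.45.0
# Alternatively, clone only that release in the first place:
#   git clone --branch v2.45.0 --depth 1 https://github.com/IntelRealSense/librealsense
```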

2. Install dependencies

sudo apt-get install libudev-dev pkg-config libgtk-3-dev

sudo apt-get install libusb-1.0-0-dev pkg-config

sudo apt-get install libglfw3-dev

sudo apt-get install libssl-dev

3. Install the udev permission rules

In the librealsense folder:

sudo cp config/99-realsense-libusb.rules /etc/udev/rules.d/

sudo udevadm control --reload-rules && sudo udevadm trigger

To uninstall, delete the 99-realsense-libusb.rules file again and reload the rules.
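After the rules are installed and the camera is replugged, a quick sanity check is whether the camera appears on the USB bus at all. The sketch below reads `lsusb` output on standard input; the IDs are an assumption on my part (Intel vendor ID `8086`, product IDs `0b07` for the D435 and `0b3a` for the D435i), and `has_d435` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: look for a RealSense D435/D435i in lsusb-style output read from stdin.
# Assumed IDs: vendor 8086 (Intel), product 0b07 (D435) or 0b3a (D435i).
has_d435() {
  # -E: extended regex, -q: quiet (exit status only), -i: case-insensitive hex
  grep -Eqi 'ID 8086:(0b07|0b3a)'
}

# Example:
#   lsusb | has_d435 && echo "camera detected" || echo "camera not found"
```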

4. Compilation and installation

mkdir build

cd build

cmake ../ -DBUILD_EXAMPLES=true

make

sudo make install

Use the realsense-viewer tool to check the result:

realsense-viewer

Install RealSense-ROS

sudo apt-get install ros-melodic-rgbd-launch

git clone https://github.com/IntelRealSense/realsense-ros.git

git clone https://github.com/pal-robotics/ddynamic_reconfigure.git

cd ~/catkin_ws && catkin_make

After compilation, test with the following command:

roslaunch realsense2_camera demo_pointcloud.launch

Again, slightly older releases are recommended. I used ddynamic_reconfigure-0.2.2 with realsense-ros-2.3.2; ddynamic_reconfigure-0.3.2 with realsense-ros-2.3.2 is another option.

Start the camera node:

roslaunch realsense2_camera rs_rgbd.launch

This produced the following error:

/opt/ros/melodic/lib/nodelet/nodelet: symbol lookup error: /home/qjs/code/D435_ws/devel/lib//librealsense2_camera.so: undefined symbol: _ZN20ddynamic_reconfigure19DDynamicReconfigure16registerVariableIiEEvRKNSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEET_RKN5boost8functionIFvSA_EEES9_SA_SA_S9_

[camera/realsense2_camera_manager-2] process has died [pid 27469, exit code 127, cmd /opt/ros/melodic/lib/nodelet/nodelet manager __name:=realsense2_camera_manager __log:=/home/qjs/.ros/log/1eca8a82-e8c4-11ec-807e-70b5e831e2ce/camera-realsense2_camera_manager-2.log].

log file: /home/qjs/.ros/log/1eca8a82-e8c4-11ec-807e-70b5e831e2ce/camera-realsense2_camera_manager-2*.log

The fix that worked was to search for every copy of librealsense2_camera.so:

sudo find / -name librealsense2_camera.so

Then delete the extra copies so that only one librealsense2_camera.so remains.
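With several copies on the system it is hard to tell which one the nodelet actually loads, so it helps to count them before and after the cleanup. A small sketch, assuming GNU or BSD `find`; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list and count every copy of a shared library under a directory tree.
find_library_copies() {
  local root="$1" name="$2"
  # Match regular files and symlinks; 2>/dev/null hides "Permission denied" noise
  find "$root" -name "$name" \( -type f -o -type l \) 2>/dev/null
}

count_library_copies() {
  # tr strips the padding some wc implementations add
  find_library_copies "$1" "$2" | wc -l | tr -d ' '
}

# Example:
#   sudo bash -c "$(declare -f find_library_copies); find_library_copies / librealsense2_camera.so"
#   count_library_copies "$HOME" librealsense2_camera.so   # should print 1 after cleanup
```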

Warning:

10/06 21:55:35,555 WARNING [140685336372992] (messenger-libusb.cpp:42) control_transfer returned error, index: 768, error: Resource temporarily unavailable, number: 11

This warning can be ignored; the camera still works.

Installing Dr. Gao Xiang's ORBSLAM2_with_pointcloud_map

Install the dependencies

sudo apt-get install cmake gcc g++ git vim

sudo apt-get install libglew-dev

sudo apt-get install libboost-dev libboost-thread-dev

sudo apt-get install libboost-filesystem-dev

sudo apt-get install libpython2.7-dev

sudo apt-get install build-essential

Then install the Pangolin and Eigen libraries. Versions 0.5 and 3.2 respectively are suggested, since they are less likely to cause build errors.
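Because the Eigen version matters here, it can be worth checking which version is already installed before building one from source. Eigen records its version as three `#define`s in `Eigen/src/Core/util/Macros.h`; the default path below assumes an apt-installed copy under `/usr/include/eigen3` (a source install usually lands under `/usr/local/include/eigen3`). The helper name `eigen_version` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print the Eigen version recorded in Eigen's Macros.h header.
# Assumed default location: /usr/include/eigen3 (apt); pass another path if needed.
eigen_version() {
  local macros_h="${1:-/usr/include/eigen3/Eigen/src/Core/util/Macros.h}"
  awk '
    $1 == "#define" && $2 == "EIGEN_WORLD_VERSION" { w = $3 }
    $1 == "#define" && $2 == "EIGEN_MAJOR_VERSION" { ma = $3 }
    $1 == "#define" && $2 == "EIGEN_MINOR_VERSION" { mi = $3 }
    END { if (w != "") print w "." ma "." mi; else exit 1 }
  ' "$macros_h"
}

# Example:
#   eigen_version
#   eigen_version /usr/local/include/eigen3/Eigen/src/Core/util/Macros.h
```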

cd Pangolin

mkdir build

cd build

cmake ..

make

sudo make install

cd eigen

mkdir build

cd build

cmake ..

make

sudo make install

Compile the non-ROS version

Download the modified code. Then, in the g2o_with_orbslam2 folder under your home directory:

mkdir build

cd build

cmake ..

make -j8

sudo make install

Delete the three build folders: /ORB_SLAM2_modified/build, /ORB_SLAM2_modified/Thirdparty/DBoW2/build and /ORB_SLAM2_modified/Thirdparty/g2o/build.

Open a terminal in ORB_SLAM2_modified (right-click, Open in Terminal) and build DBoW2:

cd Thirdparty/DBoW2

mkdir build

cd build

cmake .. -DCMAKE_BUILD_TYPE=Release

make

Then open another terminal in ORB_SLAM2_modified (right-click, Open in Terminal):

mkdir build

cd build

cmake .. -DCMAKE_BUILD_TYPE=Release

make -j8

Dataset testing

./Examples/RGB-D/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM1.yaml datasets/rgbd_dataset_freiburg1_xyz datasets/rgbd_dataset_freiburg1_xyz/association.txt

./Examples/RGB-D/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM1.yaml datasets/rgbd_dataset_freiburg1_room datasets/rgbd_dataset_freiburg1_room/association.txt

The resulting point cloud is saved in the main folder as vslam.pcd.
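A quick way to confirm that vslam.pcd actually contains a map is to read the point count from its header. The PCD header is plain text even when the point data is binary, and the `POINTS` line comes before `DATA`, so awk can stop as soon as it reaches it. This is a sketch; `pcd_point_count` is a hypothetical helper, and `pcl_viewer` (from the PCL tools) is only suggested for visual inspection.

```bash
#!/usr/bin/env bash
# Sketch: print the number of points declared in a PCD file header.
pcd_point_count() {
  # Stop at the first "POINTS <n>" line; fail if the header has none
  awk '$1 == "POINTS" { print $2; found = 1; exit } END { if (!found) exit 1 }' "$1"
}

# Example:
#   pcd_point_count vslam.pcd
#   pcl_viewer vslam.pcd      # visual check, if the PCL tools are installed
```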

Compile the ROS version

Go to the ORB_SLAM2_modified folder, then open ~/.bashrc, add your ROS path at the end of the file and save:

gedit ~/.bashrc

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/your_directory/ORB_SLAM2_modified/Examples/ROS

After saving, run in the terminal:

source ~/.bashrc

Configure the ROS environment:
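The same export line goes into ~/.bashrc and into the ROS setup script, and repeating these steps by hand easily leaves duplicate entries. A minimal sketch of a helper that appends a line only if that exact line is not already present; `append_once` and the `/your_directory` placeholder are hypothetical. Note that /opt/ros/melodic/setup.bash is root-owned, so there the helper would have to run as root.

```bash
#!/usr/bin/env bash
# Sketch: append a line to a startup file only if that exact line is missing,
# so re-running the setup does not stack duplicate ROS_PACKAGE_PATH exports.
append_once() {
  local file="$1" line="$2"
  # Whole-line, fixed-string match: already present, nothing to do
  if grep -qxF -- "$line" "$file" 2>/dev/null; then
    return 0
  fi
  # Avoid gluing the new line onto a last line that lacks a trailing newline
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

# Example (single quotes keep ${ROS_PACKAGE_PATH} unexpanded in the file):
#   append_once ~/.bashrc 'export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/your_directory/ORB_SLAM2_modified/Examples/ROS'
```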

sudo gedit /opt/ros/melodic/setup.bash

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/your_directory/ORB_SLAM2_modified/Examples/ROS

After saving, run in the terminal:

source /opt/ros/melodic/setup.bash

Delete the files in ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/build, then compile:

chmod +x build_ros.sh

./build_ros.sh

If ROS reports ModuleNotFoundError: No module named 'rospkg', install the module:

pip install rospkg

For Boost library problems, add -lboost_system to the linked libraries in ~/catkin_ws/src/ORB_SLAM2/Examples/ROS/ORB_SLAM2/CMakeLists.txt.

If g2o library errors appear, the most likely cause is a conflict with the g2o that ships with ROS; uninstall it:

sudo apt-get remove ros-melodic-libg2o

View the camera intrinsics

roslaunch realsense2_camera rs_rgbd.launch

rostopic echo /camera/color/camera_info

header:
  seq: 1469
  stamp:
    secs: 1654853975
    nsecs: 751267910
  frame_id: "camera_color_optical_frame"
height: 480
width: 640
distortion_model: "plumb_bob"
D: [0.0, 0.0, 0.0, 0.0, 0.0]
K: [611.2393798828125, 0.0, 319.9852600097656, 0.0, 610.677490234375, 242.4569854736328, 0.0, 0.0, 1.0]
R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
P: [611.2393798828125, 0.0, 319.9852600097656, 0.0, 0.0, 610.677490234375, 242.4569854736328, 0.0, 0.0, 0.0, 1.0, 0.0]
binning_x: 0
binning_y: 0
roi:
  x_offset: 0
  y_offset: 0
  height: 0
  width: 0
  do_rectify: False

The intrinsics are in K, listed row by row: K = [fx 0 cx; 0 fy cy; 0 0 1]. The stereo baseline is 50 mm.
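The mapping from K to the settings file can be scripted: fx, cx, fy and cy are the 1st, 3rd, 5th and 6th numbers of K, and Camera.bf is baseline (in meters) times fx. A sketch, assuming the K line is taken from `rostopic echo` output as shown above and a 0.05 m baseline; `k_to_orbslam_yaml` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: turn the "K: [...]" line of a camera_info message into the Camera.*
# entries of the ORB-SLAM2 settings file, plus Camera.bf = baseline (m) * fx.
k_to_orbslam_yaml() {
  local k_line="$1" baseline_m="$2"
  printf '%s\n' "$k_line" | awk -v b="$baseline_m" '{
    # Replace everything that is not part of a number with spaces,
    # leaving the nine entries of K as fields $1..$9 (row-major)
    gsub(/[^0-9.eE+-]+/, " ")
    printf "Camera.fx: %.6f\n", $1
    printf "Camera.fy: %.6f\n", $5
    printf "Camera.cx: %.6f\n", $3
    printf "Camera.cy: %.6f\n", $6
    printf "Camera.bf: %.6f\n", b * $1
  }'
}

# Example with the camera running:
#   k_to_orbslam_yaml "$(rostopic echo -n 1 /camera/color/camera_info | grep '^K:')" 0.05
```

For the K above and a 50 mm baseline this gives bf = 0.05 * 611.239 ≈ 30.56.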

Modify the parameters accordingly to get a new D435i.yaml:

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 611.239380

Camera.fy: 610.677490

Camera.cx: 319.985260

Camera.cy: 242.456985

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.k3: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 30.0

# IR projector baseline times fx (approx.)

# bf = baseline (in meters) * fx; the D435i baseline is 50 mm, so bf = 0.05 * 611.24, about 30.56

Camera.bf: 30.56

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 40.0

# Depth map values factor

DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize: 2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

PointCloudMapping.Resolution: 0.01

meank: 50

thresh: 2.0

Run

roslaunch realsense2_camera rs_rgbd.launch

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.txt Examples/RGB-D/D435i.yaml

Recording a bag
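Before recording, it is worth confirming that both topics passed to rosbag are actually published, since a missing topic is simply skipped. A sketch that reads a topic list (for example from `rostopic list`) on standard input and reports any missing ones; `missing_topics` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: check that the given topics appear in a topic list read from stdin.
# Prints "missing: <topic>" for each absent topic and fails if any is missing.
missing_topics() {
  local listed topic status=0
  listed="$(cat)"
  for topic in "$@"; do
    if ! printf '%s\n' "$listed" | grep -qxF -- "$topic"; then
      echo "missing: $topic"
      status=1
    fi
  done
  return "$status"
}

# Example, with rs_rgbd.launch running:
#   rostopic list | missing_topics /camera/color/image_raw /camera/aligned_depth_to_color/image_raw
```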

rosbag record -o 20220611.bag /camera/color/image_raw /camera/aligned_depth_to_color/image_raw

Reference blog

The final result

边栏推荐

猜你喜欢

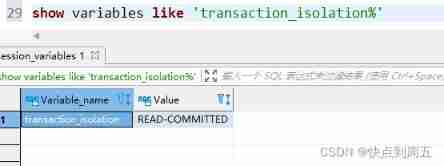

The third day of mysql45

这些老系统代码,是猪写的么?

Wechat official account server configuration

使用PyWebIO测试,刚入门的测试员也能做出自己的测试工具

从业一年,我是如何涨薪13K+?

批量--07---断点重提

Optimization of lazyagg query rewriting in parsing data warehouse

Resolve the format conflict between formatted document and eslint

Day_ eleven

Optimization of lazyagg query rewriting in parsing data warehouse

随机推荐

千万级购物车系统缓存架构方案

Reading mysql45 lecture - index continued

批量--07---断点重提

Understanding of reflection part

flutter

Problems encountered in using MySQL

ncnn源码学习全集

3. conditional probability and independence

20省市公布元宇宙路线图

心樓:華為運動健康的七年築造之旅

Structure de la mémoire JVM

论文笔记:Generalized Random Forests

Wechat official account server configuration

使用PyWebIO测试,刚入门的测试员也能做出自己的测试工具

Final, override, polymorphic, abstract, interface

Day_ eleven

How to view the change trend of cloud database from the behind of the launch of tidb to Alibaba cloud

Preliminary understanding of JVM

Shuttle pop-up returns to the upper level

Nsurlsession learning notes (III) download task