Classic model - NiN & GoogLeNet

2022-06-26 03:28:00 【On the right is my goddess】

NiN

The problem with fully connected layers: they contain a large number of parameters, so they overfit easily.

The parameter count of such a layer is usually $\text{input channels} \times \text{image size} \times \text{output size}$.
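To make the scale concrete, here is a minimal sketch of that product. The function name `fc_params` and the example sizes (a 256-channel 6x6 feature map feeding 4096 hidden units, as in an AlexNet-style first dense layer) are assumptions chosen for illustration, not figures from these notes.

```python
def fc_params(in_channels, height, width, out_features, bias=True):
    """Parameter count of a fully connected layer on a flattened feature map."""
    weights = in_channels * height * width * out_features
    return weights + (out_features if bias else 0)


# Assumed example sizes: 256 channels, 6x6 feature map, 4096 output units.
print(fc_params(256, 6, 6, 4096, bias=False))  # 37748736
```

A single layer of this kind already holds tens of millions of weights, which is the cost NiN tries to avoid.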

The idea of NiN: do not use fully connected layers at all.

A NiN block:

One convolution layer is followed by two 1x1 convolutions with stride 1 and no padding, so their output shape is the same as the output of the first convolution. The 1x1 convolutions act as a fully connected layer applied to each pixel.
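The "fully connected layer per pixel" view can be sketched in plain Python. This is only an illustration under simple assumptions: the helper `conv1x1` and the toy tensors are hypothetical, and nested lists stand in for real tensors.

```python
def conv1x1(x, weight, bias):
    """Apply a 1x1 convolution (stride 1, no padding).

    x:      nested lists with shape [C_in][H][W]
    weight: nested lists with shape [C_out][C_in]
    bias:   list of length C_out
    Returns nested lists with shape [C_out][H][W].
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    out = []
    for o, row in enumerate(weight):
        # Each output pixel is a weighted sum over the channels of the
        # same input pixel, i.e. a dense layer applied at that pixel.
        plane = [[bias[o] + sum(row[c] * x[c][i][j] for c in range(c_in))
                  for j in range(w)]
                 for i in range(h)]
        out.append(plane)
    return out


# Two input channels on a 2x2 image, mapped to three output channels.
x = [[[1, 2], [3, 4]],
     [[10, 20], [30, 40]]]
weight = [[1, 0], [0, 1], [1, 1]]
bias = [0, 0, 1]
y = conv1x1(x, weight, bias)
print(y[2])  # [[12, 23], [34, 45]]
```

The height and width are untouched; only the channel dimension is mixed, and the same weights are shared by every pixel.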

The NiN architecture:

- No fully connected layers;

- NiN blocks alternate with max pooling layers of stride 2 (gradually reducing the height and width while increasing the number of channels);

- The output is obtained with a global average pooling layer (the number of channels entering it equals the number of classes);

For 1000-class classification, the last layer has 1000 channels, and global average pooling over each channel gives the confidence for the class that channel corresponds to.
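A small sketch of that last step, assuming three classes instead of 1000 and made-up feature map values; `global_avg_pool` is a hypothetical helper, not a library call.

```python
def global_avg_pool(feature_maps):
    """Average each channel's H x W map down to a single number."""
    return [sum(sum(row) for row in plane) / (len(plane) * len(plane[0]))
            for plane in feature_maps]


# Three channels = three classes, each a 2x2 map (made-up values).
maps = [[[0.0, 1.0], [1.0, 2.0]],
        [[4.0, 4.0], [2.0, 6.0]],
        [[1.0, 1.0], [1.0, 1.0]]]
scores = global_avg_pool(maps)
predicted = max(range(len(scores)), key=scores.__getitem__)
print(scores, predicted)  # [1.0, 4.0, 1.0] 1
```

The pooling step has no learnable parameters, which is why it is cheaper than a dense classifier head.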

Summary:

- A NiN block is one convolution layer plus two 1x1 convolution layers; the latter add nonlinearity at each pixel;

- Global average pooling replaces the fully connected layers of VGG and AlexNet, so there are fewer parameters and overfitting is less likely.

The hyperparameters follow AlexNet's, with some 1x1 convolutions added.
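As a rough sketch of how the height and width shrink, the trace below assumes a 224x224 input, AlexNet-style first convolutions per block (11x11 with stride 4, 5x5 with padding 2, 3x3 with padding 1) and 3x3 max pooling with stride 2. These exact settings are assumptions taken from a common NiN implementation, not from these notes.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output height/width of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


def nin_sizes(n=224):
    """Trace the spatial size through an assumed NiN layout."""
    sizes = []
    # (kernel, stride, padding) of the first convolution in each NiN block
    blocks = [(11, 4, 0), (5, 1, 2), (3, 1, 1)]
    for kernel, stride, padding in blocks:
        n = out_size(n, kernel, stride, padding)  # the 1x1 convs keep the size
        sizes.append(n)
        n = out_size(n, 3, 2)                     # 3x3 max pooling, stride 2
        sizes.append(n)
    n = out_size(n, 3, 1, 1)                      # last NiN block (class channels)
    sizes.append(n)
    sizes.append(1)                               # global average pooling
    return sizes


print(nin_sizes())  # [54, 26, 26, 12, 12, 5, 5, 1]
```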

GoogLeNet

How do we choose the best hyperparameters: the kernel size, the type of pooling, the number of channels?

Inception block: use every kind of convolution in parallel, then concatenate the results (height and width stay unchanged; the outputs are joined along the channel dimension).
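Because the paths are joined along the channel dimension, the output channel count is simply the sum over paths. The sketch below assumes the usual four-path layout (1x1; 1x1 then 3x3; 1x1 then 5x5; 3x3 max pooling then 1x1) and the channel numbers of the first Inception block in the original network; both are assumptions added for illustration.

```python
def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four parallel paths.

    c1: output channels of the 1x1 path
    c2: (reduce, out) channels of the 1x1 -> 3x3 path
    c3: (reduce, out) channels of the 1x1 -> 5x5 path
    c4: output channels of the 3x3 max-pool -> 1x1 path
    """
    # Only the final convolution of each path contributes to the output.
    return c1 + c2[1] + c3[1] + c4


print(inception_out_channels(64, (96, 128), (16, 32), 32))  # 256
```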

In the block diagram, the white blocks reduce the complexity of the model (that is, the parameter count) by changing the number of channels, while the blue blocks extract information.

Reducing the number of channels first and then increasing it has the feel of a bottleneck design.

Compared with a single 3x3 or 5x5 convolution, an Inception block has fewer parameters and a lower computational cost.
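A quick weight count illustrates the gap. This is a sketch under assumptions: 192 input channels, 256 output channels, the four-path layout (1x1; 1x1 then 3x3; 1x1 then 5x5; max pooling then 1x1) with the channel numbers of the first Inception block in the original network, and biases ignored.

```python
def conv_weights(c_in, c_out, k):
    """Weight count of a k x k convolution, biases ignored."""
    return c_in * c_out * k * k


def inception_weights(c_in, c1, c2, c3, c4):
    """Weight count of a four-path Inception block, biases ignored."""
    p1 = conv_weights(c_in, c1, 1)
    p2 = conv_weights(c_in, c2[0], 1) + conv_weights(c2[0], c2[1], 3)
    p3 = conv_weights(c_in, c3[0], 1) + conv_weights(c3[0], c3[1], 5)
    p4 = conv_weights(c_in, c4, 1)  # the max pooling itself has no weights
    return p1 + p2 + p3 + p4


block = inception_weights(192, 64, (96, 128), (16, 32), 32)
single_3x3 = conv_weights(192, 256, 3)
single_5x5 = conv_weights(192, 256, 5)
print(block, single_3x3, single_5x5)  # 163328 442368 1228800
```

With these assumed sizes the block needs roughly a third of the weights of a single 3x3 convolution and about an eighth of a single 5x5 one, mostly because the 1x1 convolutions shrink the channel count before the larger kernels are applied.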

At the same time, the Inception block increases the diversity of the information that is learned.

Stage 1 and Stage 2 are consistent with VGG. GoogLeNet borrows heavily from NiN and uses 1x1 convolutions extensively to reduce the parameter count.

Compared with AlexNet, the convolution kernels in GoogLeNet are relatively small, so spatial information is not compressed as quickly, which supports learning when the number of channels grows in later layers.

At the same time, I think compressing spatial information is an unavoidable compromise when the number of channels increases; its purpose is to keep the parameter count down.

In the third stage the number of channels keeps increasing, but each Inception block has different settings. It is worth noting that the 3x3 convolution is always allocated the most channels, because its parameter count is modest and it extracts information reasonably well.

The Inception block has many later variants: V2 added batch normalization (BN), V3 modified the convolution sizes, and V4 added residual connections.

边栏推荐

- 计组笔记——CPU的指令流水

- Cliquez sur le bouton action de la liste pour passer à une autre page de menu et activer le menu correspondant

- Xiaomi TV's web page and jewelry's web page

- On virtual memory and oom in project development

- progress bar

- 如何筹备一场感人的婚礼

- 虫子 构造与析构

- Good news | congratulations on the addition of 5 new committers in Apache linkage (incubating) community

- 2022年挖财证券开户安全嘛?

- golang正则regexp包使用-06-其他用法(特殊字符转换、查找正则共同前缀、切换贪婪模式、查询正则分组个数、查询正则分组名称、用正则切割、查询正则字串)

猜你喜欢

XGBoost, lightGBM, CatBoost——尝试站在巨人的肩膀上

2021-08-04

Tupu software is the digital twin of offshore wind power, striving to be the first

Partition, column, list

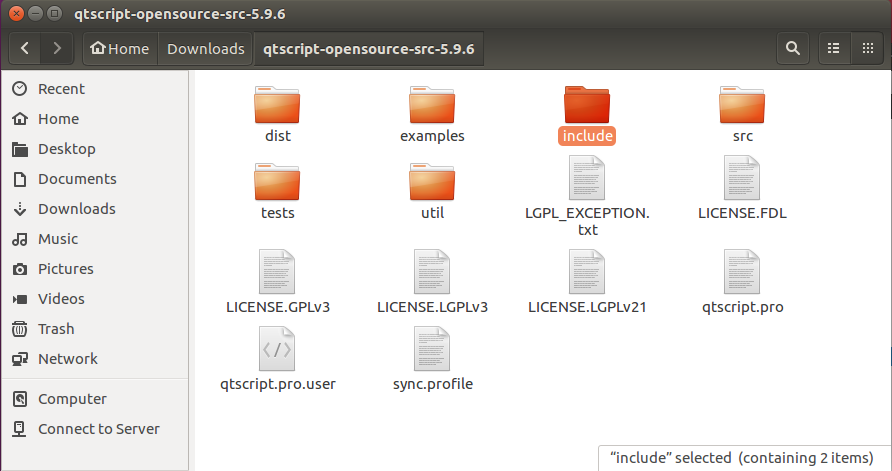

QT compilation error: unknown module (s) in qt: script

经典模型——ResNet

Une citation classique de la nature humaine que vous ne pouvez pas ignorer

项目部署遇到的问题-生产环境

Using meta analysis to drive the development of educational robot

ArrayList#subList这四个坑,一不小心就中招

随机推荐

国信金太阳靠谱吗?开证券账户安全吗?

云计算基础-0

用元分析法驱动教育机器人的发展

个人用同花顺软件买股票安全吗?怎么炒股买股票呢

Partition, column, list

Wealth freedom skills: commercialize yourself

Inkscape如何将png图片转换为svg图片并且不失真

Drawing structure diagram with idea

【论文笔记】Deep Reinforcement Learning Control of Hand-Eye Coordination with a Software Retina

P2483-[模板]k短路/[SDOI2010]魔法猪学院【主席树,堆】

Classic quotations from "human nature you must not know"

How Inkscape converts PNG pictures to SVG pictures without distortion

USB peripheral driver - Enumeration

Worm copy construction operator overload

【读点论文】FBNetV3: Joint Architecture-Recipe Search using Predictor Pretraining 网络结构和超参数全当训练参数给训练了

数字孪生智慧水务,突破海绵城市发展困境

培育项目式Steam教育理念下的儿童创造力

Une citation classique de la nature humaine que vous ne pouvez pas ignorer

Leetcode 175 Combine two tables (2022.06.24)

kitti2bag 安装出现的各种错误