当前位置:网站首页>Selenium in the crawler realizes automatic collection of CSDN bloggers' articles

Selenium in the crawler realizes automatic collection of CSDN bloggers' articles

2022-07-23 14:57:00 【Black horse Lanxi】

Share daily :

No flower , From the beginning, it was flowers

Catalog

One 、 Something to watch out for

1. Each time it slides, it will slide twice when it is loaded

2. get Judge whether you have clicked the collection before the website

Preface ( Thinking process ):

I have written brush likes before , Brush comments , Brush reading , Recently, brush collection has also been realized , Write an article to record , Feeling csdn I'm almost ruined by myself ( Manual formation )

At first, my idea was , First put all the articles of the blogger url Climb down , Put it in txt file , And then use selenium Control the browser for each URL ( article ) Click collect .

How to crawl the website of all articles ?

I looked at , This is crawling the data of the whole station , No hesitation , Direct use crawlspider The framework is designed to deal with the whole station data , At first, I still want to follow the previous idea , Change the homepage to the old version , But I can't find , You can only think of a new version of the home page ( The old version of the article is displayed in pages , The new version is displayed every time 20 individual , Sliding down will show 20 individual ...). Then I found that there was a classified column in the blogger's homepage , Can be in crawlspider Write three rule extractors , The first one is to extract the URL of each column on the homepage , Then extract the URL of each page for each column , Finally, extract the URL of each article from the URL of each page . Then there will be an accident if there is no accident

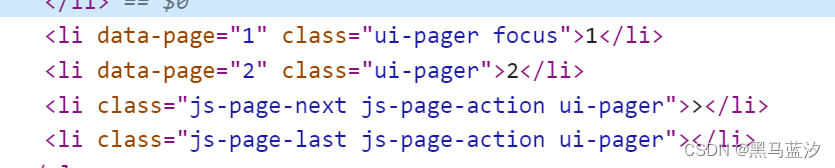

This is the label of each page , ah ? Why is there no website here ?( This is how I use regular matching ......) It's just , Then use selenium To achieve it. :

I want to , use selenium Control the browser to slide down , To make it load all the articles , After that xpath Get the website of all articles .( Realized , Next we will put the code )

Then it comes to the next question , Control the browser to click favorites

It's not difficult to realize click operation , But there's a problem , Each time selenium The controlled browser is not logged in , I think , Isn't that easy , I carry it directly cookie Don't we just solve it ? After that, I added cookie, But it doesn't work ( I didn't know why , Now I feel that it should be detected ); Since you add cookie no way , Then I'll use selenium Control to enter the account and password to log in , The result was another accident , Click operation is not difficult , You log in to it many times and let you slide the slider to verify , It's not hard , After sliding, he said failure , Let slide again , It is estimated that it was detected , I have tried many things online selenium Methods of avoiding detection , Doesn't work ( At first, I felt that maybe my code slider didn't slide well , Just optimize it, but it still doesn't work , Why do I think it was detected , Because I'm here selenium I tried to verify in the open browser , Manual sliding also failed , Then you open the browser manually , Login is no problem ), I've been stuck here for a long time , Later, I used Take over the browser Solved the problem ( Take over the browser and log in , Did not solve the detected problem ).

Change your mind , Use take over browser , You can log in manually , You can always use this browser in the code , Will also remain logged in , And then get Each website , Click collect ( Actually before that , My idea is to use selenium Log in once 【 Because I found , A span , Your first login will not allow you to perform sliding verification 】, Then click on the article , Click collect , Close the current tab , Click on the next article , Combined with the sliding operation , But there is something wrong with the code , Later, I came into contact with the method of taking over , Gave up ; How to put it? , This kind of thinking may not be very good , If the height of each article on the homepage is different , There may be problems later , That is, you can't click , But this is also a way of thinking )

One 、 Something to watch out for

1. Each time it slides, it will slide twice when it is loaded

Sometimes, a slide will fail to load , As a result, data cannot be crawled

The following is the code to get the website :

from selenium import webdriver

import time

url = " The address of the home page "

options = webdriver.ChromeOptions()

# # The configuration object adds a command to open the browser without interface

options.add_argument('--headless')

driver = webdriver.Chrome()

driver.get(url)

time.sleep(4)

# The number of cycles depends on the total number of articles , once 20, Calculate it by yourself

for i in range(58):

# Don't directly execute js Slide to the bottom every time , Will make mistakes , Sometimes the page does not update new articles , So I let it slide twice , Make sure to refresh

driver.execute_script("window.scrollTo(0,document.body.scrollHeight / 2)")

driver.execute_script("window.scrollTo(document.body.scrollHeight / 2,document.body.scrollHeight)")

time.sleep(1)

# Get the URL of each article ( It's not exactly a website , below get_attribute Is the website )

url_list = driver.find_elements('xpath', '//article[@class="blog-list-box"]/a')

f = open('urls.txt', 'a+', encoding='utf8')

for i in url_list:

i = i.get_attribute('href')

print(i)

f.write(i + '\n')

f.close()

driver.close()

2. get Judge whether you have clicked the collection before the website

At first, there was no judgment , After running for a while , It will go to the text that has been clicked and then click ( It will cause the collection to be cancelled ), I still don't know why , In short, it's right to add

The following is the code of click collection :

from selenium.webdriver import Chrome

from selenium import webdriver

import time

from selenium.webdriver.chrome.options import Options

# Take over the browser , send out get

def get_chrome_proxy(url):

options = Options()

options.add_experimental_option("debuggerAddress", "127.0.0.1: Port number ")

driver = Chrome(options=options)

driver.get(url)

driver.implicitly_wait(15)

return driver

# Achieve click operation

def manage(url):

try:

driver = get_chrome_proxy(url)

# Click collect

driver.find_element('xpath', '//*[@id="toolBarBox"]/div/div[2]/ul/li[4]').click()

time.sleep(2)

# Click favorites in the default folder

driver.find_element('xpath', '//*[@id="csdn-collection"]/div/div[2]/ul/li/span[2]').click()

except Exception as e:

print()

if __name__ == '__main__':

# Put the visited ones in the list

finish = []

with open('urls.txt', 'r') as f:

urls = f.readlines()

# Judge whether you have visited

for i in urls:

if i not in finish:

manage(i)

finish.append(i)But the efficiency is really a little low

边栏推荐

- Program design of dot matrix Chinese character display of basic 51 single chip microcomputer

- C thread lock and single multithreading are simple to use

- CSDN写文方法(二)

- Regular verification of ID number

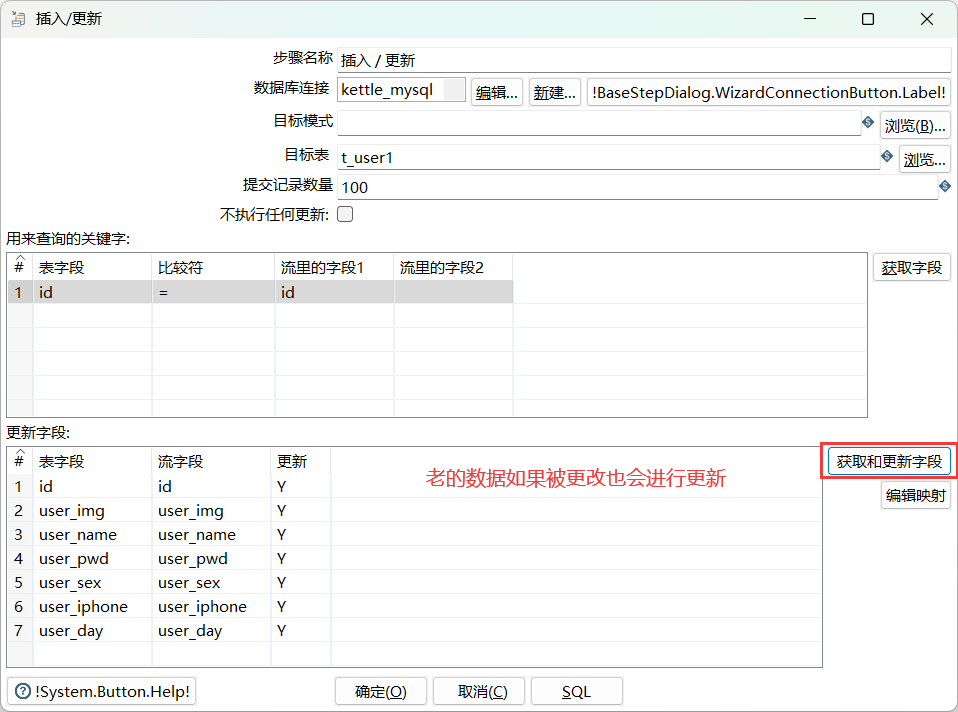

- Kettle實現共享數據庫連接及插入更新組件實例

- 【无标题】

- @FeignClient使用详细教程(图解)

- [software test] redis abnormal test encountered in disk-to-disk work

- [转]基于POI的功能区划分()

- Redis | 非常重要的中间件

猜你喜欢

Redis | 非常重要的中间件

@FeignClient使用詳細教程(圖解)

Kettle实现共享数据库连接及插入更新组件实例

Postgresql快照优化Globalvis新体系分析(性能大幅增强)

【小程序自动化Minium】三、元素定位- WXSS 选择器的使用

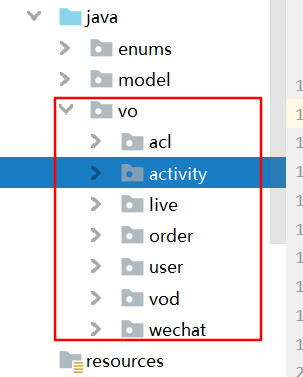

Live classroom system 03 supplement model class and entity

numpy和pytorch的版本对应关系

FastAPI应用加入Nacos

什么是Per-Title编码?

Yunna - how to strengthen fixed asset management? How to strengthen the management of fixed assets?

随机推荐

Kettle implémente une connexion de base de données partagée et insère une instance de composant de mise à jour

【测试平台开发】二十、完成编辑页发送接口请求功能

基于nextcloud构建个人网盘

Mathematical function of MySQL function summary

LeetCode-227-基本计算器||

js拖拽元素

4. Find the median of two positive arrays

Generate order number

Advanced operation and maintenance 03

Design and implementation of websocket universal packaging

What is per title encoding?

【测试平台开发】十七、接口编辑页面实现下拉级联选择,绑定接口所属模块...

String function of MySQL function summary

Quick introduction to PKI system

Regular expression common syntax parsing

The win11 installation system prompts that VirtualBox is incompatible and needs to uninstall the solution of virtual, but the uninstall list cannot find the solution of virtual

linux定时备份数据库脚本

dataframe.groupby学习资料

Yunna | how to manage the fixed assets of the company? How to manage the company's fixed assets better?

Work notes: one time bag grabbing