
Towhee weekly model

2022-07-23 14:32:00 【Zilliz Planet】

Produced by: the Towhee technical team

This week we share 5 video-related AI models:

MoViNets, a lightweight and easy-to-use family of video action recognition models; CLIP4Clip, which enables cross-modal search between text and video; DRL, a video retrieval model that outperforms CLIP4Clip; Frozen in Time, which breaks free of the limits of video data; and MDMMT, an upgrade of the champion model MMT.

If you find this content useful, please give us some free encouragement: a thumbs-up, a like, or a share with your friends.

The MoViNets model family: a good helper for classifying video in real time on mobile phones

Need video understanding, but the model is too heavy and inference takes too long? Lightweight action recognition models have been upgraded again. The MoViNets family, proposed by Google Research in 2021, runs inference on streaming video more efficiently and supports classifying video streams captured on mobile devices. MoViNets achieve state-of-the-art accuracy and efficiency on the general video action recognition datasets Kinetics, Moments in Time, and Charades, demonstrating their efficiency and broad applicability.

MoViNets: Streaming Evaluation vs. Multi-Clip Evaluation

MoViNets are a family of convolutional neural networks that draw on both 2D and 3D video classifiers, keeping the key strengths of each while mitigating their respective limitations. The models obtain rich, efficient video network architectures through neural architecture search, introduce a stream buffer technique so that 3D convolutions can accept streaming video sequences of arbitrary length, and then simply ensemble multiple models to improve accuracy. The result is an effective balance among computation, memory overhead, and accuracy.
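The stream buffer idea can be illustrated with a minimal sketch. It assumes a one-dimensional causal temporal convolution over scalar per-frame features, which is a simplification of the 3D convolutions in the paper; the function names `causal_conv` and `stream` are hypothetical and not part of MoViNets or Towhee. The buffer carries the last few frames of one clip into the next, so processing a stream clip by clip gives the same output as processing the whole sequence at once.

```python
def causal_conv(frames, kernel, buffer=None):
    """Causal temporal convolution over one clip.

    frames: per-frame scalar features for this clip
    kernel: temporal weights, oldest frame first
    buffer: the last len(kernel) - 1 frames of the previous clip
            (zeros at the start of the stream)
    Returns (outputs, new_buffer).
    """
    k = len(kernel)
    if buffer is None:
        buffer = [0.0] * (k - 1)
    padded = list(buffer) + list(frames)
    outputs = [
        sum(w * x for w, x in zip(kernel, padded[t:t + k]))
        for t in range(len(frames))
    ]
    # Keep only what the next clip needs, so memory stays constant.
    new_buffer = padded[len(padded) - (k - 1):]
    return outputs, new_buffer


def stream(frames, kernel, clip_len):
    """Process a long sequence clip by clip, passing the buffer along."""
    buffer, outputs = None, []
    for start in range(0, len(frames), clip_len):
        clip_out, buffer = causal_conv(frames[start:start + clip_len], kernel, buffer)
        outputs.extend(clip_out)
    return outputs


frames = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
kernel = [0.25, 0.5, 0.25]
full, _ = causal_conv(frames, kernel)
streamed = stream(frames, kernel, clip_len=3)
print(streamed == full)
```

Because only `len(kernel) - 1` frames are kept between clips, memory use does not grow with the length of the stream, which is the property that makes this approach attractive on mobile devices.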

Related information:

Model use case: action-classification/movinet

Paper: MoViNets: Mobile Video Networks for Efficient Video Recognition

More information: MoViNets: Make real-time video understanding a reality

The multimodal model CLIP4Clip lets you search between text and video in both directions

CLIP4Clip builds on the cross-modal image-text model CLIP and successfully handles text-to-video and video-to-text retrieval. Whether you want to find relevant videos from a text query or automatically match a video with its most fitting description, CLIP4Clip can help. Through extensive ablation experiments, CLIP4Clip demonstrated its effectiveness and achieved SoTA results on text-video datasets such as MSR-VTT, MSVD, LSMDC, ActivityNet, and DiDeMo.

CLIP4Clip: Main Structure

CLIP4Clip starts from a pre-trained image-text model and tackles video retrieval through transfer learning or fine-tuning. It uses the pre-trained CLIP model as its backbone, approaches video clip retrieval from frame-level input, and uses parameter-free, sequential, and tight similarity calculators to produce the final result.
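As an illustration of the simplest of these, the parameter-free calculator, here is a minimal sketch. It assumes the frame and text embeddings have already been produced by a CLIP-like encoder and are non-zero vectors; the function names are hypothetical and this is not the CLIP4Clip codebase. The frame embeddings are mean-pooled into one video vector, which is then compared with the text vector by cosine similarity.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def mean_pool(frame_embeddings):
    """Average the per-frame embeddings into a single video embedding."""
    n = len(frame_embeddings)
    return [sum(column) / n for column in zip(*frame_embeddings)]


def parameter_free_similarity(text_embedding, frame_embeddings):
    """Cosine similarity between the text and the mean-pooled video."""
    video = l2_normalize(mean_pool(frame_embeddings))
    text = l2_normalize(text_embedding)
    return sum(t * v for t, v in zip(text, video))


def rank_videos(text_embedding, videos):
    """Return video ids sorted from most to least similar to the text."""
    return sorted(
        videos,
        key=lambda vid: parameter_free_similarity(text_embedding, videos[vid]),
        reverse=True,
    )


# Toy 2-dimensional embeddings standing in for real encoder outputs.
query = [1.0, 0.0]
videos = {
    "a": [[0.0, 1.0], [0.0, 1.0]],
    "b": [[1.0, 0.0], [1.0, 0.2]],
}
print(rank_videos(query, videos))
```

No new parameters are learned in this variant, which is why it is a natural baseline; the sequential and tight variants add learned modules on top of the frame sequence.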

Related information:

Model use case: video-text-embedding/clip4clip

Paper: CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval

More information: CLIP4Clip: CLIP takes another city, using CLIP for video retrieval

Better text-video interaction: DRL's disentangled framework improves on CLIP4Clip

Although CLIP4Clip achieves cross-modal text/video retrieval, its network structure still has limitations and room for improvement. So in early 2022, DRL (Disentangled Representation Learning) arrived to match content at different granularities across modalities. On video retrieval, the improved model substantially raises accuracy on the major text-video datasets.

Overview of DRL for Text-Video Retrieval

When computing the similarity between text and video, CLIP4Clip considers only the overall representations of the two modalities and lacks fine-grained interaction. For example, when the text description corresponds to only some of the video's frames, extracting a single overall feature for the video lets information from the other frames interfere with and mislead the model. DRL proposes two important improvements over CLIP4Clip. The first is Weighted Token-wise Interaction, which predicts similarity densely and uses a max operation to find potentially active tokens. The second is Channel Decorrelation Regularization, which reduces redundancy and competition between channels, using the covariance matrix to measure channel redundancy.
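Both ideas can be sketched in a few lines. The code below is a simplified illustration under stated assumptions, not the paper's implementation: the token and frame weights are passed in (uniform by default) rather than learned, and `channel_redundancy` is a stand-in that sums squared off-diagonal covariances within one set of features, whereas the paper defines its own regularization loss. All function names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_token_wise_interaction(text_tokens, video_frames,
                                    text_weights=None, frame_weights=None):
    """Token-wise similarity: each token keeps its best match (max), then
    the matches are combined with per-token weights in both directions."""
    if text_weights is None:
        text_weights = [1.0 / len(text_tokens)] * len(text_tokens)
    if frame_weights is None:
        frame_weights = [1.0 / len(video_frames)] * len(video_frames)
    # sim[i][j] is the similarity between text token i and video frame j.
    sim = [[dot(t, f) for f in video_frames] for t in text_tokens]
    text_to_video = sum(w * max(row) for w, row in zip(text_weights, sim))
    video_to_text = sum(w * max(col) for w, col in zip(frame_weights, zip(*sim)))
    return (text_to_video + video_to_text) / 2


def channel_redundancy(features):
    """Sum of squared off-diagonal covariances between channels.

    features: one row per sample, one column per channel.
    A value of 0 means the channels are uncorrelated on this batch.
    """
    n = len(features)
    means = [sum(column) / n for column in zip(*features)]
    centered = [[x - m for x, m in zip(row, means)] for row in features]
    channels = list(zip(*centered))
    total = 0.0
    for i, channel_i in enumerate(channels):
        for j, channel_j in enumerate(channels):
            if i != j:
                total += (dot(channel_i, channel_j) / n) ** 2
    return total


# One text token that matches only the first of two frames.
score = weighted_token_wise_interaction([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]])
print(score)
print(channel_redundancy([[1.0, 1.0], [-1.0, -1.0]]))
```

In the toy example the text token matches the first frame perfectly, and the max operation lets that match count fully in the text-to-video direction instead of being averaged away by the unrelated second frame.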

Related information:

Model use case: video-text-embedding/drl

Paper: Disentangled Representation Learning for Text-Video Retrieval

More information: Video multimodal pre-training / retrieval models

Treating images as video snapshots, Frozen in Time breaks free of the data limits of multimodal video retrieval

Frozen in Time, published by the University of Oxford at ICCV 2021, makes flexible use of text/image and text/video datasets and provides an end-to-end joint video-image encoder. The model modifies and extends the recent ViT and TimeSformer architectures and includes attention over both space and time.

Frozen in Time: Joint Image and Video Training

Frozen in Time can be trained on text-image and text-video datasets, either separately or together. When training on images, the model treats each image as a frozen snapshot of a video and gradually learns temporal context over the course of training. The authors also provide a new video-text pre-training dataset, WebVid-2M, which contains more than 2 million videos. Although the amount of training data is an order of magnitude smaller than that of other common datasets, experiments show that a model pre-trained on it achieves SOTA results on standard downstream video retrieval benchmarks (including MSR-VTT, MSVD, DiDeMo, and LSMDC).
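A minimal sketch of the "image as a one-frame video" idea follows. It assumes each frame is already a small feature vector and that a table of temporal embeddings exists with hypothetical values; the names `as_clip` and `add_temporal_embeddings` are illustrative and not taken from the paper's code. An image goes through the same path as a video and simply uses only the first temporal position.

```python
def as_clip(sample):
    """Return the sample as a list of frames; an image becomes a one-frame clip."""
    if sample["kind"] == "image":
        return [sample["data"]]
    return list(sample["data"])


def add_temporal_embeddings(clip, temporal_table):
    """Add the embedding for time step t to frame t, element-wise."""
    if len(clip) > len(temporal_table):
        raise ValueError("clip is longer than the temporal embedding table")
    return [
        [x + e for x, e in zip(frame, temporal_table[t])]
        for t, frame in enumerate(clip)
    ]


# Hypothetical learned values, one row per time step.
temporal_table = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]

image = {"kind": "image", "data": [1.0, 2.0]}
video = {"kind": "video", "data": [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]}

image_tokens = add_temporal_embeddings(as_clip(image), temporal_table)
video_tokens = add_temporal_embeddings(as_clip(video), temporal_table)
print(len(image_tokens), len(video_tokens))
```

Since both kinds of sample pass through one encoder path, image-text and video-text datasets can be mixed in training, and longer clips can be introduced later without changing the model.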

Related information:

Model use case: video-text-embedding/frozen-in-time

Paper: Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval

From MMT to MDMMT: comprehensively optimizing text-video retrieval

MDMMT, published in 2021, is an extension of MMT (published at ECCV 2020), the champion of the CVPR Video Pentathlon challenge the year before last. The work experiments with and optimizes the training datasets, and continues to lead the field in text-video retrieval.

MMT: Cross-modal Framework

MMT extracts and integrates video features, including visual features, audio features, and features of the text transcribed from the speech. First, pre-trained expert networks extract features for each of the three modalities. Then, for each modality, maxpool is applied to the features to produce an aggregated feature. The aggregated feature is concatenated with that modality's feature sequence, and the resulting groups for the different modalities are concatenated together. The model also learns a modality embedding for each modality and an embedding for each frame position, which add modality and frame-index information to every feature. MDMMT uses the same loss function as MMT and a similar architecture, but with optimized hyperparameters.
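The aggregation steps described above can be sketched as follows. This is an illustration under stated assumptions rather than the MMT implementation: the expert features, modality embeddings, and position embeddings are small hand-written lists, position 0 is reserved for the aggregated feature as a simplification, and the names `max_pool` and `build_video_tokens` are hypothetical.

```python
def max_pool(sequence):
    """Element-wise maximum over a sequence of feature vectors."""
    return [max(column) for column in zip(*sequence)]


def build_video_tokens(expert_features, modality_embeddings, position_embeddings):
    """Build the token sequence fed to the video encoder.

    For each modality: prepend the max-pooled (aggregated) feature to the
    feature sequence, then add the modality embedding and the position
    embedding to every feature. Modalities are concatenated in order.
    """
    tokens = []
    for name, sequence in expert_features.items():
        rows = [max_pool(sequence)] + list(sequence)
        for position, feature in enumerate(rows):
            tokens.append([
                f + m + p
                for f, m, p in zip(feature, modality_embeddings[name],
                                   position_embeddings[position])
            ])
    return tokens


# Toy 2-dimensional features standing in for pre-trained expert outputs.
expert_features = {
    "image": [[1, 5], [3, 2]],
    "audio": [[0, 1]],
}
modality_embeddings = {"image": [10, 10], "audio": [20, 20]}
position_embeddings = [[0, 0], [1, 1], [2, 2]]  # row 0 is the aggregated slot

tokens = build_video_tokens(expert_features, modality_embeddings, position_embeddings)
print(tokens)
```

Adding the embeddings rather than concatenating them keeps every token the same width, so features from different experts can share one transformer input sequence.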

Related information:

Model use case: video-text-embedding/mdmmt

Papers: MDMMT: Multidomain Multimodal Transformer for Video Retrieval; Multi-modal Transformer for Video Retrieval

More information: Video multimodal pre-training / retrieval models

For more project updates and details, please follow our project (https://github.com/towhee-io/towhee/blob/main/towhee/models/README_CN.md). Your attention is what keeps this labor of love going. Stars, forks, and joining us on Slack are all welcome :)

zilliz User communication

边栏推荐

- 云呐|公司固定资产如何管理?公司固定资产如何管理比较好?

- Renforcement de l'apprentissage - points de compréhension du gradient stratégique

- Aruba学习笔记05-配置架构- WLAN配置架构

- wacom固件更新错误123,数位板驱动更新不了

- STM32 outputs SPWM wave, Hal library, cubemx configuration, and outputs 1kHz sine wave after filtering

- -bash: ifconfig: command not found

- FFmpeg 2 - ffplay、ffprobe、ffmpeg 命令使用

- 链下数据互操作

- 第三章 复杂一点的查询

- C language implements memcpy and memmove

猜你喜欢

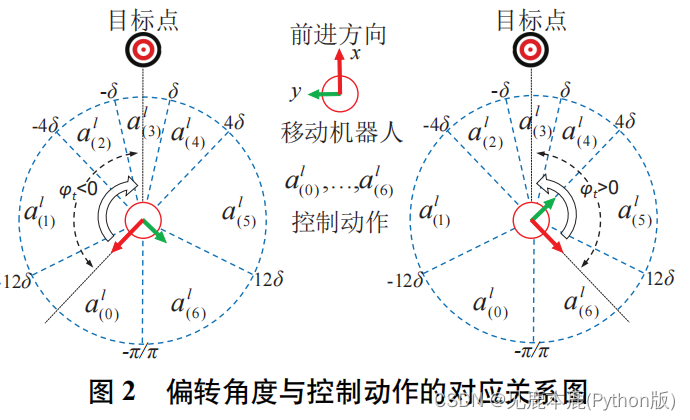

【论文笔记】基于分层深度强化学习的移动机器人导航方法

494. 目标和

链下数据互操作

JS calendar style pie chart statistics plug-in

![[download attached] several scripts commonly used in penetration testing that are worth collecting](/img/01/3b74c5ab4168059827230578753be5.png)

[download attached] several scripts commonly used in penetration testing that are worth collecting

![webstrom ERROR in [eslint] ESLint is not a constructor](/img/e9/b084512d6aa8c4116d7068fdc8fc05.png)

webstrom ERROR in [eslint] ESLint is not a constructor

Tensor, numpy, PIL format conversion and image display

数据库连接池 & DBUtils

10 years of software testing engineer experience, very confused

152. 乘积最大子数组

随机推荐

10年软件测试工程师经验,很茫然....

剑指offer19 正则表达式

云呐|怎样管理固定资产?如何进行固定资产管理?

Find the maximum area of the island -- depth first search (staining method)

完全背包!

基于EFR32MG24的AI 加速度姿势识别体验

-bash: ifconfig: command not found

手机股票开户风险性大吗,安全吗?

【论文笔记】基于分层深度强化学习的移动机器人导航方法

股票炒股开户风险性大吗,安全吗?

Is it risky and safe to open an account for stock speculation?

Fabric. JS basic brush

VK36N5D抗电源干扰/手机干扰 5键5通道触摸检测芯片防呆功能触摸区域积水仍可操作

STM32 outputs SPWM wave, Hal library, cubemx configuration, and outputs 1kHz sine wave after filtering

单调栈!!!

云呐-如何加强固定资产管理?怎么加强固定资产管理?

拖拽----

koa框架的使用

JS to obtain age through ID card

ValidationError: Invalid options object. Dev Server has been initialized using an options object th