当前位置:网站首页>Two ways to improve the writing efficiency of hard disk storage data

Two ways to improve the writing efficiency of hard disk storage data

2022-06-23 03:51:00 【Sojson Online】

There are many ways to store data today , The hard disk has the advantages of price and data protection , It is the first choice of most users . however , Compared with memory, hard disk is IO Reading and writing are several orders of magnitude slower , Then why prefer hard disk ?

The first thing to mention is , The reason why the operation of the disk is slow is mainly because the reading and writing of the disk takes time . Reading and writing mainly takes three parts : Seek time + Rotation time + Transmission time , Among them, the seeking time is the longest . Because seek needs to move the head to the corresponding track , The magnetic arm is driven by a motor to move , It is a mechanical movement, so it takes a long time . At the same time, our operations on the disk are usually random read-write , It is necessary to frequently move the head to the corresponding track , This prolongs the time , The performance is relatively low .

In this way, if you want to make the disk read and write faster , As long as you don't use random reading and writing , Or reduce the number of random , You can effectively improve the disk read and write speed . How to operate it ?

Sequential reading and writing

Let's talk about the first method , How to use sequential reading and writing , Not random reading and writing ? As mentioned above, the seek time is the longest , So the most intuitive idea is to save this part of time , And the order IO Just enough to meet the needs .

Append writing is a typical order IO, The typical product optimized with this idea is message queue . With popular Kafka For example ,Kafka For high performance IO, Many optimization methods are used , The optimization method of sequential writing is used .

Kafka Time complexity is O(1) To provide message persistence , Even for TB Data above level 1 can also guarantee the access performance of constant time complexity . For each partition , It takes the Producer Messages received , Write the corresponding... In sequence log In file , When a file is full , Just open a new file . Consumption time , It also starts from a global position , That is, some one log Start somewhere in the file , Read out messages in sequence .

Reduce the number of random

After reading the sequence , Let's look at ways to reduce the number of random writes . In many scenarios , In order to facilitate our subsequent data reading and operation , We require that the data written to the hard disk is orderly . For example MySQL in , Index in InnoDB In the engine, there is B+ Organized in a tree way , and MySQL The primary key is a clustered index ( An index type , Data and index data are put together ), Since the data and index data are put together , So when data is inserted or updated , We need to find where to insert , Then write the data to a specific location , This produces random IO. therefore , If every time we insert 、更 New data is written to .ibd Word of the file , Then the disk also needs to find the corresponding record , Then update , The whole process IO cost 、 Search costs are high , The performance and efficiency of the database will be greatly reduced .

by 了 Address write performance issues ,InnoDB introduce 了 WAL Mechanism ,更 To be exact , Namely redo log. Let me give you a brief introduction Redo Log.

InnoDB redo log Is a sequential write 、 Fixed size circular log . There are two main functions :

- Improve InnoDB The efficiency of the storage engine in writing data

- Guarantee crash-safe Ability

Here we only care about how it improves the efficiency of writing data . The picture below is redo log Schematic diagram .

As you can see from the diagram ,red olog The writing of is sequential ,不 Need to find a specific index location , But simply from write-pos The pointer position is appended .

Second, when one writes a transaction or 更 New transaction execution 行 when ,InnoDB First take out the corresponding Page, Then enter 行 modify . When the transaction commits , Will be located in memory redo log buffer Force refresh to hard disk , If 不 consider binlog Words , We can think of things as 行 Can return success 了, write in DB The operation of is carried out asynchronously by another thread 行.

And then , May by InnoDB Of Master Thread Regularly remove the dirty pages in the buffer pool , That is, the page we modified above , Refresh to disk , At this time, the modified data is really written to . ibd file .

summary

Article from Cloud again contribute

边栏推荐

- [JS reverse hundreds of cases] the login of a HN service network is reverse, and the verification code is null and void

- 如何处理大体积 XLSX/CSV/TXT 文件?

- LRU cache

- Flink practice tutorial: advanced 7- basic operation and maintenance

- 【LeetCode】179. Maximum number

- 数据交易怎样实现

- Centos7 installing MySQL and configuring InnoDB_ ruby

- RTOS system selection for charging point software design

- D overloading nested functions

- Swiftui component encyclopedia creating animated 3D card scrolling effects using Scrollview and geometryreader

猜你喜欢

Full analysis of embedded software testing tool tpt18 update

1058 multiple choice questions (20 points)

Svn local computer storage configuration

第一批00后下场求职:不要误读他们的“不一样”

直接插入排序

One of the touchdesigner uses - Download and install

Insérer le tri directement

【曾书格激光SLAM笔记】Gmapping基于滤波器的SLAM

Centos7 installing MySQL and configuring InnoDB_ ruby

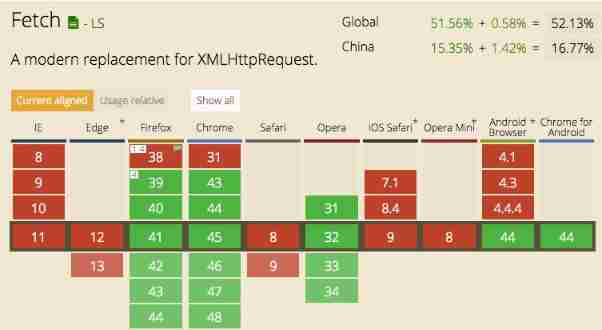

Fetch request details

随机推荐

纳瓦尔宝典:不靠运气致富的原则

[OWT] OWT client native P2P E2E test vs2017 build 2: test unit construction and operation

LRU cache

MySQL common instructions

How to get started with apiccloud app and multi terminal development of applet based on zero Foundation

How to realize data transaction

Downloading sqlserver versions (2016-2019)

Parsing the implementation of easygbs compatible token as parameter passing

What is the difference between ArrayList and LinkedList?

直接插入排序

[greed] leetcode991 Broken Calculator

TRTC zero foundation -- Video subscription on the code

[tcapulusdb knowledge base] [list table] sample code of asynchronous scanning data

Hierarchical attention graph convolution network for interpretable recommendation based on knowledge graph

Easygbs service is killed because the user redis is infected with the mining virus process. How to solve and prevent it?

How to save the model obtained from sklearn training? Just read this one

Navar's Treasure Book: the principle of getting rich without luck

如何处理大体积 XLSX/CSV/TXT 文件?

What is the digital "true" twin? At last someone made it clear!

For patch rollback, please check the cbpersistent log