
Small Experiments in Machine Learning (10): Building a CNN/FNN Test Environment with Qt and TensorFlow

2022-07-24 10:27:00 (author: 丁劲犇)

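After a long break, we return to machine learning. The previous article in this series dates from 2017; in the five years since, people's view of machine learning has become much more sober. Here we build on the results of the earlier installments and adapt them.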

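TensorFlow 2.x is now the standard tool. Our goals are to extend the capabilities of the incremental-learning network and to migrate the API to TF 2.x, while keeping the network parameters configurable. The network is defined in an INI file, and a Qt GUI drives training, evaluation, and prediction. The complete project is at https://gitcode.net/coloreaglestdio/qtfnn_kits and has been tested on Linux. A Windows Anaconda environment also works, although configuring CUDA there is somewhat tedious.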

1. Customizing the CNN model

We want a general-purpose model that covers basic CNN applications, so that its scale can be changed just by adjusting a few simple parameters.

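Such a model takes three inputs: scalar features, a 1D signal processed by a 1D CNN, and a 2D image processed by a 2D CNN. The flattened outputs of both CNNs are concatenated with the scalar features and fed into a fully connected network (FNN).

The input dimensions and sizes, the size of each convolutional stage, the pooling ratios, and the FNN size are all adjustable.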

2. Parameters and INI configuration

These network parameters can be configured through a GUI. The main configuration file defines the structure of the network, for example:

```ini
[cnn1d]
channels=3
filter_height="11,7,5"
filters="5,5,5"
height=220
pool_rates="2,2,2"

[cnn2d]
channels=3
filter_height="11,7,5"
filter_width="5,4,3"
filters="16,8,5"
height=180
pool_rates_height="2,2,3"
pool_rates_width="2,2,2"
width=40

[fnn]
hidden_layer_size="256,256"
lambda=1e-10
output_nodes=20
scalar_n=1

[performance]
file_deal_times=100
iterate_times=10
train_step=128
trunk=256
```
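The project reads this file in its own code. Purely as an illustration, the sketch below parses the network sections with Python's standard `configparser`, assuming that list values are comma-separated integers wrapped in double quotes exactly as above. `load_network_config` and `parse_list` are hypothetical names, not functions from the project, and the `[performance]` section is left out for brevity.

```python
import configparser

# Network sections of the sample INI shown above ([performance] omitted).
SAMPLE_INI = """
[cnn1d]
channels=3
filter_height="11,7,5"
filters="5,5,5"
height=220
pool_rates="2,2,2"

[cnn2d]
channels=3
filter_height="11,7,5"
filter_width="5,4,3"
filters="16,8,5"
height=180
pool_rates_height="2,2,3"
pool_rates_width="2,2,2"
width=40

[fnn]
hidden_layer_size="256,256"
lambda=1e-10
output_nodes=20
scalar_n=1
"""


def parse_list(value):
    """Convert a quoted comma list such as '"11,7,5"' into [11, 7, 5]."""
    return [int(item) for item in value.strip().strip('"').split(",") if item.strip()]


def load_network_config(text):
    """Parse the INI text into plain Python values (hypothetical helper)."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    c1, c2, fnn = cfg["cnn1d"], cfg["cnn2d"], cfg["fnn"]
    return {
        "cnn1d": {
            "height": c1.getint("height"),
            "channels": c1.getint("channels"),
            "filters": parse_list(c1["filters"]),
            "filter_height": parse_list(c1["filter_height"]),
            "pool_rates": parse_list(c1["pool_rates"]),
        },
        "cnn2d": {
            "height": c2.getint("height"),
            "width": c2.getint("width"),
            "channels": c2.getint("channels"),
            "filters": parse_list(c2["filters"]),
            "filter_height": parse_list(c2["filter_height"]),
            "filter_width": parse_list(c2["filter_width"]),
            "pool_rates_height": parse_list(c2["pool_rates_height"]),
            "pool_rates_width": parse_list(c2["pool_rates_width"]),
        },
        "fnn": {
            "hidden_layer_size": parse_list(fnn["hidden_layer_size"]),
            "lambda": fnn.getfloat("lambda"),
            "output_nodes": fnn.getint("output_nodes"),
            "scalar_n": fnn.getint("scalar_n"),
        },
    }


config = load_network_config(SAMPLE_INI)
```

Each comma list describes one value per convolution stage, so all lists within a section are expected to have the same length.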

This produces the following structures (TensorFlow output):

```text
CNN1:
Model: "sequential"
_________________________________________________________________
 Layer (type)                    Output Shape          Param #
=================================================================
 conv1d (Conv1D)                 (None, 210, 5)        170
 max_pooling1d (MaxPooling1D)    (None, 105, 5)        0
 conv1d_1 (Conv1D)               (None, 99, 5)         180
 max_pooling1d_1 (MaxPooling1D)  (None, 49, 5)         0
 conv1d_2 (Conv1D)               (None, 45, 5)         130
 max_pooling1d_2 (MaxPooling1D)  (None, 22, 5)         0
 flatten (Flatten)               (None, 110)           0
=================================================================
Total params: 480
Trainable params: 480
Non-trainable params: 0
_________________________________________________________________
```

```text
CNN2:
Model: "sequential_1"
_________________________________________________________________
 Layer (type)                    Output Shape          Param #
=================================================================
 conv2d (Conv2D)                 (None, 170, 36, 16)   2656
 max_pooling2d (MaxPooling2D)    (None, 85, 18, 16)    0
 conv2d_1 (Conv2D)               (None, 79, 15, 8)     3592
 max_pooling2d_1 (MaxPooling2D)  (None, 39, 7, 8)      0
 conv2d_2 (Conv2D)               (None, 35, 5, 5)      605
 max_pooling2d_2 (MaxPooling2D)  (None, 11, 2, 5)      0
 flatten_1 (Flatten)             (None, 110)           0
=================================================================
Total params: 6,853
Trainable params: 6,853
Non-trainable params: 0
_________________________________________________________________
```
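The shapes and parameter counts in the two CNN summaries follow from Keras defaults: a stride-1 `Conv1D`/`Conv2D` with `padding='valid'` shrinks each axis by kernel size minus 1, a max-pooling layer whose stride equals its pool size divides each axis by the rate and rounds down, and a convolution has (kernel elements × input channels + 1) × filters parameters. The framework-free sketch below redoes that arithmetic for the sample INI values; `conv_pool_stack` is a name made up for illustration.

```python
import math


def conv_pool_stack(in_size, channels, filters, kernels, pools):
    """Trace Conv -> MaxPool stages with Keras defaults (padding='valid',
    conv stride 1, pool stride equal to pool size).

    in_size and each entry of kernels/pools are tuples with one element per
    spatial axis, so 1-tuples model Conv1D and 2-tuples model Conv2D.
    Returns a list of (conv_shape, pool_shape, params) and the flattened size.
    """
    size, c_in = tuple(in_size), channels
    stages = []
    for n_filters, kernel, pool in zip(filters, kernels, pools):
        conv = tuple(s - k + 1 for s, k in zip(size, kernel))      # valid convolution
        params = math.prod(kernel) * c_in * n_filters + n_filters  # weights + biases
        pooled = tuple(s // p for s, p in zip(conv, pool))         # valid pooling rounds down
        stages.append((conv + (n_filters,), pooled + (n_filters,), params))
        size, c_in = pooled, n_filters
    return stages, math.prod(size) * c_in


# Values from the sample INI file
cnn1_stages, cnn1_flat = conv_pool_stack(
    (220,), 3, [5, 5, 5], [(11,), (7,), (5,)], [(2,), (2,), (2,)])
cnn2_stages, cnn2_flat = conv_pool_stack(
    (180, 40), 3, [16, 8, 5], [(11, 5), (7, 4), (5, 3)], [(2, 2), (2, 2), (3, 2)])
```

Both branches end at 110 features here only because of the chosen sizes; changing any kernel or pooling rate changes the flattened width that the FNN receives.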

```text
FNN:
Model: "sequential_2"
_________________________________________________________________
 Layer (type)                    Output Shape          Param #
=================================================================
 dense (Dense)                   (None, 256)           56832
 dense_1 (Dense)                 (None, 256)           65792
 dense_2 (Dense)                 (None, 20)            5140
=================================================================
Total params: 127,764
Trainable params: 127,764
Non-trainable params: 0
_________________________________________________________________
```

```text
MIXNN:
Model: "model"
__________________________________________________________________________________________________
 Layer (type)                   Output Shape            Param #   Connected to
==================================================================================================
 input_2 (InputLayer)           [(None, 220, 3)]        0         []
 input_3 (InputLayer)           [(None, 180, 40, 3)]    0         []
 input_1 (InputLayer)           [(None, 1)]             0         []
 sequential (Sequential)        (None, 110)             480       ['input_2[0][0]']
 sequential_1 (Sequential)      (None, 110)             6853      ['input_3[0][0]']
 concatenate (Concatenate)      (None, 221)             0         ['input_1[0][0]',
                                                                   'sequential[0][0]',
                                                                   'sequential_1[0][0]']
 sequential_2 (Sequential)      (None, 20)              127764    ['concatenate[0][0]']
==================================================================================================
Total params: 135,097
Trainable params: 135,097
Non-trainable params: 0
```
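The FNN input width and the totals can also be checked by hand: the concatenation gives 1 + 110 + 110 = 221 features, and a Dense layer with n_in inputs and n_out units has n_in × n_out weights plus n_out biases (the L2 regularizer adds a loss term, not parameters). A small sketch of that arithmetic for the sample configuration; `dense_param_counts` is an illustrative name.

```python
def dense_param_counts(n_in, layer_sizes):
    """Parameters per Dense layer: n_in * n_out weights plus n_out biases."""
    counts = []
    for n_out in layer_sizes:
        counts.append(n_in * n_out + n_out)
        n_in = n_out  # the next layer consumes this layer's output
    return counts


# FNN input = scalar_n + flattened CNN1 output + flattened CNN2 output
fnn_input = 1 + 110 + 110
# hidden_layer_size="256,256" followed by the output layer with output_nodes=20
fnn_counts = dense_param_counts(fnn_input, [256, 256, 20])
total_params = 480 + 6853 + sum(fnn_counts)
```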

3. TF2 greatly simplifies network construction

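The source code provides TF1 and TF2 implementations behind the same interface; comparing them shows how much simpler network construction has become.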

3.1 Old TensorFlow 1 code

```python
import sys

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.train, ...)

tfs = tf.summary  # alias for the summary ops used below (assumed)


def create_graph(scalar_N, conv1d_P, conv2d_P,
                 cnn1d_filters, cnn1d_filter_height, cnn1d_pool_rates,
                 cnn2d_filters, cnn2d_filter_height, cnn2d_filter_width,
                 cnn2d_pool_rates_height, cnn2d_pool_rates_width,
                 K, dlambda, ns_hidden):
    """create_graph builds the network in two parts: convolution and fully connected.

    Part 1, convolution:
    @para scalar_N  number of scalar features, fed directly into the FNN
    @para conv1d_P  1D convolution input shape [length, 1, bands]
    @para conv2d_P  2D convolution input shape [height, width, bands]
    Part 2, fully connected:
    @para n         number of FNN input features, computed automatically
    @para K         output vector dimension
    @para dlambda   regularization coefficient, e.g. 0.00001
    @para ns_hidden [size of first hidden layer, ..., size of last hidden layer]
    @return dict with the graph ("graph") and the merged summaries ("merged_summary");
            tensors can be looked up with get_tensor_by_name and similar functions
    """
    ns_array = ns_hidden[:]
    # Output is the last layer, append to last
    ns_array.append(K)
    # A linear output layer would allow outputs of any range:
    # ns_array.append(K)
    hidden_layer_size = len(ns_array)
    cnn1d_pool_times = len(cnn1d_pool_rates)
    cnn2d_pool_times = len(cnn2d_pool_rates_height)
    # --------------------------------------------------------------
    # create graph
    graph = tf.Graph()
    with graph.as_default():
        punish = tf.constant(0.0, name='regular')
        with tf.name_scope('cnn1d'):
            in1_vector = tf.placeholder(tf.float32,
                                        [None, conv1d_P[0], conv1d_P[1], conv1d_P[2]],
                                        name="cnn1d_in")
            # One convolution + downsampling stage per pooling rate
            data_1d = [in1_vector]
            filters_1d = []
            bais_1d = []
            convres_1d = []
            activat_1d = []
            out1d = []
            size1d_height = [conv1d_P[0]]
            for idx in range(0, cnn1d_pool_times):
                print(data_1d[idx])
                channles = conv1d_P[2]
                if idx > 0:
                    channles = cnn1d_filters[idx - 1]
                # New convolution filters, initialized uniformly in [-1, 1)
                filters_1d.append(tf.Variable(np.random.rand(
                    cnn1d_filter_height[idx],     # height
                    1,                            # 1D conv: width is always 1
                    channles,                     # input channels
                    cnn1d_filters[idx]) * 2 - 1,  # filters
                    dtype=np.float32,
                    name="cnn1d_filter" + str(idx)))
                print(filters_1d[idx])
                # Bias; for an activation that is not zero-centered, [0, 1) works better
                bais_1d.append(tf.Variable(tf.random_uniform(
                    [cnn1d_filters[idx]], -1, 1),
                    name='cnn1_b' + str(idx)))
                # L2 penalty
                punish = punish + tf.reduce_sum(filters_1d[idx] ** 2) * dlambda
                # Convolution (a 2D convolution with a width-1 kernel)
                convres_1d.append(tf.nn.conv2d(data_1d[idx],     # input
                                               filters_1d[idx],  # filters
                                               strides=[1, 1, 1, 1],
                                               padding="SAME") + bais_1d[idx])
                print(convres_1d[idx])
                # Activation
                activat_1d.append(tf.nn.tanh(convres_1d[idx]))
                # Pooling
                data_1d.append(tf.nn.avg_pool(activat_1d[idx],
                                              [1, cnn1d_pool_rates[idx], 1, 1],
                                              strides=[1, cnn1d_pool_rates[idx], 1, 1],
                                              padding="SAME"))
                # Size after pooling: ceil(height / rate)
                if size1d_height[idx] % cnn1d_pool_rates[idx] > 0:
                    addition_1d = 1
                else:
                    addition_1d = 0
                size1d_height.append(int(size1d_height[idx] / cnn1d_pool_rates[idx])
                                     + addition_1d)
                out1d = data_1d[idx + 1]
                length_1d = size1d_height[idx + 1]
                chan_1d = cnn1d_filters[idx]
                tfs.histogram('cnn1d_filter' + str(idx), filters_1d[idx])
            print(out1d)
        with tf.name_scope('cnn2d'):
            in2_vector = tf.placeholder(tf.float32,
                                        [None, conv2d_P[0], conv2d_P[1], conv2d_P[2]],
                                        name="cnn2d_in")
            # One convolution + downsampling stage per pooling rate
            data_2d = [in2_vector]
            filters_2d = []
            convres_2d = []
            bais_2d = []
            activat_2d = []
            out2d = []
            size2d_size = [[conv2d_P[0]], [conv2d_P[1]]]
            for idx in range(0, cnn2d_pool_times):
                print(data_2d[idx])
                channles = conv2d_P[2]
                if idx > 0:
                    channles = cnn2d_filters[idx - 1]
                # Filters, initialized uniformly in [-1, 1)
                filters_2d.append(tf.Variable(np.random.rand(
                    cnn2d_filter_height[idx],     # height
                    cnn2d_filter_width[idx],      # width
                    channles,                     # input channels
                    cnn2d_filters[idx]) * 2 - 1,  # filters
                    dtype=np.float32,
                    name="cnn2d_filter" + str(idx)))
                print(filters_2d[idx])
                # L2 penalty
                punish = punish + tf.reduce_sum(filters_2d[idx] ** 2) * dlambda
                # Bias; for an activation that is not zero-centered, [0, 1) works better
                bais_2d.append(tf.Variable(tf.random_uniform(
                    [cnn2d_filters[idx]], -1, 1),
                    name='cnn2_b' + str(idx)))
                # Convolution
                convres_2d.append(tf.nn.conv2d(data_2d[idx],     # input
                                               filters_2d[idx],  # filters
                                               strides=[1, 1, 1, 1],
                                               padding="SAME") + bais_2d[idx])
                # Activation
                activat_2d.append(tf.nn.tanh(convres_2d[idx]))
                # Pooling
                data_2d.append(tf.nn.max_pool(
                    activat_2d[idx],
                    [1, cnn2d_pool_rates_height[idx], cnn2d_pool_rates_width[idx], 1],
                    strides=[1, cnn2d_pool_rates_height[idx], cnn2d_pool_rates_width[idx], 1],
                    padding="SAME"))
                # Size after pooling: ceil(size / rate) along each axis
                if size2d_size[0][idx] % cnn2d_pool_rates_height[idx]:
                    h_addseed = 1
                else:
                    h_addseed = 0
                size2d_size[0].append(int(size2d_size[0][idx]
                                          / cnn2d_pool_rates_height[idx])
                                      + h_addseed)
                if size2d_size[1][idx] % cnn2d_pool_rates_width[idx]:
                    w_addseed = 1
                else:
                    w_addseed = 0
                size2d_size[1].append(int(size2d_size[1][idx]
                                          / cnn2d_pool_rates_width[idx])
                                      + w_addseed)
                print(convres_2d[idx])
                out2d = data_2d[idx + 1]
                length_2d = [size2d_size[0][idx + 1], size2d_size[1][idx + 1]]
                chan_2d = cnn2d_filters[idx]
                tfs.histogram('cnn2d_filter' + str(idx), filters_2d[idx])
            print(out2d)
        # Gather the features and flatten them
        with tf.name_scope('feature_gather'):
            print(length_1d, chan_1d, length_2d, chan_2d)
            reshape1d = tf.reshape(out1d, [tf.shape(out1d)[0], -1])
            reshape2d = tf.reshape(out2d, [tf.shape(out2d)[0], -1])
            scalar_input = tf.placeholder(tf.float32, [None, scalar_N],
                                          name="scalar_input")
            fullinput = tf.concat([scalar_input, reshape1d, reshape2d], 1,
                                  name="full_input")
            # Input width of the fully connected network
            n = scalar_N + length_1d * chan_1d + length_2d[0] * length_2d[1] * chan_2d
            print(n)
        with tf.name_scope('fnn'):
            # Classic fully connected network
            s = [n]
            a = [fullinput]
            W = []
            b = []
            z = []
            for idx in range(0, hidden_layer_size):
                s.append(int(ns_array[idx]))
                # Weights in [-1, 1); for an activation that is not zero-centered, [0, 1) works better
                W.append(tf.Variable(tf.random_uniform([s[idx], s[idx + 1]], -1, 1),
                                     name='W' + str(idx + 1)))
                # Bias, same remark as above
                b.append(tf.Variable(tf.random_uniform([1, s[idx + 1]], -1, 1),
                                     name='b' + str(idx + 1)))
                z.append(tf.add(tf.matmul(a[idx], W[idx]), b[idx],
                                name='z' + str(idx + 1)))
                if idx < hidden_layer_size - 1:
                    a_name = 'a' + str(idx + 1)
                else:
                    a_name = 'output'
                a.append(tf.nn.tanh(z[idx], name=a_name))
                punish = punish + tf.reduce_sum(W[idx] ** 2) * dlambda
                tfs.histogram('W' + str(idx + 1), W[idx])
                tfs.histogram('b' + str(idx + 1), b[idx])
                tfs.histogram('a' + str(idx + 1), a[idx + 1])
        # --------------------------------------------------------------
        with tf.name_scope('loss'):
            y_ = tf.placeholder(tf.float32, [None, K], name="tr_out")
            pure_loss = tf.reduce_mean(tf.square(a[hidden_layer_size] - y_),
                                       name="pure_loss")
            loss = tf.add(pure_loss, punish, name="loss")
            tfs.scalar('loss', loss)
            tfs.scalar('punish', punish)
            tfs.scalar('pure_loss', pure_loss)
        with tf.name_scope('train'):
            optimizer = tf.train.AdamOptimizer(name="optimizer")
            optimizer.minimize(loss, name="train")
            # Count how many samples the network has been trained on
            train_times = tf.Variable(tf.zeros([1, 1]), name='train_times')
            train_trunk = tf.placeholder(tf.float32, [None, 1],
                                         name="train_trunk")
            tf.assign(train_times, train_trunk + train_times,
                      name="train_times_add")
        merged_summary = tfs.merge_all()
    sys.stdout.flush()
    return {"graph": graph, "merged_summary": merged_summary}
```

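The TF1 version tracks feature-map sizes by hand. Because its stride-1 convolutions and its pooling both use `padding="SAME"`, a convolution keeps the size unchanged and each pooling step yields ceil(size / rate), which is exactly what the `int(size / rate) + addition` logic computes. A quick sketch of that arithmetic with the sample INI values suggests the TF1 graph would feed n = 516 features into its FNN, whereas the Keras version reports 221, since Keras convolution and pooling layers default to `padding='valid'`. `same_pool_sizes` is an illustrative name.

```python
def same_pool_sizes(size, rates):
    """Sizes after each SAME-padded pooling step, as tracked in the TF1 code:
    int(size / rate) + (1 if size % rate else 0), i.e. ceil(size / rate)."""
    sizes = [size]
    for rate in rates:
        sizes.append(-(-sizes[-1] // rate))  # integer ceiling division
    return sizes


# Sample INI values; SAME-padded stride-1 convolutions leave the size unchanged
len_1d = same_pool_sizes(220, [2, 2, 2])  # [220, 110, 55, 28]
h_2d = same_pool_sizes(180, [2, 2, 3])    # [180, 90, 45, 15]
w_2d = same_pool_sizes(40, [2, 2, 2])     # [40, 20, 10, 5]

# n = scalar_N + length_1d * chan_1d + height_2d * width_2d * chan_2d
n_tf1 = 1 + len_1d[-1] * 5 + h_2d[-1] * w_2d[-1] * 5
```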
3.2 TensorFlow 2 (Keras API) code

```python
import tensorflow as tf
from tensorflow.keras import layers, losses, optimizers


def create_graph(scalar_N, conv1d_P, conv2d_P,
                 cnn1d_filters, cnn1d_filter_height, cnn1d_pool_rates,
                 cnn2d_filters, cnn2d_filter_height, cnn2d_filter_width,
                 cnn2d_pool_rates_height, cnn2d_pool_rates_width,
                 K, dlambda, ns_hidden):
    """create_graph builds the network in two parts: convolution and fully connected.

    Part 1, convolution:
    @para scalar_N  number of scalar features, fed directly into the FNN
    @para conv1d_P  1D convolution input shape [length, 1, bands]
    @para conv2d_P  2D convolution input shape [height, width, bands]
    Part 2, fully connected:
    @para n         number of FNN input features, inferred automatically
    @para K         output vector dimension
    @para dlambda   L2 regularization coefficient, e.g. 0.00001
    @para ns_hidden [size of first hidden layer, ..., size of last hidden layer]
    @return dict whose "graph" entry is the compiled Keras Model
    """
    ns_array = ns_hidden[:]
    # The output layer is added separately below as a linear Dense(K),
    # so that outputs can take any range
    # ns_array.append(K)
    hidden_layer_size = len(ns_array)
    cnn1d_pool_times = len(cnn1d_pool_rates)
    cnn2d_pool_times = len(cnn2d_pool_rates_height)
    # --------------------------------------------------------------
    # 1D Conv: one Conv1D + MaxPooling1D pair per pooling rate
    model_cnn1 = tf.keras.Sequential()
    for idx in range(0, cnn1d_pool_times):
        model_cnn1.add(layers.Conv1D(
            cnn1d_filters[idx],
            kernel_size=cnn1d_filter_height[idx],
            strides=1, activation=tf.nn.leaky_relu))
        model_cnn1.add(layers.MaxPooling1D(
            pool_size=cnn1d_pool_rates[idx], strides=cnn1d_pool_rates[idx]))
    model_cnn1.add(layers.Flatten())
    # 2D Conv: one Conv2D + MaxPooling2D pair per pooling rate
    model_cnn2 = tf.keras.Sequential()
    for idx in range(0, cnn2d_pool_times):
        # Stage idx: cnn2d_filters[idx] kernels of size height x width
        model_cnn2.add(layers.Conv2D(
            cnn2d_filters[idx],
            kernel_size=(cnn2d_filter_height[idx], cnn2d_filter_width[idx]),
            strides=1, activation=tf.nn.leaky_relu))
        model_cnn2.add(layers.MaxPooling2D(
            pool_size=(cnn2d_pool_rates_height[idx], cnn2d_pool_rates_width[idx]),
            strides=(cnn2d_pool_rates_height[idx], cnn2d_pool_rates_width[idx])))
    model_cnn2.add(layers.Flatten())  # flatten for the fully connected part
    # Fully connected network
    model_fnn = tf.keras.Sequential()
    for idx in range(0, hidden_layer_size):
        model_fnn.add(layers.Dense(
            ns_array[idx], activation=tf.nn.leaky_relu,
            kernel_regularizer=tf.keras.regularizers.l2(dlambda)))
    model_fnn.add(layers.Dense(K, activation=None,
                               kernel_regularizer=tf.keras.regularizers.l2(dlambda)))
    # Inputs; the FNN input width is inferred from the concatenation
    input_scalar = tf.keras.Input(shape=(scalar_N,))
    input_1d = tf.keras.Input(shape=(conv1d_P[0], conv1d_P[2]))
    input_2d = tf.keras.Input(shape=(conv2d_P[0], conv2d_P[1], conv2d_P[2]))
    data_1d = model_cnn1(input_1d)
    data_2d = model_cnn2(input_2d)
    combined = tf.keras.layers.concatenate([input_scalar, data_1d, data_2d])
    final_out = model_fnn(combined)
    # train
    model_root = tf.keras.Model(inputs=[input_scalar, input_1d, input_2d],
                                outputs=final_out)
    model_root.compile(optimizer=optimizers.Adam(learning_rate=0.001),
                       loss=losses.MeanSquaredError(),
                       metrics=['accuracy'])  # use accuracy as the metric
    print('CNN1:')
    model_cnn1.summary()
    print('CNN2:')
    model_cnn2.summary()
    print('FNN:')
    model_fnn.summary()
    print('MIXNN:')
    model_root.summary()
    return {"graph": model_root}
```

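As you can see, the API is much simpler: Keras infers the input and output sizes of every layer, whereas the TF1 version has to compute them by hand.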
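To drive `create_graph` from the INI file, the parsed values have to be mapped onto its arguments. The sketch below shows one plausible mapping, assuming `conv1d_P = [height, 1, channels]` and `conv2d_P = [height, width, channels]` as the docstring describes, and that `lambda` feeds `dlambda`. `build_create_graph_args` and the `sample_config` layout are hypothetical, not taken from the project; the length check guards against per-stage lists of different lengths, which `create_graph` would index past.

```python
def build_create_graph_args(config):
    """Map parsed INI sections onto create_graph's parameters (hypothetical glue code)."""
    c1, c2, fnn = config["cnn1d"], config["cnn2d"], config["fnn"]
    # Every per-stage list must describe the same number of stages
    stages_1d = {len(c1[k]) for k in ("filters", "filter_height", "pool_rates")}
    stages_2d = {len(c2[k]) for k in ("filters", "filter_height", "filter_width",
                                      "pool_rates_height", "pool_rates_width")}
    if len(stages_1d) != 1 or len(stages_2d) != 1:
        raise ValueError("per-stage lists must all have the same length")
    return {
        "scalar_N": fnn["scalar_n"],
        "conv1d_P": [c1["height"], 1, c1["channels"]],              # [length, 1, bands]
        "conv2d_P": [c2["height"], c2["width"], c2["channels"]],    # [height, width, bands]
        "cnn1d_filters": c1["filters"],
        "cnn1d_filter_height": c1["filter_height"],
        "cnn1d_pool_rates": c1["pool_rates"],
        "cnn2d_filters": c2["filters"],
        "cnn2d_filter_height": c2["filter_height"],
        "cnn2d_filter_width": c2["filter_width"],
        "cnn2d_pool_rates_height": c2["pool_rates_height"],
        "cnn2d_pool_rates_width": c2["pool_rates_width"],
        "K": fnn["output_nodes"],
        "dlambda": fnn["lambda"],
        "ns_hidden": fnn["hidden_layer_size"],
    }


# Values from the sample INI file, already split into Python lists
sample_config = {
    "cnn1d": {"height": 220, "channels": 3, "filters": [5, 5, 5],
              "filter_height": [11, 7, 5], "pool_rates": [2, 2, 2]},
    "cnn2d": {"height": 180, "width": 40, "channels": 3, "filters": [16, 8, 5],
              "filter_height": [11, 7, 5], "filter_width": [5, 4, 3],
              "pool_rates_height": [2, 2, 3], "pool_rates_width": [2, 2, 2]},
    "fnn": {"hidden_layer_size": [256, 256], "lambda": 1e-10,
            "output_nodes": 20, "scalar_n": 1},
}

args = build_create_graph_args(sample_config)
# With TensorFlow installed: result = create_graph(**args)
```

With these arguments, the TF2 `create_graph` should print summaries matching the ones in section 2.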

4. Input data format

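Training input data must match the configured sizes. There are four kinds of files:

- Scalar input: <sequence number>.scalar
- 1D vector: <sequence number>.1d
- 2D matrix: <sequence number>.2d
- Reference output: <sequence number>.train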

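All four files store raw C `double` (double-precision) values. The number of scalar inputs must equal the number of scalar features configured for the project. Vectors and matrices may have several bands, such as color channels, arranged in the natural RGB order.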
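The article only states that these files hold C doubles. As a hedged sketch, the code below writes and reads such a file with Python's standard `array` module, assuming native byte order, no header, and bands interleaved per sample (R, G, B, R, G, B, ...); the helper names and the file name `1.1d` are illustrative. Checking the element count on read catches files that do not match the configured sizes.

```python
import array
import os
import tempfile


def write_doubles(path, values):
    """Write values as raw C doubles (native byte order, no header)."""
    with open(path, "wb") as f:
        array.array("d", values).tofile(f)


def read_doubles(path, expected_count):
    """Read exactly expected_count raw C doubles from path."""
    data = array.array("d")
    with open(path, "rb") as f:
        data.fromfile(f, expected_count)  # raises EOFError if the file is too short
        if f.read(1):
            raise ValueError(f"{path} holds more than {expected_count} doubles")
    return data


# Illustrative 1D input: height 220 with 3 bands (values from the sample INI),
# assuming the bands are interleaved per sample
HEIGHT_1D, BANDS = 220, 3
samples = [float(i % 256) for i in range(HEIGHT_1D * BANDS)]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "1.1d")  # "<sequence number>.1d"
    write_doubles(path, samples)
    file_size = os.path.getsize(path)
    loaded = read_doubles(path, HEIGHT_1D * BANDS)

# Band values of the first sample under the interleaving assumption
first_sample = tuple(loaded[0:BANDS])
```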

5. Next steps

A follow-up article will use an image-recognition example to show how to operate this tool.

边栏推荐

- Sentinel 流量控制快速入门

- Differential restraint system -- 1 and 2 -- May 27, 2022

- The best time to buy and sell stocks includes handling charges (leetcode-714)

- 脚手架文件目录说明、文件暴露

- Dr. water 3

- OSPF includes special area experiments, mGRE construction and re release

- Uniapp uses PWA

- 差分约束系统---1且2--2022年5月27日

- 火山引擎:开放字节跳动同款AI基建,一套系统解决多重训练任务

- New:Bryntum Grid 5.1.0 Crack

猜你喜欢

谷歌联合高校研发通用模型ProteoGAN,可设计生成具有新功能的蛋白质

![[electronic device note 4] inductance parameters and type selection](/img/b1/6c5cb27903bc1093bf49e278e31353.png)

[electronic device note 4] inductance parameters and type selection

Home raiding III (leetcode-337)

How does ribbon get the default zoneawareloadbalancer?

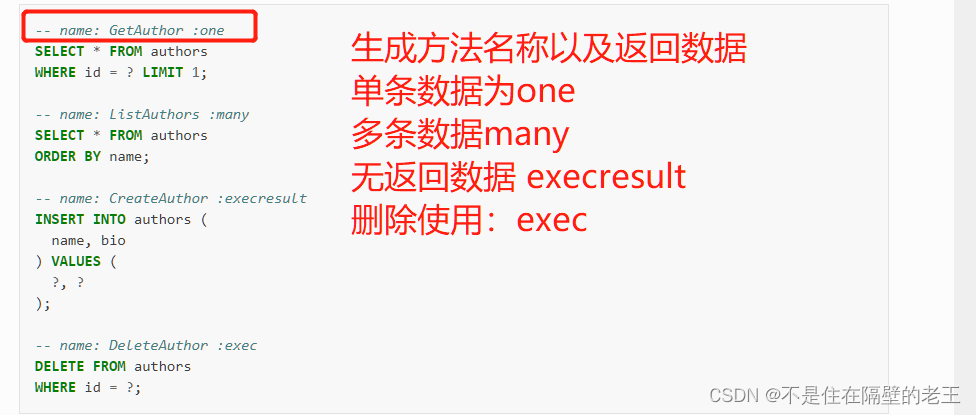

Simply use golang SQLC to generate MySQL query code

Mysql database JDBC programming

OSPF includes special area experiments, mGRE construction and re release

ffmpeg花屏解决(修改源码,丢弃不完整帧)

Query about operating system security patch information

Programmers can't JVM? Ashes Engineer: all waiting to be eliminated! This is a must skill!

随机推荐

【LeeCode】获取2个字符串的最长公共子串

Is it safe to open an online stock account?

Mina framework introduction "suggestions collection"

JMeter setting default startup Chinese

The best time to buy and sell stocks includes handling charges (leetcode-714)

Sentinel 三种流控效果

zoj-Swordfish-2022-5-6

Sentinel 三种流控模式

Sub query of multi table query_ Single row and single column

fatal: unable to commit credential store: Device or resource busy

Image processing: rgb565 to rgb888

Android uses JDBC to connect to a remote database

脚手架文件目录说明、文件暴露

[correcting Hongming] what? I forgot to take the "math required course"!

《nlp入门+实战:第二章:pytorch的入门使用 》

Application of for loop

JSON tutorial [easy to understand]

Detailed explanation of uninstalling MySQL completely under Linux

AttributeError: module ‘sipbuild. api‘ has no attribute ‘prepare_ metadata_ for_ build_ wheel‘

Web Security Foundation - file upload (file upload bypass)