
Convolution kernel and feature map visualization

2022-06-24 14:08:00 【Burn, man】

Convolution kernel and feature layer visualization

I recently read the AlexNet paper, one of the founding works of CNNs, which includes a visualization of the convolution kernels in its convolutional layers, so I am recording how to do that here. Other networks can be handled the same way. If this is useful, a like would be appreciated.

I. Convolution kernel visualization

1. Prepare a trained model

A trained model is recommended for visualization, because its learned weights reveal patterns that an untrained model would not show (here I use the pretrained AlexNet model).

2. Visualize the convolution kernels

Here is a brief summary of the weight shape:

First-layer convolution kernel: torch.Size([64, 3, 11, 11])

Number of output channels: 64, which is the number of convolution kernels

Number of input channels: 3, which is the number of channels in each convolution kernel

Convolution kernel height: 11

Convolution kernel width: 11

The kernels can be visualized channel by channel (single-channel) or as whole multi-channel kernels; see the figure below.

The code is as follows:

import os
import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter
import torchvision.utils as vutils
import torchvision.models as models

BASE_DIR = os.path.dirname(os.path.abspath(__file__))
log_dir = os.path.join(BASE_DIR, "results")
writer = SummaryWriter(log_dir=log_dir, filename_suffix="_kernel")

# Load the pretrained AlexNet weights from a local file
path_state_dict = os.path.join("data", "alexnet-owt-4df8aa71.pth")
alexnet = models.alexnet()
alexnet.load_state_dict(torch.load(path_state_dict))

kernel_num = -1
vis_max = 1  # index of the last conv layer to visualize

# Visualize the first two convolutional layers
for sub_module in alexnet.modules():
    if not isinstance(sub_module, nn.Conv2d):
        continue
    if kernel_num >= vis_max:
        break
    kernel_num += 1

    kernels = sub_module.weight.detach()  # no gradients needed for plotting
    # output channels, input channels, kernel height, kernel width
    c_out, c_int, k_h, k_w = tuple(kernels.shape)
    print(kernels.shape)

    # Single-channel view: one grid per kernel, one grayscale tile per input channel
    for o_idx in range(c_out):
        # kernels[o_idx] is (c_int, h, w); make_grid needs BCHW, so add a
        # channel dimension to get (c_int, 1, h, w)
        kernel_idx = kernels[o_idx, :, :, :].unsqueeze(1)
        # nrow: number of images displayed per row
        kernel_grid = vutils.make_grid(kernel_idx, normalize=True, scale_each=True, nrow=8)
        # tag, image, step index (here: the kernel index)
        writer.add_image('{}_Convlayer_split_in_channel'.format(kernel_num), kernel_grid, global_step=o_idx)

    # Multi-channel view: treat the weights as a batch of 3-channel images, (N, 3, h, w).
    # For layers whose input channel count is not 3, this regroups channels in
    # threes purely for display.
    kernel_all = kernels.view(-1, 3, k_h, k_w)
    kernel_grid = vutils.make_grid(kernel_all, normalize=True, scale_each=True, nrow=8)  # (3, H, W)
    writer.add_image('{}_all'.format(kernel_num), kernel_grid, global_step=620)

    print("{}_convlayer shape:{}".format(kernel_num, tuple(kernels.shape)))

writer.close()

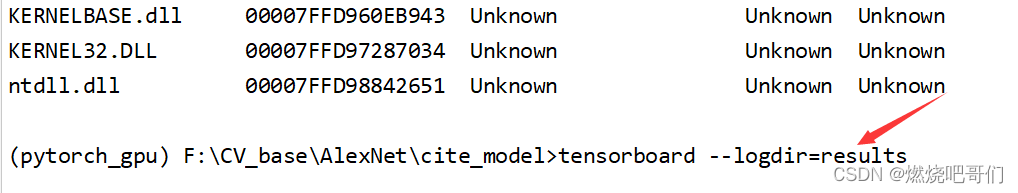

| Supplementary operation |
|---|
| After running the code, a results folder is generated. |
| Next, run the following command: tensorboard --logdir=(path to the stored results folder) |
| Click the link printed by the command to open the visualization interface. |

The results are shown in the following figure

II. Feature map visualization

Only the first layer is shown here: an image is passed through the first convolutional layer, and the resulting feature maps are visualized.
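As a quick check on the shapes involved, the spatial size of a convolution output follows from the standard formula floor((in + 2 * padding - dilation * (kernel - 1) - 1) / stride) + 1. The helper below is a hypothetical name introduced for illustration; the parameter values are those of the first convolution in torchvision's AlexNet (kernel 11, stride 4, padding 2).

```python
def conv2d_out_size(in_size: int, kernel: int, stride: int = 1,
                    padding: int = 0, dilation: int = 1) -> int:
    """Spatial output size of a 2D convolution along one dimension."""
    return (in_size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# First AlexNet convolution applied to a 224x224 input.
side = conv2d_out_size(224, kernel=11, stride=4, padding=2)
print(side)  # 55, so the feature maps are 64 x 55 x 55
```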

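The code is as follows:

import os
import torch
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils

BASE_DIR = os.path.dirname(os.path.abspath(__file__))
log_dir = os.path.join(BASE_DIR, "results")

# Load the pretrained AlexNet weights from a local file
path_state_dict = os.path.join("data", "alexnet-owt-4df8aa71.pth")
alexnet = models.alexnet()
alexnet.load_state_dict(torch.load(path_state_dict))

writer = SummaryWriter(log_dir=log_dir, filename_suffix="_feature map")

# data
path_img = os.path.join(BASE_DIR, "data", "tiger cat.jpg")  # your path to image
img_transforms = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    # Note: these values match the commonly quoted CIFAR-10 statistics; torchvision's
    # pretrained models are usually paired with the ImageNet mean/std instead.
    transforms.Normalize([0.49139968, 0.48215827, 0.44653124], [0.24703233, 0.24348505, 0.26158768])
])

img_pil = Image.open(path_img).convert('RGB')
img_tensor = img_transforms(img_pil)
img_tensor.unsqueeze_(0)  # chw -> bchw

# Forward pass through the first convolution only; no gradients needed
convlayer1 = alexnet.features[0]
with torch.no_grad():
    fmap_1 = convlayer1(img_tensor)

# Treat each of the 64 channels as a separate grayscale image
fmap_1.transpose_(0, 1)  # bchw=(1, 64, 55, 55) --> (64, 1, 55, 55)
fmap_1_grid = vutils.make_grid(fmap_1, normalize=True, scale_each=True, nrow=8)

writer.add_image('feature map in conv1', fmap_1_grid, global_step=620)
writer.close()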

The original image and the first-layer feature maps produced by the script are shown below:

If anything here is lacking, please point it out. And once again, a like would be appreciated.
