Overview and deployment of GFS distributed file system

2022-06-28 00:38:00 【[email protected]】

Contents

- 1 GlusterFS (distributed file system)

- 2 GFS deployment

- 2.1 Cluster environment

- 2.2 Change the node names

- 2.3 Partition and mount the disks on each node

- 2.4 Configure the /etc/hosts file

- 2.5 Install and start GlusterFS from a local repository (on all nodes)

- 2.6 Add the nodes to the trusted storage pool

- 2.7 Create volumes according to the plan

- 2.8 Deploy the Gluster client (192.168.16.24)

- 2.9 Test the Gluster file system

- 2.10 Redundancy testing

- 2.11 Other maintenance commands

- 3 Summary

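Background: adding a hard disk

Plain partition:

- Partition: fdisk for disks up to 2.2 TB, parted for disks larger than 2.2 TB

- Format: mkfs -t xfs or mkfs -t ext4

- Mount: mount

LVM:

- Partition: type 8e (Linux LVM)

- Physical volume: pvcreate

- Volume group: vgcreate

- Logical volume: lvcreate

- Format: mkfs -t

- Mount: mount

- Extend: vgextend, lvextend

- Grow the file system afterwards: xfs_growfs for XFS, resize2fs for ext4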
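The 2.2 TB rule above comes from the MBR partition table, which addresses at most 2^32 sectors of 512 bytes. As a minimal sketch of that decision, the hypothetical helper `partition_tool` below (not part of the original notes) picks a tool from a disk size in bytes, such as the value printed by `blockdev --getsize64 /dev/sdb`:

```bash
#!/bin/bash
# MBR limit: 2^32 sectors * 512 bytes = 2 TiB (about 2.2 TB).
MBR_LIMIT_BYTES=$((4294967296 * 512))

# partition_tool SIZE_IN_BYTES
# Prints "fdisk" when the disk fits in an MBR table, otherwise "parted".
partition_tool() {
    if [ "$1" -le "$MBR_LIMIT_BYTES" ]; then
        echo fdisk
    else
        echo parted
    fi
}

partition_tool 21474836480      # a 20 GiB lab disk
partition_tool 4000000000000    # a 4 TB disk
```

The small lab disks used later in this article fall well under the limit, which is why the deployment script can use fdisk throughout.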

Background: storage types

- Block storage: hard disks

- File storage: NFS (RAID, LVM, NAS), SISC

- Object storage: public cloud (OSS, S3)

- Distributed storage: GFS, MFS, Ceph, FastDFS

1 GlusterFS (distributed file system)

GlusterFS is an open-source distributed file system. It consists of storage servers, clients, and an optional NFS/Samba storage gateway that can be added as needed. It has no metadata server component, which helps improve the performance, reliability, and stability of the whole system.

FS stands for file system. A file system is made up of the file system interface, the software that manages objects, and the objects and their attributes.

1.1 MFS compared with GlusterFS

- MFS: Traditional distributed file systems mostly keep metadata on a metadata server. The metadata holds the directory information and directory structure of the storage nodes. This design makes directory browsing efficient, but it has drawbacks such as a single point of failure: once the metadata server fails, the whole storage system goes down, even if the storage nodes are highly redundant. GlusterFS is designed without a metadata server, so it scales out well and offers high reliability and storage efficiency.

- GlusterFS is the core of Gluster, a scale-out (horizontally scaling) storage solution. It can be extended to several petabytes of capacity and thousands of clients.

- GlusterFS aggregates physically dispersed storage resources over TCP/IP or InfiniBand RDMA networks (InfiniBand is an interconnect technology that supports many concurrent links and offers high bandwidth, low latency, and high scalability), provides a unified storage service, and manages data under a single global namespace.

1.2 GlusterFS features

1.2.1 Scalability and high performance

GlusterFS relies on two features to provide high-capacity storage:

- The scale-out architecture raises storage capacity and performance simply by adding storage nodes (disk, compute, and I/O resources can each be added independently) and supports high-speed interconnects such as 10GbE and InfiniBand.

- The Gluster elastic hash (ElasticHash) removes GlusterFS's dependency on a metadata server, which eliminates that single point of failure and performance bottleneck and allows data to be accessed in parallel. The elastic hash algorithm can locate any piece of data in the storage pool (data is stored in pieces on different nodes) without consulting an index or querying a metadata server.

1.2.2 High availability

GlusterFS can replicate files automatically, as mirrors or multiple copies, so that data stays accessible even when hardware fails.

When data becomes inconsistent, the self-heal function restores it to the correct state. Repair runs incrementally in the background and adds almost no performance load.

GlusterFS works with any storage because it does not define its own private on-disk format. Instead it stores files on the mainstream standard disk file systems of the operating system (such as EXT3 and XFS), so the data can still be accessed in the traditional way.

1.2.3 Global unified namespace

Distributed storage merges the namespaces of all nodes into one unified namespace and combines the capacity of every node into one large virtual storage pool. Front-end hosts read and write data by accessing these nodes.

1.2.4 Elastic volume management

GlusterFS stores data in logical volumes, which are carved out of a logical storage pool as independent logical partitions.

Storage can be added to or removed from the logical storage pool online, without interrupting service. Logical volumes can grow and shrink online as needed and can balance load across multiple nodes.

File system configuration can also be changed and applied online in real time, to adapt to changing workloads or to tune performance.

1.2.5 Based on standard protocols

Gluster storage services support NFS, CIFS, HTTP, FTP, SMB, and the Gluster native protocol, and are fully compatible with the POSIX standard (Portable Operating System Interface).

Existing applications can access data in Gluster without any modification, or through a dedicated API.

1.3 GlusterFS terminology

Brick (storage block): a dedicated partition that a host in the trusted pool provides for physical storage. It is the basic storage unit in GlusterFS and also the storage directory that a server in the trusted storage pool exports. A brick is written as the server plus the absolute path of the directory, in the form SERVER:EXPORT, for example 192.168.16.16:/data/mydir/.

Volume (logical volume): a logical volume is a collection of bricks. A volume is a logical device for storing data, similar to a logical volume in LVM. Most Gluster management operations are performed on volumes.

FUSE: a kernel module that lets users create their own file systems without modifying kernel code.

VFS (virtual file system): the interface that kernel space offers to user space for accessing disks.

Glusterd (management daemon): runs on every node in the storage cluster.

Summary: using GFS relies on the virtual file system described above.

1.4 GlusterFS architecture

Modular, stackable architecture

GlusterFS uses a modular, stackable architecture.

Complex functionality is achieved by combining modules. For example, the Replicate module implements RAID 1 and the Stripe module implements RAID 0. Combining the two gives RAID 10 and RAID 01, with higher performance and reliability at the same time.

API: application programming interface

Modular: each module provides a different function

Stackable: several modules can be enabled at once and their functions combined to implement complex behavior

- I/O cache: I/O caching

- Read Ahead: kernel file read-ahead

- Distribute/Stripe: distribution and striping

- GigE: gigabit network / gigabit interface

- TCP/IP: network protocol

- InfiniBand: network protocol. TCP/IP retransmits lost packets, which can slow communication down, whereas InfiniBand uses a credit-based flow-control mechanism to guarantee connection integrity

- RDMA: a data transfer protocol responsible for moving data. Its purpose is to reduce the latency of data processing between client and server during transfer

Reading the architecture diagram:

The top part is the client, the middle is the network layer, and the bottom part is the server.

- Multiple functional modules are wrapped together into a stack to implement complex functions.

- Requests are then exchanged between the client and the server. Because the two systems may not be compatible, POSIX is used to resolve this: the client's commands pass through the POSIX layer before they are executed on the server.

1.5 GlusterFS workflow

(1) A client or application accesses data through the GlusterFS mount point.

(2) The Linux kernel receives the request through the VFS API and processes it.

(3) VFS hands the data to the FUSE kernel file system (FUSE registers itself with the system as an actual file system), and FUSE passes the data to the GlusterFS client through the /dev/fuse device file. FUSE can be thought of as a proxy.

(4) The GlusterFS client receives the data and processes it according to its configuration file.

(5) After processing, the GlusterFS client sends the data over the network to the remote GlusterFS server, which writes it to the server's storage device.

(6) The server passes the data to the VFS pseudo file system, VFS forwards it for storage, and the data finally lands on EXT3.

1.6 How the back end locates files

1.6.1 Elastic hash algorithm

The elastic hash algorithm is a concrete implementation of the Davies-Meyer algorithm. Hashing yields a value in the 32-bit integer range. If a logical volume has N bricks, the 32-bit range is divided into N contiguous subranges, and each subrange corresponds to one brick.
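The range split described above can be sketched in a few lines of shell. This is only an illustration under stated assumptions: the function names are hypothetical, the ranges are equal-sized, and `cksum` (a CRC32) stands in for the hash because it also produces a 32-bit value. It is not the Davies-Meyer hash that GlusterFS actually uses.

```bash
#!/bin/bash
# brick_for_hash HASH N
# Splits [0, 2^32) into N contiguous, equal-width ranges and prints the
# zero-based index of the range (brick) that HASH falls into.
brick_for_hash() {
    hash=$1
    bricks=$2
    width=$(( (4294967296 + bricks - 1) / bricks ))   # ceil(2^32 / N)
    echo $(( hash / width ))
}

# brick_for_file NAME N
# Hashes a file name with cksum (a stand-in, NOT the real GlusterFS hash)
# and maps the result to a brick index.
brick_for_file() {
    name_hash=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
    brick_for_hash "$name_hash" "$2"
}

# Show where five example file names would land on a two-brick volume.
for f in demo1.log demo2.log demo3.log demo4.log demo5.log; do
    echo "$f -> brick $(brick_for_file "$f" 2)"
done
```

Because the brick is computed from the name alone, any client can find a file without asking a central index, which is the point of the design.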

1.6.2 Advantages of the elastic hash algorithm

It keeps data evenly distributed across the bricks.

It removes the dependency on a metadata server, and with it the single point of failure and the access bottleneck.

1.7 GlusterFS volume types

GlusterFS supports seven volume types: distributed, striped, replicated, distributed striped, distributed replicated, striped replicated, and distributed striped replicated.

Distributed volume (the default): files are distributed across all brick servers by the hash algorithm. This type is the foundation of GFS. Whole files are hashed to different bricks, so it really only expands disk space and provides no fault tolerance. It is file-level RAID 0.

Striped volume (Stripe volume): similar to RAID 0. Files are split into data blocks and distributed round-robin across multiple brick servers. Storage is block-based, large files are supported, and the larger the file, the more efficient the reads.

Replicated volume (Replica volume): files are synchronized to multiple bricks so that several copies exist. It is file-level RAID 1 and is fault tolerant. Because the data is available on multiple bricks, read performance improves considerably, but write performance drops.

Distributed striped volume (Distribute Stripe volume): the number of brick servers is a multiple of the stripe count (the number of bricks each file's blocks are spread over). It combines the characteristics of distributed and striped volumes.

Distributed replicated volume (Distribute Replica volume): the number of brick servers is a multiple of the replica count (the number of copies of the data). It combines the characteristics of distributed and replicated volumes.

Striped replicated volume (Stripe Replica volume): similar to RAID 10. It combines the characteristics of striped and replicated volumes.

Distributed striped replicated volume (Distribute Stripe Replica volume): a composite of the three basic types, usually used for MapReduce-like applications.
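The multiples rule above decides which of the seven types a given brick layout produces. The sketch below encodes that rule as a hypothetical helper, `volume_type`, written for this article (it is not a gluster command): the brick count must be a multiple of stripe count times replica count, and a volume becomes "distributed" as soon as there are more bricks than one stripe/replica group needs.

```bash
#!/bin/bash
# volume_type BRICKS STRIPE REPLICA
# STRIPE and REPLICA are 1 when the feature is not used.
# Prints the resulting volume type, or fails when the counts do not fit.
volume_type() {
    bricks=$1
    stripe=$2
    replica=$3
    group=$(( stripe * replica ))     # bricks needed for one full set
    if [ $(( bricks % group )) -ne 0 ]; then
        echo "invalid: brick count must be a multiple of $group"
        return 1
    fi
    name=""
    if [ "$bricks" -gt "$group" ]; then name="distributed"; fi
    if [ "$stripe" -gt 1 ]; then name="${name:+$name }striped"; fi
    if [ "$replica" -gt 1 ]; then name="${name:+$name }replicated"; fi
    if [ -z "$name" ]; then name="distributed"; fi
    echo "$name"
}

volume_type 2 1 1    # two bricks, no stripe or replica
volume_type 4 2 1    # four bricks, stripe 2
volume_type 4 1 2    # four bricks, replica 2
```

The three calls correspond to the dis-volume, dis-stripe, and dis-rep layouts used in the deployment section.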

2 GFS deployment

2.1 Cluster environment

| Node | Host name / IP | Disk | Mount point |
| --- | --- | --- | --- |
| Node1 | node1 / 192.168.16.16 | /dev/sdb1 | /data/sdb1 |
| | | /dev/sdc1 | /data/sdc1 |
| | | /dev/sdd1 | /data/sdd1 |
| | | /dev/sde1 | /data/sde1 |
| Node2 | node2 / 192.168.16.18 | /dev/sdb1 | /data/sdb1 |
| | | /dev/sdc1 | /data/sdc1 |
| | | /dev/sdd1 | /data/sdd1 |
| | | /dev/sde1 | /data/sde1 |
| Node3 | node3 / 192.168.16.20 | /dev/sdb1 | /data/sdb1 |
| | | /dev/sdc1 | /data/sdc1 |
| | | /dev/sdd1 | /data/sdd1 |
| | | /dev/sde1 | /data/sde1 |
| Node4 | node4 / 192.168.16.22 | /dev/sdb1 | /data/sdb1 |
| | | /dev/sdc1 | /data/sdc1 |
| | | /dev/sdd1 | /data/sdd1 |
| | | /dev/sde1 | /data/sde1 |

Client node: 192.168.16.24

1. First, add four disks to each node. This is only an experiment, so they do not need to be large.

2. Then restart the servers and get ready to deploy.

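2.2 Change the node names

# hostname sets the name for the running system only; su - opens a new login shell so the prompt shows it

node1 (192.168.16.16)

hostname node1

su -

node2 (192.168.16.18)

hostname node2

su -

node3 (192.168.16.20)

hostname node3

su -

node4 (192.168.16.22)

hostname node4

su -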

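2.3 Partition and mount the disks on each node

Run on all nodes (node1 is used as the example here).

# Stop the firewall and switch SELinux to permissive mode

systemctl stop firewalld

setenforce 0

vim /opt/fdisk.sh

#!/bin/bash

# Find every disk from sdb onward

NEWDEV=$(ls /dev/sd* | grep -o 'sd[b-z]' | uniq)

for VAR in $NEWDEV

do

# Create one primary partition that spans the whole disk

echo -e "n\np\n\n\n\nw\n" | fdisk /dev/$VAR &> /dev/null

# Format the partition as XFS and create its mount point

mkfs.xfs /dev/${VAR}1 &> /dev/null

mkdir -p /data/${VAR}1 &> /dev/null

# Register the mount in /etc/fstab

echo "/dev/${VAR}1 /data/${VAR}1 xfs defaults 0 0" >> /etc/fstab

done

# Mount everything listed in /etc/fstab

mount -a &> /dev/null

# Save and quit (:wq), then make the script executable and run it

chmod +x /opt/fdisk.sh

cd /opt/

./fdisk.sh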
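One thing to watch with the script above: every run appends to /etc/fstab again, so running it twice leaves duplicate entries. A minimal sketch of an idempotent append follows, assuming a hypothetical helper named `add_fstab_entry`. The demonstration writes to a scratch file rather than the real /etc/fstab.

```bash
#!/bin/bash
# add_fstab_entry FILE DEVICE MOUNTPOINT FSTYPE
# Appends the entry only when the exact same line is not already in FILE.
add_fstab_entry() {
    entry="$2 $3 $4 defaults 0 0"
    if grep -qxF "$entry" "$1" 2>/dev/null; then
        return 0                      # already present, nothing to do
    fi
    echo "$entry" >> "$1"
}

# Demonstration against a scratch file.
FSTAB_DEMO=$(mktemp)
add_fstab_entry "$FSTAB_DEMO" /dev/sdb1 /data/sdb1 xfs
add_fstab_entry "$FSTAB_DEMO" /dev/sdb1 /data/sdb1 xfs   # second call adds nothing
cat "$FSTAB_DEMO"
rm -f "$FSTAB_DEMO"
```

In the loop of fdisk.sh, the `echo ... >> /etc/fstab` line could be swapped for a call such as `add_fstab_entry /etc/fstab /dev/${VAR}1 /data/${VAR}1 xfs`.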

2.4 Configure the /etc/hosts file

# Using node1 as the example

echo "192.168.16.16 node1" >> /etc/hosts

echo "192.168.16.18 node2" >> /etc/hosts

echo "192.168.16.20 node3" >> /etc/hosts

echo "192.168.16.22 node4" >> /etc/hosts

2.5 Install and start GlusterFS from a local repository (on all nodes)

# Upload gfsrepo.zip to /opt (here with rz) and unpack it

cd /opt

ls # shows: fdisk.sh rh

rz -E

rz waiting to receive.

ls # shows: fdisk.sh gfsrepo.zip rh

unzip gfsrepo.zip

# Move the existing repo files out of the way

cd /etc/yum.repos.d/

ls # shows: local.repo repos.bak

mkdir -p repo.bak

mv *.repo repo.bak

# Define a local repository that points at the unpacked directory

vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

# Save and quit (:wq)

yum clean all && yum makecache

#yum -y install centos-release-gluster # To install from the official YUM source instead, point directly at the online repository

yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

systemctl start glusterd.service

systemctl enable glusterd.service

systemctl status glusterd.service

2.6 Add the nodes to the trusted storage pool

Add the nodes to the trusted storage pool. This only needs to be done on one node; node1 is used again here.

gluster peer probe node1

gluster peer probe node2

gluster peer probe node3

gluster peer probe node4

# Check the cluster status on each node

gluster peer status
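Reading the peer status by eye works for four nodes, but a quick count is handy in scripts. The sketch below assumes the usual `gluster peer status` layout, where each healthy peer has a line reading `State: Peer in Cluster (Connected)`; the helper name `count_connected_peers` and the sample text are illustrative, so check the pattern against the output of your own version.

```bash
#!/bin/bash
# count_connected_peers
# Reads `gluster peer status` output on stdin and prints how many peers
# report the connected state.
count_connected_peers() {
    grep -c 'State: Peer in Cluster (Connected)'
}

# Demonstration with sample text shaped like the command's output.
count_connected_peers <<'EOF'
Number of Peers: 2

Hostname: node2
Uuid: 00000000-0000-0000-0000-000000000002
State: Peer in Cluster (Connected)

Hostname: node3
Uuid: 00000000-0000-0000-0000-000000000003
State: Peer in Cluster (Disconnected)
EOF
```

On a real node the call would be `gluster peer status | count_connected_peers`. With four nodes in the pool, each node should list three connected peers, since a node does not list itself.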

2.7 Create volumes according to the plan

Create the volumes according to the following plan:

| Volume name | Volume type | Bricks |
| --- | --- | --- |
| dis-volume | Distributed volume | node1(/data/sdb1), node2(/data/sdb1) |
| stripe-volume | Striped volume | node1(/data/sdc1), node2(/data/sdc1) |
| rep-volume | Replicated volume | node3(/data/sdb1), node4(/data/sdb1) |
| dis-stripe | Distributed striped volume | node1(/data/sdd1), node2(/data/sdd1), node3(/data/sdd1), node4(/data/sdd1) |
| dis-rep | Distributed replicated volume | node1(/data/sde1), node2(/data/sde1), node3(/data/sde1), node4(/data/sde1) |

2.7.1 Create the distributed volume

# No type is specified, so a distributed volume is created by default

gluster volume create dis-volume node1:/data/sdb1 node2:/data/sdb1 force # force: create the volume even if gluster warns about the brick location

gluster volume list # List the volumes that currently exist

gluster volume start dis-volume # Start the new distributed volume

gluster volume info dis-volume # Show information about the distributed volume

2.7.2 Create the striped volume

# The type is stripe with a value of 2, followed by 2 brick servers, so this creates a striped volume

gluster volume create stripe-volume stripe 2 node1:/data/sdc1 node2:/data/sdc1 force

gluster volume start stripe-volume

gluster volume info stripe-volume

2.7.3 Create the replicated volume

# The type is replica with a value of 2, followed by 2 brick servers, so this creates a replicated volume

gluster volume create rep-volume replica 2 node3:/data/sdb1 node4:/data/sdb1 force

gluster volume start rep-volume

gluster volume info rep-volume

2.7.4 Create the distributed striped volume

# The type is stripe with a value of 2, followed by 4 brick servers (twice the stripe count), so this creates a distributed striped volume

gluster volume create dis-stripe stripe 2 node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1 force

gluster volume start dis-stripe

gluster volume info dis-stripe

2.7.5 Create the distributed replicated volume

# The type is replica with a value of 2, followed by 4 brick servers (twice the replica count), so this creates a distributed replicated volume

gluster volume create dis-rep replica 2 node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1 force

gluster volume start dis-rep

gluster volume info dis-rep

gluster volume list # List all current volumes
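The five create commands above all follow one pattern: a volume name, an optional type keyword with its count, and one `node:directory` brick per node. As a sketch, the hypothetical helper `volume_create_cmd` below assembles the command line and only prints it (a dry run), so the result can be checked before anything is run as root.

```bash
#!/bin/bash
# volume_create_cmd NAME KIND COUNT BRICK_DIR NODE...
# KIND is "stripe", "replica", or "" for a plain distributed volume
# (COUNT is ignored when KIND is empty). Prints the command, does not run it.
volume_create_cmd() {
    name=$1
    kind=$2
    count=$3
    dir=$4
    shift 4
    cmd="gluster volume create $name"
    if [ -n "$kind" ]; then
        cmd="$cmd $kind $count"
    fi
    for node in "$@"; do
        cmd="$cmd $node:$dir"
    done
    echo "$cmd force"
}

volume_create_cmd dis-volume "" "" /data/sdb1 node1 node2
volume_create_cmd dis-rep replica 2 /data/sde1 node1 node2 node3 node4
```

Printing first and running second keeps a typo in a brick path from turning into a half-created volume.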

2.8 Deploy Gluster client (192.168.16.24)

2.8.1 Install client software

# Turn off firewall

systemctl stop firewalld

setenforce 0

# Upload the gfsrepo.zip package to the /opt directory

cd /opt

unzip gfsrepo.zip

cd /etc/yum.repos.d/

mkdir repo.bak

mv *.repo repo.bak

vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

# Save and quit (:wq)

yum clean all && yum makecache

yum -y install glusterfs glusterfs-fuse

2.8.2 Create the mount directories

mkdir -p /test/{dis,stripe,rep,dis_stripe,dis_rep}

ls /test

echo "192.168.16.16 node1" >> /etc/hosts

echo "192.168.16.18 node2" >> /etc/hosts

echo "192.168.16.20 node3" >> /etc/hosts

echo "192.168.16.22 node4" >> /etc/hosts

2.8.3 Mount the Gluster file systems

# Temporary mount

mount.glusterfs node1:dis-volume /test/dis

mount.glusterfs node1:stripe-volume /test/stripe

mount.glusterfs node1:rep-volume /test/rep

mount.glusterfs node1:dis-stripe /test/dis_stripe

mount.glusterfs node1:dis-rep /test/dis_rep

df -Th

# Permanent mount: add the following lines to /etc/fstab

vim /etc/fstab

node1:dis-volume /test/dis glusterfs defaults,_netdev 0 0

node1:stripe-volume /test/stripe glusterfs defaults,_netdev 0 0

node1:rep-volume /test/rep glusterfs defaults,_netdev 0 0

node1:dis-stripe /test/dis_stripe glusterfs defaults,_netdev 0 0

node1:dis-rep /test/dis_rep glusterfs defaults,_netdev 0 0
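The five fstab lines above differ only in the volume name and the mount point derived from it. A small sketch of generating them follows, with two hypothetical helpers (`mount_point_for` and `fstab_line`) that mirror the naming used in this article: drop a trailing `-volume` and turn the remaining dashes into underscores.

```bash
#!/bin/bash
# mount_point_for VOLUME
# dis-volume -> /test/dis, dis-stripe -> /test/dis_stripe
mount_point_for() {
    echo "/test/$(echo "${1%-volume}" | tr '-' '_')"
}

# fstab_line SERVER VOLUME
# Prints one /etc/fstab entry for a GlusterFS client mount.
fstab_line() {
    echo "$1:$2 $(mount_point_for "$2") glusterfs defaults,_netdev 0 0"
}

# Print the entries for every volume in the plan, without touching /etc/fstab.
for vol in dis-volume stripe-volume rep-volume dis-stripe dis-rep; do
    fstab_line node1 "$vol"
done
```

The output can be reviewed and then appended to /etc/fstab by hand.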

2.9 Test the Gluster file system

2.9.1 Write files to the volumes (on the client)

cd /opt

dd if=/dev/zero of=/opt/demo1.log bs=1M count=40

dd if=/dev/zero of=/opt/demo2.log bs=1M count=40

dd if=/dev/zero of=/opt/demo3.log bs=1M count=40

dd if=/dev/zero of=/opt/demo4.log bs=1M count=40

dd if=/dev/zero of=/opt/demo5.log bs=1M count=40

ls -lh /opt

cp /opt/demo* /test/dis

cp /opt/demo* /test/stripe/

cp /opt/demo* /test/rep/

cp /opt/demo* /test/dis_stripe/

cp /opt/demo* /test/dis_rep/

cd /test/

tree

.

├── dis

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── dis_rep

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── dis_stripe

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── rep

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

└── stripe

    ├── demo1.log

    ├── demo2.log

    ├── demo3.log

    ├── demo4.log

    └── demo5.log

5 directories, 25 files
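The tree listing confirms that the files exist on each mount, but not that their contents arrived intact. A minimal sketch of a byte-for-byte check with `cmp` follows; `verify_copies` is a hypothetical helper that assumes the demo*.log naming used above.

```bash
#!/bin/bash
# verify_copies SRC_DIR DST_DIR
# Compares every demo*.log in SRC_DIR with the file of the same name in
# DST_DIR. Prints one line per file and returns non-zero on any mismatch.
verify_copies() {
    status=0
    for src in "$1"/demo*.log; do
        dst="$2/$(basename "$src")"
        if cmp -s "$src" "$dst"; then
            echo "OK   $dst"
        else
            echo "DIFF $dst"
            status=1
        fi
    done
    return $status
}

# Example (run on the client after the cp commands above):
#   verify_copies /opt /test/dis_rep
```

A file that is missing on the destination is reported as DIFF, so the same check is useful again during the redundancy tests.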

2.9.2 View file distribution

# Distributed volume (node1:/dev/sdb1, node2:/dev/sdb1): run on node1 and node2

ls -lh /data/sdb1

# Striped volume (node1:/dev/sdc1, node2:/dev/sdc1): run on node1 and node2

ls -lh /data/sdc1

# Replicated volume (node3:/dev/sdb1, node4:/dev/sdb1): run on node3 and node4

ls -lh /data/sdb1

# Distributed striped volume (node1:/dev/sdd1, node2:/dev/sdd1, node3:/dev/sdd1, node4:/dev/sdd1): run on all four nodes

ls -lh /data/sdd1

# Distributed replicated volume (node1:/dev/sde1, node2:/dev/sde1, node3:/dev/sde1, node4:/dev/sde1): run on all four nodes

ls -lh /data/sde1

2.10 Redundancy testing

# Shut down node2, or stop its glusterd service, to simulate a failure (run on node2)

systemctl stop glusterd.service

Then check on the client (192.168.16.24) whether the files are still intact.

2.10.1 Distributed volume

ll /test/dis/ # demo5.log is missing: it was stored on node2, and a distributed volume has no redundancy

2.10.2 Striped, distributed striped, and distributed replicated volumes

ll /test/stripe/ # Cannot be accessed: striped volumes have no redundancy

ll /test/dis_stripe/ # Cannot be accessed: distributed striped volumes have no redundancy

ll /test/dis_rep/ # Accessible: distributed replicated volumes are redundant

2.10.3 Replicated volume (bricks on node3 and node4)

# Shut down node2 and node4, then check the files on the client

ls -l /test/rep/ # Replicated volume: normal on the client, the data is present

ll /test/dis_stripe/ # Distributed striped volume: no data on the client, no redundancy

ll /test/dis_rep/ # Distributed replicated volume: normal on the client, the data is present

In short, the volume types that replicate data keep it comparatively safe.
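Checking each mount by hand gets tedious when the test is repeated, and a mount whose bricks are gone may hang rather than fail. As a sketch, the hypothetical helper `check_dir` below lists a directory with a time limit and reports the result. It assumes the `timeout` utility from GNU coreutils is installed, and the 5-second limit is an arbitrary choice.

```bash
#!/bin/bash
# check_dir DIRECTORY
# Prints "ok DIRECTORY" when the directory can be listed within 5 seconds,
# otherwise "FAILED DIRECTORY".
check_dir() {
    if timeout 5 ls "$1" >/dev/null 2>&1; then
        echo "ok $1"
    else
        echo "FAILED $1"
    fi
}

# Example (run on the client after stopping a node):
#   for d in /test/dis /test/stripe /test/rep /test/dis_stripe /test/dis_rep; do
#       check_dir "$d"
#   done
```

Note that an "ok" only means the directory can be listed. A distributed volume with a node down still lists, just with files missing, so compare the file counts as well.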

2.11 Other maintenance commands

1. List the GlusterFS volumes

gluster volume list

2. Show information for all volumes

gluster volume info

3. Show the status of all volumes

gluster volume status

4. Stop a volume

gluster volume stop dis-stripe

5. Delete a volume. Note: the volume must be stopped first, and no host in the trusted pool may be down, otherwise the deletion fails

gluster volume delete dis-stripe

6. Set access control for a volume

# Deny only

gluster volume set dis-rep auth.reject 192.168.16.100

# Allow only

gluster volume set dis-rep auth.allow 192.168.16.* # Every IP address in the 192.168.16.0 network segment may access the dis-rep volume (the distributed replicated volume)
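To see which clients a wildcard such as `192.168.16.*` covers, the pattern can be tried against sample addresses with the shell's own glob matching. This only illustrates the wildcard idea and is not how gluster evaluates the option internally; `ip_matches` is a hypothetical helper.

```bash
#!/bin/bash
# ip_matches IP PATTERN
# Succeeds when IP matches the shell-style PATTERN (for example 192.168.16.*).
ip_matches() {
    case "$1" in
        $2) return 0 ;;
        *)  return 1 ;;
    esac
}

for ip in 192.168.16.24 192.168.16.100 192.168.17.24; do
    if ip_matches "$ip" '192.168.16.*'; then
        echo "$ip allowed"
    else
        echo "$ip not covered"
    fi
done
```

Quoting the pattern when calling the helper matters: an unquoted `192.168.16.*` could be expanded by the shell against file names in the current directory before gluster or the helper ever sees it.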

3 Summary

1. GlusterFS features

- Scalability and high performance: the scale-out architecture expands horizontally to increase storage capacity, and the elastic hash (ElasticHash) locates data without an index lookup to find the source server.

- High availability: GlusterFS can keep multiple copies of files, so file data is not lost and remains easy to read.

- Global unified namespace

- Elastic volume management

- Based on standard protocols

2. GlusterFS terminology

- Brick (storage block server): the server that actually stores user data

- Volume: comparable to a partition of a local file system

- FUSE: file system in user space (compare EXT4), a pseudo file system on the client side

- VFS (virtual file system): the kernel's virtual file system. The user submits a request to VFS, VFS hands it to FUSE, FUSE passes it to the GFS client, and the client finally sends it to the remote storage

- Glusterd (service): the process that runs on the storage nodes (clients run the gluster client). Throughout the use of GFS, all exchanges take place between the gluster client and glusterd

3. Elastic hash algorithm

A hash algorithm produces a fixed-length value (here, a 32-bit integer).

Different inputs usually give different results.

Hashing is used to avoid the complexity of indexing and locating data in a distributed file system.

4. The seven volume types GFS supports

Distributed volume (the default)

- Expands disk space

- No fault tolerance; file-level RAID 0

Striped volume (Stripe volume)

- Similar to RAID 0. Storage is block-based, large files are supported, and the larger the file, the more efficient the reads

Replicated volume (Replica volume)

- File-level RAID 1, fault tolerant

- Read performance improves considerably, but write performance drops

Distributed striped volume

- Combines the functions of distributed and striped volumes

- Mainly used for large-file access

- Needs at least 4 servers

Distributed replicated volume

- Combines distributed and replicated volumes

- Used when redundancy is required

Striped replicated volume (Stripe Replica volume)

- Similar to RAID 10

- Combines the characteristics of striped and replicated volumes

Distributed striped replicated volume (Distribute Stripe Replica volume)

- A composite of the three basic types, usually used for MapReduce-like applications

Copyright notice

This article was written by [[email protected]]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/179/202206272200410706.html

边栏推荐

- Alchemy (4): mental model of programmers

- plot_ Model error: pydot and graphviz are not installed

- 什么是cookie,以及v-htm的安全性隐患

- #796 Div.2 F. Sanae and Giant Robot set *

- Learning notes for qstringlist

- plot_model报错:没有安装pydot, graphviz

- Alchemy (1): identify prototype, demo and MVP in project development

- #796 Div.2 C. Manipulating History 思维

- 吴恩达《机器学习》课程总结(14)_降维

- Hcip/hcie Routing & Switching / datacom Reference Dictionary Series (19) comprehensive summary of PKI knowledge points (public key infrastructure)

猜你喜欢

![[idea] idea formatting code skills](/img/06/38079517e901bc48dc4ca0f8cc63fe.jpg)

[idea] idea formatting code skills

Summary of wuenda's machine learning course (14)_ Dimensionality reduction

Sword finger offer 61 Shunzi in playing cards

![用两个栈实现队列[两次先进后出便是先进先出]](/img/de/07297816f1a44d41389bb45d012c80.png)

用两个栈实现队列[两次先进后出便是先进先出]

表单form 和 表单元素(input、select、textarea等)

Modern programming languages: zig

![软件工程作业设计(1): [个人项目] 实现一个日志查看页面](/img/95/0c3f0dde16d220ddecb5758a4c31e7.png)

软件工程作业设计(1): [个人项目] 实现一个日志查看页面

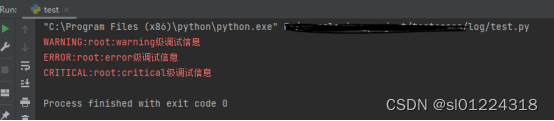

Logging log usage

Technical debt wall: a way to make technical debt visible and negotiable

LabVIEW continuous sampling and limited sampling mode

随机推荐

RNA-seq入门实战(一):上游数据下载、格式转化和质控清洗

SQL reported an unusual error, which confused the new interns

翻译(4): 文本自动完成的匹配规则

Customize MySQL connection pool

MYSQL的下载与配置安装

Mongodb- install a mongodb database locally on the windows computer

Sword finger offer 65 Add without adding, subtracting, multiplying, dividing

股市小白在网上股票开户安全吗?

Deployment and test of crtmp live video server

QStringList 的学习笔记

Leetcode 720. The longest word in the dictionary

Alchemy (1): identify prototype, demo and MVP in project development

Alchemy (9): simple but not simple, never-ending test -- always_ run

哪个证券公司炒股开户是比较好,更安全靠谱的

证券注册账户安全吗,会有风险吗?

用两个栈实现队列[两次先进后出便是先进先出]

Matlb| improved forward push back method for solving power flow of low voltage distribution network

【无标题】

Oracle数据库的启停

吴恩达《机器学习》课程总结(14)_降维