
Summary of Andrew Ng's Machine Learning Course (14): Dimensionality Reduction

2022-06-28 00:21:00 【51CTO】

Q1 Motivation one: data compression

Dimensionality reduction compresses the features. For example, two highly correlated features can be reduced to one dimension (2D to 1D), and three-dimensional data can be projected onto a plane (3D to 2D).

Likewise, 1000-dimensional data can be reduced to 100 dimensions, which reduces the memory footprint.

Q2 Motivation two: data visualization

For example, 50-dimensional data cannot be visualized directly; dimensionality reduction can bring it down to 2 dimensions so that it can be plotted.

The dimensionality reduction algorithm only reduces the number of dimensions; the meaning of the new features has to be worked out by ourselves.

Q3 The principal component analysis problem

(1) Problem formulation of principal component analysis:

The goal is to reduce n-dimensional data to k dimensions: find k vectors onto which to project the data so that the total projection error is minimized.
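Written as an optimization problem, the goal looks as follows. This is a sketch in the course's usual notation, assuming m training examples $x^{(i)}$, orthonormal directions $u^{(1)},\dots,u^{(k)}$, and writing $x^{(i)}_{\text{approx}}$ for the projection of $x^{(i)}$ onto the subspace they span:

```latex
\min_{u^{(1)},\dots,u^{(k)}} \; \frac{1}{m}\sum_{i=1}^{m}
  \left\lVert x^{(i)} - x^{(i)}_{\text{approx}} \right\rVert^{2},
\qquad
x^{(i)}_{\text{approx}} = \sum_{j=1}^{k} \left( \big(u^{(j)}\big)^{T} x^{(i)} \right) u^{(j)}
```

Each term in the sum is the squared length of the segment from a data point to its projection, which is exactly the "projection error" described above.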

(2) Comparison between principal component analysis and linear regression:

They are different algorithms. PCA minimizes the projection error, whereas linear regression minimizes the prediction error. PCA does not predict anything (no feature plays the role of a target y), whereas linear regression aims to predict an outcome.

In linear regression the error is measured by projecting perpendicular to the horizontal axis (vertically), whereas in PCA it is measured by projecting perpendicular to the fitted line (the red line in the course's figure).
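The difference between the two error measures can be made concrete for a single point. This is a minimal sketch assuming a line through the origin, y = w·x (as for mean-normalized data); the function names are illustrative and not taken from the course.

```python
import math


def vertical_error(x: float, y: float, w: float) -> float:
    """Linear regression's error: the vertical gap between y and the prediction w * x."""
    return abs(y - w * x)


def projection_error(x: float, y: float, w: float) -> float:
    """PCA's error: the orthogonal (shortest) distance from (x, y) to the line y = w * x."""
    return abs(y - w * x) / math.sqrt(1.0 + w * w)


if __name__ == "__main__":
    # Point (1, 3) against the line y = x: vertical gap 2, orthogonal distance sqrt(2).
    print(vertical_error(1.0, 3.0, 1.0))
    print(projection_error(1.0, 3.0, 1.0))
```

The factor 1/sqrt(1 + w²) depends on the slope, so minimizing the sum of one kind of error generally leads to a different line than minimizing the sum of the other.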

(3) PCA produces a new set of “principal component” vectors ranked by importance; keep as many of the leading (most important) ones as needed and omit the remaining dimensions.

(4) One advantage of PCA is that it depends entirely on the data: there are no parameters to set by hand, so the result does not depend on the user. This can also be seen as a disadvantage: if the user has prior knowledge about the data, there is no way to bring it in, so the result may not be what was wanted.

Q4 Principal component analysis algorithm

PCA reduces n dimensions to k dimensions as follows:

(1) Mean normalization: subtract each feature's mean and, if the features are on different scales, divide by the standard deviation;

(2) Compute the covariance matrix $\Sigma = \frac{1}{m}\sum_{i=1}^{m} x^{(i)} \left(x^{(i)}\right)^{T}$;

(3) Compute the eigenvectors of the covariance matrix.

In the course this is done with [U, S, V] = svd(Sigma). For the n × n matrix Σ, U is an n × n matrix whose columns are the directions that minimize the projection error with the data. Taking its first k columns gives an n × k matrix, denoted $U_{\text{reduce}}$, and the new feature vector is $z^{(i)} = U_{\text{reduce}}^{T} x^{(i)}$.
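The three steps above can be sketched without any dependencies. Assumptions: the data is a list of rows (m examples × n features); every feature is divided by its standard deviation; the course computes the eigenvectors with svd(Sigma), whereas this sketch swaps in power iteration with deflation, which is adequate for small matrices with well-separated eigenvalues but is not a production eigensolver. U_reduce is stored as a list of k direction vectors, so each entry of z(i) is one dot product. All function names are hypothetical.

```python
import math
import random


def mean_normalize(X):
    """Step (1): subtract each feature's mean and divide by its standard deviation."""
    m, n = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / m for j in range(n)]
    # Population standard deviation; fall back to 1.0 for a constant feature.
    sd = [math.sqrt(sum((row[j] - mu[j]) ** 2 for row in X) / m) or 1.0
          for j in range(n)]
    Xn = [[(row[j] - mu[j]) / sd[j] for j in range(n)] for row in X]
    return Xn, mu, sd


def covariance(X):
    """Step (2): Sigma = (1/m) * sum_i x(i) x(i)^T for already-normalized rows."""
    m, n = len(X), len(X[0])
    return [[sum(row[a] * row[b] for row in X) / m for b in range(n)]
            for a in range(n)]


def top_eigenvectors(S, k, iters=1000, seed=0):
    """Step (3): the k leading eigenvectors of a symmetric positive semidefinite matrix.

    Power iteration with deflation stands in for the course's svd(Sigma).
    """
    n = len(S)
    S = [row[:] for row in S]  # deflation modifies this copy, not the caller's matrix
    rng = random.Random(seed)
    vectors = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(c * c for c in v))
        v = [c / norm for c in v]
        lam = 0.0
        for _ in range(iters):
            w = [sum(S[a][b] * v[b] for b in range(n)) for a in range(n)]
            norm = math.sqrt(sum(c * c for c in w))
            if norm < 1e-12:  # no variance left in the remaining directions
                break
            v, lam = [c / norm for c in w], norm
        vectors.append(v)
        # Remove the component just found so the next pass converges to the next one.
        S = [[S[a][b] - lam * v[a] * v[b] for b in range(n)] for a in range(n)]
    return vectors


def pca(X, k):
    """Reduce the n-dimensional rows of X to k dimensions; returns Z, U_reduce, mu, sd."""
    Xn, mu, sd = mean_normalize(X)
    U_reduce = top_eigenvectors(covariance(Xn), k)  # k direction vectors of length n
    # z(i) = U_reduce^T x(i): one dot product per kept direction.
    Z = [[sum(u[j] * x[j] for j in range(len(x))) for u in U_reduce] for x in Xn]
    return Z, U_reduce, mu, sd
```

A quick sanity check: with two features, the normalized covariance is a correlation matrix [[1, r], [r, 1]], so for positively correlated features the first direction should come out as ±(1/√2, 1/√2).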

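Q5 Choosing the number of principal components

PCA minimizes the average squared projection error, $\frac{1}{m}\sum_{i=1}^{m}\lVert x^{(i)} - x^{(i)}_{\text{approx}}\rVert^{2}$, where $x^{(i)}_{\text{approx}}$ is the projection of $x^{(i)}$ onto the k-dimensional subspace. The total variation in the training set is $\frac{1}{m}\sum_{i=1}^{m}\lVert x^{(i)}\rVert^{2}$.

We want the ratio of the two to be as small as possible. For example, require

$$\frac{\frac{1}{m}\sum_{i=1}^{m}\lVert x^{(i)} - x^{(i)}_{\text{approx}}\rVert^{2}}{\frac{1}{m}\sum_{i=1}^{m}\lVert x^{(i)}\rVert^{2}} \le 0.01,$$

that is, 99% of the variance is retained, and choose the smallest k that satisfies this condition.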
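The selection rule can be sketched in a few lines, assuming the eigenvalues of the covariance matrix are already available in descending order (in the course they are the diagonal entries S_ii returned by svd(Sigma)). For mean-normalized data the ratio above equals 1 − Σ_{i≤k} S_ii / Σ_{i≤n} S_ii, so no reconstruction is needed; `choose_k` is a hypothetical helper name.

```python
def choose_k(eigenvalues, retain=0.99):
    """Smallest k whose leading eigenvalues retain at least `retain` of the total variance.

    `eigenvalues` are the variances along the principal directions, sorted in
    descending order (the diagonal of S from svd(Sigma) in the course).
    """
    total = sum(eigenvalues)
    kept = 0.0
    for k, lam in enumerate(eigenvalues, start=1):
        kept += lam
        # Same test as: average squared projection error / total variation <= 1 - retain
        if kept / total >= retain:
            return k
    return len(eigenvalues)  # guards against rounding just below 1.0 at the last step
```

Because the eigenvalues are only computed once, trying every k this way is far cheaper than rerunning PCA for each candidate k.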

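Q6 Reconstruction from the compressed representation

Dimensionality reduction: $z = U_{\text{reduce}}^{T} x$

Reconstruction (going back from the low-dimensional to the high-dimensional space): $x_{\text{approx}} = U_{\text{reduce}}\, z$. The reconstructed point lies in the projection subspace, so it approximates x rather than recovering it exactly.

In the course's schematic, the left panel shows dimensionality reduction and the right panel shows reconstruction.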
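Both formulas can be sketched directly. This assumes U_reduce is stored as a list of k orthonormal direction vectors (so U_reduce^T x is one dot product per direction) and that the training mean and standard deviation are applied before compressing and undone after reconstructing; the function names are illustrative.

```python
def compress(x, U_reduce, mu, sd):
    """z = U_reduce^T x_norm, with x normalized using the training mean and std."""
    xn = [(xj - m) / s for xj, m, s in zip(x, mu, sd)]
    return [sum(uj * xj for uj, xj in zip(u, xn)) for u in U_reduce]


def reconstruct(z, U_reduce, mu, sd):
    """x_approx = U_reduce z, mapped back to the original feature scale."""
    xn = [sum(zi * u[j] for zi, u in zip(z, U_reduce)) for j in range(len(mu))]
    return [xj * s + m for xj, s, m in zip(xn, sd, mu)]


if __name__ == "__main__":
    h = 0.5 ** 0.5
    U_reduce = [[h, h]]  # a single direction along the line x2 = x1
    mu, sd = [1.0, 2.0], [1.0, 1.0]
    # A point on the line survives the round trip (up to rounding)...
    print(reconstruct(compress([3.0, 4.0], U_reduce, mu, sd), U_reduce, mu, sd))
    # ...while a point off the line loses its perpendicular component.
    print(reconstruct(compress([2.0, 2.0], U_reduce, mu, sd), U_reduce, mu, sd))
```

The second call returns approximately [1.5, 2.5]: the component of the normalized point perpendicular to the kept direction is exactly what the compression discards.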

Q7 Advice for applying principal component analysis

Correct usage:

For 100 × 100 pixel images, i.e. 10,000 features, use PCA to compress them to 1000 dimensions and run the learning algorithm on the training set. At prediction time, apply the $U_{\text{reduce}}$ learned from the training set to map each test example x to z, then make the prediction.

Incorrect usage:

(1) Using PCA to deal with overfitting. PCA does not solve overfitting; use regularization instead.

(2) Making PCA a default part of the learning pipeline. Use the original features whenever possible, and consider PCA only when the algorithm runs too slowly or takes up too much memory.

Author: Your Rego

The copyright of this article belongs to the author. Reprints are welcome, but unless the author agrees otherwise, a link to the original article must be given on the reprinting page; otherwise the author reserves the right to pursue legal liability.

边栏推荐

- After a period of silence, I came out again~

- SQL reported an unusual error, which confused the new interns

- Chapter 2 integrated mp

- Mise en œuvre du pool de Threads: les sémaphores peuvent également être considérés comme de petites files d'attente

- [AI application] detailed parameters of Jetson Xavier nx

- Introduction to data warehouse

- [黑苹果系列] M910x完美黑苹果系统安装教程 – 2 制作系统U盘-USB Creation

- Matlb| optimal configuration of microgrid in distribution system based on complex network

- 【论文阅读|深读】SDNE:Structural Deep Network Embedding

- Transmitting and receiving antenna pattern

猜你喜欢

免费、好用、强大的开源笔记软件综合评测

![[PCL self study: pclplotter] pclplotter draws data analysis chart](/img/ca/db68d5fae392c7976bfc93d2107509.png)

[PCL self study: pclplotter] pclplotter draws data analysis chart

Flutter series: Transformers in flutter

炼金术(1): 识别项目开发中的ProtoType、Demo、MVP

![[读书摘要] 学校的英文阅读教学错在哪里?--经验主义和认知科学的PK](/img/7b/8b3619d7726fdaa58da46b0c8451a4.png)

[读书摘要] 学校的英文阅读教学错在哪里?--经验主义和认知科学的PK

NoSQL之Redis配置与优化

MySQL分表查询之Merge存储引擎实现

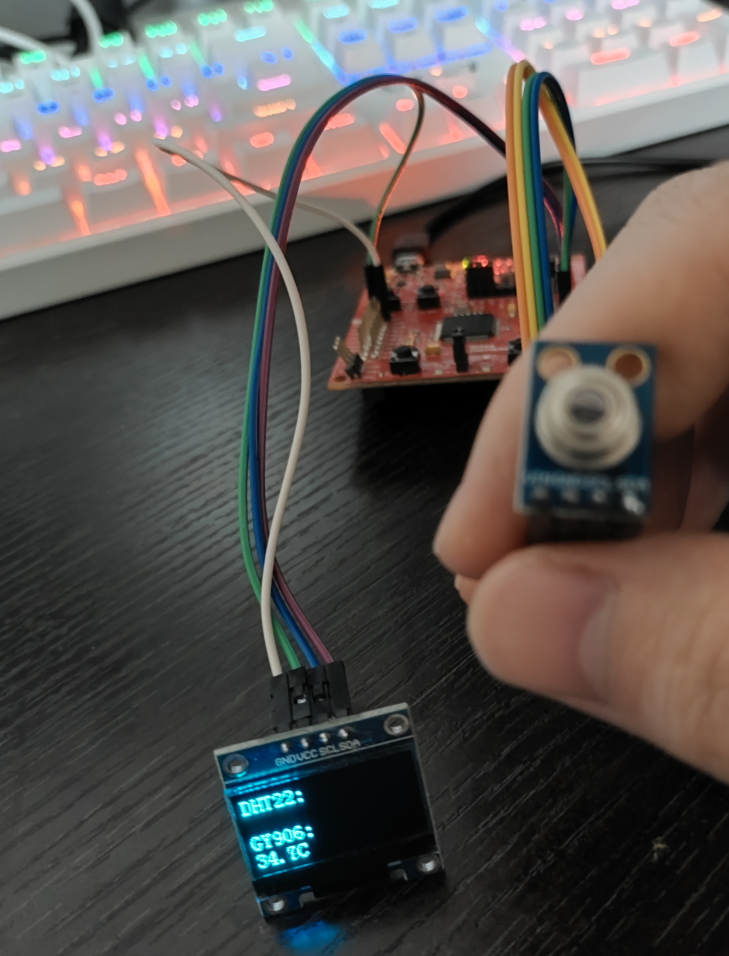

Msp430f5529 MCU reads gy-906 infrared temperature sensor

吴恩达《机器学习》课程总结(14)_降维

Hcip/hcie Routing & Switching / datacom Reference Dictionary Series (19) comprehensive summary of PKI knowledge points (public key infrastructure)

随机推荐

Mise en œuvre du pool de Threads: les sémaphores peuvent également être considérés comme de petites files d'attente

Matlab基本函数 length函数

[黑苹果系列] M910x完美黑苹果系统安装教程 – 2 制作系统U盘-USB Creation

Alchemy (8): parallel development and release

request对象、response对象、session对象

互联网业衍生出来了新的技术,新的模式,新的产业类型

Instructions for vivado FFT IP

【无标题】

Learning notes for qstringlist

剑指 Offer 65. 不用加减乘除做加法

表单form 和 表单元素(input、select、textarea等)

数据仓库入门介绍

技术的极限(11): 有趣的编程

Safe, fuel-efficient and environment-friendly camel AGM start stop battery is full of charm

[paper reading | deep reading] sdne:structural deep network embedding

A summer party

[AI application] detailed parameters of NVIDIA Tesla v100-pcie-32gb

MySQL enterprise parameter tuning practice sharing

Arduino UNO通过电容的直接检测实现简易触摸开关

Comprehensive evaluation of free, easy-to-use and powerful open source note taking software