Fraud detection and Titanic survival case studies

2022-07-24 14:20:00 【Strong fight】

Fraud detection case (imbalanced samples, standardization, cross-validation, model evaluation)

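import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
# `data` is assumed to be a DataFrame loaded beforehand, with a binary 'Class' column (1 = fraud)
# Plot the class distribution
count_classes = data['Class'].value_counts().sort_index()
count_classes.plot(kind="bar")
plt.title("Fraud class histogram")
plt.xlabel("Class")
plt.ylabel("Frequency")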

# Standardize the Amount column
from sklearn.preprocessing import StandardScaler
# reshape(-1, 1): the -1 lets NumPy infer the number of rows
data['normAmount'] = StandardScaler().fit_transform(data['Amount'].values.reshape(-1, 1)).ravel()
# Drop the columns that are no longer needed (drop takes a list of labels)
data = data.drop(["Time", "Amount"], axis=1)
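To make the standardization step concrete, here is a minimal sketch of the same z-score computation written by hand with the standard library. It assumes the population standard deviation (which is what `StandardScaler` uses by default); the `standardize` helper and the sample amounts are made up for illustration.

```python
import statistics

def standardize(values):
    """Return z-scores: (x - mean) / population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

# Made-up transaction amounts on very different scales
amounts = [1.0, 20.0, 300.0, 4000.0]
scaled = standardize(amounts)
print([round(s, 3) for s in scaled])
```

After scaling, the values have mean 0 and standard deviation 1, so a large raw amount no longer dominates the other features.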

# Undersampling strategy
X = data.loc[:, data.columns != 'Class']
y = data.loc[:, data.columns == 'Class']
# Count the fraud records (Class == 1) and collect their indices
number_records_fraud = len(data[data.Class == 1])
fraud_indices = np.array(data[data.Class == 1].index)
normal_indices = data[data.Class == 0].index
# Randomly draw, without replacement, as many normal (Class == 0) indices as there are fraud records
random_normal_indices = np.random.choice(normal_indices, number_records_fraud, replace=False)
random_normal_indices = np.array(random_normal_indices)
# Combine the indices of both classes
under_sample_indices = np.concatenate([fraud_indices, random_normal_indices])
# The collected indices are index labels, so select rows with .loc
under_sample_data = data.loc[under_sample_indices, :]
X_undersample = under_sample_data.loc[:, under_sample_data.columns != 'Class']
y_undersample = under_sample_data.loc[:, under_sample_data.columns == 'Class']
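The undersampling idea can be checked on a tiny synthetic label list. This is only a sketch with the standard library: the `undersample` helper, the seed, and the 95/5 class split are assumptions for illustration, not part of the original notes.

```python
import random

def undersample(labels, seed=0):
    """Return row indices: every minority (1) row plus an equal-sized
    random draw, without replacement, of majority (0) rows."""
    rng = random.Random(seed)
    fraud = [i for i, y in enumerate(labels) if y == 1]
    normal = [i for i, y in enumerate(labels) if y == 0]
    sampled_normal = rng.sample(normal, len(fraud))
    return fraud + sampled_normal

# Made-up imbalanced labels: 95 normal rows, 5 fraud rows
labels = [0] * 95 + [1] * 5
idx = undersample(labels)
balanced = [labels[i] for i in idx]
print(balanced.count(0), balanced.count(1))
```

The result is balanced, at the cost of discarding most of the majority-class rows.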

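# Split the data into training and test sets
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
# Split the undersampled data as well; these variables are used below (the same 0.3 split is an assumption)
X_train_undersample, X_test_undersample, y_train_undersample, y_test_undersample = train_test_split(X_undersample, y_undersample, test_size=0.3, random_state=0)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.metrics import confusion_matrix, recall_score, classification_report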

Confusion matrix

# The threshold can be chosen freely; the higher it is, the stricter the model is about predicting class 1
# solver='liblinear' is one of the solvers that supports the L1 penalty
lr = LogisticRegression(C=0.01, penalty='l1', solver='liblinear')
lr.fit(X_train_undersample, y_train_undersample.values.ravel())
# Class probabilities predicted by the model
y_pred_undersample_proba = lr.predict_proba(X_test_undersample)
thresholds = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
plt.figure(figsize=(10, 10))
np.set_printoptions(precision=2)
class_names = [0, 1]
for j, i in enumerate(thresholds, start=1):
    # Predict class 1 only when its probability exceeds the threshold
    y_test_predictions_high_recall = y_pred_undersample_proba[:, 1] > i
    plt.subplot(3, 3, j)
    cnf_matrix = confusion_matrix(y_test_undersample, y_test_predictions_high_recall)
    # plot_confusion_matrix is a user-defined plotting helper that is not shown in this post
    plot_confusion_matrix(cnf_matrix, classes=class_names, title='Threshold > %s' % i)
plt.show()
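The effect of the threshold on the confusion matrix can be seen without any plotting. The sketch below uses made-up labels and probabilities, and the `confusion_counts` helper is hypothetical; it simply counts the four cells for a given threshold.

```python
def confusion_counts(y_true, proba, threshold):
    """Return (tn, fp, fn, tp) when class 1 is predicted for proba > threshold."""
    pred = [1 if p > threshold else 0 for p in proba]
    tn = sum(1 for t, p in zip(y_true, pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, pred) if t == 1 and p == 0)
    tp = sum(1 for t, p in zip(y_true, pred) if t == 1 and p == 1)
    return tn, fp, fn, tp

# Made-up true labels and predicted probabilities of class 1
y_true = [0, 0, 0, 1, 1, 1]
proba = [0.05, 0.35, 0.65, 0.45, 0.75, 0.95]

for threshold in (0.1, 0.5, 0.9):
    tn, fp, fn, tp = confusion_counts(y_true, proba, threshold)
    recall = tp / (tp + fn)
    print(threshold, (tn, fp, fn, tp), round(recall, 2))
```

Raising the threshold trades recall for fewer false positives: in this toy data the false positives drop from 2 to 0 while the missed fraud cases rise from 0 to 2.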

Oversampling strategy:

# Generate a balanced training set with the SMOTE algorithm
from imblearn.over_sampling import SMOTE
oversampler = SMOTE(random_state=0)
# fit_resample replaces fit_sample, which newer imbalanced-learn releases removed
os_features, os_labels = oversampler.fit_resample(features_train, labels_train)
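For intuition about what SMOTE generates, here is a simplified sketch of its core step: pick a minority sample, pick one of its nearest minority neighbours, and create a new point somewhere on the line segment between them. This is not the imbalanced-learn implementation; the `smote_sketch` helper, `k=2`, and the sample points are assumptions for illustration.

```python
import math
import random

def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between a random
    minority point and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic

# Made-up minority-class points in two dimensions
minority = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (3.0, 3.0)]
new_points = smote_sketch(minority, n_new=6)
print(len(new_points))
```

Because every synthetic point lies between two existing minority samples, oversampling adds variety rather than exact duplicates. It should be applied to the training set only, so that the test set keeps the original class balance.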

Titanic survival prediction (missing-value imputation, mapping strings to numbers, feature extraction, model ensembling)

Using linear regression

import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold
predictors = ["Pclass", "Sex", "Age", "SibSp", "Parch"]
alg = LinearRegression()
# Unshuffled folds keep the concatenated predictions in the original row order
kf = KFold(n_splits=3)
predictions = []
for train, test in kf.split(titanic):
    train_predictors = titanic[predictors].iloc[train, :]
    train_target = titanic["Survived"].iloc[train]
    alg.fit(train_predictors, train_target)
    test_predictions = alg.predict(titanic[predictors].iloc[test, :])
    predictions.append(test_predictions)
predictions = np.concatenate(predictions, axis=0)
# Map the regression outputs to class labels
predictions[predictions > .5] = 1
predictions[predictions <= .5] = 0
# Accuracy is the fraction of predictions that match the true labels
accuracy = (predictions == titanic["Survived"].values).mean()
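Concatenating the per-fold predictions and comparing them with the `Survived` column only works if the test folds cover the rows in their original order. The sketch below, using a hypothetical `kfold_indices` helper, shows how contiguous unshuffled folds are laid out (the first folds absorb any remainder).

```python
def kfold_indices(n_samples, n_splits):
    """Yield (train, test) index lists for contiguous, unshuffled folds."""
    fold_sizes = [n_samples // n_splits] * n_splits
    for i in range(n_samples % n_splits):
        fold_sizes[i] += 1  # spread the remainder over the first folds
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size

seen = []
for train, test in kfold_indices(10, 3):
    print(train, test)
    seen.extend(test)
```

Every row appears in exactly one test fold, and the folds joined in sequence reproduce the original row order. With shuffled folds, the predictions would have to be written back by index instead of concatenated.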

Trying logistic regression

from sklearn.model_selection import cross_val_score
from sklearn.linear_model import LogisticRegression
alg = LogisticRegression(random_state=1)
scores = cross_val_score(alg, titanic[predictors], titanic["Survived"], cv=3)
scores.mean()

Trying a random forest

from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score
alg = RandomForestClassifier(random_state=1, n_estimators=10, min_samples_split=2, min_samples_leaf=1)
# random_state only takes effect when shuffle=True, so it is omitted here
kf = KFold(n_splits=3)
scores = cross_val_score(alg, titanic[predictors], titanic["Survived"], cv=kf)

Tuning the parameters

alg=RandomForestClassifier(random_state=1, n_estimators=50, min_samples_split=4, min_samples_leaf=2)

Extracting features

titanic["Familysize"]=titanic[""]+titanic[""]

titanic["NameLength"]=titanic["Name"].apply(lambda x:len(x))

import re
# Extract the title (for example "Mr") that precedes a period in a passenger name
def get_title(name):
    title_search = re.search(r'([A-Za-z]+)\.', name)
    if title_search:
        return title_search.group(1)
    return ""
titles = titanic["Name"].apply(get_title)
print(titles.value_counts())
title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, }
# Replace each title string with its numeric code
for k, v in title_mapping.items():
    titles[titles == k] = v
titanic["Title"] = titles
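The title extraction can be tried on a few names written in the dataset's "Surname, Title. Given names" format. This is a small sketch; mapping unknown titles to 0 is an assumption made here, not something the original notes specify.

```python
import re
from collections import Counter

TITLE_RE = re.compile(r'([A-Za-z]+)\.')

def get_title(name):
    """Return the word immediately before the first period, or "" if none."""
    match = TITLE_RE.search(name)
    return match.group(1) if match else ""

names = [
    "Braund, Mr. Owen Harris",
    "Heikkinen, Miss. Laina",
    "Futrelle, Mrs. Jacques Heath (Lily May Peel)",
    "Allen, Mr. William Henry",
]
titles = [get_title(n) for n in names]

title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3}
# Assumption: titles missing from the mapping fall back to 0
codes = [title_mapping.get(t, 0) for t in titles]
print(Counter(titles), codes)
```

Using a lookup with a default avoids leaving a mix of strings and integers in the column when a rare title is not in the mapping.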

Checking the importance of each feature

import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
import matplotlib.pyplot as plt
predictors=["", "", ""]
selector = SelectKBest(f_classif, k=5)
selector.fit(titanic[predictors], titanic["Survived"])
# Turn p-values into scores: the smaller the p-value, the larger the score
scores = -np.log10(selector.pvalues_)
plt.bar(range(len(predictors)), scores)
plt.xticks(range(len(predictors)), predictors, rotation='vertical')
plt.show()
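The `-log10` transform is what makes the bar chart readable: tiny p-values become tall bars. A minimal sketch with made-up p-values and hypothetical feature names:

```python
import math

# Made-up p-values for three hypothetical features
p_values = {"feature_a": 1e-20, "feature_b": 0.003, "feature_c": 0.4}

# A smaller p-value (stronger evidence of a relationship) gives a larger score
scores = {name: -math.log10(p) for name, p in p_values.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

Features with the highest scores are the strongest candidates to keep in `predictors`.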

Model ensembling

import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold
algorithms = [
    [GradientBoostingClassifier(random_state=1, n_estimators=25, max_depth=3), ["Pclass", "Sex"]],
    [LogisticRegression(random_state=1), ["Pclass", "Sex"]]
]
kf = KFold(n_splits=3)
predictions = []
for train, test in kf.split(titanic):
    train_target = titanic["Survived"].iloc[train]
    full_test_predictions = []
    for alg, predictors in algorithms:
        alg.fit(titanic[predictors].iloc[train, :], train_target)
        test_predictions = alg.predict_proba(titanic[predictors].iloc[test, :].astype(float))[:, 1]
        full_test_predictions.append(test_predictions)
    # Average the two models' probabilities, then threshold at 0.5
    test_predictions = (full_test_predictions[0] + full_test_predictions[1]) / 2
    test_predictions[test_predictions <= .5] = 0
    test_predictions[test_predictions > .5] = 1
    predictions.append(test_predictions)
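The averaging step is a form of soft voting: each model contributes its predicted probability, and the mean is thresholded. A minimal sketch with made-up probabilities; the `soft_vote` helper is hypothetical and generalizes the two-model average to any number of models.

```python
def soft_vote(probabilities, threshold=0.5):
    """Average per-model positive-class probabilities, then threshold the mean."""
    n_models = len(probabilities)
    averaged = [sum(col) / n_models for col in zip(*probabilities)]
    return [1 if p > threshold else 0 for p in averaged]

# Made-up positive-class probabilities from two models for four passengers
model_a = [0.9, 0.4, 0.2, 0.7]
model_b = [0.7, 0.7, 0.1, 0.2]
votes = soft_vote([model_a, model_b])
print(votes)
```

Averaging probabilities lets a confident model outweigh an uncertain one, which a simple majority vote over hard labels cannot do.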

边栏推荐

- JS judge whether it is an integer

- No response to NPM instruction

- Csp2021 T1 corridor bridge distribution

- The fourth edition of probability and mathematical statistics of Zhejiang University proves that the absolute value of the correlation coefficient of random variables X and Y is less than 1, and some

- String - 459. Repeated substrings

- C language -- three ways to realize student information management

- JS get object attribute value

- 字符串——28. 实现 strStr()

- Rasa 3.x learning series -rasa [3.2.3] - new version released on July 18, 2022

- Binlog and iptables prevent nmap scanning, xtrabackup full + incremental backup, and the relationship between redlog and binlog

猜你喜欢

看完这篇文章,才发现我的测试用例写的就是垃圾

The server switches between different CONDA environments and views various user processes

Csp2021 T3 palindrome

Notes on the use of IEEE transaction journal template

After five years of contact with nearly 100 bosses, as a headhunter, I found that the secret of promotion was only four words

![[oauth2] III. interpretation of oauth2 configuration](/img/31/90c79dbc91ee15c353ec46544c8efa.png)

[oauth2] III. interpretation of oauth2 configuration

Similarities and differences between nor flash and NAND flash

对话框管理器第二章:创建框架窗口

![The solution to the error of [installation detects that the primary IP address of the system is the address assigned by DHCP] when installing Oracle10g under win7](/img/25/aa9bcb6483bb9aa12ac3730cd87368.png)

The solution to the error of [installation detects that the primary IP address of the system is the address assigned by DHCP] when installing Oracle10g under win7

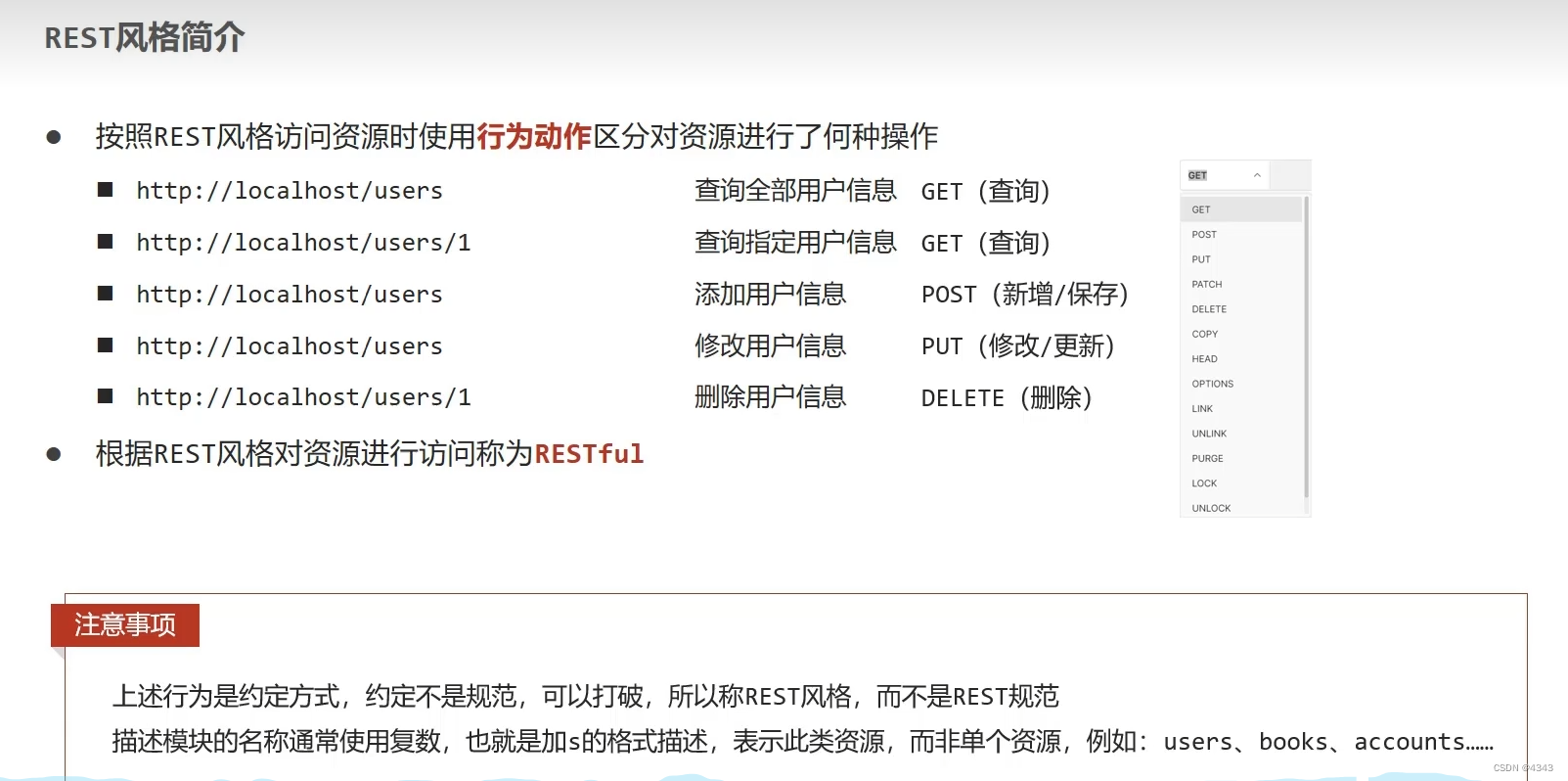

REST风格

随机推荐

JS judge whether it is an integer

Rasa 3.x learning series -rasa fallbackclassifier source code learning notes

Similarities and differences between nor flash and NAND flash

Centos7安装达梦单机数据库

Caffe framework and production data source for deep learning

The spiral matrix of the force buckle rotates together (you can understand it)

Csp2021 T3 palindrome

After five years of contact with nearly 100 bosses, as a headhunter, I found that the secret of promotion was only four words

OWASP zap security testing tool tutorial (Advanced)

Moving the mouse into select options will trigger the mouseleave event processing scheme

5年接触近百位老板,身为猎头的我,发现升职的秘密不过4个字

Native asynchronous network communication executes faster than synchronous instructions

"XXX" cannot be opened because the identity of the developer cannot be confirmed. Or what file has been damaged solution

Video game design report template and

【C语言笔记分享】——动态内存管理malloc、free、calloc、realloc、柔性数组

Where can Huatai Securities open an account? Is it safe to use a mobile phone

Rasa 3.x learning series -rasa [3.2.4] - 2022-07-21 new release

Build ZABBIX monitoring service in LNMP architecture

Flink comprehensive case (IX)

String - Sword finger offer 58 - ii Rotate string left