当前位置:网站首页>Chinese character style transfer --- unsupervised typesetting transmission

Chinese character style transfer --- unsupervised typesetting transmission

2022-07-24 13:16:00 【Ah, here comes the dish】

List of articles

- 1、 Problem statement

- 2 Related work and motivation

- 3 Proposed Approach

- 4 Data Description

- 5 Model Description

- 6 Experiments

- 6.1 Transfer performance under strong pair policy

- 6.2 Transfer performance under soft pair policy

- 6.3 Transfer performance under random pair policy

- 6.4 Transfer performance on calligraphy fonts

- 6.5 Transfer performance for non-Chinese languages

- 6.6 Effect of various loss weights

- 6.7 Effect of pretraining

- 6.8 Effect of batch normalization

- 7 Evaluation

- 8 Future Work

- 9 Acknowledgement

- References

- Appendix

https://github.com/kaonashi-tyc/zi2zi/

https://www.google.com/get/noto/help/cjk/

http://zh.wikipedia.com/zh-hans/%E5%8D%8E%E6%96%87%E8%A1%8C%E6%A5%B7

1、 Problem statement

Printing plays an important role in the publishing industry , Different use cases usually require different printing techniques . However , Designing a new font is very expensive and time-consuming , Especially for Chinese involving a large number of characters . Some semi-automatic typesetting and synthesis methods are proposed . These methods use the commonness of the structure to simplify the design process . A method for the [1] First, manually design character subsets for new fonts , Then automatically generate the remaining characters . In this project , We will try to use generative countermeasure Networks (GAN) To explore this problem .

2 Related work and motivation

The traditional Chinese typesetting synthesis method regards Chinese characters as a collection of radicals and strokes , But they rely on manually defining keys , This still takes a lot of time . Some recent computer vision research [2] Put forward a new method : Treat each Chinese character as an independent and indivisible image , Therefore, pre-processing and post-processing of each Chinese character can be avoided . then , Through the combination of transmission network and discrimination network , It can transfer one layout to another , Such as [1] Described in . Although the performance of the model is quite satisfactory , But the training process needs supervision , This means that in the training data , Every character in the source and target fields needs to be perfectly matched . Sometimes pairing takes time , Sometimes there is no perfect match , For example, the pairing between traditional Chinese and simplified Chinese .

3 Proposed Approach

Input is a subset T And a whole set of typesetting . Our proposed method will T The style of is transferred to S On , Get a complete set with T Style characters . Refer to other work on unsupervised style transfer [3,4,5], We define three loss terms ( chart 1). a LGAN It is encouraged to generate indistinguishable samples from the training samples of the source domain and the target domain . The second item ,LCON ST Used for... After domain transfer f Uniformity , It means f(x) and f(G(c)) Should be close to . The third one LT ID requirement G The identity matrix of the sample close to the target domain .f The function is usually a pre training model composed of several convolution layers , Used to extract the content representation of character images .g Function is a series of deconvolution layers , Add a target domain style to the role . We plan to use zi2zi 1 The pre training encoder in the project acts as f. We also suggest adding U-Net Skip connection technique introduced in , To keep the edges of the generated font sharp , Avoid blurring .

4 Data Description

In the style conversion task , We use Noto Sans CJK As the source ,Noto Serif CJK As the goal of the intermediate report 2. The former is sans serif , The latter is serif . Extract from it 1000 The character : about 900 For training ,100 For testing . Each character is preprocessed as 256×256×3 vector . at present , Our model has been extended to brush fonts ,SinoType XingKai3. See the figure in the appendix for an example of typesetting 10. Besides , We also extend our model to other Asian languages , Including Japanese and Korean . Pairing in the training set does not have to be completely aligned . Those who have N Right training set , Will be the first i The source image and the target image in the alignment are expressed as Si and Ti. From strict to loose , We can define three pairing strategies :

- 1、 Strong pairing : Apply to any i∈ {1,…,N},Si and Ti Represents the same character under different font styles .

- 2、 Soft double : Apply to i∈ {1,…,N},Si and Ti Cannot refer to the same character . However , For any i, There is a value j∈ {1,…,N}, therefore Si and Tj It refers to the same character . let me put it another way , Although pairing by element is wrong , But in the training set , The overlap between the source font set and the target font set is 100%.

- 3、 Random pairing : be used for i∈ {1,…,N},Si and Ti Cannot refer to the same character . Besides , For any i, Values may or may not exist j∈ {1,…,N}, therefore Si and Tj It refers to the same character .

5 Model Description

Our model uses zi2zi The general architecture of , The architecture borrows U-Net[6] Skip connection skills in . Convolution layer and deconvolution layer are used 2×2 Step size and 5×5 nucleus . Each convolution layer was leaked before ReLu, Then batch normalization . Each deconvolution layer is then normalized in batches , And skip the connection with the corresponding convolution .Deconv2 and Deconv3 And after 0.5 As the leakage layer of leakage rate .Deconv8 Use condition instance normalization [7] Instead of batch standardization . For the discriminator , We use DTN[4] Ternary classifier introduced in , Instead of using binary classifiers . The detailed architecture is shown in the table 1 Shown .

Under the soft pair strategy ,L1 and L2 Loss is no longer available . therefore , One of our major changes is to add losses LT ID、LGAN and LCON ST To support unsupervised learning , And explore further unsupervised mechanisms : Random pairing .

6 Experiments

6.1 Transfer performance under strong pair policy

As a baseline , We have achieved Chinese format conversion (CTT)([1]) And the original word . We have implemented the zi2zi Unsupervised variant of . We construct a training set under the strong pairing strategy (900 For training ,100 For testing ;Noto Sans CJK As the source font ,Noto Serif CJK As the target font ;16 As a small batch size ). These three methods have good performance , And showed no significant difference .

The transmission results of connection sequence classification with strong pairs are shown in Figure 2 Shown . Please note that , Serif features have been successfully transferred , As the circle shows .

【 chart 2: The result of using strong pairing strategy :(a) Source font (b) Target font (c) Transfer fonts 】

6.2 Transfer performance under soft pair policy

When switching to soft pair strategy to generate training set , Disable the... Between the transfer font and the target font L2 In the case of loss , None of the existing methods work well . The transmitted font is unstable , Even gather into messy black blocks . However , Our method can still work well under the soft pair strategy . It is better than zi2zi and CTT Convergence is faster . after 5 Times , We observed a blurry serif . after 12 Times , The serif becomes clear and sharp . chart 3 Each shows th Convolution layer 1 And deconvolution 8 Characteristic graph .

6.3 Transfer performance under random pair policy

We discussed the overlap ratio in three (0.0、0.5 and 1.0) Model performance under . The model can learn serif features in all three settings . However , The model performs poorly under the low overlap rate of two aspects . First , It is inferred that the background noise of the image is large . Besides , Infer that the character structure in the image may change . for example , Some strokes may be lost . The difference is shown in the figure 4 Shown .

【 chart 3: Model ( Left ) Convolution layer 1( Right ) Deconvolution layer 8 Characteristic graph 】

【 chart 4: Impact of overlap rate .100 Comparison of the results generated after three times . The first 1 Column : Source font . The first 2 Column : The basic facts of the target font . The first 3 Column : Overlap rate =0. The first 4 Column : Overlap rate =0.5. The first 5 Column : Overlap rate =1.】

6.4 Transfer performance on calligraphy fonts

Calligraphy font is a very different font series from sans serif font and serif font . From the picture 10 It can be seen that , Due to the significant difference of font skeleton , The style conversion between non calligraphic fonts and calligraphic fonts seems more difficult .

We use soft pair strategy or zero overlap random pair strategy ( chart 12 Sum graph 13), take SinoType XingKai The style of is transferred to Noto Sans CJK We did experiments on . It is difficult to determine whether soft pair strategy or random pair strategy leads to better performance . We also observed that , The convergence rate is much slower than previous experiments .

In another experiment , An ancient Chinese character font ,FZ Xiaozhuan , Try as the target font . We met GANs A FAQ for , be called “ Model missing problem ”[8], Where the results lose diversity , The generated characters are similar and unrecognizable ( See the picture 5). We solve this problem by adding random shifts and scales to the source and target characters in each training iteration . Random shift and scaling are both used for data enhancement and regularization . Use this technique , The results look better , Pictured 14 Shown .

6.5 Transfer performance for non-Chinese languages

Although we only use simplified Chinese characters during training , But the model can still be extended to traditional Chinese characters and even non Chinese characters . In the figure 12、13 and 14 The rightmost column in is traditional Chinese . The leftmost column is Latin characters . Other columns are mixed with Japanese and Korean characters .

6.6 Effect of various loss weights

We achieved distribution LT ID、LCON ST and LT V The mechanism of weight , To assess the impact of these losses . In the midway report , We mentioned LT ID Loss is critical to performance . An extreme case illustrates this better : Manual will 0 Assigned to LT ID,Noto Sans CJK and Noto Serif CJK The results between are shown in the figure 6 Shown .

【 chart 6:LT ID=0( Left ) Ground true source font ( Right ) Transferring fonts Noto Sans CJK and Noto Serif CJK Transmission results on 】

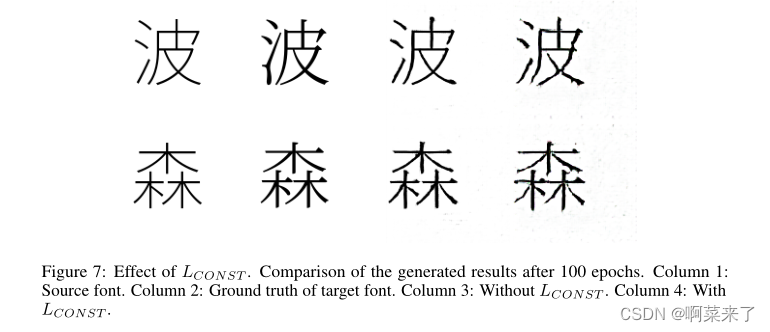

From the picture 6 Can be observed , after 100 In a few epochs , Liner transmission performance is poor , There are still noisy pixels in the background . By setting other weights to LT ID, Can be observed 5 With LT ID Increase the weight of , The serif transfer effect on the same two fonts is significantly improved ( But it also needs to be balanced with other losses ). therefore , In the final default settings ,LT ID The weight of is much larger than other losses . about LCON ST, We compared whether there is LCON ST Lost training results . Without loss , The inferred image has clearer strokes and background . However , Serif features are missing or not obvious on some characters , Although it is very obvious on some characters . And LCON ST Compared with the result of loss , The inferred image is closer to the source terrain . This is shown in the figure 7 Shown .

【 chart 7:LCON ST Influence .100 Comparison of generated results after three stages . The first 1 Column : Source font . The first 2 Column : The basic facts of the target font . The first 3 Column : nothing LCON ST. The first 4 Column : Yes LCON ST】

6.7 Effect of pretraining

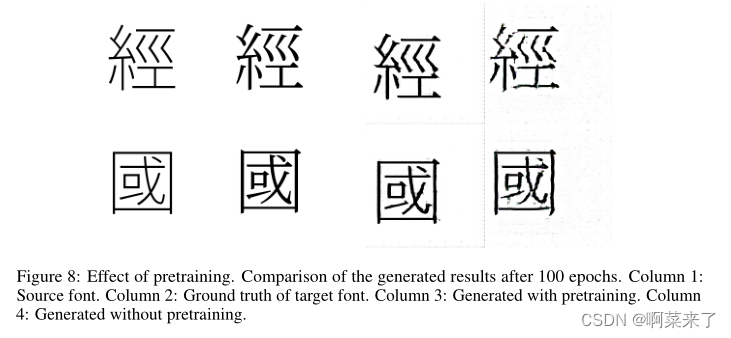

In the midway report , We mentioned that pre training does not help improve performance . However , We later found an error in the experiment . We corrected this error and did the experiment again .

When using zi2zi Pre training encoder training model , The inferred image is much better . In pre training , The strokes are clear , Without pre training , Strokes are sometimes torn to pieces , Straight strokes sometimes bend .“ Serif ” The function is also cleaner during pre training . These are shown in the figure 8 Shown .

【 chart 8: The effect of pre training .100 Comparison of the results generated after three times . The first 1 Column : Source font . The first 2 Column : The basic facts of the target font . The first 3 Column : Generate through pre training . The first 4 Column : Generate without pre training .】

Through pre training , The model behavior during training is also more stable . Due to the randomness of random gradient descent , Model parameters may sometimes reach poor settings ,6 Inferred images can be very noisy . This phenomenon is more serious without pre training than in pre training .

6.8 Effect of batch normalization

The batch normalization unit is divided into two stages , Training stage and reasoning stage . Different stages have different effects on the generated results . We found that , In the training phase , The generated characters are more noisy , With sharp serif . In the reasoning stage , The generated font is less noisy , But it loses the sharp serif and some similarities with the target font , Pictured 9 Shown . Although conditional instance normalization has been used to alleviate this problem , But we will continue to explore ways to balance noise and similarity .

【 chart 9: Batch normalized phase flag to transmit fonts ( Left ) Ground truth ( in ) Training phase ( Right ) The influence of the reasoning stage 】

7 Evaluation

at present , We use the L2 Loss , And experience rating to evaluate the performance of the model . However ,L2 The loss may not be a good estimate of the quality of the generated typesetting . We will try to design the steering test , As another qualitative and quantitative measurement . We will shuffle the generated results and the ground truth results , Ask volunteers to separate them . If the task of separation is difficult for humans , We can prove that the performance of our model is good enough .

8 Future Work

Our current model uses convolution / Deconvolution neural unit for style conversion . They are based on local assumptions , There may be no source / Overall view of the target layout . We are curious about the mechanism [9] Can we further improve the performance of the model by using some remote image context .

As mentioned earlier , The architecture we use may encounter “ Lack of mode ”. Existing losses , Include L2 loss 、LCON ST and LT ID, Cannot effectively detect SGD When to fall into local optimization . suffer [10] Inspired by the , We can Frechet The initial distance is adjusted to the transfer field of typesetting style , As a measure of model robustness and result diversity .

9 Acknowledgement

We would like to thank in particular [1] The author of often , He had a useful discussion with us on this project .

References

https://github.com/kaonashi-tyc/zi2zi/

https://www.google.com/get/noto/help/cjk/

http://zh.wikipedia.com/zh-hans/%E5%8D%8E%E6%96%87%E8%A1%8C%E6%A5%B7

[1] Jie Chang and Y ujun Gu. Chinese typography transfer. 07 2017.

[2] Phillip Isola, Jun-Y an Zhu, Tinghui Zhou, and Alexei A. Efros. Image-to-image translation with

conditional adversarial networks. 11 2016.

[3] Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. Image style transfer using convolu-

tional neural networks. In The IEEE Conference on Computer Vision and Pattern Recognition

(CVPR), June 2016.

[4] Yaniv Taigman, Adam Polyak, and Lior Wolf. Unsupervised cross-domain image generation.

11 2016.

[5] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. Unpaired image-to-image

translation using cycle-consistent adversarial networks. 03 2017.

[6] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for

biomedical image segmentation. CoRR, abs/1505.04597, 2015.

[7] Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur. A learned representation for

artistic style. CoRR, abs/1610.07629, 2016.

[8] Tong Che, Yanran Li, Athul Paul Jacob, Y oshua Bengio, and Wenjie Li. Mode regularized

generative adversarial networks. CoRR, abs/1612.02136, 2016.

[9] Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron C. Courville, Ruslan Salakhutdinov,

Richard S. Zemel, and Y oshua Bengio. Show, attend and tell: Neural image caption generation

with visual attention. CoRR, abs/1502.03044, 2015.

[10] Mario Lucic, Karol Kurach, Marcin Michalski, Sylvain Gelly, and Olivier Bousquet. Are gans

created equal? a large-scale study. arXiv preprint arXiv:1711.10337, 2017.

Appendix

边栏推荐

- selenium环境配置和八大元素定位

- July training (day 24) - segment tree

- 2022.07.21

- 基于matlab的语音处理

- On node embedding

- Win10 log in with Microsoft account and open all programs by default with administrator privileges: 2020-12-14

- 【论文阅读】TEMPORAL ENSEMBLING FOR SEMI-SUPERVISED LEARNING

- Representation and basic application of regular expressions

- About the concept of thread (1)

- Generator and async solve asynchronous programming

猜你喜欢

25. Middle order traversal of binary tree

I 用c I 实现 大顶堆

Modern data architecture selection: Data fabric, data mesh

setAttribute、getAttribute、removeAttribute

SSM在线考试系统含文档

Vscode configuration user code snippet (including deletion method)

English grammar_ Indefinite pronouns - Overview

如何画 贝赛尔曲线 以及 样条曲线?

ESP32ADC

Research on data governance quality assurance

随机推荐

Queue (stack)

Vscode configuration user code snippet (including deletion method)

Generator and async solve asynchronous programming

Modern data architecture selection: Data fabric, data mesh

有好用的免费的redis客户端工具推荐么?

36. Delete the penultimate node of the linked list

Compatibility problems of call, apply, bind and bind

Use of PageHelper

FinClip 「小程序导出 App 」功能又双叒叕更新了

EAS environment structure directory

Activity start (launchactivity/startactivity)_ (1)_ WMS of flow chart

[paper reading] mean teachers are better role models

Custom scroll bar

Teach you how to use power Bi to realize four kinds of visual charts

Knowledge sharing | sharing some methods to improve the level of enterprise document management

29. Right view of binary tree

基于matlab的声音识别

Windivert:可抓包,修改包

Make a fake! Science has exposed the academic misconduct of nature's heavy papers, which may mislead the world for 16 years

Modification of EAS login interface