
Kubernetes theoretical basis

2022-06-28 07:37:00 【Courageous steak】

1 Introduction

For a highly available cluster, the number of replicas should be odd and at least 3.
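
One common reason behind the odd-number recommendation is majority (quorum) voting, as used by consensus-based stores such as etcd. The arithmetic below is a minimal sketch; the function names are hypothetical and only illustrate why a fourth member tolerates no more failures than three do.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"members={n} quorum={quorum(n)} tolerated_failures={fault_tolerance(n)}")
```

Going from 3 to 4 members raises the quorum from 2 to 3 but still tolerates only one failure, so the extra even member adds cost without adding resilience.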

2 Components

K8s architecture

2.1 Core components

2.1.1 API Server

The unified entry point through which all services are accessed.

2.1.2 ControllerManager

Maintains the expected number of replicas.
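
Maintaining an expected replica count is usually described as a reconcile loop: compare the desired state with the observed state and correct the difference. The following is a simplified sketch, not the actual controller code; `reconcile` and the pod naming scheme are assumptions made for illustration.

```python
from typing import List


def reconcile(desired: int, running: List[str], prefix: str = "pod") -> List[str]:
    """Return the pod list after one reconcile pass toward `desired` replicas."""
    pods = list(running)
    # Too few replicas: create new ones, skipping names already in use.
    next_id = 0
    while len(pods) < desired:
        name = f"{prefix}-{next_id}"
        if name not in pods:
            pods.append(name)
        next_id += 1
    # Too many replicas: drop the surplus from the end of the list.
    return pods[:desired]


if __name__ == "__main__":
    print(reconcile(3, ["pod-0"]))            # scale up
    print(reconcile(1, ["a", "b", "c"]))      # scale down
```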

2.1.3 Scheduler

Accepts tasks and selects a suitable node to assign each task to.
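
Node selection can be pictured as two steps: filter out nodes that cannot fit the task, then score the remaining ones. The sketch below assumes a made-up `Node` type and a single scoring rule (most free CPU after placement); the real scheduler uses many more filters and scoring plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float    # free CPU cores (hypothetical unit)
    free_mem_mb: int   # free memory in MiB (hypothetical unit)


def schedule(cpu: float, mem_mb: int, nodes: List[Node]) -> Optional[str]:
    """Pick a node name for a task needing `cpu` cores and `mem_mb` MiB."""
    # Filter: keep only nodes with enough free resources.
    feasible = [n for n in nodes if n.free_cpu >= cpu and n.free_mem_mb >= mem_mb]
    if not feasible:
        return None  # nothing fits; the task stays pending
    # Score: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu - cpu)
    return best.name
```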

2.1.4 etcd

A key-value database that stores all important information about the K8s cluster (persistently).

- ETCD

etcd is officially positioned as a reliable distributed key-value store. It holds critical data for the whole distributed cluster and helps the cluster operate normally.

Architecture diagram

WAL: the write-ahead log

Store: persists data to local disk
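
Assuming the log entry above refers to etcd's write-ahead log (WAL), the idea is that every change is recorded in the log before it is applied to the store, so the state can be rebuilt by replaying the log. The toy class below only illustrates that ordering; `TinyKV` is hypothetical, keeps everything in memory, and is not how etcd is implemented.

```python
import json
from typing import Dict, List


class TinyKV:
    """Toy key-value store: log each write first, then apply it."""

    def __init__(self) -> None:
        self.wal: List[str] = []          # stand-in for the on-disk log
        self.store: Dict[str, str] = {}   # stand-in for the persisted state

    def put(self, key: str, value: str) -> None:
        # 1. Append the change to the log. 2. Apply it to the store.
        self.wal.append(json.dumps({"op": "put", "key": key, "value": value}))
        self.store[key] = value

    @classmethod
    def recover(cls, wal: List[str]) -> "TinyKV":
        """Rebuild state by replaying a log, as after a restart."""
        kv = cls()
        for line in wal:
            entry = json.loads(line)
            if entry["op"] == "put":
                kv.put(entry["key"], entry["value"])
        return kv
```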

2.1.5 Kubelet

Interacts directly with containers to manage the container life cycle.

2.1.6 Kube-proxy

Writes rules to iptables or IPVS to implement Service mapping and access.
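
Conceptually, those rules map a Service address to one of its backend Pod addresses. The sketch below models that mapping in user space with round-robin selection; `ServiceProxy` and all addresses are hypothetical, and the real work is done by kernel rules that kube-proxy generates, not by code like this.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceProxy:
    """Toy model of Service-to-Pod mapping with round-robin selection."""

    def __init__(self) -> None:
        self._backends: Dict[str, Iterator[str]] = {}

    def set_endpoints(self, service: str, endpoints: List[str]) -> None:
        # Replace the backend list for a Service address.
        self._backends[service] = itertools.cycle(endpoints)

    def route(self, service: str) -> str:
        # Pick the next backend for a connection to the Service address.
        return next(self._backends[service])
```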

2.2 Other plug-ins

2.2.1 CoreDNS

Creates domain-name-to-IP resolution records for Services (SVC).
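
Service names conventionally resolve under `<service>.<namespace>.svc.<cluster domain>`, where `cluster.local` is the usual default cluster domain. The helper below is a small sketch of that naming scheme; the function name and the example record table are assumptions.

```python
from typing import Dict, Optional


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(name: str, records: Dict[str, str]) -> Optional[str]:
    """Look up a Service name in a (hypothetical) name-to-ClusterIP table."""
    return records.get(name)


if __name__ == "__main__":
    records = {service_fqdn("web"): "10.96.0.10"}  # example IP, not a real cluster value
    print(resolve("web.default.svc.cluster.local", records))
```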

2.2.2 dashboard

Provides a B/S (browser/server) access interface for the K8s cluster.

2.2.3 Ingress Controller

Kubernetes itself only provides layer 4 proxying; Ingress can provide layer 7 proxying.

2.2.4 Federation

Provides unified management across multiple K8s clusters from one cluster center.

2.2.5 Prometheus

Provides monitoring for the K8s cluster.

2.2.6 ELK

Provides a unified log collection and analysis platform for the K8s cluster.

3 Pod

3.1 Pod concept

- Autonomous Pod

- Controller-managed Pod

Note: the classification above is not an official one.

3.1.1 Pod service types

HPA

Horizontal Pod Autoscaling applies only to Deployment and ReplicaSet. In the v1 version it scales out and in based only on Pod CPU utilization; in the v1alpha version it also supports scaling based on memory and user-defined metrics.
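
The scaling rule documented for the Horizontal Pod Autoscaler is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that rule and clamps the result; the function and parameter names are hypothetical (the bounds mirror the `minReplicas` and `maxReplicas` fields), and details such as the tolerance band and stabilization window are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA ratio rule, clamped to the bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 50% target.
    print(desired_replicas(4, 90, 50))
```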

StatefulSet

StatefulSet addresses stateful services (Deployments and ReplicaSets, by contrast, are designed for stateless services). Its use cases include:

- Stable persistent storage: a Pod can still access the same persistent data after being rescheduled. This is implemented with PVCs.

- Stable network identity: the PodName and HostName stay the same after a Pod is rescheduled. This is implemented with a Headless Service (a Service without a Cluster IP).

- Ordered deployment and ordered scale-out: Pods are ordered, and deployment or scale-out follows the defined order (from 0 to N-1; before the next Pod runs, all preceding Pods must be Running and Ready). This is implemented with init containers.

- Ordered scale-in and ordered deletion (from N-1 to 0).
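
The stable identity and ordering described above can be sketched as follows. Pods of a StatefulSet are named `<name>-<ordinal>`, and each Pod gets a DNS name under its Headless Service; the helper names and the `cluster.local` default are assumptions for illustration.

```python
from typing import List


def pod_names(name: str, replicas: int) -> List[str]:
    """Pod names in creation order: ordinal 0 up to replicas-1."""
    return [f"{name}-{i}" for i in range(replicas)]


def deletion_order(name: str, replicas: int) -> List[str]:
    """Pod names in scale-in order: ordinal replicas-1 down to 0."""
    return list(reversed(pod_names(name, replicas)))


def pod_dns(pod: str, headless_service: str, namespace: str = "default",
            cluster_domain: str = "cluster.local") -> str:
    """Stable DNS name of a Pod behind a Headless Service."""
    return f"{pod}.{headless_service}.{namespace}.svc.{cluster_domain}"
```

Because the name depends only on the StatefulSet name and the ordinal, a rescheduled Pod comes back with the same identity.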

DaemonSet

A DaemonSet ensures that all (or some) Nodes run one copy of a Pod. When a Node joins the cluster, a Pod is added for it; when a Node is removed from the cluster, its Pod is reclaimed. Deleting a DaemonSet deletes all the Pods it created.

Some typical uses of a DaemonSet:

- Run a cluster storage daemon on every Node, for example glusterd or ceph

- Run a log collection daemon on every Node, for example fluentd or logstash

- Run a monitoring daemon on every Node, for example Prometheus Node Exporter
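
The one-Pod-per-Node behavior can be sketched as a comparison between the current set of Nodes and the Nodes that already have a daemon Pod. `daemonset_plan` and the Pod names are hypothetical; node selectors and tolerations, which restrict a DaemonSet to some Nodes, are ignored here.

```python
from typing import Dict, List, Set, Tuple


def daemonset_plan(nodes: Set[str], pods_by_node: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (nodes needing a new Pod, Pods to delete) for one reconcile pass."""
    # Nodes that joined the cluster and have no daemon Pod yet.
    to_create = sorted(nodes - set(pods_by_node))
    # Pods whose Node is no longer part of the cluster.
    to_delete = sorted(pod for node, pod in pods_by_node.items() if node not in nodes)
    return to_create, to_delete
```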

Job

A Job handles batch tasks, that is, tasks that run once. It guarantees that one or more Pods of the batch task finish successfully.
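
Run-to-completion semantics can be sketched as retrying a task until the required number of successes is reached or too many attempts have failed. The function below is a simplified model; `run_job` is hypothetical, its parameters loosely mirror the Job fields `completions` and `backoffLimit`, and real Jobs also handle parallelism and retry delays.

```python
from typing import Callable


def run_job(task: Callable[[int], bool], completions: int = 1, backoff_limit: int = 6) -> bool:
    """Run `task(attempt)` until `completions` successes; fail after too many failures."""
    succeeded = 0
    failed = 0
    attempt = 0
    while succeeded < completions:
        if task(attempt):
            succeeded += 1
        else:
            failed += 1
            if failed > backoff_limit:
                return False  # the Job is marked as failed
        attempt += 1
    return True
```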

CronJob

Manages time-based Jobs, namely:

- Run only once at a given point in time

- Run periodically at given points in time

3.1.2 Pod service discovery

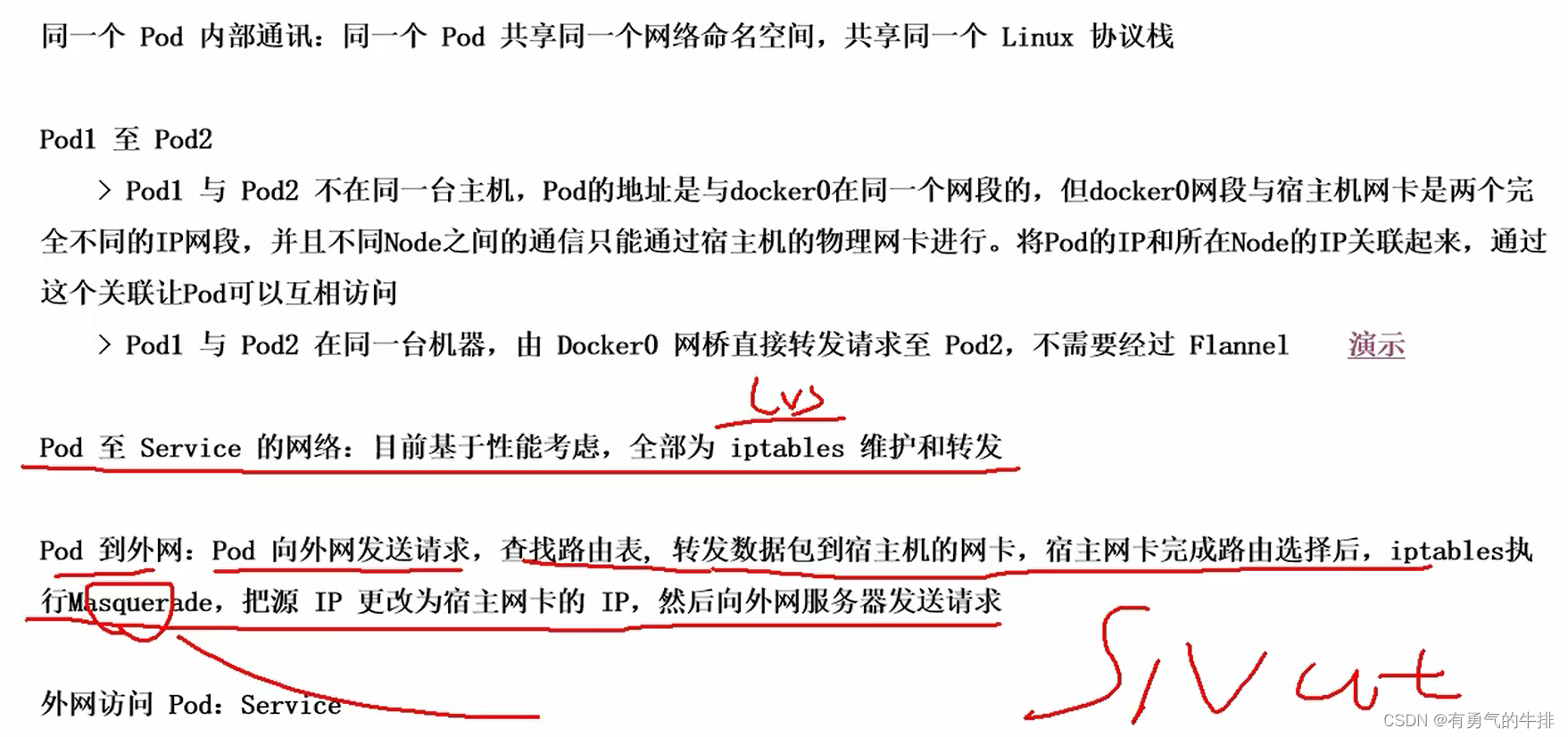

4 Network communication mode

The Kubernetes network model assumes that all Pods sit in one flat network space in which they can reach each other directly. On GCE (Google Compute Engine) this network model is available out of the box, so Kubernetes assumes the network already exists. When building a Kubernetes cluster in a private cloud, you cannot assume that. You have to implement this network yourself: first make the Docker containers on different nodes reachable from each other, and then run Kubernetes.

- Between multiple containers in the same Pod: lo (the loopback interface)

- Between Pods: overlay network

- Between a Pod and a Service: the iptables rules on each node

Flannel is a network planning service designed by the CoreOS team for Kubernetes. Put simply, it gives the Docker containers created on different node hosts in the cluster virtual IP addresses that are unique across the whole cluster. It also builds an overlay network between these IP addresses, through which packets are delivered unchanged to the target container.

What etcd provides for Flannel:

- Stores and manages the IP address ranges that Flannel can allocate

- Flannel watches etcd for the actual address of each Pod and builds and maintains a Pod-to-node routing table in memory
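
The two roles above can be sketched with Python's standard `ipaddress` module: carve a cluster-wide range into one subnet per node, then look up which node owns a given Pod IP. The range `10.244.0.0/16`, the `/24` per-node size, and the function names are assumptions for illustration; the real allocation data lives in etcd.

```python
import ipaddress
from typing import Dict, List, Optional


def allocate_subnets(cluster_cidr: str, nodes: List[str], node_prefix: int = 24) -> Dict[str, str]:
    """Give each node its own subnet carved out of the cluster-wide range."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    # zip stops at the shorter input, so nodes beyond the available subnets get none.
    return {node: str(subnet) for node, subnet in zip(nodes, subnets)}


def node_for_pod_ip(pod_ip: str, table: Dict[str, str]) -> Optional[str]:
    """Find the node whose subnet contains the Pod IP (the routing lookup)."""
    ip = ipaddress.ip_address(pod_ip)
    for node, subnet in table.items():
        if ip in ipaddress.ip_network(subnet):
            return node
    return None
```

Since every node owns a disjoint subnet, Pod IPs are unique across the cluster, and a packet's destination address is enough to decide which node it must be forwarded to.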

Learning resource:

https://www.bilibili.com/video/BV1w4411y7Go

边栏推荐

- NDK 交叉编译

- Mysql8.0 and mysql5.0 accessing JDBC connections

- The practice of traffic and data isolation in vivo Reviews

- Leetcode learning records

- OPC 协议认识

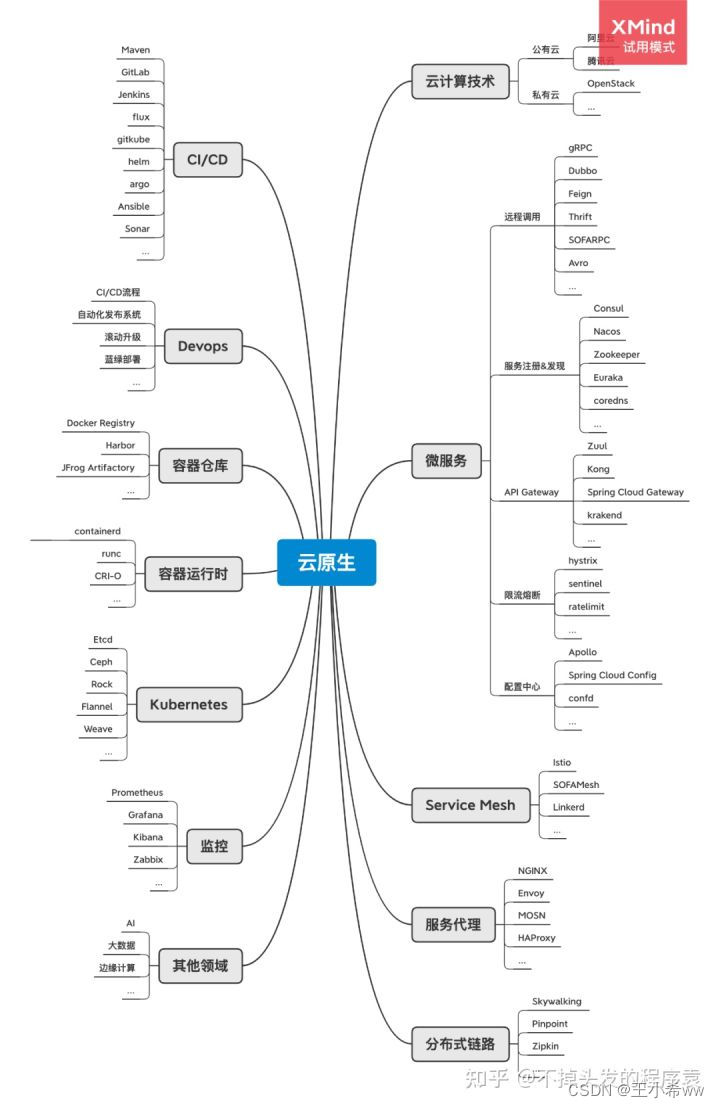

- 云原生(待更新)

- goland IDE和delve调试位于kubernetes集群中的go程序

- 股票炒股注册开户靠谱吗?安全吗?

- hack the box:RouterSpace题解

- Reading notes - MySQL technology l act: InnoDB storage engine (version 2)

猜你喜欢

随机推荐

Makefile

NDK cross compilation

R 语言 Kolmogorov-Smirnov 检验 2 个样本是否遵循相同的分布。

剑指Offer||:链表(简单)

Mysql57 zip file installation

No suspense about the No. 1 Internet company overtime table

HJ明明的随机数

Section 9: dual core startup of zynq

Force buckle 515 Find the maximum value in each tree row

安全培训是员工最大的福利!2022新员工入职安全培训全员篇

Application and Optimization Practice of redis in vivo push platform

ice - 资源

R 语言绘制 动画气泡图

打新债注册开户靠谱吗?安全吗?

股票炒股注册开户靠谱吗?安全吗?

看似简单的光耦电路,实际使用中应该注意些什么?

hack the box:RouterSpace题解

R language hitters data analysis

ES6 use of return in arrow function

Principle and practice of bytecode reference detection

![[ thanos源码分析系列 ]thanos query组件源码简析](/img/e4/2a87ef0d5cee0cc1c1e1b91b6fd4af.png)