[PySpark basics] Rows to columns and columns to rows (when there are many columns)

2022-07-24 21:36:00 【Evening scenery at the top of the mountain】

1. Problem

A PySpark DataFrame has a user_id column and k item_id columns. The goal is the classic SQL task of converting rows to columns and columns to rows, that is, ending up with one row per user_id and item_id pair. The current fields of df can be viewed with df.printSchema():

    root
     |-- user_id: double (nullable = true)
     |-- beat_id[0]: double (nullable = true)
     |-- beat_id[1]: double (nullable = true)
     |-- beat_id[2]: double (nullable = true)
     |-- beat_id[3]: double (nullable = true)
     .......

2. Method 1

Start with an example. The part that may be confusing is the stack call inside selectExpr. It can be understood as "stacking" the listed original columns into rows; the as clause that follows names the resulting columns (here project and income):

    # test_example
    from pyspark.sql import SparkSession
    import pyspark.sql.functions as F

    spark = SparkSession.builder.appName('JupyterPySpark').enableHiveSupport().getOrCreate()

    # Raw data: one row per (month, project) with its income
    test = spark.createDataFrame(
        [('2018-01', 'project 1', 100), ('2018-01', 'project 2', 200), ('2018-01', 'project 3', 300),
         ('2018-02', 'project 1', 1000), ('2018-02', 'project 2', 2000), ('2018-03', 'project 4', 999),
         ('2018-05', 'project 1', 6000), ('2018-05', 'project 2', 4000), ('2018-05', 'project 4', 1999)],
        ['month', 'project', 'income'])
    # test.show()

    # 1. Rows to columns (pivot)
    test_pivot = test.groupBy('month') \
        .pivot('project', ['project 1', 'project 2', 'project 3', 'project 4']) \
        .agg(F.sum('income')) \
        .fillna(0)
    test_pivot.show()

    # 2. Columns to rows (unpivot)
    # Column names that contain a space must be wrapped in backticks inside the expression
    unpivot_test = test_pivot.selectExpr(
        "`month`",
        "stack(4, 'project 1', `project 1`, 'project 2', `project 2`, "
        "'project 3', `project 3`, 'project 4', `project 4`) as (`project`, `income`)") \
        .filter("`income` > 0") \
        .orderBy("month", "project")
    unpivot_test.show()

    +-------+---------+---------+---------+---------+
    |  month|project 1|project 2|project 3|project 4|
    +-------+---------+---------+---------+---------+
    |2018-03|        0|        0|        0|      999|
    |2018-02|     1000|     2000|        0|        0|
    |2018-05|     6000|     4000|        0|     1999|
    |2018-01|      100|      200|      300|        0|
    +-------+---------+---------+---------+---------+

    +-------+---------+------+
    |  month|  project|income|
    +-------+---------+------+
    |2018-01|project 1|   100|
    |2018-01|project 2|   200|
    |2018-01|project 3|   300|
    |2018-02|project 1|  1000|
    |2018-02|project 2|  2000|
    |2018-03|project 4|   999|
    |2018-05|project 1|  6000|
    |2018-05|project 2|  4000|
    |2018-05|project 4|  1999|
    +-------+---------+------+
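To make the semantics of stack more concrete, the following is a plain-Python model of what stack(n, expr1, ..., exprk) does to a single input row. It is only an illustration and not Spark code; the helper name stack_row is hypothetical. It assumes the documented behaviour that the k values are laid out into n rows, padded with nulls when k is not a multiple of n.

```python
def stack_row(n, *exprs):
    """Plain-Python model of Spark SQL's stack(n, expr1, ..., exprk) for one row.

    The k values are split into n output rows of ceil(k / n) columns each;
    missing trailing values are padded with None (NULL in Spark).
    """
    width = -(-len(exprs) // n)  # ceiling division
    padded = list(exprs) + [None] * (n * width - len(exprs))
    return [tuple(padded[i * width:(i + 1) * width]) for i in range(n)]


# One pivoted row: month 2018-05 with the four project columns
rows = stack_row(4, 'project 1', 6000, 'project 2', 4000,
                 'project 3', 0, 'project 4', 1999)

# Emulate the filter on income > 0 used in the example above
long_rows = [('2018-05', project, income) for project, income in rows if income > 0]

for row in long_rows:
    print(row)
```

Each (label, value) pair becomes one output row, which is why the labels are passed as string literals and the values as column references.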

3. Solution

- The idea is the same, but if the column names are in Chinese (or are otherwise not plain identifiers), they must be wrapped in backticks (`) inside stack. The backticks get in the way of later processing, so it is best to strip them with a regular expression.

- When many columns need to be converted, the following implementation, defined as an unpivot function, becomes even more useful.

    from pyspark.sql.functions import col, regexp_replace

    def unpivot(df, keys, feature, value):
        '''Unpivot a wide DataFrame into long format.
        df: DataFrame to be converted
        keys: list of key columns to keep
        feature, value: names of the two output columns (customizable)
        '''
        # Cast every column to double (string also works) so that the stacked columns
        # share one type. Skip this if the data types are already consistent.
        df = df.select(*[col(x).astype("double") for x in df.columns])
        cols = [x for x in df.columns if x not in keys]
        # Join all ('`x`', `x`) pairs with ','. The label is quoted with backticks too,
        # which is why they are stripped again with regexp_replace below.
        stack_str = ','.join(map(lambda x: "'`%s`', `%s`" % (x, x), cols))
        df = (df.selectExpr(*keys, "stack(%s, %s) as (%s, %s)" % (len(cols), stack_str, feature, value))
              .withColumn(feature, regexp_replace(feature, '`', ''))
              )
        return df

    keys = ['user_id']
    feature, value = 'features', 'beat_id'
    # df_result2 is presumably the wide DataFrame from section 1 (user_id plus the beat_id[...] columns)
    df_result3 = unpivot(df_result2, keys, feature, value)
    df_result3.show()

    +-----------+-----------+---------+
    |    user_id|   features|  beat_id|
    +-----------+-----------+---------+
    |1.9079423E7| beat_id[0]|1018216.0|
    |1.9079423E7| beat_id[1]| 886351.0|
    |1.9079423E7| beat_id[2]|1051107.0|
    |1.9079423E7| beat_id[3]|1018226.0|
    +-----------+-----------+---------+
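The expression string handed to selectExpr can be inspected without a Spark session. The sketch below is a variation on the function above, not the author's exact method: it backtick-quotes only the column references and leaves the labels as plain string literals, so no regular-expression cleanup should be needed afterwards. The helper name build_stack_expr is hypothetical, and the sketch assumes column names contain neither single quotes nor backticks.

```python
def build_stack_expr(cols, feature, value):
    """Build the stack(...) expression string for the given columns.

    Labels are plain string literals; only the column references are
    wrapped in backticks. Assumes names contain no quotes or backticks.
    """
    pairs = ", ".join("'%s', `%s`" % (c, c) for c in cols)
    return "stack(%d, %s) as (%s, %s)" % (len(cols), pairs, feature, value)


expr = build_stack_expr(['beat_id[0]', 'beat_id[1]'], 'features', 'beat_id')
print(expr)
# stack(2, 'beat_id[0]', `beat_id[0]`, 'beat_id[1]', `beat_id[1]`) as (features, beat_id)
```

Printing the generated string before passing it to selectExpr is also a convenient way to debug quoting problems when there are many columns.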

Reference

[1] https://zhuanlan.zhihu.com/p/337437504

边栏推荐

- Leetcode skimming -- bit by bit record 017

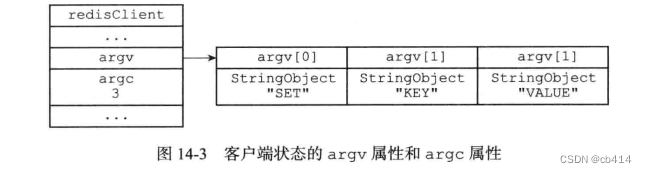

- Redis (12) -- redis server

- C # image template matching and marking

- [development tutorial 6] crazy shell arm function mobile phone - interruption experiment tutorial

- What is a self built database on ECs?

- Sword finger offer 15. number of 1 in binary

- Huawei cloud data governance production line dataarts, let "data 'wisdom' speak"

- “IRuntime”: 未声明的标识符

- Sqlserver BCP parameter interpretation, character format selection and fault handling summary

- Little Red Book Keyword Search commodity list API interface (commodity detail page API interface)

猜你喜欢

Intranet penetration learning (I) introduction to Intranet

Documentary of the second senior brother

rogabet note 1.1

Detailed explanation of ThreadLocal

Redis (12) -- redis server

Case analysis of building cross department communication system on low code platform

Codeforces Round #808 (Div. 2)(A~D)

About the acid of MySQL, there are thirty rounds of skirmishes with mvcc and interviewers

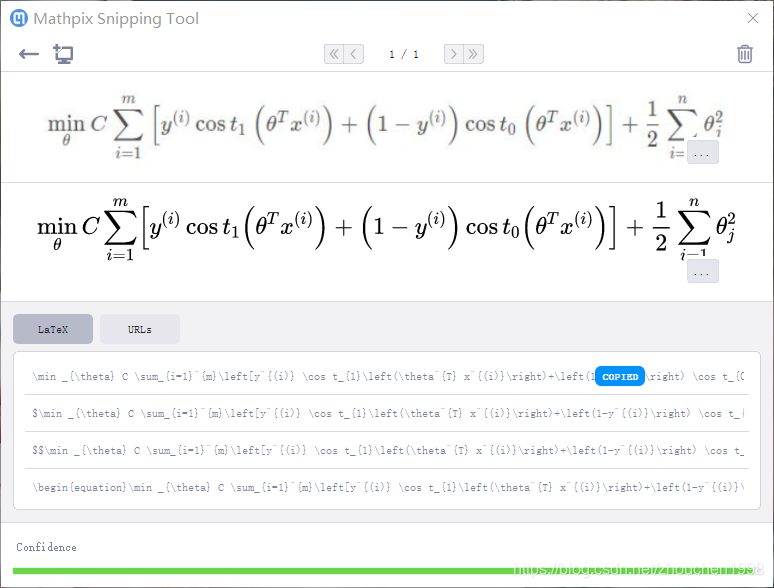

Mathpix formula extractor

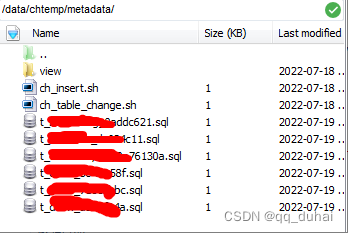

Ch single database data migration to read / write separation mode

随机推荐

ERROR 2003 (HY000): Can‘t connect to MySQL server on ‘localhost:3306‘ (10061)

It's the same type of question as just K above

About the acid of MySQL, there are thirty rounds of skirmishes with mvcc and interviewers

Baidu classic interview question - determine prime (how to optimize?)

[SOC] the first project of SOC Hello World

Eight transformation qualities that it leaders should possess

Thank Huawei for sharing the developer plan

Maxcompute instance related operations

Leetcode skimming -- bit by bit record 017

[record of question brushing] 16. The sum of the nearest three numbers

Among the database accounts in DTS, the accounts of MySQL database and mongodb database appear most. What are the specific accounts

Class notes (4) (3) -573. Lecture hall arrangement (Hall)

Sqlserver BCP parameter interpretation, character format selection and fault handling summary

Drawing library Matplotlib drawing

P2404 splitting of natural numbers

Scientific computing toolkit SciPy Fourier transform

Make good use of these seven tips in code review, and it is easy to establish your opposition alliance

CAD calls mobile command (COM interface)

MySQL - multi table query - seven join implementations, set operations, multi table query exercises

731. My schedule II (segment tree or scoring array)