[OceanBase] Introduction to OceanBase and its comparison with MySQL

2022-06-25 12:07:00 【Rookie millet】

Overview

OceanBase is a general-purpose distributed relational database developed in-house by Alibaba and Ant Financial, positioned as a commercial enterprise database. OceanBase provides financial-grade reliability. It is currently used mainly in the financial industry, but it is also suitable for non-financial scenarios. It combines the advantages of traditional relational databases and distributed systems, builds a database cluster out of ordinary PC servers, and offers excellent linear scalability.

By implementing the Paxos majority protocol and multi-replica features in its underlying distributed engine, OceanBase achieves high availability and disaster tolerance, lives up to its reputation as a database system that "never goes down", and supports high-availability deployments such as multi-site active-active and remote disaster recovery.

OceanBase is a quasi-in-memory database system. Its distinctive read-write separation architecture and its storage engine designed for SSDs give users very high performance.

OceanBase is positioned as a cloud database. Because multi-tenant isolation is implemented inside the database, one cluster can serve multiple tenants, and the tenants are fully isolated and do not affect each other.

OceanBase is currently fully compatible with MySQL, so users can migrate from MySQL to OceanBase at zero cost. OceanBase also implements partitioned tables and secondary partitioning inside the database, which can completely replace the database-and-table splitting (sharding) schemes commonly used with MySQL.

OceanBase's storage engine

OceanBase is essentially a baseline-plus-increment storage engine, which is very different from the storage engines of traditional relational databases. Its storage mechanism is the LSM tree (Log-Structured Merge Tree), which is also the storage mechanism used by most NoSQL systems. OceanBase adopts a read-write separation architecture and divides data into baseline data and incremental data: the incremental data is kept in memory (MemTable), and the baseline data is kept on SSD (SSTable). Although it was not deliberately designed for this, OceanBase is indeed better suited than traditional databases to scenarios with a sudden burst of heavy traffic over a short time, such as Double Eleven, flash sales, and coupon sales:

- A large number of users flood in within a short time, business traffic is very high for a short period, and the database system is under great pressure.

- After a period of time (seconds, a few minutes, or half an hour), business traffic drops rapidly or significantly.

OceanBase uses a "baseline data (disk)" + "incremental modifications (memory)" architecture, as shown in the figure below.

The entire database uses disk (usually SSD) as its carrier. Modifications are incremental data and are written only to memory: recent inserts, deletes, and updates (the incremental modifications) stay in memory, so DML is a pure in-memory operation with very high performance. The baseline data is stored on disk, so OceanBase can be regarded as a quasi-in-memory database. The benefits are:

- Write transactions execute in memory (except that the transaction log must be persisted to disk), which improves performance.

- There are no random disk writes, so random disk reads are not disturbed, and system performance during peak hours improves significantly. For a traditional database, the business peak is usually also the peak of random disk writes (flushing dirty pages); heavy random writes consume a lot of I/O and, especially given SSD write amplification, have a large impact on read and write performance.

- The baseline data is read-only, so caching is simple and effective.

When data is read, it may have a newer version in memory and a baseline version in persistent storage, and the two versions must be merged to obtain the latest version. OceanBase also implements a Block Cache and a Row Cache to avoid random reads of baseline data. When the incremental data in memory reaches a certain size, a merge of incremental data and baseline data is triggered and the incremental data is flushed to disk (this is called a dump, or minor freeze). In addition, during the idle period each night, the system automatically performs a daily merge (a merge for short, or major freeze).
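The read path and merge described above can be illustrated with a minimal sketch. It is a toy model under stated assumptions, not OceanBase's implementation: the class name `BaselinePlusIncrementStore`, the `memtable_limit` parameter, and the use of plain dictionaries for the MemTable and SSTable are all hypothetical.

```python
from typing import Dict, Optional

_DELETED = object()  # tombstone: marks a row deleted in the in-memory increment


class BaselinePlusIncrementStore:
    """Toy model: a read-only baseline plus an in-memory increment."""

    def __init__(self, memtable_limit: int = 3) -> None:
        self.baseline: Dict[str, str] = {}      # stands in for the on-disk SSTable
        self.memtable: Dict[str, object] = {}   # stands in for the MemTable
        self.memtable_limit = memtable_limit    # hypothetical merge threshold

    def put(self, key: str, value: str) -> None:
        self.memtable[key] = value              # DML only touches memory
        self._maybe_merge()

    def delete(self, key: str) -> None:
        self.memtable[key] = _DELETED
        self._maybe_merge()

    def get(self, key: str) -> Optional[str]:
        if key in self.memtable:                # the in-memory version is newer
            value = self.memtable[key]
            return None if value is _DELETED else value
        return self.baseline.get(key)           # otherwise fall back to the baseline

    def _maybe_merge(self) -> None:
        if len(self.memtable) >= self.memtable_limit:
            self.merge()

    def merge(self) -> None:
        """Fold the increment into a new baseline, then clear memory."""
        new_baseline = dict(self.baseline)
        for key, value in self.memtable.items():
            if value is _DELETED:
                new_baseline.pop(key, None)
            else:
                new_baseline[key] = value
        self.baseline = new_baseline
        self.memtable = {}
```

Until `merge()` runs, the baseline dictionary is never modified, which is the property that makes caching the baseline simple.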

Why does OB use this special architecture? In short, it rests on the following observation: although the total amount of data in a database keeps growing and the number of records keeps increasing, the amount of data inserted, deleted, and modified each day is not large and accounts for only a small fraction of the whole database. This holds not only for Alipay's payment data but also for most other databases in practice, and it is the key theoretical basis on which OB's storage engine is built.

In Log-Structured Merge-Tree (LSM-tree), "log-structured" means the data is written like a log: it is only ever appended. "Merge-tree" means that multiple files are merged into one. The biggest feature of the LSM-tree is its fast write speed, which comes mainly from sequential disk writes. Like a B-tree storage engine, an LSM-tree storage engine supports insert, delete, read, update, and sequential scan operations, and it avoids the random disk write problem by writing in batches. Of course, everything has trade-offs: compared with a B+ tree, an LSM-tree sacrifices some read performance in exchange for much better write performance.

The design idea of the LSM-tree is very simple: keep incremental changes to the data in memory and, once they accumulate to a specified size, merge-sort the in-memory data and append it to the files on disk (because all the trees being merged are sorted, they can be merged quickly with a merge sort). Reads are a little more troublesome, because the historical data on disk must be merged with the latest modifications in memory. So write performance improves greatly, while a read first checks whether it hits memory and otherwise has to access one or more disk files.

The log-structured storage engine is a relatively new approach. Its key idea is to systematically convert random writes into sequential writes; because of the performance characteristics of disks, its write performance is several times higher than that of a B-tree storage engine, while its read performance is correspondingly lower. A B-tree puts all the pressure on the write operation: going from the root node down to the location where the data is stored may require reading the file several times, and the actual insert may cause page splits and write the file several times. With an LSM-tree, an insert is written directly to memory, where an ordered data structure such as a red-black tree or a skip list keeps the data sorted, so it can provide higher write throughput. The basic idea of the LSM-tree is simple and effective: it supports range queries efficiently and supports very high write throughput. However, when an LSM-tree looks up a key that does not exist in the database, it has to check the index and all segment files; to optimize this case, storage engines usually use Bloom filters.
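To make the Bloom filter optimization concrete, here is a minimal sketch of how a lookup might skip segment files that cannot contain a key. The `BloomFilter` class, the `lookup` helper, the bit-array size, and the choice of SHA-256 as the hash are illustrative assumptions, not details of any particular storage engine.

```python
import hashlib


class BloomFilter:
    """Small Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for clarity

    def _positions(self, key: str):
        # Derive several bit positions by salting one hash with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: str) -> bool:
        return all(self.bits[pos] for pos in self._positions(key))


def lookup(key, segments):
    """segments: list of (BloomFilter, dict) pairs, newest first.

    Returns (value_or_None, number_of_segments_actually_read).
    """
    segments_read = 0
    for bloom, data in segments:
        if not bloom.might_contain(key):
            continue                  # definitely absent: skip this segment
        segments_read += 1            # possible hit: pay for the segment read
        if key in data:
            return data[key], segments_read
    return None, segments_read
```

For a key that was never written, most segments answer "definitely absent" from the filter alone, so the lookup avoids reading them.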

How OceanBase ensures write atomicity

What is the difficulty of distributed database technology? In short, if the accounts must be right down to the last cent, the database must support transactions, and transactions follow the ACID principles. The four letters stand for: A, atomicity; C, consistency; I, isolation; D, durability.

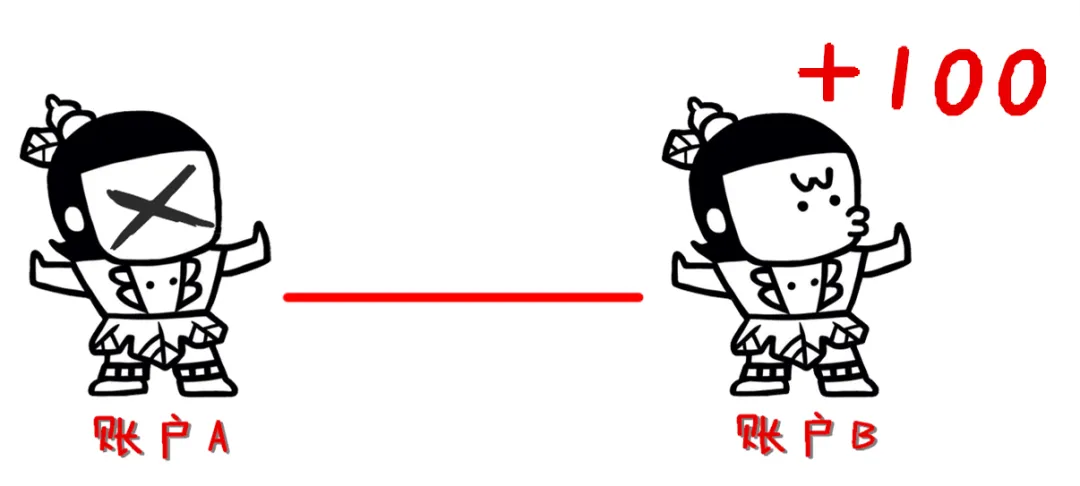

- Atomicity: the operations in a transaction either all complete or none of them do; there is no intermediate state. For example, when A transfers 100 yuan to B, 100 yuan is deducted from A's account and, at the same time, 100 yuan must be added to B's account. The two operations must be bound together like an atom; a situation where "A's money has been deducted but B has not received it" must never occur.

- Consistency: when A transfers 100 yuan to B, the moment the transfer completes, A checking the latest balance must see the amount after the 100 yuan deduction, and B must see the balance after the 100 yuan was added. All accounts must balance exactly at every moment.

- Isolation: multiple concurrent transactions do not affect each other. A transferring 100 yuan to B has no effect on C.

- Durability: once a transaction succeeds, it is never lost. Once A's transfer of 100 yuan to B completes, it takes effect permanently.

Consistency is the goal; atomicity, isolation, and durability are the means. As long as the A, I, and D in ACID are done well, C follows naturally.

Because the data is scattered across multiple database servers (the database has been split), the biggest difficulty of distributed writes is guaranteeing the A in ACID: atomicity.

For example, suppose A transfers 100 yuan to B. Because this is a "distributed database", user A's account data is stored on machine A and user B's account data is stored on machine B.

If, during the transaction, machine A (responsible for deducting 100 yuan from account A) crashes and the deduction fails, while machine B (responsible for adding 100 yuan to account B) works normally and adds the 100 yuan, Alipay loses 100 yuan.

Conversely, if machine A works normally and has deducted the 100 yuan, while machine B crashes and the 100 yuan is never added, the user loses 100 yuan, and in the end Alipay has to compensate the user 100 yuan.

To make sure neither of these embarrassing situations happens, OceanBase 1.0 uses a whole set of techniques, of which two are the most important.

Voting mechanism

The database uses three replicas: for every account there is one primary copy and two backup copies, three copies of the same data in total.

For example, account A's data must exist on three machines at the same time. A transfer is complete only after at least two of the machines have executed it, which means that if one of the three machines fails, the transfer is not affected. Meanwhile, the three machines send each other heartbeat signals in real time. If one machine goes down, the other two notice immediately; after finishing the transaction at hand they raise an alarm, and the system automatically assigns them a new partner so that three machines continue working together. The failed machine is taken out and handed to a technician for repair; once repaired, it goes back on the rack as a standby machine, waiting to rejoin service.

In other words, unless two machines fail at exactly the same moment, down to the millisecond, the database keeps working.

Even that is not enough: at critical points, OceanBase uses five nodes for voting. In that case, unless three machines fail at exactly the same moment, the database keeps working. The probability of that is vanishingly small.

In the storage layer, OceanBase uses partition-level Paxos log synchronization to achieve high availability and automatic scaling, and it supports flexible storage architectures, including local storage and the separation of storage and compute. Classic centralized databases usually adopt primary-standby synchronization, which has two modes. The first is strong synchronization: every transaction must be synchronously replicated to the standby before the user gets a response. This mode loses no data when a server fails, but the service must stop, so availability cannot be guaranteed. The other is asynchronous replication: every transaction responds to the user as soon as it succeeds on the primary. This mode achieves high availability, but the primary and the standby can be inconsistent, and data is lost when the standby is promoted to primary.

Strong consistency and high availability are the two most important properties of a database, and in primary-standby synchronization mode you cannot have both. Paxos is a majority-based distributed voting protocol: every transaction must be successfully written to more than half of the servers before the user gets a response. As the saying goes, "three cobblers are better than one Zhuge Liang". Suppose there are 3 servers and every transaction must succeed on at least 2 of them. Then whichever single server fails, the system still has at least 1 working server that contains all the data, so no data is lost at all, and a new primary is elected to restore service within 30 seconds: RPO equals 0 and RTO is less than 30 seconds. All protocols that guarantee RPO = 0 are Paxos or a variant of Paxos; Raft is one of them.
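The majority arithmetic behind the three-replica and five-replica examples can be written out directly. This is only the counting rule, not a Paxos implementation; the function names are made up for illustration.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that is more than half."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many replicas can fail while a majority is still reachable."""
    return replicas - majority(replicas)


def is_committed(acks: int, replicas: int) -> bool:
    """A write counts as committed once a majority has acknowledged it."""
    return acks >= majority(replicas)


def majorities_overlap(replicas: int) -> bool:
    """Any two majorities share at least one replica, so a new primary
    elected by a majority always includes a replica that holds every
    committed write."""
    return 2 * majority(replicas) > replicas
```

With 3 replicas, `majority(3)` is 2 and one failure is tolerated; with 5 replicas, `majority(5)` is 3 and two failures are tolerated, which matches the "unless two (or three) machines fail at the same moment" statements above.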

Monitoring mechanism

The monitoring mechanism is in fact the two-phase commit protocol (2PC) in practice. On reflection, machine failure is not the only thing that can break the atomicity of a transaction.

For example, 100 yuan is to be deducted from account A, but its balance is only 90 yuan, or it has already reached today's transfer limit. In this case, if 100 yuan is hastily added to account B while account A cannot cover the deduction, there will be trouble.

Conversely, if account B is in an abnormal state and the 100 yuan cannot be added, there will also be trouble. The solution is to introduce a "referee". The referee stands beside account A and account B, and its workflow is as follows:

- The referee asks account A: are your three machines all right? Account A says: yes.

- The referee asks account A: can 100 yuan be deducted from your account? Account A says: yes.

- The referee asks account B: are your three machines all right? Account B says: yes.

- The referee asks account B: can your account accept 100 yuan in its current state? Account B says: yes.

- At this point, the referee blows the whistle, and accounts A and B are frozen at the same time.

- 100 yuan is deducted from A and added to B, and both sides report "success" to the referee.

- The referee blows the whistle again, and accounts A and B are unfrozen at the same time.

The 7 steps above are carried out in strict order. If the process gets stuck at any step, the accounts do not become inconsistent and not a cent is lost. This fully satisfies the requirement of atomicity.

The referee plays the role of the coordinator in 2PC.
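The referee's workflow can be sketched as a tiny single-process coordinator. This is a simplification under explicit assumptions: the `Account` class, its `healthy` flag (standing in for "are your three machines all right?"), and the `transfer` function are hypothetical, and the sketch omits the logging and crash recovery that a real two-phase commit needs.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    balance: int
    healthy: bool = True   # stands in for "are your replicas all right?"
    frozen: bool = False

    def can_apply(self, delta: int) -> bool:
        """Prepare-phase vote: yes only if healthy and the balance stays >= 0."""
        return self.healthy and self.balance + delta >= 0


def transfer(src: Account, dst: Account, amount: int) -> bool:
    """The 'referee': returns True if committed, False if aborted."""
    changes = [(src, -amount), (dst, +amount)]

    # Phase 1 (prepare): ask every participant; a single "no" aborts everything.
    if not all(account.can_apply(delta) for account, delta in changes):
        return False

    # Phase 2 (commit): freeze both accounts, apply both changes, unfreeze.
    for account, _ in changes:
        account.frozen = True
    try:
        for account, delta in changes:
            account.balance += delta
    finally:
        for account, _ in changes:
            account.frozen = False
    return True
```

In the 90 yuan example above, `transfer` returns `False` during the prepare phase, so neither balance changes.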

How OceanBase ensures transaction isolation

A database system cannot serve only one user; it must allow multiple users to operate on data at the same time, that is, concurrently. It therefore has to guarantee isolation among concurrent transactions, which involves concurrency control. Common concurrency control methods include lock-based concurrency control and multi-version concurrency control (MVCC).

Lock based concurrency control

The database system locks every row that a user operates on during a transaction: a read takes a read lock and a write takes a write lock. Reads and writes block each other, and writes block each other. If two different transactions try to modify the same row, transaction 1 acquires the write lock first and executes normally, and transaction 2 can only wait; after transaction 1 finishes and releases the write lock, transaction 2 continues.

Take a transfer as an example. Account A has 100 yuan and account B has 200 yuan. If transaction 1 transfers 10 yuan from account A to account B, then while transaction 1 is executing, the rows for both account A and account B are locked. If transaction 2 performs another transfer, 50 yuan from account A to account B, its attempt to take the write lock on A fails, and transaction 2 waits until it can acquire the write lock.

If, while transactions 1 and 2 are executing, a transaction 3 wants to query the total balance of accounts A and B, it has to read both rows and take read locks before reading. While transactions 1 and 2 are operating, taking the read locks fails, so transaction 3 has to wait until transactions 1 and 2 have finished.
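The blocking behavior in this example can be modeled with a small lock table. The sketch below is an assumption-laden illustration: `LockTable` and its methods are hypothetical, and a failed `try_*` call simply returns `False` where a real database would make the transaction wait.

```python
from collections import defaultdict


class LockTable:
    """Row-level read/write locks: reads share, writes are exclusive."""

    def __init__(self) -> None:
        self.readers = defaultdict(set)  # row -> ids of transactions holding read locks
        self.writer = {}                 # row -> id of the transaction holding the write lock

    def try_read(self, txn: int, row: str) -> bool:
        holder = self.writer.get(row)
        if holder is not None and holder != txn:
            return False                 # a read is blocked by another transaction's write
        self.readers[row].add(txn)
        return True

    def try_write(self, txn: int, row: str) -> bool:
        holder = self.writer.get(row)
        if holder is not None and holder != txn:
            return False                 # a write is blocked by another write
        if self.readers[row] - {txn}:
            return False                 # a write is blocked by other readers
        self.writer[row] = txn
        return True

    def release_all(self, txn: int) -> None:
        """Release every lock the transaction holds (called when it ends)."""
        for row in list(self.writer):
            if self.writer[row] == txn:
                del self.writer[row]
        for holders in self.readers.values():
            holders.discard(txn)
```

Replaying the example: transaction 1 write-locks rows A and B, so transaction 2's `try_write(2, "A")` and transaction 3's `try_read(3, "A")` both fail until transaction 1 calls `release_all(1)`.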

Multi version concurrency control

In the example above, the operation that reads the total balance of accounts A and B returns a definite result (300 yuan) regardless of whether transactions 1 and 2 have finished. But with lock-based concurrency control, reads and writes block each other, which greatly reduces the database's concurrency. This is where MVCC comes in. For each piece of data in the database, every modification keeps the historical versions, so a read can be served directly from a historical version without being blocked by writes.

In MVCC, every transaction has a commit version. Continuing the transaction 3 example above, suppose the initial version of the data is 98, transaction 1's version number is 100, and transaction 2's is 101. After both modifications complete, the data in the database is as follows:

- Account A: 100 yuan at version 98, 90 yuan at version 100, 40 yuan at version 101

- Account B: 200 yuan at version 98, 210 yuan at version 100, 260 yuan at version 101

The records of each modification to a row are chained together. There is also a global variable, Global Committed Version (GCV), which holds the version number of the most recent global commit. GCV is 98 before transaction 1 executes, becomes 100 after transaction 1 commits, and becomes 101 after transaction 2 commits. So when transaction 3 starts, it first obtains GCV and then reads the data corresponding to that version number.

Modifications under MVCC still rely on the locking mechanism described above, so conflicting writes still have to wait. The biggest advantage of MVCC is that reads are completely decoupled from writes and do not affect each other, which greatly benefits the database's performance and concurrency.
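A version chain with snapshot reads can be sketched in a few lines. The `VersionedRow` class is hypothetical; the balances are derived from the transfers in the example (transaction 1 moves 10 yuan and transaction 2 moves 50 yuan from A to B).

```python
class VersionedRow:
    """A row that keeps every committed version, oldest first."""

    def __init__(self, version: int, value: int) -> None:
        self.versions = [(version, value)]

    def write(self, version: int, value: int) -> None:
        # Assumption: versions are appended in increasing order.
        self.versions.append((version, value))

    def read(self, snapshot: int) -> int:
        """Return the newest value whose version is not newer than the snapshot."""
        for version, value in reversed(self.versions):
            if version <= snapshot:
                return value
        raise KeyError("no version visible at this snapshot")


account_a = VersionedRow(98, 100)
account_b = VersionedRow(98, 200)

# Transaction 1 (version 100): transfer 10 yuan from A to B.
account_a.write(100, 90)
account_b.write(100, 210)

# Transaction 2 (version 101): transfer 50 yuan from A to B.
account_a.write(101, 40)
account_b.write(101, 260)


def total_balance(gcv: int) -> int:
    """Transaction 3: read both rows at the snapshot given by GCV."""
    return account_a.read(gcv) + account_b.read(gcv)
```

Whichever GCV transaction 3 observes (98, 100, or 101), `total_balance` sees a consistent pair of rows, and it never has to wait for a writer.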

The scheme OceanBase uses

With MVCC, GCV must be advanced in increasing order: if GCV is changed to 101, that means transaction 101 and all earlier transactions have committed. Once OceanBase uses distributed transactions, this guarantee becomes hard to maintain. For example, the transaction with version number 100 may be a distributed transaction while 101 is a single-machine transaction, and committing a distributed transaction takes significantly longer than committing a single-machine one. As a result, transaction 101 finishes first and GCV is changed to 101, but transaction 100 is still committing and cannot be read yet.

Therefore, OceanBase combines MVCC with the lock-based approach. A read first obtains GCV and then reads each row according to that version number. If a row has no modification in progress, or has one whose version number will be greater than GCV, the row can be read directly at that version. If a row has a transaction that is in the middle of committing and whose version number may be smaller than the version number used for the read, the read has to wait for that transaction to finish, just as a read lock waits for a write lock in the lock-based scheme. The probability of this happening is very low, however, so overall performance is still as good as with pure MVCC.
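The read rule in the last paragraph can be expressed as a small decision function. This is an interpretation of the prose, not OceanBase code: the `Row` class, the `pending_min_version` field (the lowest version an in-flight commit could end up with), and the convention of returning `None` for "must wait" are all assumptions made for the sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Row:
    # Committed (version, value) pairs, oldest first.
    committed: List[Tuple[int, int]] = field(default_factory=list)
    # Lowest version a transaction currently committing on this row could get;
    # None means no commit is in flight on this row.
    pending_min_version: Optional[int] = None


def try_read(row: Row, read_version: int) -> Optional[int]:
    """Return the visible value, or None if the read has to wait."""
    pending = row.pending_min_version
    if pending is not None and pending <= read_version:
        # The in-flight commit might land at or below our snapshot: wait for it.
        return None
    # No conflicting commit in flight: plain MVCC snapshot read.
    for version, value in reversed(row.committed):
        if version <= read_version:
            return value
    raise KeyError("no committed version visible at this snapshot")
```

In the scenario above, a read at version 101 on a row where transaction 100 is still committing returns `None` (wait), while a read at version 99 on the same row proceeds immediately.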

OceanBase high availability

High availability is one of the basic requirements of a database. Traditional databases support primary-standby mirroring through log synchronization. However, because the primary and the standby in such a mirror cannot be kept fully synchronized, the high availability of a traditional database actually rests on highly reliable servers and highly reliable shared storage.

OceanBase uses cost-effective, slightly less reliable servers, but it stores the same data on more than half of a group of (>= 3) servers (for example, 2 of 3 or 3 of 5), and every write transaction must reach more than half of the servers before it takes effect, so no data is lost when a minority of servers fail. Moreover, when the primary fails in a traditional primary-standby mirror, promoting the standby to primary usually requires external tools or manual intervention. OceanBase implements the Paxos high-availability protocol at the bottom layer: after the primary fails, the remaining servers quickly and automatically elect a new primary and continue to provide service, so OceanBase can switch over automatically without losing data.

OceanBase compared with MySQL

1. OB's redo log is implemented with the distributed consensus algorithm Paxos. So in terms of the CAP theorem, although OB uses a strong consistency model, it can remain highly available under certain network partitions (generally, it keeps working as long as more than half of the machines are alive). Official MySQL currently cannot do this.

2. OB's storage structure is a two-level LSM tree. The leaf nodes of the in-memory C0 B-tree do not need to be the same size as those of an on-disk B-tree, so they can be made smaller, which is friendly to the CPU cache and avoids write amplification. This greatly improves OB's write performance. Meanwhile, the on-disk C1 tree is not a B-tree in the traditional sense (an uncompressed B-tree can waste half of its space), so space utilization is greatly improved. Simply put: it is fast and it saves cost.

3. Automatic database sharding (hash and range partitioning, first-level and second-level), with an independent proxy that routes writes, queries, and other operations to the corresponding partition. This means that no matter how large the data volume is, there is no need to split databases and tables manually. Shards can also be migrated online between servers in all zones, which resolves hotspots (uneven resource distribution) and allows machines to be added and removed elastically. Each shard (to be precise, the partition replica elected as leader) supports both reads and writes, which enables multi-point writes (high throughput, linearly scalable performance).

4. Non-blocking two-phase commit implemented inside the database (for cross-machine transactions).

5. Native multi-tenant support in the database, which can directly isolate CPU, memory, I/O, and other resources between tenants.

6. High availability. In OceanBase, the same data is stored on more than half of a group of (>= 3) servers (for example, 2 of 3), and every write transaction must reach more than half of the servers before it takes effect, so no data is lost when a minority of servers fail and RPO equals zero. Moreover, OceanBase implements the Paxos high-availability protocol at the bottom layer: after the primary fails, the remaining servers quickly and automatically elect a new primary, switch over automatically, and continue to provide service. In real production systems, RTO is within 20 seconds.

7. Scalability. With database middleware, MySQL can scale horizontally by splitting databases and tables. This solves the common problem that traditional databases have to scale vertically, but it still has considerable limitations: scaling out and in is difficult, global indexes cannot be supported, data consistency is hard to guarantee, and so on. OceanBase provides true horizontal scalability at the database level. Because it is built as a distributed system, scaling out and in is convenient and can be transparent to users. In addition, OceanBase's dynamic load balancing within the cluster, multi-machine distributed queries, and global indexes further strengthen its scalability. For users' database-and-table splitting schemes, OceanBase provides partitioned tables and secondary partitioning, which can replace them completely.
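As an illustration of the partition routing mentioned in item 3, the sketch below maps a row to a first-level range partition and a second-level hash partition. Everything in it is hypothetical: the yearly range bounds, the number of hash partitions, the `route` function, and the use of CRC32 as the hash are choices made for the example and say nothing about how OceanBase's proxy actually routes requests.

```python
import bisect
import zlib

# Hypothetical layout: first-level range partitions by year, using
# "values less than" upper bounds, and 4 second-level hash partitions.
RANGE_UPPER_BOUNDS = [2021, 2022, 2023]
HASH_PARTITIONS = 4


def route(year: int, user_id: str) -> tuple:
    """Return (range_partition, hash_subpartition) for a row."""
    # First level: the first range whose upper bound is greater than the year.
    range_part = bisect.bisect_right(RANGE_UPPER_BOUNDS, year)
    if range_part == len(RANGE_UPPER_BOUNDS):
        raise ValueError("year is beyond the last range partition")
    # Second level: a stable hash, so every router picks the same partition.
    hash_part = zlib.crc32(user_id.encode("utf-8")) % HASH_PARTITIONS
    return range_part, hash_part
```

Because the mapping is a pure function of the partitioning key, the application does not need to know which server holds a partition; only the router does.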

For the history of OceanBase, see: "OceanBase: Ants climb onto the stage"
