
[AI Practice] Tuning xgb.XGBRegressor with MultiOutputRegressor, Part 2 (GPU Training)

2022-06-23 07:14:00 【szZack】

Multi-output regression with xgb.XGBRegressor and MultiOutputRegressor: parameter tuning, part 2 (training the model on the GPU)

Environment

- Ubuntu 18.04

- Python 3.6.9

- TensorFlow 2.4.2

- CUDA 11.0

- xgboost 1.5.2

Dependencies

```python
import numpy as np  # needed by performance_metric below
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.model_selection import GridSearchCV  # grid search
from sklearn.metrics import make_scorer
from sklearn.metrics import r2_score
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.multioutput import MultiOutputRegressor
import xgboost as xgb
import joblib
```

Core code

```python
def tune_parameter(train_data_path, test_data_path, n_input, n_output, version):
    # Hyperparameter tuning.
    # load_data is the author's own data-loading helper; it is not shown in this post.
    x, y = load_data(version, 'train', train_data_path, n_input, n_output)
    train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.2, random_state=2022)
    gsc = GridSearchCV(
        estimator=xgb.XGBRegressor(seed=42, tree_method='gpu_hist', gpu_id=3),
        param_grid={
            "learning_rate": [0.05, 0.10, 0.15],
            "n_estimators": [400, 500, 600, 700],
            "max_depth": [3, 5, 7],
            "min_child_weight": [1, 3, 5, 7],
            "gamma": [0.0, 0.1, 0.2],
            "colsample_bytree": [0.7, 0.8, 0.9],
            "subsample": [0.7, 0.8, 0.9],
        },
        cv=3,
        scoring='neg_mean_squared_error',
        verbose=0,
        n_jobs=4)
    # MultiOutputRegressor clones the grid search and runs it once per output column
    grid_result = MultiOutputRegressor(gsc).fit(train_x, train_y)
    # best_params = grid_result.estimators_[0].best_params_
    print('-' * 20)
    print('best_params:')
    # Each fitted estimator is a GridSearchCV, so every output has its own best parameters
    for i, search in enumerate(grid_result.estimators_):
        print(i, search.best_params_)
    model = grid_result
    pre_y = model.predict(test_x)
    print('-' * 20)
    # Compute the coefficient of determination (R^2)
    r2 = performance_metric(test_y, pre_y)
    print('test_r2 = ', r2)


def performance_metric(y_true, y_predict):
    # Convert to arrays so the element-wise errors work for DataFrames and ndarrays alike
    y_true = np.asarray(y_true)
    y_predict = np.asarray(y_predict)
    score = r2_score(y_true, y_predict)
    MSE = np.mean((y_predict - y_true) ** 2)
    print('RMSE: ', MSE ** 0.5)
    MAE = np.mean(np.abs(y_predict - y_true))
    print('MAE: ', MAE)
    return score
```

To save the model after tuning, add the following line to tune_parameter, right after model = grid_result:

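```python
# The ./ml_data directory must already exist; joblib.dump does not create it
joblib.dump(model, './ml_data/xgb_%d_%d_%s.model' % (n_input, n_output, version))
```

Tuning the parameters: edit param_grid to define your own search space: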

```python
param_grid = {
    "learning_rate": [0.05, 0.10, 0.15],
    "n_estimators": [400, 500, 600, 700],
    "max_depth": [3, 5, 7],
    "min_child_weight": [1, 3, 5, 7],
    "gamma": [0.0, 0.1, 0.2],
    "colsample_bytree": [0.7, 0.8, 0.9],
    "subsample": [0.7, 0.8, 0.9],
}
```

n_jobs=4 sets how many grid-search jobs run in parallel. Choose a value that suits your machine. Setting n_jobs=-1 (use all CPU cores) easily leads to errors.
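This search space is large, and the count below shows how large. It is a plain-Python sketch: the helpers grid_size and total_fits are hypothetical and not part of scikit-learn. GridSearchCV fits every parameter combination once per CV fold. With its default refit=True, it then refits the best combination on the whole training set. MultiOutputRegressor repeats the whole search for each output column. The two-output case is only an example, because the post does not say what n_output is.

```python
from functools import reduce
import operator

# The search space used in the post
param_grid = {
    "learning_rate": [0.05, 0.10, 0.15],
    "n_estimators": [400, 500, 600, 700],
    "max_depth": [3, 5, 7],
    "min_child_weight": [1, 3, 5, 7],
    "gamma": [0.0, 0.1, 0.2],
    "colsample_bytree": [0.7, 0.8, 0.9],
    "subsample": [0.7, 0.8, 0.9],
}


def grid_size(grid):
    """Number of candidate combinations: the product of the list lengths."""
    return reduce(operator.mul, (len(values) for values in grid.values()), 1)


def total_fits(grid, cv, n_outputs, refit=True):
    """Model fits performed by MultiOutputRegressor(GridSearchCV(...)).

    Every output column gets its own grid search. Each candidate is fitted
    once per CV fold, plus one final refit of the best candidate when refit=True.
    """
    per_output = grid_size(grid) * cv + (1 if refit else 0)
    return per_output * n_outputs


print(grid_size(param_grid))                        # 3*4*3*4*3*3*3 = 3888 candidates
print(total_fits(param_grid, cv=3, n_outputs=2))    # 2 * (3888*3 + 1) = 23330 fits
```

Each of those fits trains 400 to 700 trees, so trimming a few values from param_grid shrinks the tuning time multiplicatively.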

GPU parameter settings:

```python
estimator=xgb.XGBRegressor(seed=42, tree_method='gpu_hist', gpu_id=3)
```

tree_method='gpu_hist' makes XGBoost train the model on the GPU.

gpu_id=3 selects the GPU with device index 3. Indices start at 0, so this is the fourth GPU.
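The settings above match the post's xgboost 1.5.2. The sketch below assumes that XGBoost 2.0 and later deprecate tree_method='gpu_hist' and gpu_id, and replace them with tree_method='hist' plus device='cuda:<ordinal>'. Under that assumption, it picks the matching keyword set from a version string. xgb_gpu_kwargs is a hypothetical helper, not an XGBoost API. The device ordinal is zero-based and counts only the GPUs visible to the process, so setting CUDA_VISIBLE_DEVICES renumbers them from 0.

```python
def xgb_gpu_kwargs(xgb_version, gpu_id=0):
    """Return XGBRegressor keyword arguments for GPU training (hypothetical helper).

    Assumption: XGBoost 1.x uses tree_method='gpu_hist' with gpu_id, while
    XGBoost 2.0+ uses tree_method='hist' with device='cuda:<ordinal>'.
    gpu_id is a zero-based CUDA device ordinal.
    """
    major = int(xgb_version.split(".")[0])
    if major >= 2:
        return {"tree_method": "hist", "device": "cuda:%d" % gpu_id}
    return {"tree_method": "gpu_hist", "gpu_id": gpu_id}


print(xgb_gpu_kwargs("1.5.2", gpu_id=3))
```

With xgboost imported, this could be used as xgb.XGBRegressor(seed=42, **xgb_gpu_kwargs(xgb.__version__, gpu_id=3)). On xgboost 1.5.2 it yields the same tree_method='gpu_hist', gpu_id=3 settings shown above.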

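performance_metric reports R² through scikit-learn's r2_score. When the target has several columns, r2_score by default averages the per-column R² values with equal weights (multioutput='uniform_average'). The RMSE and MAE lines instead average over every element of the error matrix. The dependency-free sketch below reproduces those three numbers on a tiny hand-made example, so the averaging is easy to check. multioutput_metrics is a hypothetical name. Unlike r2_score, it does not special-case a constant target column, which would divide by zero here.

```python
from statistics import mean


def multioutput_metrics(y_true, y_pred):
    """Return (r2, rmse, mae) for row-major 2-D lists of shape (n_samples, n_outputs).

    r2 averages the per-column R^2 with equal weights, like r2_score's default.
    rmse and mae average over all elements, like np.mean in performance_metric.
    """
    n_outputs = len(y_true[0])
    r2_per_output = []
    for j in range(n_outputs):
        col_true = [row[j] for row in y_true]
        col_pred = [row[j] for row in y_pred]
        col_mean = mean(col_true)
        ss_res = sum((t - p) ** 2 for t, p in zip(col_true, col_pred))
        ss_tot = sum((t - col_mean) ** 2 for t in col_true)
        r2_per_output.append(1 - ss_res / ss_tot)
    errors = [p - t for row_t, row_p in zip(y_true, y_pred) for t, p in zip(row_t, row_p)]
    rmse = mean(e * e for e in errors) ** 0.5
    mae = mean(abs(e) for e in errors)
    return mean(r2_per_output), rmse, mae


# Toy data: column R^2 values are 1 - 1/2 = 0.5 and 1 - 8/200 = 0.96,
# so r2 is their mean 0.73; rmse is sqrt(9/6) and mae is 5/6.
r2, rmse, mae = multioutput_metrics([[1, 10], [2, 20], [3, 30]],
                                    [[1, 12], [2, 18], [4, 30]])
print(r2, rmse, mae)
```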
边栏推荐

猜你喜欢

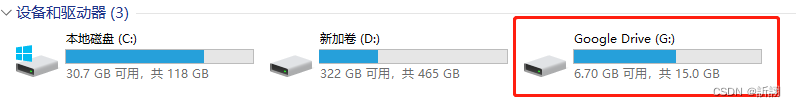

如何优雅的快速下载谷歌云盘的大文件 (二)

TP6+Redis+think-queue+Supervisor实现进程常驻消息队列/job任务

启发式的搜索策略

Configuration and compilation of mingw-w64, msys and ffmpeg

GINet

Interpreting the spirit of unity and cooperation in maker Education

20220621 Three Conjugates of Dual Quaternions

云原生落地进入深水区,博云容器云产品族释放四大价值

Regular expression graph and text ultra detailed summary without rote memorization (Part 1)

闫氏DP分析法

随机推荐

技术文章写作指南

PSP code implementation

A small method of debugging equipment serial port information with ADB

In depth learning series 47:stylegan summary

316. 去除重复字母

SSTable详解

深度学习系列47:超分模型Real-ESRGAN

About professional attitude

控制台程序

898. 子数组按位或操作

307. 区域和检索 - 数组可修改

407-栈与队列(232.用栈实现队列、225. 用队列实现栈)

20220620 uniformly completely observable (UCO)

GloRe

deeplab v3 代码结构图

Vs2013 ffmpeg environment configuration and common error handling

聚焦行业,赋能客户 | 博云容器云产品族五大行业解决方案发布

Deep learning series 47: Super sub model real esrgan

Arthas-thread命令定位线程死锁

306. Addenda