Deep Learning Series 47: StyleGAN Summary

2022-06-23 07:12:00 【IE06】

1. styleGAN 1

One problem with GANs is that they struggle to generate large, high-resolution images. In 2018, NVIDIA's ProGAN was the first to solve this challenge. It starts by training the generator and discriminator on very low-resolution images (such as 4×4), then adds one higher-resolution layer at a time. The initial input has shape [512, 4, 4], the final output has shape [3, 1024, 1024], and the network has 18 layers in total:

18 = 1 (initial conv layer) + 8 × 2 (eight blocks of two convolution layers each, taking the feature map from [4, 4] to [1024, 1024]) + 1 (to_rgb layer, which changes the channel count to 3)
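The layer count above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function name `synthesis_layer_count` and its parameters are hypothetical and do not come from any StyleGAN code base.

```python
import math

def synthesis_layer_count(start_res=4, final_res=1024, convs_per_block=2):
    """Count layers with the breakdown used in the text: 1 + blocks * 2 + 1."""
    # Number of resolution doublings between 4x4 and 1024x1024.
    num_blocks = int(math.log2(final_res // start_res))
    initial_conv = 1
    to_rgb = 1
    return initial_conv + num_blocks * convs_per_block + to_rgb

# Resolutions visited while the feature map doubles in size.
resolutions = [4 * 2 ** i for i in range(9)]

print(synthesis_layer_count())
print(resolutions)
```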

StyleGAN is an upgraded version of ProGAN:

1) A mapping network of 8 FC layers encodes the input latent vector into an intermediate vector w. Similar to a PCA transformation, this decouples different features (hair, eyes, nose, etc.). Without it, the generator suffers from the "feature entanglement" problem: features that are over-represented in the training data end up affecting other features.
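A minimal NumPy sketch of such a mapping network is shown below. The 512-dimensional latent, the leaky ReLU slope of 0.2, the normalization of the input latent, and the random weights are assumptions made for illustration; a trained model would supply learned weights.

```python
import numpy as np

def mapping_network(z, weights, biases, slope=0.2):
    """Map latent z of shape (N, D) to intermediate latent w through FC layers."""
    # Assumption: normalize z to unit average magnitude before the first layer.
    x = z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + 1e-8)
    for W, b in zip(weights, biases):
        x = x @ W + b
        x = np.where(x > 0, x, slope * x)  # leaky ReLU
    return x

rng = np.random.default_rng(0)
dim = 512
# Eight fully connected layers with placeholder (untrained) weights.
weights = [rng.normal(0.0, 1.0 / np.sqrt(dim), size=(dim, dim)) for _ in range(8)]
biases = [np.zeros(dim) for _ in range(8)]

z = rng.normal(size=(4, dim))
w = mapping_network(z, weights, biases)
print(w.shape)
```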

2) The latent variable w passes through a learned affine transformation A and is then fed into every layer, with a separate transformation per layer, where it scales and offsets each channel. This is called the AdaIN module. The scale and offset carry the style information, so different values produce different styles.

Because the synthesis network has 18 layers, w is said to generate 18 control vectors, which control different visual features.
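The scale-and-offset step can be sketched in NumPy as follows. The names `style_from_w` and `adain`, the tensor shapes, and the random affine weights are hypothetical; the sketch only illustrates per-channel normalization followed by a style-dependent scale and offset.

```python
import numpy as np

def style_from_w(w, A_weight, A_bias):
    """Affine transform A: map w of shape (N, D) to a scale and offset per channel."""
    style = w @ A_weight + A_bias            # (N, 2 * C)
    scale, bias = np.split(style, 2, axis=1)  # each (N, C)
    return scale, bias

def adain(x, scale, bias, eps=1e-8):
    """Normalize each feature map of x (N, C, H, W), then apply scale and offset."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    std = x.std(axis=(2, 3), keepdims=True)
    x_norm = (x - mean) / (std + eps)
    return scale[:, :, None, None] * x_norm + bias[:, :, None, None]

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4, 8, 8))     # feature maps with 4 channels
w = rng.normal(size=(2, 16))          # intermediate latent, 16-dim for brevity
A_weight = rng.normal(size=(16, 8))   # placeholder affine weights (2 * 4 outputs)
A_bias = np.zeros(8)

scale, bias = style_from_w(w, A_weight, A_bias)
out = adain(x, scale, bias)
```

After this step, the mean of each output feature map equals its offset and its standard deviation equals the magnitude of its scale, which is why the original statistics of the feature map are lost.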

3) Noise is not added to the original input vector. Instead, it is injected into every layer of the synthesis network, where it perturbs the features that the scale and offset values then modulate.
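A minimal sketch of per-layer noise injection, assuming a single-channel noise image that is broadcast to all feature maps through a per-channel strength. The name `add_noise` and the `noise_strength` parameter are hypothetical.

```python
import numpy as np

def add_noise(x, noise_strength, rng):
    """Add one noise image per sample to x (N, C, H, W), scaled per channel."""
    n, c, h, w = x.shape
    noise = rng.normal(size=(n, 1, h, w))  # shared across channels
    return x + noise_strength[None, :, None, None] * noise

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 4, 4))

unchanged = add_noise(x, np.zeros(3), rng)   # zero strength leaves x untouched
perturbed = add_noise(x, np.ones(3), rng)    # unit strength adds the raw noise
delta = perturbed - x
```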

4) Two latent vectors w, denoted A and B, are used during training, each supplying the style for a subset of the network's layers:

When the (4x4 - 8x8) resolution layers use B's style and the rest use A's, the identity of the image follows source B, but details such as skin color follow source A;

When the (16x16 - 32x32) resolution layers use B's style, the generated image no longer has B's identity; the hairstyle, pose and so on change, but the skin color still follows A;

When the (64x64 - 1024x1024) resolution layers use B's style, the identity follows A and the skin color follows B.
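The three cases above can be sketched as a choice of which of the 18 per-layer styles come from B. The helpers `layers_for_resolutions` and `mix_styles` are hypothetical, and the sketch assumes two layers per resolution, indexed from 0 at 4x4.

```python
import math

def layers_for_resolutions(lo, hi):
    """Indices of the layers that operate at resolutions lo..hi (two per resolution)."""
    first = int(math.log2(lo)) - 2   # 4x4 is block 0
    last = int(math.log2(hi)) - 2
    return set(range(2 * first, 2 * last + 2))

def mix_styles(w_a, w_b, b_layers, num_layers=18):
    """Return one style per layer: w_b on the selected layers, w_a elsewhere."""
    return [w_b if i in b_layers else w_a for i in range(num_layers)]

coarse = layers_for_resolutions(4, 8)
middle = layers_for_resolutions(16, 32)
fine = layers_for_resolutions(64, 1024)

# Strings stand in for the actual latent vectors.
fine_mix = mix_styles("A", "B", fine)
print(sorted(coarse), sorted(middle), sorted(fine))
```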

2. styleGAN 2

StyleGAN2 mainly addresses the "water droplet" artifacts that tend to appear in images generated by StyleGAN. The droplets are caused by the AdaIN operation: AdaIN normalizes each feature map separately, which can destroy the information carried by the relative magnitudes of the feature maps and so produce the artifacts. Once AdaIN is removed, the problem disappears.

The main modification points include :

1) Remove the processing applied to the initial input

2) Drop the mean when normalizing features, so that only the standard deviation is used

3) Move the noise module outside the style module

4) Add weight demodulation, which keeps the feature magnitudes normalized after the style scaling has been applied

5) Lazy regularization: the regularization term is optimized only once every 16 minibatches, which reduces computation with no noticeable effect on the results.
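Weight demodulation from the list above can be sketched as follows: the style scales the convolution weights per input channel, and each output filter is then rescaled to unit L2 norm. The function name and shapes are hypothetical, and a single sample is assumed for brevity.

```python
import numpy as np

def modulate_demodulate(weight, style, eps=1e-8):
    """weight: (out_c, in_c, kh, kw); style: (in_c,) scale per input channel."""
    # Modulate: scale every input channel of the kernel by its style value.
    w = weight * style[None, :, None, None]
    # Demodulate: rescale each output filter to unit L2 norm.
    norm = np.sqrt((w ** 2).sum(axis=(1, 2, 3), keepdims=True) + eps)
    return w / norm

rng = np.random.default_rng(0)
weight = rng.normal(size=(6, 4, 3, 3))
style = rng.uniform(0.5, 2.0, size=4)

w_demod = modulate_demodulate(weight, style)
filter_norms = np.sqrt((w_demod ** 2).sum(axis=(1, 2, 3)))
```

Because the normalization acts on the weights rather than on the feature maps, the relative magnitudes of the feature maps are no longer erased the way they are by AdaIN.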

3. styleGAN 3

During GAN synthesis, some features depend on absolute pixel coordinates. As a result, details appear to stick to the image coordinates rather than to the surface of the object being generated. This is in fact a common problem of GAN models: the generation process is not a natural hierarchy. Coarse features (the outputs of the shallow layers) mainly control whether fine features (the outputs of the deep layers) are present, but do not precisely control where they appear.

Current generator architectures are built from convolutions, nonlinearities, upsampling and similar operations, and such an architecture does not achieve good equivariance.
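Equivariance means that shifting the input and then applying an operation gives the same result as applying the operation and then shifting. The sketch below checks this for a circular 1D convolution, and uses naive subsampling by 2 as a stand-in for a resampling step that breaks the property. Both the 1D setting and the subsampling example are illustrative assumptions and are not taken from the StyleGAN3 code.

```python
import numpy as np

def circular_conv1d(x, kernel):
    """Circular (wrap-around) 1D convolution with a centered kernel."""
    n, k = len(x), len(kernel)
    out = np.zeros(n)
    for i in range(n):
        for j in range(k):
            out[i] += kernel[j] * x[(i + j - k // 2) % n]
    return out

rng = np.random.default_rng(0)
x = rng.normal(size=16)
kernel = np.array([0.25, 0.5, 0.25])

# Convolution commutes with translation.
conv_then_shift = np.roll(circular_conv1d(x, kernel), 3)
shift_then_conv = circular_conv1d(np.roll(x, 3), kernel)
conv_is_equivariant = np.allclose(conv_then_shift, shift_then_conv)

# Naive subsampling does not: a one-sample shift of the input cannot be
# expressed as any shift of the subsampled output.
sub_of_shifted = circular_conv1d(np.roll(x, 1), kernel)[::2]
sub_original = circular_conv1d(x, kernel)[::2]
subsampling_is_equivariant = any(
    np.allclose(sub_of_shifted, np.roll(sub_original, s)) for s in range(8)
)
```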

StyleGAN3 fundamentally solves StyleGAN2's problem of features sticking to image coordinates, achieves true translation and rotation equivariance, and greatly improves the quality of image synthesis.

(1) Replace StyleGAN2's constant generator input with Fourier features

(2) Remove the noise inputs (the position information of a feature should come from the preceding coarse features)

(3) Reduce the network depth (14 layers, down from 18), disable mixing regularization and path length regularization, and apply a simple normalization before each convolution (this directly reverses some of StyleGAN2's ideas)

(4) Replace bilinear upsampling with an approximation of the ideal low-pass filter.

(5) To make the network rotation equivariant, two changes are made: the convolution kernel size of every layer is reduced from 3 to 1, and the number of feature maps is doubled to compensate for the reduced feature capacity
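Change (1) can be illustrated with a small sketch of a Fourier feature input: each channel is a sinusoid over continuous image coordinates, so translating the input amounts to changing the phases. The function name, the coordinate convention, and the random frequencies are assumptions made for illustration only.

```python
import numpy as np

def fourier_features(freqs, phases, size, shift=(0.0, 0.0)):
    """Return (C, size, size) sinusoidal features; freqs is (C, 2), phases is (C,)."""
    # Pixel centers in the range [-0.5, 0.5).
    coords = (np.arange(size) + 0.5) / size - 0.5
    yy, xx = np.meshgrid(coords, coords, indexing="ij")
    grid = np.stack([xx - shift[0], yy - shift[1]], axis=-1)   # (H, W, 2)
    arg = 2 * np.pi * (grid @ freqs.T) + phases                # (H, W, C)
    return np.sin(arg).transpose(2, 0, 1)

rng = np.random.default_rng(0)
freqs = rng.uniform(-2.0, 2.0, size=(4, 2))
phases = rng.uniform(0.0, 1.0, size=4)

t = np.array([0.1, -0.2])
# Translating the sampling grid by t ...
shifted = fourier_features(freqs, phases, 8, shift=t)
# ... is the same as adjusting each channel's phase by -2*pi*(f . t).
rephased = fourier_features(freqs, phases - 2 * np.pi * (freqs @ t), 8)
```

Unlike a learned constant tensor, this input is defined at every continuous position, so sub-pixel translations are exact.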

4. styleGAN functionality

A GAN has a smooth, continuous latent space, without the gaps found in a VAE (Variational Auto Encoder). So when you take two points f1 and f2 in the latent space, you get two different faces, and you can create a transition, or interpolation, between the two faces by following a linear path between the two points.
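The linear path between f1 and f2 can be sketched as below. The function name `interpolate_latents` and the 512-dimensional latents are assumptions; each row of the result would be fed to the generator to render one frame of the transition.

```python
import numpy as np

def interpolate_latents(f1, f2, steps=5):
    """Return `steps` latent vectors evenly spaced on the line from f1 to f2."""
    ts = np.linspace(0.0, 1.0, steps)
    return np.stack([(1 - t) * f1 + t * f2 for t in ts])

rng = np.random.default_rng(0)
f1 = rng.normal(size=512)
f2 = rng.normal(size=512)

path = interpolate_latents(f1, f2, steps=5)
print(path.shape)
```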

边栏推荐

- deeplab v3 代码结构图

- 303. region and retrieval - array immutable

- Eureka

- 898. 子数组按位或操作

- 900. RLE 迭代器

- junit单元测试报错org.junit.runners.model.InvalidTestClassError: Invalid test class ‘xxx‘ .No runnable meth

- 初始化层实现

- RFID数据安全性实验:C#可视化实现奇偶校验、CRC冗余校验、海明码校验

- 318. maximum word length product

- C # how to obtain DPI and real resolution (can solve the problem that has been 96)

猜你喜欢

RFID数据安全性实验:C#可视化实现奇偶校验、CRC冗余校验、海明码校验

Swagger3 integrates oauth2 authentication token

【日常训练】513. 找树左下角的值

【项目实训】多段线扩充为平行线

Intentional shared lock, intentional exclusive lock and deadlock of MySQL

【BULL中文文档】用于在 NodeJS 中处理分布式作业和消息的队列包

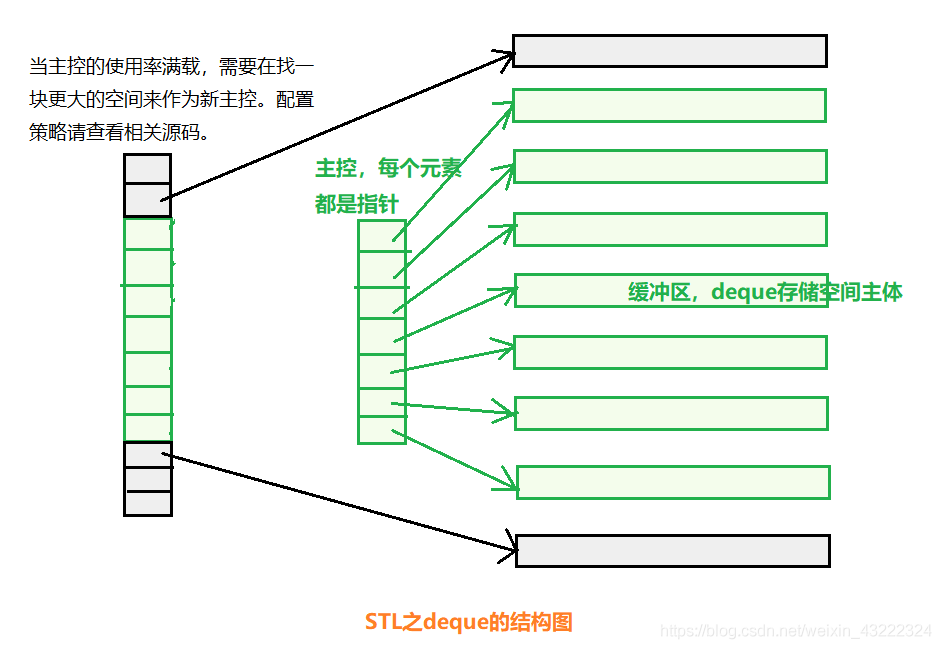

【STL】顺序容器之deque用法总结

深度学习系列47:超分模型Real-ESRGAN

Idea installing the cloudtoolkit plug-in

Quartz调度框架的学习使用

随机推荐

启发式的搜索策略

406 double pointer (27. remove elements, 977. square of ordered array, 15. sum of three numbers, 18. sum of four numbers)

Don't look for [12 super easy-to-use Google plug-ins are here] (are you sure you want to take a look?)

MySQL mvcc multi version concurrency control

[STL] summary of pair usage

QT designer cannot modify the window size, and cannot change the size by dragging the window with the mouse

Summarized benefits

900. RLE iterator

309. the best time to buy and sell stocks includes the freezing period

306. 累加数

【项目实训】线形箭头的变化

899. ordered queue

303. region and retrieval - array immutable

直播回顾 | 传统应用进行容器化改造,如何既快又稳?

901. 股票价格跨度

System permission program cannot access SD card

【***数组***】

MySQL的意向共享锁、意向排它锁和死锁

Open source oauth2 framework for SSO single sign on

junit单元测试报错org.junit.runners.model.InvalidTestClassError: Invalid test class ‘xxx‘ .No runnable meth