
Intel OpenVINO toolkit advanced course: lab operation record and learning summary

2022-06-23 21:48:00 【dnbug Blog】


Table of contents

- Lesson 1 hands-on lab: How to make full use of the OpenVINO toolkit? - Online test

- Lesson 2 hands-on lab: How to build a heterogeneous system - Online test

- Lesson 3 hands-on lab: Video processing in AI applications - Online test

- Lesson 4 hands-on lab: How to compare AI inference performance - Online test

- Lesson 6 hands-on lab: Audio processing in AI applications - Online test

- Lesson 7 hands-on lab: How to use the advanced features included in DL-streamer? - Online test

- Lesson 8 hands-on lab: Integrating audio and video processing in AI applications - Online test

Lesson 1 hands-on lab: How to make full use of the OpenVINO toolkit? - Online test

1. Human pose recognition example

Set the lab paths

Set the OpenVINO path:

export OV=/opt/intel/openvino_2021/

Set the path of the current lab:

export WD=~/OV-300/01/3D_Human_pose/

Note: The lab folder is named OV-300 and is located in the home directory. For how to upload and download files in this environment, see the help manual in the upper-right corner.

Run the OpenVINO initialization script

source $OV/bin/setupvars.sh

When you see "[setupvars.sh] OpenVINO environment initialized", the OpenVINO environment has been initialized successfully.

Install the OpenVINO script dependencies

Go to the script directory:

cd $OV/deployment_tools/model_optimizer/install_prerequisites/

Install the dependencies that OpenVINO needs:

sudo ./install_prerequisites.sh

PS: This step simulates what is done when using OpenVINO on a local development machine, so you need to perform it before using OpenVINO locally later.

Install the dependencies of the model downloader

Enter the model downloader folder:

cd $OV/deployment_tools/tools/model_downloader/

Install the dependencies of the model downloader:

python3 -mpip install --user -r ./requirements.in

Install the dependencies for downloading and converting PyTorch models:

sudo python3 -mpip install --user -r ./requirements-pytorch.in

Install the dependencies for downloading and converting Caffe2 models:

sudo python3 -mpip install --user -r ./requirements-caffe2.in

PS: This step also simulates what is done when using OpenVINO on a local development machine, so you need to perform it before using OpenVINO locally later.

Download the human pose recognition model with the model downloader

Enter the lab directory:

cd $WD

View the list of models required by human_pose_estimation_3d_demo:

cat /opt/intel/openvino_2021//deployment_tools/inference_engine/demos/human_pose_estimation_3d_demo/python/models.lst

Download the models with the model downloader:

python3 $OV/deployment_tools/tools/model_downloader/downloader.py --list $OV/deployment_tools/inference_engine/demos/human_pose_estimation_3d_demo/python/models.lst -o $WD

Convert the model to IR format with the model converter

OpenVINO supports converting models built with mainstream frameworks, such as TensorFlow, PyTorch (via ONNX), and Caffe, into IR format:

python3 $OV/deployment_tools/tools/model_downloader/converter.py --list $OV/deployment_tools/inference_engine/demos/human_pose_estimation_3d_demo/python/models.lst

PS: Currently, the OpenVINO inference engine can only run inference on converted IR files; it cannot directly run .pb, .caffemodel, or .pt files.

Build the OpenVINO Python API

You only need to run the build:

source $OV/inference_engine/demos/build_demos.sh -DENABLE_PYTHON=ON

If you need to use the OpenVINO Python API, add the path of the compiled libraries to PYTHONPATH (otherwise the libraries will not be found):

export PYTHONPATH="$PYTHONPATH:/home/dc2-user/omz_demos_build/intel64/Release/lib/"

Play the video to be recognized

Due to web player restrictions, type the following command manually to play the video:

show 3d_dancing.mp4

PS: Be sure to type the command on the keyboard, letter by letter.

Run the human pose recognition demo

Run the human pose recognition demo:

python3 $OV/inference_engine/demos/human_pose_estimation_3d_demo/python/human_pose_estimation_3d_demo.py -m $WD/public/human-pose-estimation-3d-0001/FP16/human-pose-estimation-3d-0001.xml -i 3d_dancing.mp4 --no_show -o output.avi

Wait patiently for the program to finish. If "Inference Completed!!" appears on the screen, inference is complete; enter "ls" to list all files in the current folder.

Convert and play the recognition result video

Due to platform constraints, first convert the output video to MP4 format with the following command:

ffmpeg -i output.avi output.mp4

Type the following command manually to play the inference result video:

show output.mp4

2. Image colorization example

Set the lab paths

export OV=/opt/intel/openvino_2021/

export WD=~/OV-300/01/Colorization/

Initialize OpenVINO

source $OV/bin/setupvars.sh

Start the lab

Enter the working directory:

cd $WD

View the models required by this demo:

cat $OV/deployment_tools/inference_engine/demos/colorization_demo/python/models.lst

Because the model for this lab is large, it has been downloaded in advance; proceed to the next step.

View the original video

Type all show commands manually:

show butterfly.mp4

Run the colorization demo

python3 $OV/inference_engine/demos/colorization_demo/python/colorization_demo.py -m $WD/public/colorization-siggraph/colorization-siggraph.onnx -i butterfly.mp4 --no_show -o output.avi

PS: Attentive readers will notice that this lab uses a model in ONNX format directly, which shows that the inference engine supports ONNX inference directly (you can of course also convert the model to IR). Wait patiently for the program to finish; you will see "Inference Completed". The output AVI is saved in the current folder; use the lowercase "ll" command to view the current folder.

View the output video of the colorization lab

First use ffmpeg to convert the .avi file to .mp4 format:

ffmpeg -i output.avi output.mp4

Type the command to play the video manually:

show output.mp4

PS: If the show command cannot display the video right after the conversion completes, try again after 30 s.

3. Audio detection example

Initialize the environment

# Set the working directory

export OV=/opt/intel/openvino_2021/

export WD=~/OV-300/01/Audio-Detection/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

Enter the audio detection directory

# Go to the audio detection sample bundled with OpenVINO:

cd $OV/data_processing/dl_streamer/samples/gst_launch/audio_detect

# View the label file used for detection

vi ./model_proc/aclnet.json

# Play the audio file that will be analyzed later

show how_are_you_doing.mp3

Run audio detection

# Run the sample

bash audio_event_detection.sh

Analyze the audio detection results

# The raw output is hard to read; run the following command instead

bash audio_event_detection.sh | grep "label\":" | sed 's/.*label"//' | sed 's/"label_id.*start_timestamp"//' | sed 's/}].*//'

# You can now see that speech was detected at timestamp 600000000. The content of the speech is unknown, because this is a detection example, not a recognition example: "Speech",600000000
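The same filtering can be sketched in Python. This is only a minimal sketch: it assumes that each result line contains a `"label":"..."` field followed later by `"start_timestamp":<integer>`, which is what the sed pipeline above implies. The helper name `extract_events` and the sample line are hypothetical, and reading the timestamp as nanoseconds is an assumption based on the time unit GStreamer normally uses.

```python
import re

# Matches a label and the start_timestamp that follows it on the same line.
# The field layout is an assumption inferred from the sed pipeline above.
EVENT_RE = re.compile(r'"label":"([^"]+)".*?"start_timestamp":(\d+)')


def extract_events(lines):
    """Return (label, start_seconds) pairs found in the sample's output lines."""
    events = []
    for line in lines:
        match = EVENT_RE.search(line)
        if match:
            label, timestamp = match.group(1), int(match.group(2))
            # Assumed to be nanoseconds, so 600000000 becomes 0.6 s.
            events.append((label, timestamp / 1e9))
    return events


# Hypothetical line shaped like the output described above.
sample = ['{"events":[{"detection":{"label":"Speech","label_id":53},"start_timestamp":600000000}]}']
print(extract_events(sample))
```

To try it on real output, the lines printed by audio_event_detection.sh could be fed to `extract_events` instead of the sample list.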

4. Formula recognition

Initialize the environment

# Set the paths

export OV=/opt/intel/openvino_2021/

export WD=~/OV-300/01/Formula_recognition/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

View the recognizable characters

cd $WD

# Handwritten characters:

vi hand.json

# Printed characters:

vi latex.json

View the formulas to be recognized

# Enter the materials directory

cd $WD/…/Materials/

# View the printed formula

show Latex-formula.png

# View the handwritten formula

show Hand-formula.png

Run formula recognition

cd $WD

# Recognize the printed formula

python3 $OV/inference_engine/demos/formula_recognition_demo/python/formula_recognition_demo.py -m_encoder $WD/intel/formula-recognition-medium-scan-0001/formula-recognition-medium-scan-0001-im2latex-encoder/FP16/formula-recognition-medium-scan-0001-im2latex-encoder.xml -m_decoder $WD/intel/formula-recognition-medium-scan-0001/formula-recognition-medium-scan-0001-im2latex-decoder/FP16/formula-recognition-medium-scan-0001-im2latex-decoder.xml --vocab_path latex.json -i $WD/…/Materials/Latex-formula.png -no_show

Recognize handwritten formulas

# Recognize handwritten formulas

python3 $OV/inference_engine/demos/formula_recognition_demo/python/formula_recognition_demo.py -m_encoder $WD/intel/formula-recognition-polynomials-handwritten-0001/formula-recognition-polynomials-handwritten-0001-encoder/FP16/formula-recognition-polynomials-handwritten-0001-encoder.xml -m_decoder $WD/intel/formula-recognition-polynomials-handwritten-0001/formula-recognition-polynomials-handwritten-0001-decoder/FP16/formula-recognition-polynomials-handwritten-0001-decoder.xml --vocab_path hand.json -i $WD/…/Materials/Hand-formula.png -no_show

Challenge task

# You can upload your own handwritten formula, saved in PNG format, and run recognition on it

# The file upload method is described in the help manual in the upper-right corner of the web page

# To skip this task, just click OK

5. Scene depth recognition

Initialize the environment

# Set the paths

export OV=/opt/intel/openvino_2021/

export WD=~/OV-300/01/MonoDepth_Python/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

Convert the original model file to an IR file

Enter the working directory

cd $WD

# The downloaded model is in TensorFlow format; use the converter to convert it to IR format:

python3 $OV/deployment_tools/tools/model_downloader/converter.py --list $OV/deployment_tools/inference_engine/demos/monodepth_demo/python/models.lst

View the original image to be recognized

# View the original file

show tree.jpeg

Run the depth recognition example

# Enter the working directory

cd $WD

Run the example. It automatically separates the regions of the picture that lie at different depths:

python3 $OV/inference_engine/demos/monodepth_demo/python/monodepth_demo.py -m $WD/public/midasnet/FP32/midasnet.xml -i tree.jpeg

# View the results

show disp.png

6. Object detection example

Initialize the environment

# Define the OpenVINO directory

export OV=/opt/intel/openvino_2021/

# Define the working directory

export WD=~/OV-300/01/Object_Detection/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

# Enter the working directory

cd $WD

Choose the model that suits you

# Many models support object detection; you can choose a suitable model among the different network topologies:

vi $OV/inference_engine/demos/object_detection_demo/python/models.lst

Note: This course does not go into the differences between the SSD, Yolo, centernet, faceboxes, and Retina network topologies; interested readers can look them up online. In OpenVINO, each demo folder under deployment_tools/inference_engine/demos/ contains a models.lst file that lists the models the demo supports and that can be downloaded directly with the downloader.

Convert the model to IR format

# The models for this lab have been prepared in advance: pedestrian-and-vehicle-detector-adas and yolo-v3-tf

# Use the converter for the IR conversion. Because pedestrian-and-vehicle-detector-adas is an Intel pre-trained model, it is already in IR format; only yolo-v3 needs to be converted:

python3 $OV/deployment_tools/tools/model_downloader/converter.py --name yolo-v3-tf

View the video to be detected

cd $WD/…/Materials/

# Play the video:

show Road.mp4

Run the object detection example with the SSD model

cd $WD

# Run the OMZ (SSD) model

python3 $OV/inference_engine/demos/object_detection_demo/python/object_detection_demo.py -m $WD/intel/pedestrian-and-vehicle-detector-adas-0001/FP16/pedestrian-and-vehicle-detector-adas-0001.xml --architecture_type ssd -i $WD/…/Materials/Road.mp4 --no_show -o $WD/output_ssd.avi

# Convert to MP4 format for playback

ffmpeg -i output_ssd.avi output_ssd.mp4

show output_ssd.mp4

Run the object detection example with Yolo-V3

# Run the Yolo V3 model

python3 $OV/inference_engine/demos/object_detection_demo/python/object_detection_demo.py -m $WD/public/yolo-v3-tf/FP16/yolo-v3-tf.xml -i $WD/…/Materials/Road.mp4 --architecture_type yolo --no_show -o $WD/output_yolo.avi

# Convert to MP4 format for playback

ffmpeg -i output_yolo.avi output_yolo.mp4

show output_yolo.mp4

Compare the detection performance of the two models when run with the same code.

7. Natural language processing (NLP) example: automatic question answering

Initialize the environment

# Define the working directory

export OV=/opt/intel/openvino_2021/

export WD=~/OV-300/01/NLP-Bert/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

# Enter the directory

cd $WD

View the list of supported models

# Available models:

cat $OV/deployment_tools/inference_engine/demos/bert_question_answering_demo/python/models.lst

Note: In OpenVINO, each demo folder under deployment_tools/inference_engine/demos/ contains a models.lst file that lists the models the demo supports and that can be downloaded directly with the downloader. All of the models have already been downloaded in advance in IR format.

Open the web page to be analyzed

# Use a browser to open an English website, for example the Intel official website: https://www.intel.com/content/www/us/en/homepage.html

Run the NLP example

python3 $OV/inference_engine/demos/bert_question_answering_demo/python/bert_question_answering_demo.py -m $WD/intel/bert-small-uncased-whole-word-masking-squad-0001/FP16/bert-small-uncased-whole-word-masking-squad-0001.xml -v $OV/deployment_tools/open_model_zoo/models/intel/bert-small-uncased-whole-word-masking-squad-0001/vocab.txt --input=https://www.intel.com/content/www/us/en/homepage.html --input_names=input_ids,attention_mask,token_type_ids --output_names=output_s,output_e

# At the prompt "Type question (empty string to exit):", enter core. You will see what the model found about "core", for example: Intel Core processors provide a range of performance from entry-level to the highest level. You can of course enter other questions. Compare the answers with the corresponding text on the website.

Note: --input=https://www.intel.com/content/www/us/en/homepage.html is the English website we want to query.

Challenge task

# Building on the previous step, try using different URLs as the --input value and ask questions with other keywords. Then think about how you could improve the accuracy of this example.

# Building on the previous step, try using different models as the -m/-v values (the models are located in the $WD/intel/ directory) and ask questions with other keywords. Then think about how you could improve the accuracy of this example.

Note: This example only supports English websites, and the website must be reachable.

Lesson 2 hands-on lab: How to build a heterogeneous system - Online test

1. Upload the performance evaluation script to DevCloud

Initialize the lab paths

# Define the OpenVINO folder

export OV=/opt/intel/openvino_2021/

# Define the working directory

export WD=~/OV-300/02/LAB1/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

# Enter the lab directory

cd $WD

View current device information

A script bundled with OpenVINO can query the device information of the current environment:

python3 $OV/inference_engine/samples/python/hello_query_device/hello_query_device.py
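The kind of query this script performs can be sketched as follows. This is not the code of hello_query_device.py; it is a minimal sketch that assumes the OpenVINO 2021 Python API, where an `IECore` object exposes `available_devices` and `get_metric`. The helper name `describe_devices` and the `FakeCore` stand-in are hypothetical and exist only so the sketch runs without OpenVINO installed.

```python
def describe_devices(ie):
    """Map each device the Inference Engine reports to its full name."""
    return {
        device: ie.get_metric(device_name=device, metric_name="FULL_DEVICE_NAME")
        for device in ie.available_devices
    }


class FakeCore:
    """Stand-in for IECore so the sketch runs without OpenVINO installed."""

    available_devices = ["CPU", "GPU"]

    def get_metric(self, device_name, metric_name):
        # Hypothetical reply; a real core returns the hardware's actual name.
        return f"{device_name} ({metric_name})"


print(describe_devices(FakeCore()))
# With OpenVINO 2021 installed, a real core could be passed instead:
#   from openvino.inference_engine import IECore
#   print(describe_devices(IECore()))
```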

Introduction to DevCloud

Intel DevCloud provides free access to a variety of Intel hardware, including CPUs, GPUs, NCS2, and other smart devices, so that you can get instant hands-on experience with Intel software and run your edge, artificial intelligence, high-performance computing (HPC), and rendering workloads. With preinstalled Intel-optimized frameworks, tools (OpenVINO Toolkit, oneAPI), and libraries, it has everything you need to prototype and learn quickly.

This lab provides 4 available nodes:

idc004nc2: Intel - core i5-6500te, intel-hd-530, myriad-1-VPU

idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

idc008u2g: Intel - atom e3950, intel-hd-505, myriad-1-VPU

idc014: Intel - i7 8665ue, intel-uhd-620

Note: NCS2 is the second-generation Neural Compute Stick, an edge computing device launched by Intel. It is about the size of a USB flash drive, uses a USB 3.0 interface, and has a built-in Myriad X compute chip. Its power consumption is only 2 W, and its theoretical compute can reach 1 TOPS. OpenVINO can deploy deep learning models to it through the "MYRIAD" plugin.

Upload Benchmark_App.py to DevCloud and run it

Submit the code to be run to the idc004nc2 node:

python3 submit_job_to_DevCloud.pyc idc004nc2

PS: Because of network latency, the command may not respond; if so, repeat it a few times.

Wait patiently until the job state becomes "C", which means the script has finished. The uploaded script is userscript.py in the current directory; it runs an OpenVINO face recognition model on the NCS2 to obtain performance figures.

Compare NCS2 performance with different CPUs

Following the upload instructions above, upload the same code to the idc008u2g node.

The upload command is:

python3 submit_job_to_DevCloud.pyc "target node name"

PS: By default, this command uploads the userscript.py script in the current directory.

Compare the results: does NCS2 performance differ between the two CPUs (Atom and Core)?

The comparison results are as follows:

idc004nc2: Intel - core i5-6500te, intel-hd-530, myriad-1-VPU

Command: python3 submit_job_to_DevCloud.pyc idc004nc2

[Step 11/11] Dumping statistics report

Count: 260 iterations

Duration: 10297.15 ms

Latency: 158.08 ms

Throughput: 25.25 FPS

idc008u2g: Intel - atom e3950, intel-hd-505, myriad-1-VPU

Command: python3 submit_job_to_DevCloud.pyc idc008u2g

[Step 11/11] Dumping statistics report

Count: 260 iterations

Duration: 10294.75 ms

Latency: 157.99 ms

Throughput: 25.26 FPS
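To compare the two reports, it helps to parse them and cross-check the figures. The sketch below is only an illustration: the helper name `parse_report` is hypothetical, and recomputing throughput as iterations divided by duration is an assumption about how the report derives its FPS figure, although it agrees with both runs above.

```python
import re


def parse_report(text):
    """Extract count, duration (ms), latency (ms) and throughput (FPS) from a report."""
    patterns = {
        "count": r"Count:\s*([\d.]+)",
        "duration_ms": r"Duration:\s*([\d.]+)",
        "latency_ms": r"Latency:\s*([\d.]+)",
        "throughput_fps": r"Throughput:\s*([\d.]+)",
    }
    result = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, text)
        if match:
            result[key] = float(match.group(1))
    return result


# Figures copied from the two runs recorded above.
core_report = """Count: 260 iterations
Duration: 10297.15 ms
Latency: 158.08 ms
Throughput: 25.25 FPS"""
atom_report = """Count: 260 iterations
Duration: 10294.75 ms
Latency: 157.99 ms
Throughput: 25.26 FPS"""

for name, report in (("idc004nc2", core_report), ("idc008u2g", atom_report)):
    stats = parse_report(report)
    # Throughput recomputed as iterations per second of wall-clock duration.
    recomputed = stats["count"] / (stats["duration_ms"] / 1000)
    print(name, stats["throughput_fps"], round(recomputed, 2))
```

In these two single runs the Core and Atom hosts differ by only 0.01 FPS, which suggests that the host CPU had little influence on NCS2 throughput for this workload.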

Challenge task 1: Deploy the inference task on different inference devices of a node

Modify userscript.py:

vi userscript.py

On line 43: target_device="MYRIAD"

Note: Use the ":wq" command to save the changes and exit.

Here you can choose the device to run on: "CPU", "GPU", or "MYRIAD"

CPU: the Intel CPU

GPU: the Intel integrated graphics

MYRIAD: the NCS2 mentioned above

Feel free to experiment here: try running this performance test script on different devices or DevCloud nodes.

The results of running this performance test on different devices are as follows:

Device: GPU

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

[Step 11/11] Dumping statistics report

Count: 724 iterations

Duration: 10031.90 ms

Latency: 27.45 ms

Throughput: 72.17 FPS

Device: CPU

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

[Step 11/11] Dumping statistics report

Count: 920 iterations

Duration: 10015.39 ms

Latency: 10.82 ms

Throughput: 91.86 FPS

target_device="MYRIAD"

Device: MYRIAD

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

Monday, 2022-02-21 06:17:38 CST: with device MYRIAD, the script failed with RuntimeError: Can not init Myriad device: NC_ERROR. According to the node list above, idc007xv5 has no Myriad VPU, which is probably why initialization failed.

Monday, 2022-02-21 03:56:50 CST: the web shell appeared to be stuck; I could not save and exit (:wq), so there was no result. Monday, 2022-02-21 05:55:18 CST: solved. It was not stuck; the command simply had no effect in the web shell. Using Ctrl+[ to leave INSERT mode works.

Challenge task 2: Print the operating temperature of the NCS2

Based on the temperature-related snippet in hello_query_device.py, modify userscript.py so that it displays the current operating temperature of the NCS2.

Add the appropriate code after line 136 of userscript.py to display the current NCS2 temperature.

Tip: With target_device set to "MYRIAD", use self.ie.get_metric(metric_name="DEVICE_THERMAL", device_name="MYRIAD") to get the temperature.
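The tip can be wrapped in a small helper, sketched below. This is not the reference solution from the Solution folder. The helper name `read_myriad_temperature` and the `FakeCore` stand-in are hypothetical; the stand-in only exists so the sketch runs without an NCS2 attached, and the value it returns is made up.

```python
def read_myriad_temperature(ie, device_name="MYRIAD"):
    """Return the device temperature reported by the DEVICE_THERMAL metric."""
    return ie.get_metric(metric_name="DEVICE_THERMAL", device_name=device_name)


class FakeCore:
    """Stand-in for IECore so the sketch runs without an NCS2 attached."""

    def get_metric(self, metric_name, device_name):
        # Hypothetical reading; a real device returns its own value.
        return 42.0 if metric_name == "DEVICE_THERMAL" else None


print("NCS2 temperature:", read_myriad_temperature(FakeCore()))
```

Inside userscript.py, the same call would presumably be made on the script's own `self.ie` object after the network has been loaded to MYRIAD, as the tip describes.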

The answer, userscript…py, is in the Solution folder in the parent directory; you can copy it to the current folder to use it.

Monday, 2022-02-21 04:03:53 CST: this one is difficult; skipping it for now.

2. Using multi-hardware collaborative inference

Initialize the lab directory

# Define the OV directory

export OV=/opt/intel/openvino_2021/

# Define the working directory

export WD=~/OV-300/02/LAB2/

# Initialize OpenVINO

source $OV/bin/setupvars.sh

# Enter the working directory

cd $WD

Performance test of different models

Three models have been prepared for you:

face-detection-adas-0001.xml

head-pose-estimation-adas-0001.xml

text-spotting-0003-recognizer-encoder.xml

Edit userscript.py:

On line 44, path_to_model="/app/face-detection-adas-0001.xml", replace face-detection-adas-0001.xml with another model file name to test a different model.

The performance test results of the different models are as follows:

Device: CPU

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Model: head-pose-estimation-adas-0001.xml

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

[Step 11/11] Dumping statistics report

Count: 720 iterations

Duration: 10055.01 ms

Latency: 27.66 ms

Throughput: 71.61 FPS

Device: CPU

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Model: face-detection-adas-0001.xml

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

[Step 11/11] Dumping statistics report

Count: 923 iterations

Duration: 10018.43 ms

Latency: 10.79 ms

Throughput: 92.13 FPS

Monday, 2022-02-21 05:27:26 CST: when editing userscript.py with vi, I found that the file had less content than expected (perhaps something was deleted by mistake in an earlier lab step?). The line 44 setting path_to_model="/app/face-detection-adas-0001.xml" was missing. What now…

Monday, 2022-02-21 05:50:11 CST: the system seems to have restored the default file???

Use multi-hardware collaborative inference

OpenVINO provides the "MULTI" plugin for multi-hardware collaborative inference. You only need to change the value of target_device; no other changes to the original code are needed to enable collaborative inference.

For example, target_device="MULTI:CPU,GPU" runs collaborative inference on the CPU and GPU, with the CPU as first priority and the GPU as second priority.

Note: All GPUs in this lab are Intel integrated graphics. Before running the lab, make sure that the node you run on has a GPU (some Xeon processor models do not include one). The same applies to MYRIAD: make sure the lab node has the hardware before running!
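The device string and the availability check from the note can be combined in a small helper. This is a minimal sketch; the function name `build_multi_device` and the device list passed to it are hypothetical. On a real node, the names reported by the Inference Engine may carry suffixes (for example an index after the device type), so an exact-match check like this one is an assumption.

```python
def build_multi_device(priorities, available):
    """Build a MULTI device string from devices listed in priority order.

    Raises ValueError if a requested device is not present on the node.
    """
    missing = [device for device in priorities if device not in available]
    if missing:
        raise ValueError(f"devices not available on this node: {missing}")
    return "MULTI:" + ",".join(priorities)


# Hypothetical device list for a node with a CPU and an integrated GPU.
target_device = build_multi_device(["CPU", "GPU"], available=["CPU", "GPU"])
print(target_device)
```

Failing early with a clear message is a design choice here: it surfaces a missing GPU or MYRIAD device before the job is submitted rather than as a runtime error on the node.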

The test results with multi-hardware collaborative inference are as follows:

target_device="MULTI:CPU,GPU"

Device: MULTI:CPU,GPU

DevCloud node: idc007xv5: Intel - xeon e3-1268l-v5, intel-hd-p530

Command: python3 submit_job_to_DevCloud.pyc idc007xv5

[Step 11/11] Dumping statistics report

Count: 1065 iterations

Duration: 10037.20 ms

Throughput: 106.11 FPS
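A quick calculation puts this figure in context. The sketch below reuses the CPU and GPU throughput recorded on idc007xv5 in Challenge task 1 of the first lab; it assumes those runs and the MULTI run used the same model and node, which the log does not state explicitly.

```python
# Throughput figures (FPS) copied from the runs recorded above.
cpu_fps = 91.86
gpu_fps = 72.17
multi_fps = 106.11

# Gain of MULTI:CPU,GPU over the best single device.
gain_over_cpu = multi_fps / cpu_fps - 1
# Share of the naive upper bound (sum of both devices) that MULTI reached.
share_of_sum = multi_fps / (cpu_fps + gpu_fps)

print(f"gain over CPU alone: {gain_over_cpu:.1%}")
print(f"share of CPU+GPU sum: {share_of_sum:.1%}")
```

Under that assumption, MULTI is faster than either device alone but well short of the sum of the two, which is plausible because the integrated GPU shares memory and power budget with the CPU.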

Performance comparison lab

On the idc004nc2 node, complete the following table and analyze the performance data:

Monday, 2022-02-21 06:33:15 CST: time for bed; will continue after waking up.

Intel DevCloud guide

Lesson 3 hands-on lab: Video processing in AI applications - Online test

Lesson 4 hands-on lab: How to compare AI inference performance - Online test

Lesson 6 hands-on lab: Audio processing in AI applications - Online test

Lesson 7 hands-on lab: How to use the advanced features included in DL-streamer? - Online test

Lesson 8 hands-on lab: Integrating audio and video processing in AI applications - Online test

BEGIN:2022-02-20 23:53:49

END:

边栏推荐

- 数据可视化之:没有西瓜的夏天不叫夏天

- Start optimization - directed acyclic graph

- How to use smart cloud disk service differences between local disk service and cloud disk service

- 蓝牙芯片|瑞萨和TI推出新蓝牙芯片,试试伦茨科技ST17H65蓝牙BLE5.2芯片

- How to calculate individual income tax? You know what?

- SAP retail wrmo replenishment monitoring

- HR SaaS is finally on the rise

- Beitong G3 game console unpacking experience. It turns out that mobile game experts have achieved this

- Improve efficiency, take you to batch generate 100 ID photos with QR code

- What causes the applet SSL certificate to expire? How to solve the problem when the applet SSL certificate expires?

猜你喜欢

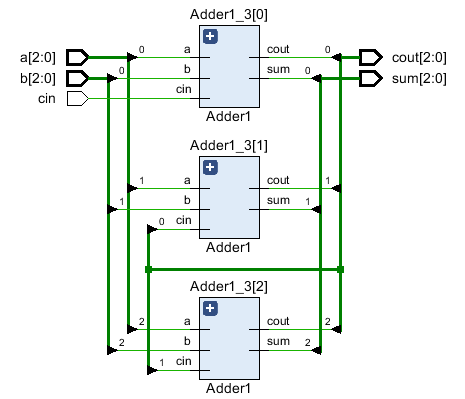

HDLBits-> Circuits-> Arithmetic Circuitd-> 3-bit binary adder

蓝牙芯片|瑞萨和TI推出新蓝牙芯片,试试伦茨科技ST17H65蓝牙BLE5.2芯片

Outlook开机自启+关闭时最小化

How to use the serial port assistant in STC ISP?

Lightweight, dynamic and smooth listening, hero earphone hands-on experience, can really create

Beitong G3 game console unpacking experience. It turns out that mobile game experts have achieved this

大一女生废话编程爆火!懂不懂编程的看完都拴Q了

Freshman girls' nonsense programming is popular! Those who understand programming are tied with Q after reading

Facing the problem of lock waiting, how to realize the second level positioning and analysis of data warehouse

Minimisé lorsque Outlook est allumé + éteint

随机推荐

[同源策略 - 跨域问题]

How to view the hard disk of ECS? How about the speed and stability of the server

Wechat smart operation 3.0+ Alipay digital transformation 3.0

How does the video platform deployment give corresponding user permissions to the software package files?

Freshman girls' nonsense programming is popular! Those who understand programming are tied with Q after reading

Using barcode software to make certificates

Is it safe to open an account for flush stock?

Improve efficiency, take you to batch generate 100 ID photos with QR code

The transaction code mp83 at the initial level of SAP retail displays a prediction parameter file

Framework not well mastered? Byte technology Daniel refined analysis notes take you to learn systematically

What is the use of PMP certification?

Cloud native practice of meituan cluster scheduling system

Infrastructure splitting of service splitting

Data visualization: summer without watermelon is not summer

High quality "climbing hand" of course, you have to climb a "high quality" wallpaper

Markdown syntax summary

Real time crawler launches a number of special new products

Outlook开机自启+关闭时最小化

2021-12-25: given a string s consisting only of 0 and 1, assume that the subscript is from

大一女生废话编程爆火!懂不懂编程的看完都拴Q了