Machine learning compilation course notes, lesson 1: overview of machine learning compilation

2022-06-24 22:44:00 【herosunly】

1. Course introduction

This course is taught by Chen Tianqi, the author of XGBoost. It officially started on June 18, 2022, with one class per week. For details, see the Chinese or English official website. Questions can be posted in the Chinese or English discussion area.

The course catalogue is shown below:

- Overview of machine learning compilation

- Tensor operator functions

- Integration of tensor functions and whole-network models

- Integrating custom computation libraries

- Automated program optimization

- Integration with machine learning frameworks

- Custom hardware backends

- Automatic tensorization

- Graph optimization: operator fusion and memory optimization

- Deploying models to service environments

- Deploying models to edge devices

Objectively speaking, this course is not suitable for beginners in machine learning or deep learning. Still, it is highly recommended to take the first lesson, Overview of machine learning compilation, and then decide whether to study further.

The slides for this lesson are at https://mlc.ai/summer22-zh/slides/1-Introduction.pdf; the notes are at https://mlc.ai/zh/chapter_introduction/.

2. Outline of this lesson

- What is machine learning compilation?

- What are the goals of machine learning compilation?

- Why study machine learning compilation?

- What are the core elements of machine learning compilation?

3. Definition of machine learning compilation

Machine learning compilation (MLC) refers to the process of transforming and optimizing a machine learning algorithm from its development form into its deployment form. Put simply, it is the process of putting a trained machine learning model into production by deploying it in a specific system environment.

The development form refers to the set of elements we use when developing machine learning models. A typical development form includes model descriptions written in general-purpose frameworks such as PyTorch, TensorFlow, or JAX (mainly for deep learning models), together with the associated weights.

The deployment form refers to the set of elements needed to execute a machine learning application. It usually includes the supporting code for each step of the machine learning model, routines that manage resources (for example, memory), and interfaces to the application development environment (for example, a Java API for Android applications).

Different AI applications have different deployment environments. For example, the recommendation algorithms that e-commerce platforms rely on are usually deployed on cloud platforms (servers); autonomous driving algorithms are usually deployed on dedicated computing devices in vehicles; and mobile apps, such as an input method with speech-to-text, are ultimately deployed on the computing hardware of the phone.

When deploying a machine learning model, we need to consider not only the hardware environment but also the software environment (such as the operating system and the machine learning platform).

4. The goal of machine learning compilation

Machine learning compilation has two direct goals: minimizing dependencies and leveraging hardware acceleration. Its ultimate goal is performance optimization (time complexity and space complexity).

Minimizing dependencies can be viewed as part of integration: only the libraries the application actually needs are kept (libraries unrelated to the application are removed), which reduces the size of the application and saves space.

Leveraging hardware acceleration means exploiting features of the hardware itself to speed up execution. This can be done by building deployment code that calls native acceleration libraries, or by generating code that uses native instructions (such as TensorCore).

5. Why study machine learning compilation?

- Build solutions for machine learning deployment .

- Gain a deeper understanding of existing machine learning frameworks.

6. The core elements of machine learning compilation

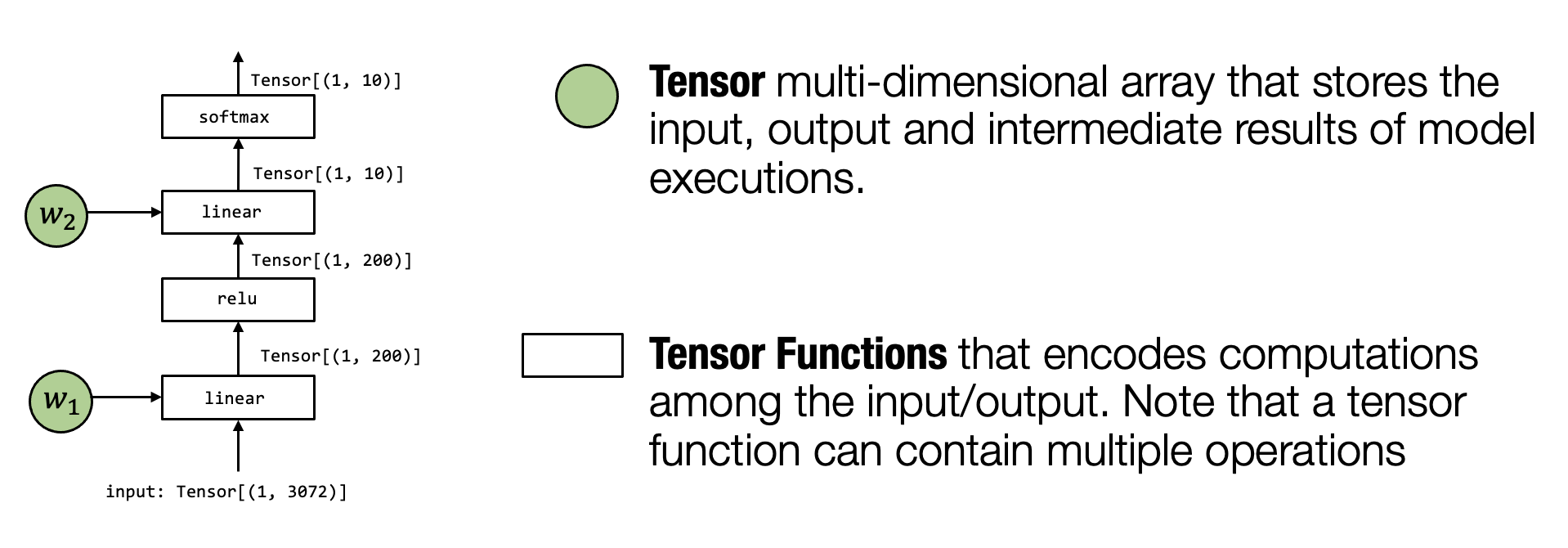

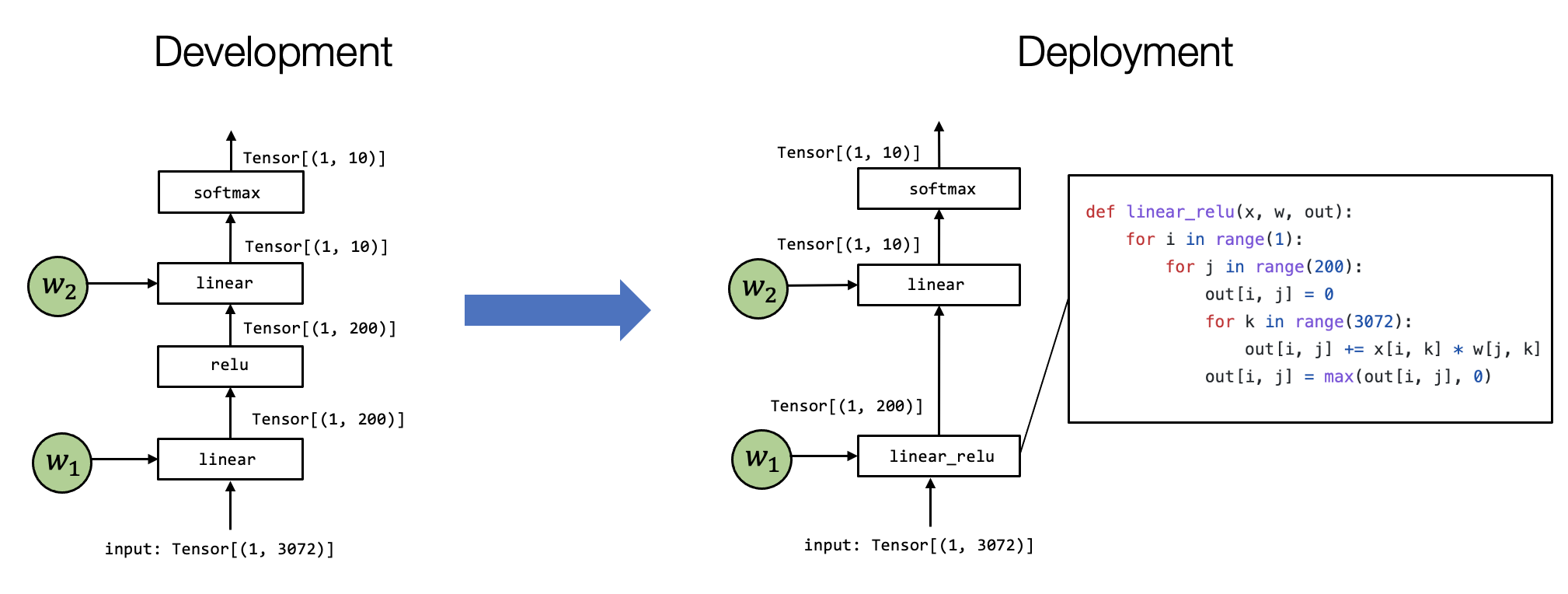

Tensors are the most important element in execution. A tensor is a multidimensional array that represents the inputs, outputs, and intermediate results of neural network model execution.

Tensor functions: the "knowledge" of a neural network is encoded in its weights and in the sequence of computations that take tensors as input and produce tensors as output. We call these computations tensor functions. Notably, a tensor function does not need to correspond to a single step of the neural network computation. Part of the computation, or the entire end-to-end computation, can also be regarded as a tensor function.

In the practical example shown in the figure, the first linear layer and the relu computation are folded into a single linear_relu function, which requires a dedicated implementation of linear_relu.
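To make the idea of folding concrete, here is a minimal sketch in plain Python, assuming tensors are represented as nested lists. The function names `linear`, `relu`, and `linear_relu` and the sample values are illustrative assumptions, not code from the course. It shows that two separate tensor functions and their composition are all tensor functions: each takes tensors in and returns a tensor.

```python
# A minimal sketch: tensors are nested lists of floats (an assumption for
# illustration; real frameworks use dedicated array types).

def linear(x, w, b):
    """Tensor function: y[i][j] = sum_k x[i][k] * w[j][k] + b[j]."""
    return [
        [sum(xi[k] * wj[k] for k in range(len(xi))) + bj for wj, bj in zip(w, b)]
        for xi in x
    ]

def relu(y):
    """Tensor function: element-wise max(value, 0)."""
    return [[max(v, 0.0) for v in row] for row in y]

def linear_relu(x, w, b):
    """The two steps folded into one tensor function."""
    return relu(linear(x, w, b))

# Hypothetical input, weights, and bias.
x = [[1.0, -2.0]]
w = [[1.0, 1.0], [2.0, 0.0]]
b = [0.5, 0.0]

print(linear(x, w, b))       # [[-0.5, 2.0]]
print(linear_relu(x, w, b))  # [[0.0, 2.0]]
```

Here `linear_relu` simply calls the two steps in sequence. A dedicated implementation would typically compute both in a single pass over the data, which is the kind of detail a compiler is free to choose.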

6.1. Remark: abstraction and implementation

The same target can be represented at different levels of granularity. Taking linear_relu as an example, it can be represented by the two boxes on the left of the figure, or by the loop on the right.

We use the term abstraction to denote the way we represent the same tensor function. Different abstractions may specify some details while leaving out other implementation details. For example, linear_relu can be implemented with different for loops.

Abstraction and implementation are probably the most important keywords in all computer systems. An abstraction specifies "what" to do, and an implementation provides "how" to do it. There is no strict boundary between the two. In our view, the for loop itself can be seen as an abstraction, since it can be implemented by a Python interpreter or compiled into native assembly code.

Machine learning compilation is, in effect, the process of transforming and assembling tensor functions under the same or different abstractions. We will study different kinds of abstractions for tensor functions and how they work together to address the challenges of machine learning deployment.
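As a rough illustration of "different for loops", the sketch below gives two hypothetical loop-level implementations of the same linear_relu abstraction in plain Python. The names `linear_relu_v1` and `linear_relu_v2`, the nested-list tensor representation, and the sample values are assumptions made for this example. The first version fuses the relu into the innermost accumulation; the second puts the reduction loop outermost and applies relu in a separate pass.

```python
def linear_relu_v1(x, w, b):
    """Loop order i, j, p with relu applied as soon as each output is ready."""
    n, k, m = len(x), len(x[0]), len(w)
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = b[j]
            for p in range(k):
                acc += x[i][p] * w[j][p]
            out[i][j] = max(acc, 0.0)
    return out

def linear_relu_v2(x, w, b):
    """Reduction loop p outermost, then relu in a second pass."""
    n, k, m = len(x), len(x[0]), len(w)
    out = [[b[j] for j in range(m)] for _ in range(n)]
    for p in range(k):
        for i in range(n):
            for j in range(m):
                out[i][j] += x[i][p] * w[j][p]
    for i in range(n):
        for j in range(m):
            out[i][j] = max(out[i][j], 0.0)
    return out

# Hypothetical input, weights, and bias.
x = [[1.0, -2.0], [3.0, 1.0]]
w = [[1.0, 1.0], [2.0, 0.0]]
b = [0.5, 0.0]

result_v1 = linear_relu_v1(x, w, b)
result_v2 = linear_relu_v2(x, w, b)
print(result_v1)               # [[0.0, 2.0], [4.5, 6.0]]
print(result_v1 == result_v2)  # True
```

Both functions satisfy the same "what" (the linear_relu abstraction) while differing in the "how" (loop order and where relu is applied), which is the distinction this section draws.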

Four types of abstractions will be covered in subsequent lessons:

- Computational graphs

- Tensor programs

- Libraries (runtimes)

- Hardware-specific instructions

7. Summary

Goals of machine learning compilation

- Integrate and minimize dependencies

- Leverage hardware acceleration

- General optimization

Why study machine learning compilation

- Build machine learning deployment solutions

- Gain insight into existing machine learning frameworks

- Build software stacks for emerging hardware

Key elements of machine learning compilation

- Tensors and tensor functions

- Abstraction and implementation are tools worth thinking about
