GraphMAE: A Quick Reading of the Paper

2022-06-24 07:59:00 【WW935707936】

Goal:

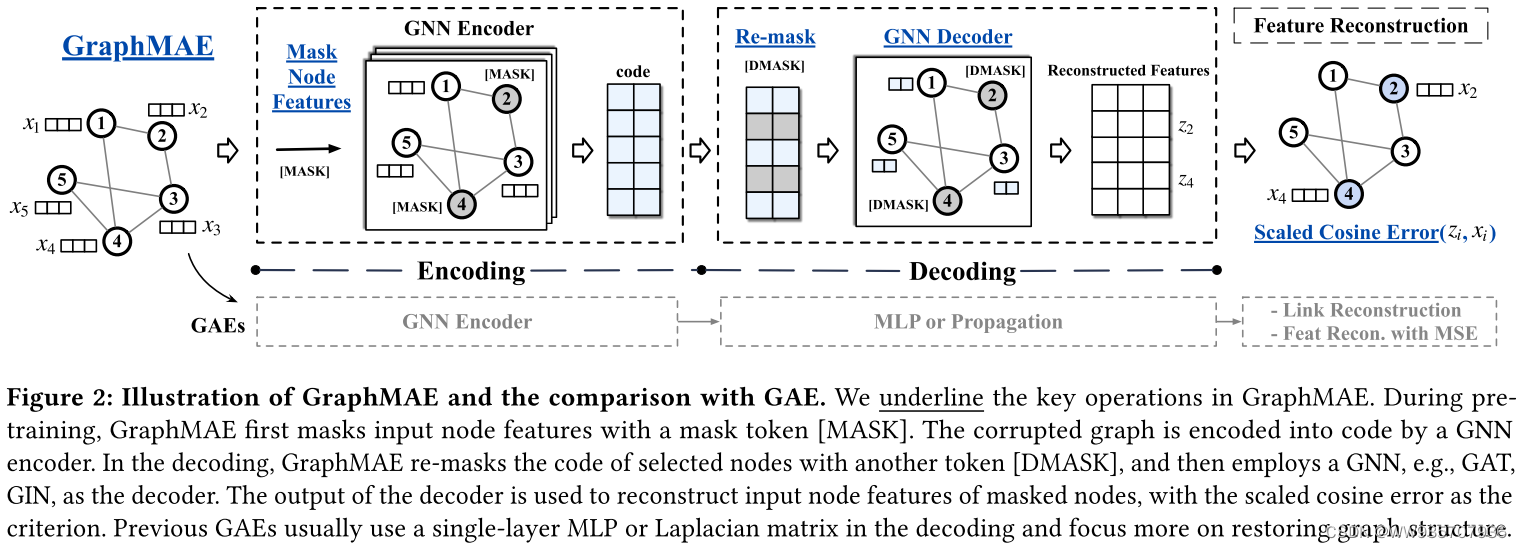

That is, the authors argue that generative SSL is already mature in fields such as NLP (e.g., BERT and GPT). On graphs, however, contrastive SSL rather than generative SSL dominates, because generative SSL still struggles with (1) the reconstruction objective, (2) training robustness, and (3) the error metric. The authors therefore propose GraphMAE, a masked graph autoencoder.
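A masked graph autoencoder hides part of its input and learns to recover it. The following is a minimal sketch of that corruption step only, not the authors' code. It assumes node features are stored as plain Python lists, samples the nodes to mask uniformly without replacement, and substitutes a fixed vector for the [MASK] token (in GraphMAE that token is a learnable parameter). The function name `mask_node_features` and its parameters are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Vector = List[float]


def mask_node_features(
    features: Sequence[Vector],
    mask_rate: float,
    mask_token: Vector,
    rng: Optional[random.Random] = None,
) -> Tuple[List[Vector], List[int]]:
    """Replace the features of a random subset of nodes with a mask token.

    Returns a new (corrupted) feature matrix and the sorted indices of the
    masked nodes. The input matrix is not modified.
    """
    if not 0.0 <= mask_rate <= 1.0:
        raise ValueError("mask_rate must be in [0, 1]")
    if features and len(mask_token) != len(features[0]):
        raise ValueError("mask_token must have the same length as a feature row")
    rng = rng or random.Random()
    num_nodes = len(features)
    num_masked = int(mask_rate * num_nodes)
    # Uniform sampling of node indices without replacement (an assumption here).
    masked = sorted(rng.sample(range(num_nodes), num_masked))
    masked_set = set(masked)
    # Masked rows get a copy of the token; visible rows are copied unchanged.
    corrupted = [
        list(mask_token) if i in masked_set else list(row)
        for i, row in enumerate(features)
    ]
    return corrupted, masked


feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
corrupted, masked = mask_node_features(
    feats, mask_rate=0.5, mask_token=[0.0, 0.0], rng=random.Random(0)
)
print(masked)  # two node indices chosen by the seeded RNG
```

The returned indices matter later: the reconstruction loss is computed on the masked nodes only, so the model cannot score well by simply copying its visible input.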

In short: reinvigorate generative SSL on graphs.

In a nutshell, the whole paper revolves around the following four points:

[Figure: the paper's four key points]

(In my view, this paper can be seen as adding a set of useful training tricks on top of the overall GAE framework. These tricks can also be applied to our own models.)

In recent years, self-supervised learning (SSL) has been extensively studied. In particular, generative SSL has achieved great success in natural language processing and other fields, as the widespread adoption of BERT and GPT shows. Despite this, contrastive learning, which relies heavily on structural data augmentation and complicated training strategies, has been the dominant approach in graph SSL, while the progress of generative SSL on graphs, especially graph autoencoders (GAEs), has so far not reached the potential seen in other fields. In this paper, we identify and examine the issues that negatively affect the development of GAEs, including their reconstruction objective, training robustness, and error metric. We present a masked graph autoencoder, GraphMAE, that mitigates these issues for generative self-supervised graph learning. Instead of reconstructing graph structure, we propose to focus on feature reconstruction, using both a masking strategy and a scaled cosine error, which benefit the robust training of GraphMAE. We conduct extensive experiments on 21 public datasets for three different graph learning tasks. The results show that GraphMAE, a simple graph autoencoder with careful designs, consistently outperforms both contrastive and generative state-of-the-art baselines. This study provides an understanding of graph autoencoders and demonstrates the potential of generative self-supervised learning on graphs.
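As I read the paper, the scaled cosine error (SCE) is L_SCE = (1/|Ṽ|) Σ_{vᵢ ∈ Ṽ} (1 − xᵢᵀzᵢ / (‖xᵢ‖·‖zᵢ‖))^γ with γ ≥ 1, where Ṽ is the set of masked nodes, xᵢ is the original feature of node i, and zᵢ is its reconstruction. The sketch below is a plain-Python illustration of that formula, not the authors' implementation; the function names and the default `gamma=2.0` are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def scaled_cosine_error(
    originals: Sequence[Sequence[float]],
    reconstructions: Sequence[Sequence[float]],
    masked: Sequence[int],
    gamma: float = 2.0,  # illustrative default, not a value taken from the paper
) -> float:
    """Mean of (1 - cos(x_i, z_i)) ** gamma over the masked nodes only."""
    if gamma < 1.0:
        raise ValueError("gamma is expected to be >= 1")
    if not masked:
        raise ValueError("at least one masked node is required")
    total = 0.0
    for i in masked:
        # Clamp at 0 so floating-point noise (cos slightly > 1) cannot make
        # the base negative, which would yield a complex number for non-integer gamma.
        err = max(0.0, 1.0 - cosine_similarity(originals[i], reconstructions[i]))
        total += err ** gamma
    return total / len(masked)


x = [[1.0, 0.0], [0.0, 1.0]]  # original features
z = [[2.0, 0.0], [1.0, 0.0]]  # reconstructions: node 0 rescaled, node 1 orthogonal
print(scaled_cosine_error(x, z, masked=[0, 1]))  # 0.5
```

Because the cosine compares only directions, node 0's reconstruction (a rescaled copy of its input) costs nothing, whereas MSE would penalize it; this scale insensitivity is what separates SCE from a plain squared error. Raising the error to the power γ > 1 shrinks small errors more than large ones (a per-node error of 0.5 becomes 0.25 at γ = 2), so easy nodes contribute less and training concentrates on the harder ones.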
