
On GPUs: historical development and structure

2022-06-27 06:30:00 【honky-tonk_ man】


GPU history

To talk about the GPU, we of course have to start with the CPU. The CPU was not designed with any specific workload in mind; instead, its designers assumed that every kind of workload would run on it. The CPU is therefore a "generalist" that has to cope with all sorts of working scenarios.

We all know that a CPU has a pipeline, and CPU designers try to keep that pipeline fairly short. Each instruction goes through the following steps in the CPU pipeline:

- Fetch: the instruction is loaded from memory into the CPU (at this point the address of the instruction is already in the PC)

- Decode: the CPU decodes the instruction and reads the operands the instruction requires from the registers

- Execute: the CPU executes the instruction using the ALU

- Memory access: if the instruction involves memory, data is read from or written to memory

- Write-back: the result of the instruction is written back to a register
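The benefit of overlapping these five steps can be estimated with a little arithmetic. The sketch below assumes an idealized pipeline in which every stage takes exactly one cycle and nothing ever stalls; the function names are made up for illustration.

```python
# Idealized five-stage pipeline: one cycle per stage, no stalls (an assumption).
STAGES = ["fetch", "decode", "execute", "memory", "write-back"]


def cycles_unpipelined(n_instructions, n_stages=len(STAGES)):
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions, n_stages=len(STAGES)):
    """The first instruction fills the pipeline; after that one finishes per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


if __name__ == "__main__":
    for n in (1, 10, 1000):
        print(n, cycles_unpipelined(n), cycles_pipelined(n))
```

For a single instruction the two counts are the same; the pipeline only pays off once many instructions are in flight.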

Suppose we have the following pseudocode

    a := b + c
    if a == 0 then
        do this...
    else
        do that...
    endif

The pseudocode above contains a branch. The `if` instruction has to be executed first. When the `if` reaches the second stage of the CPU pipeline (decode), the pipeline design says the next instruction should be loaded into the first stage. But the result of the `if` is not known yet, so the CPU cannot tell whether to load the instructions after `then` or the instructions after `else`. At this point the CPU makes a guess: it picks one branch and loads it. If the guess is right, execution simply carries on. If it is wrong, the instructions already in the pipeline are flushed and the instructions of the correct branch enter the pipeline instead. This is why the CPU pipeline is kept short: if the pipeline were very long, a failed branch prediction would leave too many instructions to flush.
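To put a rough number on the flush cost, here is a minimal sketch. It assumes an idealized pipeline (one cycle per stage) and that a branch is resolved at the end of a given stage, so every younger instruction fetched behind it is wasted on a wrong guess. All names and figures are hypothetical.

```python
def flushed_instructions(resolve_stage):
    """Instructions fetched behind a branch before its outcome is known.

    If the branch resolves at the end of stage `resolve_stage` (1-based),
    `resolve_stage - 1` younger instructions are already in the pipeline.
    """
    return resolve_stage - 1


def total_cycles(n_instructions, n_stages, n_mispredicted, resolve_stage):
    """Pipeline fill + one cycle per instruction + flush penalty per wrong guess."""
    base = n_stages + (n_instructions - 1)
    penalty = n_mispredicted * flushed_instructions(resolve_stage)
    return base + penalty


if __name__ == "__main__":
    # Same program, same 20 wrong guesses, two hypothetical pipelines.
    print(total_cycles(1000, n_stages=5, n_mispredicted=20, resolve_stage=3))
    print(total_cycles(1000, n_stages=20, n_mispredicted=20, resolve_stage=15))
```

With these made-up numbers the deeper pipeline loses seven times as many cycles to flushes, which is the effect the paragraph above describes.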

FPU

Historically, the FPU has always been a coprocessor to the CPU (nowadays it is usually integrated into the CPU). The FPU generally does not run on its own; it is used only when the CPU needs a floating-point calculation.

Why doesn't the CPU do floating-point arithmetic itself instead of handing it to the FPU? Because floating-point arithmetic on the CPU's general-purpose integer hardware is very slow. And why is it so slow? Because, unlike integers, floating-point numbers are not stored in the "ordinary" way. A floating-point number is generally stored in three parts: the sign bit (whether the number is positive or negative), the exponent bits, and the mantissa bits. Take -161.875 as an example. The number is negative, so the sign bit is 1. Next we convert 161.875 to binary: 161 is 10100001 and the fractional part 0.875 is 0.111 (0.5 + 0.25 + 0.125), which together give 10100001.111. The exponent field, however, is only 8 bits wide, so the value is normalized to 1.0100001111 × 2^7 and only the exponent and the fraction bits are stored.

When the CPU reads a float, it first has to fetch the stored bits and then decode them to form the final float value, which is different from reading an int.
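The three-part layout can be inspected directly. The sketch below assumes the IEEE 754 single-precision format (1 sign bit, 8 exponent bits with a bias of 127, 23 fraction bits), which matches the 8-bit exponent mentioned above; the helper names are made up for illustration.

```python
import struct


def decode_float32(value):
    """Split an IEEE 754 single-precision float into (sign, exponent, mantissa)."""
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF        # 23 fraction bits, leading 1 is implicit
    return sign, exponent, mantissa


def rebuild(sign, exponent, mantissa):
    """Recombine the fields into a value (normal numbers only)."""
    return (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)


sign, exponent, mantissa = decode_float32(-161.875)
print(sign, format(exponent, "08b"), format(mantissa, "023b"))
# 1 10000110 01000011110000000000000
print(rebuild(sign, exponent, mantissa))
# -161.875
```

The stored exponent 10000110 is 134, that is 7 + 127, and the fraction bits are the digits after the leading 1 of 1.0100001111.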

GPU

So what does the FPU have to do with the GPU? The GPU exists to perform a large number of floating-point operations in parallel. Because much of a GPU's work consists of applying the same instruction to different data, the GPU uses SIMD (single instruction, multiple data) and is designed with a very long pipeline.
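The idea behind SIMD can be sketched in plain Python. This is only a model: the list comprehension runs the lanes one after another, whereas real SIMD hardware processes them at the same time. The function names and lane widths are assumptions for illustration.

```python
def simd_add(lanes_a, lanes_b):
    """One 'instruction' applied to every lane of the two operands."""
    if len(lanes_a) != len(lanes_b):
        raise ValueError("both operands need the same number of lanes")
    return [x + y for x, y in zip(lanes_a, lanes_b)]


def instructions_needed(n_elements, lanes):
    """How many add instructions are issued for n_elements at a given lane width."""
    return -(-n_elements // lanes)  # ceiling division


if __name__ == "__main__":
    print(simd_add([1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]))
    print(instructions_needed(1024, lanes=1))   # scalar: one element per instruction
    print(instructions_needed(1024, lanes=32))  # hypothetical 32-lane unit
```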

GPU architecture

First, let's compare the GPU and the CPU

The CPU is heavily optimized for latency: the goal is to run a program quickly, keep delays to a minimum, and switch to other processes as soon as possible

The GPU, in contrast, is optimized for throughput. A single GPU core may not be as efficient as a single CPU core, but there are a great many cores, so the GPU can accept a large number of tasks or processes at the same time, and that means very high throughput
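The latency versus throughput trade-off can be illustrated with a toy model. The core counts and per-task times below are invented purely for illustration, and the model assumes independent, equally sized tasks.

```python
import math


def time_to_finish(n_tasks, n_cores, time_per_task):
    """Time units needed to complete n_tasks when n_cores work in parallel."""
    batches = math.ceil(n_tasks / n_cores)
    return batches * time_per_task


# Hypothetical devices: few fast cores versus many slow cores.
CPU_LIKE = {"n_cores": 8, "time_per_task": 1}
GPU_LIKE = {"n_cores": 2048, "time_per_task": 4}

if __name__ == "__main__":
    for n in (1, 100000):
        print(n, time_to_finish(n, **CPU_LIKE), time_to_finish(n, **GPU_LIKE))
```

With one task the CPU-like device finishes first (lower latency); with a hundred thousand tasks the GPU-like device is far ahead (higher throughput).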

Let's first look at the architecture of a modern CPU

From the architecture above we can see that the CPU relies heavily on cache to reduce latency (cache is much faster than main memory)

Every core has two L1 caches and one L2 cache. The two L1 caches of each core are the data cache and the instruction cache. The L2 cache has more space than the L1 caches (and is, of course, slower than L1)

Finally, all cores share the L3 cache (which has more space than the L2 cache and is slower still). Beyond that we leave the CPU itself and reach, for example, main memory
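The lookup order through this hierarchy can be modeled in a few lines. The cycle counts are hypothetical placeholders, not measurements, and the model ignores cache capacity and eviction.

```python
# (level name, latency in cycles); the latencies are made-up placeholders.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40), ("memory", 200)]


def access(address, caches):
    """Search each level in order and return (level that hit, cycles spent).

    `caches` maps a level name to the set of addresses it currently holds.
    Main memory always hits. On a hit, the address is copied into every
    faster level so that the next access is cheaper.
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if name == "memory" or address in caches.get(name, set()):
            for upper, _ in LEVELS:
                if upper == name:
                    break
                caches.setdefault(upper, set()).add(address)
            return name, cycles


if __name__ == "__main__":
    caches = {}
    print(access(0x10, caches))  # first touch goes all the way to memory
    print(access(0x10, caches))  # second touch hits in L1
```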

Now let's look at the GPU architecture

Whereas every CPU core has two levels of cache of its own, the GPU uses far less cache, so its latency is certainly much higher than the CPU's

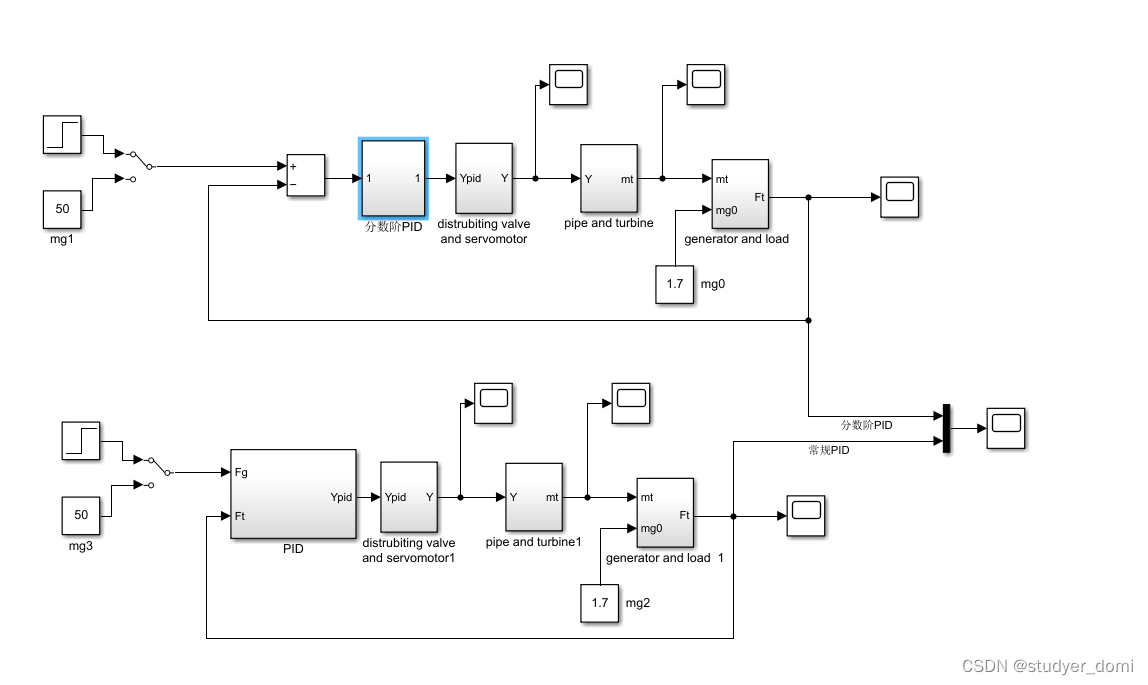

From the figure above:

A GPU is made up of multiple processor clusters (the figure shows four, although they are labeled up to n)

A processor cluster is made up of multiple Streaming Multiprocessors

A Streaming Multiprocessor may contain multiple cores. In the figure, a certain number of cores within a Streaming Multiprocessor share an L1 cache, and multiple Streaming Multiprocessors share the L2 cache
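The containment hierarchy just described can be written down as a small data structure. The class names mirror the terms used above; the field names, cache sizes and counts are hypothetical and do not describe any particular GPU.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StreamingMultiprocessor:
    cores: int        # cores that share this SM's L1 cache
    l1_cache_kb: int


@dataclass
class ProcessorCluster:
    sms: List[StreamingMultiprocessor] = field(default_factory=list)


@dataclass
class GPU:
    clusters: List[ProcessorCluster]
    l2_cache_kb: int  # shared by all Streaming Multiprocessors

    def total_cores(self):
        return sum(sm.cores for cluster in self.clusters for sm in cluster.sms)


def build_gpu(n_clusters, sms_per_cluster, cores_per_sm, l1_kb=64, l2_kb=4096):
    """Build a uniform GPU description; all sizes are placeholder values."""
    clusters = [
        ProcessorCluster(
            sms=[StreamingMultiprocessor(cores_per_sm, l1_kb) for _ in range(sms_per_cluster)]
        )
        for _ in range(n_clusters)
    ]
    return GPU(clusters=clusters, l2_cache_kb=l2_kb)


if __name__ == "__main__":
    print(build_gpu(4, 5, 128).total_cores())
```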

Beyond these, the GPU has no further cache. What remains is the memory mounted on the GPU board (it apparently cannot be removed). This onboard memory is generally a relatively recent type; the figure above, for example, shows DDR5 memory

Moving a program's data from the CPU to the GPU goes over the PCIe bus, which is very slow. If we have a lot of data for the GPU to process, this transfer can become the bottleneck
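A back-of-the-envelope estimate shows when the transfer starts to dominate. The bandwidth used below (about 16 GB/s, roughly the theoretical figure for a PCIe 3.0 x16 link) and the compute time are assumptions for illustration; real links deliver less than their theoretical peak.

```python
PCIE_BYTES_PER_SECOND = 16 * 10**9  # assumed, approximately PCIe 3.0 x16


def transfer_seconds(n_bytes, bytes_per_second=PCIE_BYTES_PER_SECOND):
    """Time to move n_bytes across the link, ignoring latency and overhead."""
    return n_bytes / bytes_per_second


def transfer_bound(n_bytes, compute_seconds, bytes_per_second=PCIE_BYTES_PER_SECOND):
    """True when copying the data takes longer than processing it."""
    return transfer_seconds(n_bytes, bytes_per_second) > compute_seconds


if __name__ == "__main__":
    data = 16 * 10**9  # 16 GB of input
    print(transfer_seconds(data))
    print(transfer_bound(data, compute_seconds=0.25))  # hypothetical kernel time
```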

A single server may have multiple GPUs installed. NVIDIA GPUs can exchange data over a dedicated interconnect such as NVLink, whose bandwidth is several times that of PCIe…

边栏推荐

猜你喜欢

随机推荐

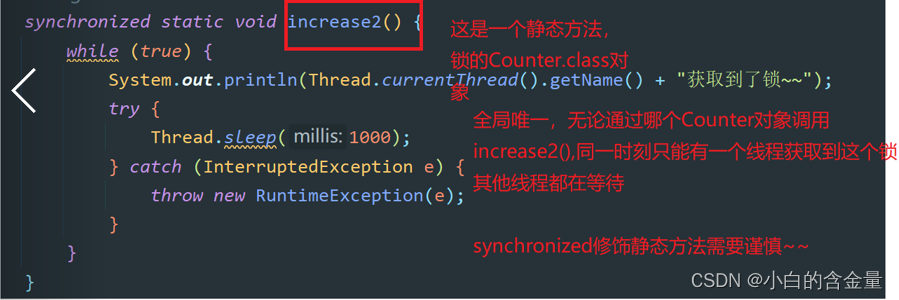

Multithreading basic Part3

TiDB的使用限制

[QT dot] realize the watchdog function to detect whether the external program is running

Quick personal site building guide using WordPress

G1 and ZGC garbage collector

thrift

信息系统项目管理师---第七章 项目成本管理

JVM整体结构解析

TiDB 中的视图功能

Crawler learning 5--- anti crawling identification picture verification code (ddddocr and pyteseract measured effect)

Altium Designer 19 器件丝印标号位置批量统一摆放

创建一个基础WDM驱动,并使用MFC调用驱动

Functional continuous

The SCP command is used in the expect script. The perfect solution to the problem that the SCP command in the expect script cannot obtain the value

Download CUDA and cudnn

[QT] use structure data to generate read / write configuration file code

力扣 179、最大数

汇编语言-王爽 第11章 标志寄存器-笔记

G1和ZGC垃圾收集器

Ahb2apb bridge design (2) -- Introduction to synchronous bridge design