当前位置:网站首页>PG 的 statistics 与 extended statistics

PG 的 statistics 与 extended statistics

2022-08-05 17:06:00 【墨天轮】

大家好,这次大表哥和大家分享的是PG的统计信息和扩展统计信息。

当然我们今天讨论的统计信息是基于表和索引的统计信息,PG 的 query planner 生成执行计划的依据就是统计信息和 cost 常量参数。

关于 planner cost 常量 类似于计算成本中的各种加权因子 具体可以参考官方文档: https://www.postgresql.org/docs/14/runtime-config-query.html

常见的有: seq_page_cost, cpu_tuple_cost, cpu_index_tuple_cost …

而表和索引的统计信息,为执行计划的生成提供了基础的数据统计支持。

我们先来看一下 row estimate 或者 cardinality :

对于行的基数的预估是参考: pg_class 的属性 reltuples

[email protected][local:/tmp]:1992=#60266 create table t1(id int, name varchar(200));CREATE TABLE[email protected][local:/tmp]:1992=#60266 insert into t1 values (generate_series(1,1000),'hello PG!');INSERT 0 1000[email protected][local:/tmp]:1992=#60266 select reltuples from pg_class where relname = 't1';reltuples----------- 1000[email protected][local:/tmp]:1992=#60266 explain analyze select * from t1; QUERY PLAN ----------------------------------------------------------------------------------------------------Seq Scan on t1 (cost=0.00..16.00 rows=1000 width=14) (actual time=0.016..0.207 rows=1000 loops=1)Planning Time: 0.214 msExecution Time: 0.385 ms(3 rows)

我们看一下 SQL 语句加上 where 过滤条件之后的 cardinality 的预估:

[email protected][local:/tmp]:1992=#92372 explain analyze select * from t1 where id <= 505; QUERY PLAN --------------------------------------------------------------------------------------------------Seq Scan on t1 (cost=0.00..18.50 rows=505 width=14) (actual time=0.021..0.139 rows=505 loops=1) Filter: (id <= 505) Rows Removed by Filter: 495Planning Time: 0.344 msExecution Time: 0.233 ms(5 rows)

我们来看一下 column id 的直方图分布 一共100个桶,范围是10

[email protected][local:/tmp]:1992=#60266 SELECT histogram_bounds FROM pg_statsdbtest-# WHERE tablename='t1' AND attname='id'; histogram_bounds -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------{1,10,20,30,40,50,60,70,80,90,100,110,120,130,140,150,160,170,180,190,200,210,220,230,240,250,260,270,280,290,300,310,320,330,340,350,360,370,380,390,400,410,420,430,440,450,460,470,480,490,500,510,520,530,540,550,560,570,580,590,600,610,620,630,640,650,660,670,680,690,700,710,720,730,740,750,760,770,780,790,800,810,820,830,840,850,860,870,880,890,900,910,920,930,940,950,960,970,980,990,1000}

我们看一下官方文档上给出的 选择率的计算: id <= 505 所在的桶 [505,510] 所在的桶是 51号桶, 由于是 height-balanced 的直方图那么默认的

公式是 N 是 查询条件所在的 桶的编号

selectivity = ((N-1) + (cer - bucket[N].min)/(bucket.max[N] - bucket.min[N]))/num_buckets

selectivity = (50 + (505 - bucket.min)/(bucket.max - bucket.min))/num_buckets = (50 + (505 - 500)/(510 - 500))/100 = 0.505

所以过滤后的cardinality 就是

reltuples * selectivity = 1000 * 0.505 = 505 这个预期是符合我们执行计划中的 rows=505

我们现在看一下 等值的情况:

[email protected][local:/tmp]:1992=#60266 update t1 set name = 'Great PG!' where id <= 10;UPDATE 10[email protected][local:/tmp]:1992=#60266 select count(1), name from t1 group by name;count | name -------+----------- 10 | Great PG! 990 | hello PG!(2 rows)[email protected][local:/tmp]:1992=#60266 analyze t1;ANALYZE

我们分别 用 where name = ‘Great PG!’ 和 where name = ‘hello PG!’ 查看一下执行计划里面的 cardinality:

name = ‘Great PG!’ : 预估的 rows=10

name = ‘hello PG!’ : 预估的 rows=990

[email protected][local:/tmp]:1992=#60266 explain analyze select * from t1 where name = 'Great PG!'; QUERY PLAN ------------------------------------------------------------------------------------------------Seq Scan on t1 (cost=0.00..18.50 rows=10 width=14) (actual time=0.090..0.091 rows=10 loops=1) Filter: ((name)::text = 'Great PG!'::text) Rows Removed by Filter: 990Planning Time: 0.084 msExecution Time: 0.104 ms(5 rows)[email protected][local:/tmp]:1992=#60266 explain analyze select * from t1 where name = 'hello PG!'; QUERY PLAN --------------------------------------------------------------------------------------------------Seq Scan on t1 (cost=0.00..18.50 rows=990 width=14) (actual time=0.018..0.194 rows=990 loops=1) Filter: ((name)::text = 'hello PG!'::text) Rows Removed by Filter: 10Planning Time: 0.061 msExecution Time: 0.258 ms(5 rows)

我们看一下 name 列上的值分布: 我们可以看到 列上出现了 类似 high frequency 的直方图:

n_distinct :2 只有2个值

most_common_vals: {“hello PG!”,“Great PG!”}

most_common_freqs: {0.99,0.01}

由于重复值的频率很高,这里PG的并不会采用 height balance 测直方图 histogram_bounds 是 null

name = ‘Great PG!’ : 预估的 rows=10 = reltuples * most_common_freqs = 1000* 0.01 = 10

name = ‘hello PG!’ : 预估的 rows=990 = reltuples * most_common_freqs = 1000* 0.99 = 990

[email protected][local:/tmp]:1992=#60266 SELECT * FROM pg_statsWHERE tablename='t1' AND attname='name';-[ RECORD 1 ]----------+--------------------------schemaname | publictablename | t1attname | nameinherited | fnull_frac | 0avg_width | 10n_distinct | 2most_common_vals | {"hello PG!","Great PG!"}most_common_freqs | {0.99,0.01}histogram_bounds |correlation | 0.9405999most_common_elems |most_common_elem_freqs |elem_count_histogram |

我们再来看一下 where 条件中 含有2个不同的列的组合情况下,cardinality 的预估:

select * from t1 where name = ‘hello PG!’ and id > 500 ;

我们 cardinality rows=495 是如何根据统计信息预估的? 其实和简单就是概率学上 两个列值的 selectivity1 * selectivity2

selectivity = selectivity(name = ‘hello PG!’) * selectivity(id <= 505)

= 0.99 * (50 + (505 - 500)/(510 - 500))/100

= 0.99 * 0.505

= 0.49995

rows = 1000 * 0.49995

=499.95

= 约等于 500 (经过四舍五入)

[email protected][local:/tmp]:1992=#92372 explain analyze select * from t1 where name = 'hello PG!' and id <= 505; QUERY PLAN --------------------------------------------------------------------------------------------------Seq Scan on t1 (cost=0.00..21.00 rows=500 width=14) (actual time=0.016..0.103 rows=495 loops=1) Filter: ((id <= 505) AND ((name)::text = 'hello PG!'::text)) Rows Removed by Filter: 505Planning Time: 0.078 msExecution Time: 0.129 ms(5 rows)

我们再来看一下,2个表连接的情况:

准备2张表, tt1和tt2 是 1对多的关系。

[email protected][local:/tmp]:1992=#92372 insert into tt1 values (generate_series(1,100),'inner join tt1');INSERT 0 100[email protected][local:/tmp]:1992=#92372 insert into tt2 values (generate_series(1,100),'inner join tt2');INSERT 0 100[email protected][local:/tmp]:1992=#92372 insert into tt2 values (generate_series(1,100),'inner join tt2');INSERT 0 100[email protected][local:/tmp]:1992=#92372 select count(1) from tt1;count------- 100(1 row)[email protected][local:/tmp]:1992=#92372 select count(1) from tt2;count------- 200(1 row)[email protected][local:/tmp]:1992=#92372 analyze tt1;ANALYZE[email protected][local:/tmp]:1992=#92372 analyze tt2;ANALYZE

我们来看一下 select * from tt1 , tt2 where tt1.id = tt2.id and tt1.name = ‘inner join tt1’; 的SQL 执行计划:

[email protected][local:/tmp]:1992=#92372 explain analyze select * from tt1 , tt2 where tt1.id = tt2.id and tt1.name = 'inner join tt1'; QUERY PLAN --------------------------------------------------------------------------------------------------------------Hash Join (cost=3.50..10.25 rows=200 width=38) (actual time=0.053..0.117 rows=200 loops=1) Hash Cond: (tt2.id = tt1.id) -> Seq Scan on tt2 (cost=0.00..4.00 rows=200 width=19) (actual time=0.013..0.033 rows=200 loops=1) -> Hash (cost=2.25..2.25 rows=100 width=19) (actual time=0.032..0.033 rows=100 loops=1) Buckets: 1024 Batches: 1 Memory Usage: 13kB -> Seq Scan on tt1 (cost=0.00..2.25 rows=100 width=19) (actual time=0.008..0.021 rows=100 loops=1) Filter: ((name)::text = 'inner join tt1'::text)Planning Time: 0.104 msExecution Time: 0.152 ms(9 rows)

查询一下: tt1和tt2 表的 null_frac 和(空值频率) n_distinct (NDV 的数量):

n_distinct 为 -1 的时候 , num_distinct 就是 reltuples = 100

n_distinct 为 -0.5 表示 数据有 0.5 (50%)是重复的,这个时候的num_distinct = reltuples * n_distinct = 200 * 0.5 = 100

(n_distinct 这个值 为正数的时候,代表num_distinct , 为负数的时候,代表重复率的百分比, -1的时候,代表 num_distinct = reltuples )

[email protected][local:/tmp]:1992=#92372 SELECT tablename, null_frac,n_distinct FROM pg_stats WHERE tablename IN ('tt1', 'tt2') AND attname='id';tablename | null_frac | n_distinct-----------+-----------+------------tt1 | 0 | -1tt2 | 0 | -0.5(2 rows)

计算公式为:

selectivity = (1 - null_frac1) * (1 - null_frac2) * min(1/num_distinct1, 1/num_distinct2)

= (1-0)* (1-0) * min(1/(1100),1/(0.5200))

= 0.01

rows = (outer_cardinality * inner_cardinality) * selectivity

= (200 * 100) * 0.01

= 200

接下来,我们看一下 Extended Statistics 扩展统计信息, PG 里面也叫 multivariate statistics, 即为多列的统计信息。 这个功能是从PG 10 版本引进的

可以简单的理解为,如果一张表中有多个列是在业务上有相关联的业务联系,那么联合这几个列一起收集统计信息,对优化器生成最佳的执行计划提供准确的数据支持。

对于列的扩展统计信息,系统是不会自动收集的,需要的时候,可以手动创建。 扩展的统计信息 只要保存在 pg_statistic_ext 和pg_statistic_ext_data 中

命令如下:

CREATE STATISTICS stts (dependencies|ndistinct|mcv) ON col1, col2 FROM table;

大家可以看到 dependencies, ndistinct, mcv 这几个关键字,表示 col1,col2 在业务上或者生活常识上有一定的依赖关系。 用官方文档上的例子, 一张表中有 zipcode , city 这2个列。按照常识来说 如果知道city 和 zipcode 应该是 1对1 的关系,

也就是说 where 条件中 如果 city = ‘TJ’ and zipcode = ‘300100’ 可以得到一个选择率很高,row estimation (cardinality) 很低的统计信息

我们现在举一个实际的例子:

假设一下表 tt_ext 中 col1 和 col2 的业务联系 : 我们建立一个 col1 的值和 col2 的值 是 1比1 的关系

1)co11 = ‘hello’ 的时候, col2 = ‘PG’

2)co11 = ‘你好’ 的时候, col2 = ‘Postgres’

[email protected][local:/tmp]:1992=#46124 create table tt_ext (id int, col1 varchar(20), col2 varchar(20));CREATE TABLE[email protected][local:/tmp]:1992=#46124 insert into tt_ext values (generate_series(1,20),'hello','PG');INSERT 0 20[email protected][local:/tmp]:1992=#46124 insert into tt_ext values (generate_series(21,25),'你好','Postgres');INSERT 0 5[email protected][local:/tmp]:1992=#46124 analyze tt_ext;ANALYZE

select * from tt_ext where col1 = ‘hello’ and col2=‘PG’ 的执行计划中, 我们看到的 rows = 16 的计算方式是

selectivity = selectivity(col1 = ‘hello’) * selectivity(col2=‘PG’)

= 0.8*0.8

= 0.64

rows = reltuples * selectivity

= 25 * 0.64

= 16

postgres-# WHERE tablename='tt_ext' AND attname in ('col1','col2');-[ RECORD 1 ]----------+--------------schemaname | publictablename | tt_extattname | col1inherited | fnull_frac | 0avg_width | 6n_distinct | 2most_common_vals | {hello,你好}most_common_freqs | {0.8,0.2}histogram_bounds |correlation | 1most_common_elems |most_common_elem_freqs |elem_count_histogram |-[ RECORD 2 ]----------+--------------schemaname | publictablename | tt_extattname | col2inherited | fnull_frac | 0avg_width | 4n_distinct | 2most_common_vals | {PG,Postgres}most_common_freqs | {0.8,0.2}histogram_bounds |correlation | 1most_common_elems |most_common_elem_freqs |elem_count_histogram |[email protected][local:/tmp]:1992=#46124 explain analyze select * from tt_ext where col1 = 'hello' and col2='PG'; QUERY PLAN ---------------------------------------------------------------------------------------------------Seq Scan on tt_ext (cost=0.00..1.38 rows=16 width=14) (actual time=0.021..0.032 rows=20 loops=1) Filter: (((col1)::text = 'hello'::text) AND ((col2)::text = 'PG'::text)) Rows Removed by Filter: 5Planning Time: 0.098 msExecution Time: 0.062 ms(5 rows)

我们来手动的创建一下 col1和 col2的 multivariate statistics, 这里需要注意的是, 执行完 create statistics 的命令之后, 必须要执行一下 analyze 命令,才会真正的收集 扩展的统计信息。

关于扩展统计信息的查询可以通过 pg_statistic_ext 和 pg_statistic_ext_data 的表连接查询 或者 pg_stats_ext 直接查询

stxname: create statistic 指定的统计信息名称

stxkeys: 第2列和第3列: col1 和 col 2

stxddependencies: “2 => 3”: 1.000000 这个表示 col1 可以 100%决定 col2 的值

“3 => 2”: 1.000000 这个表示 col2 可以 100%决定 col1 的值

[email protected][local:/tmp]:1992=#46124 create statistics stats_ext_tt_col1_col2 (dependencies) on col1,col2 from tt_ext;CREATE STATISTICS[email protected][local:/tmp]:1992=#46124 analyze tt_ext;ANALYZE[email protected][local:/tmp]:1992=#46124 SELECT stxname, stxkeys, stxddependenciesFROM pg_statistic_ext join pg_statistic_ext_data on (oid = stxoid)WHERE stxname = 'stats_ext_tt_col1_col2'; stxname | stxkeys | stxddependencies ------------------------+---------+------------------------------------------stats_ext_tt_col1_col2 | 2 3 | {"2 => 3": 1.000000, "3 => 2": 1.000000}(1 row)[email protected][local:/tmp]:1992=#46124 SELECT * FROM pg_stats_ext WHERE tablename = 'tt_ext';-[ RECORD 1 ]----------+-----------------------------------------schemaname | publictablename | tt_extstatistics_schemaname | publicstatistics_name | stats_ext_tt_col1_col2statistics_owner | postgresattnames | {col1,col2}exprs |kinds | {f}inherited | fn_distinct |dependencies | {"2 => 3": 1.000000, "3 => 2": 1.000000}most_common_vals |most_common_val_nulls |most_common_freqs |most_common_base_freqs |

这个时候再来看一下 : rows=20 是精准的了

由于 selectivity(col1 = ‘hello’) 是 可以决定 selectivity(col2=‘PG’)

或者说是 selectivity(col2=‘PG’) 是 可以决定 selectivity(col1 = ‘hello’)

所以2者的 selectivity 选一 即可

selectivity = selectivity(col1 = ‘hello’) | selectivity(col2=‘PG’)

= 0.8

rows = reltuples * selectivity

= 25 * 0.8

= 20

[email protected][local:/tmp]:1992=#46124 explain analyze select * from tt_ext where col1 = 'hello' and col2='PG'; QUERY PLAN ---------------------------------------------------------------------------------------------------Seq Scan on tt_ext (cost=0.00..1.38 rows=20 width=14) (actual time=0.008..0.012 rows=20 loops=1) Filter: (((col1)::text = 'hello'::text) AND ((col2)::text = 'PG'::text)) Rows Removed by Filter: 5Planning Time: 0.160 msExecution Time: 0.026 ms(5 rows)

接下来,我们看一下 Multivariate N-Distinct Counts , 简单地说就是收集多列的 number of distinct count 的统计信息:

我们还使用 之前的表 tt_ext :

[email protected][local:/tmp]:1992=#46124 select col1,col2 from tt_ext group by col1,col2;col1 | col2 -------+----------hello | PG你好 | Postgres(2 rows)

创建 Multivariate N-Distinct Counts 的统计信息如下:

n_distinct : {“2, 3”: 2} 表示 {col1,col2} 的 distinct number of value 是 2

[email protected][local:/tmp]:1992=#46124 create statistics stats_ext_tt_col1_col2_nvd (ndistinct) on col1,col2 from tt_ext;CREATE STATISTICS[email protected][local:/tmp]:1992=#46124 analyze tt_ext;ANALYZE[email protected][local:/tmp]:1992=#46124 select * from pg_stats_ext where tablename = 'tt_ext';-[ RECORD 2 ]----------+-----------------------------------------schemaname | publictablename | tt_extstatistics_schemaname | publicstatistics_name | stats_ext_tt_col1_col2_nvdstatistics_owner | postgresattnames | {col1,col2}exprs |kinds | {d}inherited | fn_distinct | {"2, 3": 2}dependencies |most_common_vals |most_common_val_nulls |most_common_freqs |most_common_base_freqs |

接下来,我们看一下 Multivariate MCV Lists , 简单地说就是收集多列的 most common values 的信息进行统计:

most_common_vals: {{hello,PG},{你好,Postgres}} – 最常见的2组值

most_common_val_nulls:{{f,f},{f,f}} – f 表示不存在空值

most_common_freqs: {0.8,0.2} 2个列组合起来的概率: {hello,PG} 出现是概率是 80%, {你好,Postgres} 出现的概率是 20%

most_common_base_freqs:col1 的基础概率是 64% = 0.8 * 0.8 = 0.64 , col2的基础概率是 4% = 0.2*0.2 = 0.04

[email protected][local:/tmp]:1992=#46124 create statistics stats_ext_tt_col1_col2_mcv (mcv) on col1,col2 from tt_ext;CREATE STATISTICS[email protected][local:/tmp]:1992=#46124 analyze tt_ext;ANALYZE[email protected][local:/tmp]:1992=#95670 select * from pg_stats_ext where tablename = 'tt_ext';-[ RECORD 1 ]----------+-----------------------------------------schemaname | publictablename | tt_extstatistics_schemaname | publicstatistics_name | stats_ext_tt_col1_col2_mcvstatistics_owner | postgresattnames | {col1,col2}exprs |kinds | {m}inherited | fn_distinct |dependencies |most_common_vals | {{hello,PG},{你好,Postgres}}most_common_val_nulls | {{f,f},{f,f}}most_common_freqs | {0.8,0.2}most_common_base_freqs | {0.6400000000000001,0.04000000000000001}

最后我们来总结一下 PG 表的统计信息:

1)基础的统计信息 可以通过 pg_stats, pg_class 来查询

2)扩展的统计信息 (多列的联合统计信息) 可以通过 pg_statistic_ext 和 pg_statistic_ext_data 的表连接查询 或者 pg_stats_ext 直接查询。支持如下几种:

Dependencies,Multivariate N-Distinct Count, Multivariate MCV Lists

Have a fun !

边栏推荐

- 【Case】3d navigation

- 不能不知道的excel实用操作技巧!

- [Today in History] August 5: WeChat 5.0 was released; Baidu was listed; LinkedIn founder was born

- Good code in the eyes of a compiler engineer: Loop Interchange

- NLP 论文领读|无参数机器翻译遇上对比学习:效率和性能我全都要!

- stm32f103开发板入门到手进行开发

- 单液压缸(Single-Acting Hydraulic Cylinder)数学模型和PID闭环控制(PLC比例流量阀控制)

- Fragment 这么多 API 被废弃

- Why do so many people discourage software testing?Don't click here if the monthly salary is more than 10,000.

- Flutter DataGrid教程之表格图标日历Excel完整App源码(教程含源码)

猜你喜欢

随机推荐

The role and use of mmap()

阿里百万级Redis小册震撼开源,打开源码新世界

数制转换(暑假每日一题 16)

借生态力量,openGauss突破性能瓶颈

Interface test association explanation

js实现下拉菜单 人性化体验

【案例】3d相册

Why do so many people discourage software testing?Don't click here if the monthly salary is more than 10,000.

SwiftUI 教程之使用 DocumentGroup 和 FileDocument 创建基于文本文档的应用程序

一种基于目标检测实现黑花屏分类任务的方案

2022 第四周全球 AI 模型周报

开学季邀新,赢腾讯内推实习机会

【2022新生学习】第四周要点

【案例】animation动画

How to use MATLAB to preprocess CSI data (Kalman filter)

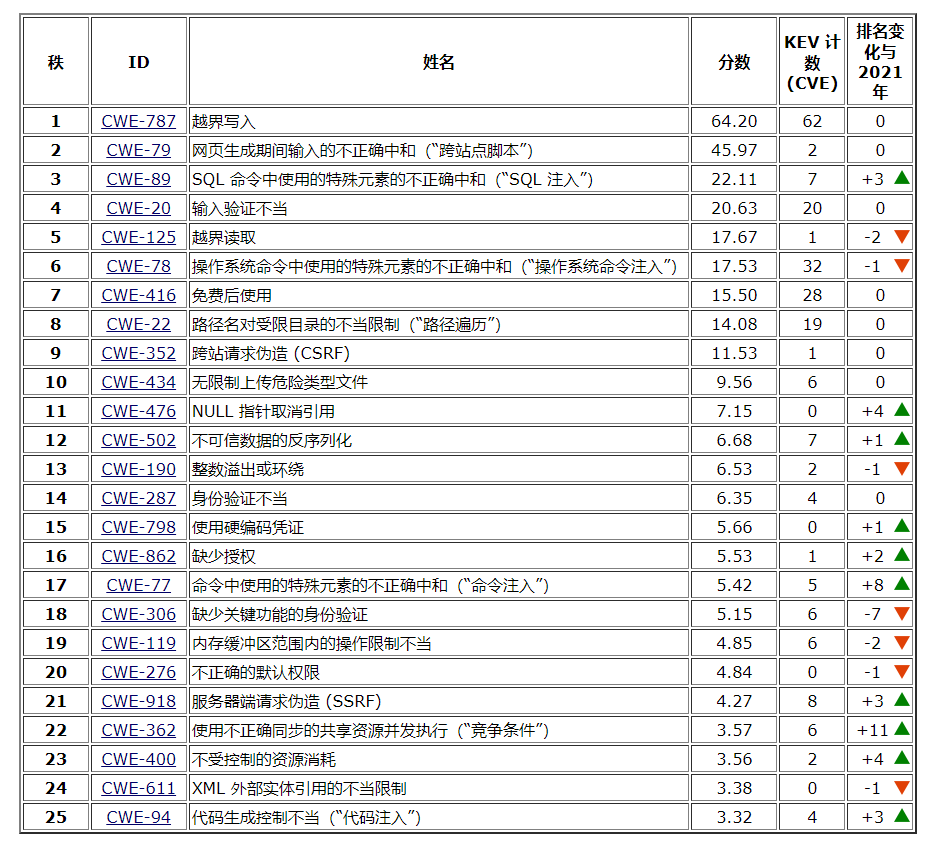

What is Common Defect Enumeration (CWE)

【无标题】

[uvm]create_item???

Good code in the eyes of a compiler engineer: Loop Interchange

详解Oracle DELETE和TRUNCATE 的区别