
MXNet implementation of DenseNet (densely connected network)

2022-07-25 13:40:00 【Yinque Guangqian】

Paper: Densely Connected Convolutional Networks

DenseNet is very similar to ResNet, introduced earlier. In ResNet, the gradient can flow directly from later layers to earlier layers through the identity function (the shortcut carries a block's input across and adds it to the block's output). However, because the two are combined by summation, the flow of information through the network may be impeded. DenseNet improves on this: the output of each layer is passed across to every subsequent layer, so each layer receives direct input from all preceding layers, and these inputs are concatenated along the channel dimension instead of being added. This is easy to see in the diagram from the paper. A dense block with L layers has L(L+1)/2 direct connections, for example 6 connections for 3 layers and 10 for 4 layers, whereas a traditional network with L layers has only L connections.
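The L(L+1)/2 count can be checked with a short sketch (plain Python, not part of the original post; the helper names are hypothetical). It assumes the counting used in the paper: layer l (counting from 1) receives the block input plus the outputs of the l - 1 layers before it, so the total is 1 + 2 + ... + L.

```python
# Hypothetical helpers for illustration only (not from the post or the paper's code).

def dense_connections(num_layers: int) -> int:
    """Direct connections in a dense block with `num_layers` layers.

    Layer `layer` (1-based) receives the block input plus the outputs of the
    layer - 1 layers before it, i.e. `layer` incoming connections.
    """
    return sum(layer for layer in range(1, num_layers + 1))


def sequential_connections(num_layers: int) -> int:
    """A plain feed-forward stack has one connection per layer."""
    return num_layers


for L in (3, 4, 5):
    # The explicit sum agrees with the closed form L(L+1)/2.
    assert dense_connections(L) == L * (L + 1) // 2
    print(L, sequential_connections(L), dense_connections(L))
```

For 3, 4 and 5 layers this prints 6, 10 and 15 dense connections, against 3, 4 and 5 connections for a traditional stack.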

Because every layer is directly connected to every other layer in this way, such a model is called a "densely connected network" or "dense convolutional network" (DenseNet).

The paper also notes that the "bottleneck" design (also used in ResNet) is very effective for DenseNet: a 1x1 convolution is placed before each 3x3 convolution to reduce the number of input feature maps. This variant is called DenseNet-B.
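To see why the 1x1 bottleneck helps, one can compare the convolution weights of a single dense layer with and without it. This is a rough sketch, not code from the post: it counts only convolution kernel weights (no biases, no BatchNorm parameters), assumes as in the paper that the 1x1 convolution outputs 4k channels (k is the growth rate), and the function names are hypothetical.

```python
# Hypothetical helpers: count conv kernel weights only (no bias, no BatchNorm).

def plain_layer_weights(c_in: int, k: int) -> int:
    """BN-ReLU-Conv(3x3): maps c_in channels straight to k channels."""
    return 3 * 3 * c_in * k


def bottleneck_layer_weights(c_in: int, k: int, width: int = 4) -> int:
    """BN-ReLU-Conv(1x1) to width*k channels, then BN-ReLU-Conv(3x3) to k channels."""
    mid = width * k
    return 1 * 1 * c_in * mid + 3 * 3 * mid * k


k = 32  # growth rate, the same value as growth_rate in the MXNet model code
for c_in in (128, 256, 512, 1024):
    plain = plain_layer_weights(c_in, k)
    bottleneck = bottleneck_layer_weights(c_in, k)
    print(f"c_in={c_in:5d}  plain={plain:7d}  bottleneck={bottleneck:7d}")
```

The bottleneck's 3x3 convolution always sees 4k input channels, so its cost stops growing with c_in. Since 9·c_in·k > 4k·c_in + 36k² exactly when c_in > 7.2k, with k = 32 the bottleneck saves weights once c_in exceeds about 230 channels; in a dense block starting from 64 channels, the seventh layer already sees 64 + 6·32 = 256. Note that the post's own conv_block has no bottleneck, so the model built later is a plain DenseNet rather than DenseNet-B.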

To make the model even more compact, we can reduce the number of feature maps at the transition layers. If a dense block outputs m feature maps, the following transition layer generates ⌊θm⌋ output feature maps, where 0 < θ ≤ 1 is the compression factor. When θ = 1, the number of feature maps across the transition layer stays unchanged; a DenseNet with θ < 1 is called DenseNet-C (the paper uses θ = 0.5).
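The compression rule ⌊θm⌋ is small enough to write down directly. A minimal sketch in plain Python (the function name is hypothetical, not from the post):

```python
import math


# Hypothetical helper illustrating the compression factor theta.
def transition_out_channels(m: int, theta: float) -> int:
    """Feature maps produced by a transition layer fed with m feature maps."""
    if not 0 < theta <= 1:
        raise ValueError("compression factor must satisfy 0 < theta <= 1")
    return math.floor(theta * m)


print(transition_out_channels(192, 1.0))  # theta = 1: unchanged
print(transition_out_channels(192, 0.5))  # theta = 0.5: halved
print(transition_out_channels(45, 0.5))   # floor(22.5)
```

This prints 192, 96 and 22. The MXNet model later in the post halves the channels between dense blocks with num_channels //= 2, which for an integer m equals ⌊0.5m⌋, i.e. θ = 0.5.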

A model that uses both bottleneck layers and transition layers with θ < 1 is called DenseNet-BC. DenseNet-BC models have very few parameters and still perform very well: with only 0.8M parameters, DenseNet-BC reaches accuracy comparable to a 1001-layer (pre-activation) ResNet with 10.2M parameters.

The paper compares the models on several datasets, most notably against the closely related ResNet, and shows that DenseNets use fewer parameters while achieving lower error rates, as in the figure below:

The overall architecture of a dense network is shown below:

Building dense blocks

```python
import d2lzh as d2l
from mxnet import gluon, init, nd
from mxnet.gluon import nn


# Convolution block using ResNet's improved (pre-activation) ordering:
# BN -> ReLU -> 3x3 convolution
def conv_block(num_channels):
    blk = nn.Sequential()
    blk.add(nn.BatchNorm(), nn.Activation('relu'),
            nn.Conv2D(num_channels, kernel_size=3, padding=1))
    return blk


# Dense block: several conv_blocks, each with the same number of output channels.
# In the forward pass, the input and output of each block are concatenated along
# the channel dimension, so every block sees the outputs of all blocks before it.
class DenseBlock(nn.Block):
    def __init__(self, num_convs, num_channels, **kwargs):
        super(DenseBlock, self).__init__(**kwargs)
        self.net = nn.Sequential()
        for _ in range(num_convs):
            self.net.add(conv_block(num_channels))

    def forward(self, X):
        for blk in self.net:
            Y = blk(X)
            X = nd.concat(X, Y, dim=1)  # concatenate on the channel dimension
        return X


# Check the shape change, especially the number of channels
blk = DenseBlock(4, 10)  # 4 conv blocks with 10 output channels each
blk.initialize()
X = nd.random.uniform(shape=(4, 5, 22, 22))
XX = blk(X)
print(XX.shape)  # 5 + 4*10 = 45 channels
```

The output is (4, 45, 22, 22).

The number of channels has grown from 5 to 45. If it keeps growing, the model becomes too complex, so a transition layer is used to control it: a 1x1 convolution reduces the number of channels, and an average pooling layer with stride 2 halves the height and width, further reducing the model's complexity.
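The channel bookkeeping inside DenseBlock can be traced without MXNet. This plain-Python sketch (a hypothetical helper, not part of the post) assumes that every conv_block outputs growth_rate channels and keeps height and width unchanged (3x3 kernel with padding 1), which matches the MXNet code.

```python
# Hypothetical helper: replays DenseBlock.forward's channel arithmetic only.
def dense_block_channels(in_channels: int, num_convs: int, growth_rate: int):
    """Return the input channels seen by each conv_block and the block's output channels."""
    conv_inputs = []
    channels = in_channels
    for _ in range(num_convs):
        conv_inputs.append(channels)  # what this conv_block receives
        channels += growth_rate       # effect of nd.concat(X, Y, dim=1)
    return conv_inputs, channels


inputs, out = dense_block_channels(in_channels=5, num_convs=4, growth_rate=10)
print(inputs)  # [5, 15, 25, 35]
print(out)     # 45, the channel count of XX
```

Each conv_block sees a wider input than the one before it, even though each one only adds growth_rate channels, which is why the channel count needs the transition layer to keep it in check.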

Transition layer

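```python
# Transition layer: BN -> ReLU -> 1x1 convolution (sets the number of channels),
# then 2x2 average pooling with stride 2 (halves height and width)
def transition_block(num_channels):
    blk = nn.Sequential()
    blk.add(nn.BatchNorm(), nn.Activation('relu'),
            nn.Conv2D(num_channels, kernel_size=1),
            nn.AvgPool2D(pool_size=2, strides=2))
    return blk


blk = transition_block(10)
blk.initialize()
print(blk(XX).shape)
```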

The output is (4, 10, 11, 11): the channels drop from 45 to 10, and the height and width are halved from 22 to 11.

DenseNet model construction and training

```python
# DenseNet model: stem of 7x7 convolution, BN, ReLU and 3x3 max pooling
net = nn.Sequential()
net.add(nn.Conv2D(64, kernel_size=7, strides=2, padding=3),
        nn.BatchNorm(), nn.Activation('relu'),
        nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# num_channels: current number of channels (halved by each transition layer)
# growth_rate: number of output channels of each conv block inside a dense block
num_channels, growth_rate = 64, 32
num_convs_in_dense_blocks = [4, 4, 4, 4]  # 4 dense blocks, 4 conv layers each

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    net.add(DenseBlock(num_convs, growth_rate))
    # Number of output channels of the dense block just added
    num_channels += num_convs * growth_rate
    # Between dense blocks, add a transition layer that halves the channel count
    if i != len(num_convs_in_dense_blocks) - 1:
        num_channels //= 2
        net.add(transition_block(num_channels))

# Finally: BN, ReLU, global average pooling and a fully connected output layer
net.add(nn.BatchNorm(), nn.Activation('relu'), nn.GlobalAvgPool2D(),
        nn.Dense(10))
```
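To see how channels and spatial size evolve through the whole network for a 48x48 input, the construction loop can be replayed in plain Python. This is a sketch, not part of the post: the helper names are hypothetical, and it assumes floor rounding in the output-size formula floor((n + 2p - k) / s) + 1, which is what MXNet's Conv2D and its default ('valid') pooling convention use.

```python
# Hypothetical helpers: shape arithmetic only, no MXNet required.

def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Output height/width of a convolution or pooling window (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_densenet(size=48, num_channels=64, growth_rate=32,
                   num_convs_in_dense_blocks=(4, 4, 4, 4)):
    # Stem: Conv2D(64, 7, strides=2, padding=3) then MaxPool2D(3, strides=2, padding=1)
    size = conv_out(size, 7, stride=2, pad=3)
    size = conv_out(size, 3, stride=2, pad=1)
    trace = [("stem", num_channels, size)]
    for i, num_convs in enumerate(num_convs_in_dense_blocks):
        # Dense block: 3x3 convs with padding=1 keep height and width unchanged
        num_channels += num_convs * growth_rate
        trace.append((f"dense block {i + 1}", num_channels, size))
        if i != len(num_convs_in_dense_blocks) - 1:
            # Transition: 1x1 conv halves channels, AvgPool2D(2, strides=2) halves H and W
            num_channels //= 2
            size = conv_out(size, 2, stride=2)
            trace.append((f"transition {i + 1}", num_channels, size))
    return trace


for name, channels, size in trace_densenet():
    print(f"{name:13s} channels={channels:4d}  size={size}x{size}")
```

Under these assumptions the feature maps are 12x12 after the stem and 6x6, 3x3 and 1x1 after the three transition layers, and the last dense block outputs 248 channels, which GlobalAvgPool2D turns into a 248-dimensional vector for the final Dense(10) layer.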

```python
# Train the model. Because the model is deep, the input height and width are
# resized to 48 instead of 224 to reduce computation; otherwise an
# out-of-memory error is raised.
lr, num_epochs, batch_size, ctx = 0.1, 5, 256, d2l.try_gpu()
net.initialize(force_reinit=True, ctx=ctx, init=init.Xavier())
trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': lr})
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=48)
d2l.train_ch5(net, train_iter, test_iter, batch_size, trainer, ctx, num_epochs)

'''
epoch 1, loss 0.4878, train acc 0.821, test acc 0.871, time 35.3 sec
epoch 2, loss 0.3063, train acc 0.885, test acc 0.862, time 32.2 sec
epoch 3, loss 0.2618, train acc 0.902, test acc 0.865, time 32.2 sec
epoch 4, loss 0.2367, train acc 0.911, test acc 0.909, time 31.9 sec
epoch 5, loss 0.2146, train acc 0.919, test acc 0.905, time 31.8 sec
'''
```

猜你喜欢

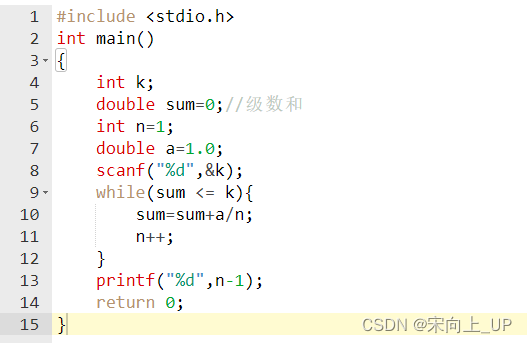

刷题-洛谷-P1152 欢乐的跳

Okaleido上线聚变Mining模式,OKA通证当下产出的唯一方式

Friends let me see this code

Generate SQL script file by initializing the latest warehousing time of vehicle attributes

Based on Baiwen imx6ull_ Ap3216 experiment driven by Pro development board

Install oh my Zsh

刷题-洛谷-P1150 Peter的烟

Esp32-c3 is based on blinker lighting control 10 way switch or relay group under Arduino framework

刷题-洛谷-P1035 级数求和

二叉树基本知识

随机推荐

mujoco+spinningup进行强化学习训练快速入门

刷题-洛谷-P1161 开灯

Excel录制宏

IM系统-消息流化一些常见问题

leetcode--四数相加II

Peripheral system calls SAP's webapi interface

Applet starts wechat payment

Serious [main] org.apache.catalina.util.lifecyclebase Handlesubclassexception initialization component

Canvas judgment content is empty

并发编程之并发工具集

Leetcode 113. path sum II

Hcip day 6 notes

In order to improve efficiency, there are various problems when using parallelstream

Applet enterprise red envelope function

安装mujoco报错:distutils.errors.DistutilsExecError: command ‘gcc‘ failed with exit status 1

Install mujoco and report an error: distutils.errors DistutilsExecError: command ‘gcc‘ failed with exit status 1

Uniapp handles background transfer pictures

Sword finger offer special assault edition day 10

刷题-洛谷-P1089 津津的储蓄计划

[server data recovery] HP EVA server storage raid information power loss data recovery