
Getting started with ffmpeg

2022-06-24 08:15:00 【Wang Lei -ai Foundation】

This guide draws mainly on the official FFmpeg documentation and FFmpeg Basics.

A brief introduction to FFmpeg

FFmpeg command-line tools

| Command-line tool | Description |
|---|---|
| ffmpeg | Fast audio and video encoder/decoder |
| ffplay | Media player |
| ffprobe | Displays information about media files |
| ffserver | Broadcast server for multimedia streaming over HTTP and RTSP |

- ffplay also supports keyboard shortcuts during playback, such as the right arrow key (->) to seek forward.

- The ffplay -showmode option defaults to video; you can also choose rdft (inverse real discrete Fourier transform) or waves (audio waveform display).

ffplay -f lavfi -i testsrc -vf pad='400:300:(ow-iw)/2:(oh-ih)/2:orange'

FFmpeg software libraries

| Software library | Description |
|---|---|
| libavcodec | Codec library for decoding and encoding multimedia |
| libavdevice | Muxing/demuxing library for special devices; a complement to libavformat |
| libavfilter | Filter library that provides a media filtering layer for FFmpeg and for client libraries or applications |
| libavformat | Library for demuxing and muxing audio/video container formats |
| libavutil | Helper library with routines shared by different parts of FFmpeg, such as escaping and audio channel calculations |
| libpostproc | Library for post-processing |
| libswresample | Library for audio resampling |
| libswscale | Library for scaling video images |

- The FFmpeg libraries are built into Chrome to support HTML5 audio and video elements. Other browsers that use FFmpeg include Chromium and the Orygin Web browser.

Common FFmpeg terms and abbreviations

- d/e/a/v/s: decoder/encoder/audio/video/subtitle

- dar/sar/par: display aspect ratio (the shape of the image as shown) / storage aspect ratio (frame width divided by frame height in pixels, determined by the video source) / pixel aspect ratio (the width of a pixel relative to its height, usually 1:1); DAR = PAR x SAR. Note that FFmpeg filter expressions use sar for the sample (pixel) aspect ratio instead.

- iw/ow/ih/oh: input/output width/height

- [TODO: more to be added over time]
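The relation DAR = PAR x SAR can be checked with exact fractions. The following is a minimal Python sketch; the function name and the 720x576 frame with 16:15 pixels are illustrative assumptions, not values taken from the text above.

```python
from fractions import Fraction

def display_aspect_ratio(width, height, par=Fraction(1, 1)):
    """Return DAR = PAR x SAR, where SAR is the stored frame width/height."""
    storage_ratio = Fraction(width, height)   # SAR: frame shape in pixels
    return Fraction(par) * storage_ratio      # DAR: shape as displayed

# Square pixels: the displayed shape equals the stored shape.
print(display_aspect_ratio(1920, 1080))                  # 16/9
# Non-square pixels: a 720x576 frame with 16:15 pixels displays as 4:3.
print(display_aspect_ratio(720, 576, Fraction(16, 15)))  # 4/3
```

Using Fraction rather than floats keeps ratios such as 16/9 exact, which makes them easy to compare.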

The ffmpeg command

ffmpeg [global_options] {[input_file_options] -i INPUT_FILE} ... {[output_file_options] OUTPUT_FILE}...

// For example:

ffmpeg [argument 1]global_options [argument 2]input_file_options -i [argument 3]INPUT_FILE [argument 4]output_file_options [argument 5]OUTPUT_FILE

- For debugging, see the official documentation; for example, use -debug, -fdebug, or -debug_ts, or set -loglevel.

Notes on the command

- ffmpeg is a very fast video and audio converter. It can also grab from live audio/video sources, convert between arbitrary sample rates, resize video on the fly, and apply a wide range of high-quality filters.

- ffmpeg reads from any number of inputs of any kind (regular files, pipes, network streams, capture devices, and so on), each marked with -i and configured by the input options that precede it, and writes to any number of outputs. Anything on the command line that cannot be interpreted as an option (and is not the argument of -i) is treated as an output file.

- In principle each input or output file can contain any number of streams (video, audio, subtitle, attachment, data, ...). The number and types of streams a file may hold are limited by its container format. Which input streams go to which output is decided automatically or with the -map option. [Learning how to use stream specifiers matters a lot when learning ffmpeg commands.]

- As a general rule, options apply to the next file specified, so order matters on the command line. The same option may appear several times; each occurrence applies to the next input or output file that follows it. The exception is global options (for example, the verbosity level), which should be given first and apply to the whole run.

- Input files are referred to by zero-based index: the first input file is 0, the second is 1. Streams within a file are indexed the same way, so 2:3 means the fourth stream of the third input file.

- ffmpeg calls the libavformat library (which contains the demuxers) to read input files and extract packets of encoded data. With multiple input files, ffmpeg tries to keep them synchronized by tracking the lowest timestamp on any active input stream. Encoded packets (unless stream copy is selected; see the description of stream copy) are passed to the decoder, which produces uncompressed frames (raw video, PCM audio, ...). These frames can be processed further by filters. The filtered frames are passed to the encoder, which outputs new encoded packets; the muxer then interleaves them (for example, audio and video packets in a certain order and proportion) and writes them to the output file. The figure below shows the whole flow.

- The output of ffmpeg help is dense. Letters next to an option indicate whether it applies to encoding (E), decoding (D), video (V), audio (A), or subtitles (S).

- SI prefixes can be used in FFmpeg: numeric option values can be written in forms such as 1500000 => 1500K => 1.5M => 0.0015G.

- Many variables can be used in FFmpeg commands, and different filters expose different ones. For example, in the crop filter the x and y positions default to (iw - ow)/2 and (ih - oh)/2; besides iw, ih, ow, and oh, variables such as a and sar are also available.
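The SI-prefix equivalence noted in the list above (1500000 => 1500K => 1.5M => 0.0015G) can be illustrated with a small Python sketch. The helper parse_si is hypothetical and handles only the K, M, and G suffixes mentioned here; it is not FFmpeg's own parser.

```python
from decimal import Decimal

# Decimal multipliers for the suffixes shown above (powers of 1000).
SI_MULTIPLIERS = {"k": 10**3, "K": 10**3, "M": 10**6, "G": 10**9}

def parse_si(text):
    """Convert a string such as '1.5M' to an exact number (hypothetical helper)."""
    suffix = text[-1]
    if suffix in SI_MULTIPLIERS:
        return Decimal(text[:-1]) * SI_MULTIPLIERS[suffix]
    return Decimal(text)

# All four spellings describe the same value.
for value in ("1500000", "1500K", "1.5M", "0.0015G"):
    print(value, "->", int(parse_si(value)))
```

Decimal is used instead of float so that values like 0.0015G convert without rounding error.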

 _______              ______________
|       |            |              |
| input |  demuxer   | encoded data |   decoder
| file  | ---------> | packets      | -----+
|_______|            |______________|      |
                                           v
                                       _________
                                      |         |
                                      | decoded |
                                      | frames  |
                                      |_________|
 ________             ______________       |
|        |           |              |      |
| output | <-------- | encoded data | <----+
| file   |   muxer   | packets      |   encoder
|________|           |______________|

Show output preview

- Preview with the ffplay media player:

ffplay -i input_file ... test_options

- Preview with the SDL output device:

ffmpeg -f lavfi -i rgbtestsrc -pix_fmt yuv420p -f sdl Example

Filters, filterchains, and filtergraphs

- In multimedia processing, a filter is a software tool that modifies the input before it is encoded to the output. Filters are divided into audio filters and video filters. FFmpeg has many built-in multimedia filters, and they can be combined in many ways. FFmpeg's filter API (application programming interface) is the libavfilter library, which allows filters to have multiple inputs and outputs. Filters are placed between inputs and outputs with the -vf option for video filters and the -af option for audio filters.

# Rotate 90° clockwise with the transpose filter
ffplay -f lavfi -i testsrc -vf transpose=1
# Slow the input audio down to 80% of its tempo with the atempo audio filter
ffmpeg -i input.mp3 -af atempo=0.8 output.mp3

- Before encoding, ffmpeg can process raw audio and video with filters from the libavfilter library. From ffmpeg's point of view there are only 2 kinds of filtergraph: simple and complex.

- Simple filtergraphs (-filter filtergraph) have exactly 1 input and 1 output, both of the same type. They can be thought of as one extra step between the uncompressed frames and re-encoding.

- Complex filtergraphs (-filter_complex filtergraph) cover the cases that cannot be described as a linear process applied to one stream, for example when there are multiple inputs and/or outputs, or when the output stream type differs from the input.

- Complex filtergraphs are usually given with -lavfi or -filter_complex; the two are equivalent. [This is easily confused with ffplay -f lavfi, which supplies input from the lavfi virtual device.]

- -filter_complex follows some rules:

  - Inputs are labeled as [file_index:stream_specifier], and labels can be referenced in -map; if stream_specifier matches multiple streams, the first one is used.

  - An unlabeled input automatically takes the first unused stream; an unlabeled output is automatically written to the first output file.

  - For the detailed syntax, see the filtergraph syntax section of the official documentation.

 _________                        ______________
|         |                      |              |
| decoded |                      | encoded data |
| frames  |\                   _ | packets      |
|_________| \                  /||______________|
             \   __________   /
  simple     _\||          | /  encoder
  filtergraph   | filtered |/
                | frames   |
                |__________|

 _________
|         |
| input 0 |\                    __________
|_________| \                  |          |
             \   _________    /| output 0 |
              \ |         |  / |__________|
 _________     \| complex | /
|         |     |         |/
| input 1 |---->| filter  |\
|_________|     |         | \   __________
               /| graph   |  \ |          |
              / |         |   \| output 1 |
 _________   /  |_________|    |__________|
|         | /
| input 2 |/
|_________|

- Filters are usually combined into filterchains (comma-separated sequences of filters) and filtergraphs (semicolon-separated sequences of filterchains). In a filtergraph, link labels can name the output of a filterchain so that later filterchains can use it as input. For example, suppose we want to compare the input video with the output of the hqdn3d filter. Without a filtergraph we need at least two commands, for example:

# -vf <=> -filter:v
ffmpeg -i input.mpg -vf hqdn3d,pad=2*iw output.mp4
ffmpeg -i output.mp4 -i input.mpg -filter_complex overlay=w compare.mp4

With a filtergraph that uses link labels, one command is enough:

# The split filter divides the input into 2 outputs labeled [a] and [b].
# [a] is the input of the second filterchain, which pads the frame to make room for the comparison and labels the result [A].
# [b] is the input of the third filterchain, which applies hqdn3d and labels the result [B].
# The last filterchain feeds [A] and [B] to the overlay filter to produce the final comparison.
ffplay -i i.mpg -vf 'split[a][b];[a]pad=2*iw[A];[b]hqdn3d[B];[A][B]overlay=w'

Here is another example:

ffplay -f lavfi -i rgbtestsrc -vf "split[a][b];[a]pad=2*iw[1];[b]vflip[2];[1][2]overlay=w"
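The comma and semicolon structure of a filtergraph string can be sketched in Python. The helper build_filtergraph is hypothetical: it only joins strings and does not validate filter names or labels.

```python
def build_filtergraph(chains):
    """Join filters with ',' into filterchains and filterchains with ';'."""
    return ";".join(",".join(filters) for filters in chains)

# Each inner list is one filterchain; link labels such as [a] are written
# as part of the filter strings, exactly as on the command line.
comparison = build_filtergraph([
    ["split[a][b]"],
    ["[a]pad=2*iw[A]"],
    ["[b]hqdn3d[B]"],
    ["[A][B]overlay=w"],
])
print(comparison)
# split[a][b];[a]pad=2*iw[A];[b]hqdn3d[B];[A][B]overlay=w

# A single chain with two filters, as in the two-command version.
print(build_filtergraph([["hqdn3d", "pad=2*iw"]]))  # hqdn3d,pad=2*iw
```

The resulting string is what would be passed to -vf or -filter_complex.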

Selecting media streams

- By default, ffmpeg includes only one stream of each type (video, audio, subtitle) from the input files in each output file, picking the "best" one: for video the stream with the highest resolution, for audio the stream with the most channels, for subtitles the first subtitle stream. When several streams of the same type rate equally, the one with the lowest index is chosen. These defaults can be disabled per type with -vn/-an/-sn/-dn. For full manual control, use the -map option, which disables the defaults and selects exactly the streams specified. The argument format of -map is:

-map [-]input_file_id[:stream_specifier][?][,sync_file_id[:stream_specifier]] | [linklabel] (output)

For example:

- -map 0 selects all streams of all types from input file 0.

- -map i:v selects all video streams from the input file with index i; -map i:a selects all audio streams, -map i:s all subtitle streams, and so on.

- The special options -an, -vn, and -sn exclude all audio, video, or subtitle streams respectively.

- Generally, selecting a stream is independent of how it is processed [except for subtitles] [the codec options specify how a stream is processed; if none is given, ffmpeg picks a default]. If a subtitle encoder is specified, the first subtitle stream is included.

- Besides -map, stream specifiers are also used with many other options:

Stream specifier forms

| Specifier form | Description |
|---|---|
| stream_index | Selects the stream with this index (number) |
| stream_id | Selects the stream by its format-specific ID |

- For example, -codec:a:1 ac3 uses the ac3 codec for stream a:1 (the second audio stream), while -codec: copy or -codec copy copies all streams without re-encoding.

- To set the audio and video bit rates with the -b option, we can use the command:

ffmpeg -i input.mpg -b:a 128k -b:v 1500k output.mp4

# In the command below, out1.mkv receives the best video, the best audio, and the first subtitle stream found in A.avi and B.mp4;
# out2.wav accepts only audio, so it receives just the best audio stream.
# out3.mov contains only the audio from B.mp4, which is copied without re-encoding.
ffmpeg -i A.avi -i B.mp4 out1.mkv out2.wav -map 1:a -c:a copy out3.mov

# In the command below, out1.mkv contains only audio and video: the default subtitle encoder of the Matroska muxer is text-based, but the subtitles in C.mkv are image-based,
# so the subtitle stream is not selected. out2.mkv contains only video and subtitles, because a subtitle encoder is specified and audio is excluded by -an.
ffmpeg -i C.mkv out1.mkv -c:s dvdsub -an out2.mkv

# In the command below, overlay needs two video inputs but none are specified, so the video streams of A.avi and C.mkv are selected automatically to produce out1.mp4.
# out1.mp4 also gets the best audio stream, but no subtitles, because MP4 has no default subtitle encoder and none was specified.
# out2.srt receives the first text-based subtitle stream.
ffmpeg -i A.avi -i C.mkv -i B.mp4 -filter_complex "overlay" out1.mp4 out2.srt

# The command below uses a labeled filtergraph. Note that the labels outv1 and outv2 are each used exactly once; unlabeled outputs go to the first output file.
# outv1, overlay, and aresample are all written to out1.mp4, and -an does not suppress filtergraph outputs. The content of out2.mkv is decided by automatic stream selection.
# out3.mkv contains the other output of the hue filter plus the first audio stream of B.mp4.
ffmpeg -i A.avi -i B.mp4 -i C.mkv -filter_complex "[1:v]hue=s=0,split=2[outv1][outv2];overlay;aresample" \
       -map '[outv1]' -an out1.mp4 \
       out2.mkv \
       -map '[outv2]' -map 1:a:0 out3.mkv

Lavfi virtual device

The lavfi virtual device is often used to display test patterns, for example SMPTE bars:

ffplay -f lavfi -i smptebars

- Here -f forces the input/output format; normally it is inferred from the file extension.

Another commonly used source is the color source, which can be displayed with:

ffplay -f lavfi -i color=c=blue

Color names

- Some video filters and sources take a color parameter that specifies the desired color. There are 4 ways to specify a color (the default is black):

Displaying help and capabilities

- The FFmpeg tools have extensive console help, which can be shown in full or for a particular element such as a decoder or encoder.

ffmpeg -? or ffmpeg -h
ffmpeg -h long or ffmpeg -h full
ffmpeg -? topic or ffmpeg -h topic

For example, to display information about the FLV decoder, we can use the following command:

ffmpeg -h decoder=flv

- Other help

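| Help item | Command |
|---|---|
| Available bitstream filters | ffmpeg -bsfs |
| Available decoders | ffmpeg -decoders |
| Available encoders | ffmpeg -encoders |
| Available filters | ffmpeg -filters |
| Available formats | ffmpeg -formats |
| Available audio channel layouts | ffmpeg -layouts |
| Available pixel formats | ffmpeg -pix_fmts |
| Available protocols | ffmpeg -protocols |
| Available audio sample formats | ffmpeg -sample_fmts |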

Bit rate, frame rate, and file size

Introduction to frame rate (frequency)

- The frame rate is the number of frames per second (FPS or fps) encoded into a video file. The human eye needs at least about 15 fps to perceive continuous motion. Frame rate is also called frame frequency, and its unit is hertz (Hz); LCD displays usually run at 60 Hz.

- There are two kinds of frame rate: interlaced (an i follows the FPS number) and progressive (a p follows the FPS number). Interlaced frame rates are used in television.

| Common video frame rate | Description |
|---|---|
| 24p or 23.976 | The standard frame rate of the film industry since the 1920s; films are shot at this rate. When they are broadcast on NTSC television, the frame rate is reduced to 24×1000/1001 = 23.976; for PAL/SECAM television, the film's frame rate is increased to 25 frames per second. |
| 25p | Because 25 progressive frames convert easily to 50 interlaced TV fields, this is the standard film and television frame rate in countries with a 50 Hz frequency (PAL and SECAM standards). |
| 30p | A common video frame rate, widely used in digital cameras and camcorders. It can be used for 60 Hz (NTSC) interlaced-field television broadcasting. |
| 50i | The standard field rate (interlaced frame rate) of PAL and SECAM television. |
| 60i or 59.94 | The standard field rate of NTSC television. After the invention of color TV the rate was reduced to 60 * 1000/1001 = 59.94 to prevent interference between the chrominance subcarrier and the sound carrier. |
| 50p/60p | Common frame rates for HDTV (high-definition television). |
| 48p | Proposed frame rate; it has been tested |
| 72p | Proposed frame rate; it has been tested |
| 120p | Progressive format standardized for UHDTV (ultra-high-definition television), planned as a single global "double-precision" frame rate for UHDTV (instead of 100 Hz for the PAL standard and 119.88 Hz for the NTSC standard) |

Setting the frame rate

- To set the video frame rate, we use the -r option before the output file. The syntax is:

ffmpeg -i input -r fps output

- Another way to set the frame rate is the fps filter, which is especially useful in filter chains. The syntax is:

fps=fps=number_of_frames

- Besides numeric values, both methods accept the following predefined text values:

| Abbreviation | Exact value | Corresponding FPS |
|---|---|---|
| ntsc-film | 24000/1001 | 23.976 |
| film | 24/1 | 24 |
| pal, qpal, spal | 25/1 | 25 |
| ntsc, qntsc, sntsc | 30000/1001 | 29.97 |

# Change the frame rate of an .avi file from 25 to 30 fps
ffmpeg -i input.avi -r 30 output.mp4
# Change the frame rate of clip.mpg to 25 with the fps filter
ffmpeg -i clip.mpg -vf fps=fps=25 clip.webm
# Set the frame rate to 29.97 fps
ffmpeg -i input.avi -r ntsc output.mpg
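The exact values behind the predefined abbreviations are fractions, and the rounded FPS figures follow from them. A short Python sketch of that arithmetic follows; the dictionary and the helper frames_in are illustrative names, not part of FFmpeg.

```python
from fractions import Fraction

# Exact values of some predefined abbreviations from the table above.
NAMED_RATES = {
    "ntsc-film": Fraction(24000, 1001),
    "film": Fraction(24, 1),
    "pal": Fraction(25, 1),
    "ntsc": Fraction(30000, 1001),
}

def frames_in(duration_seconds, rate_name):
    """Number of whole frames shown in the given duration (hypothetical helper)."""
    return int(duration_seconds * NAMED_RATES[rate_name])

for name, rate in NAMED_RATES.items():
    print(f"{name}: {float(rate):.3f} fps")

# One hour of ntsc video holds slightly fewer frames than 30 * 3600.
print(frames_in(3600, "ntsc"))
```

Keeping the rate as a fraction avoids the drift that appears when 29.97 is used as if it were exact.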

Bit rate

Bit rate is a parameter that determines the overall audio or video quality. It specifies the number of bits processed per unit of time; in FFmpeg, bit rate is expressed in bits per second.

- The bit rate determines how many bits are used to store 1 second of the encoded stream. It is set with the -b option; the forms -b:a and -b:v select audio or video explicitly. The related options are:

-b (encoding, audio/video), -ab (encoding, audio), -bt (encoding, video; bit rate tolerance)

- Types of bit rate:

| Type | Abbreviation | Description |
|---|---|---|
| Average bit rate | ABR | The average number of bits processed per second. This value is also used with VBR encoding when the output must reach a certain file size |
| Constant bit rate | CBR | The number of bits processed per second is constant. This is impractical for storage, because fast-moving parts need more bits than static ones; CBR is mainly used for multimedia streaming |
| Variable bit rate | VBR | The number of bits processed per second varies: complex scenes or sounds are encoded with more data. Compared with CBR, VBR gives better quality at the same file size (VBR encoding needs more time and CPU power than CBR, but recent media players decode VBR without problems) |

- You can cap the output file size with -fs. ffmpeg stops writing once the limit is reached; it does not adapt the bit rate to fit. The limit is given in bytes, and in FFmpeg's number syntax a trailing B multiplies the value by 8, so 10M (not 10MB) means 10,000,000 bytes:

ffmpeg -i input.avi -fs 10M output.mp4

- The file size can be calculated from the bit rate (ignoring muxing overhead and file metadata):

file_size = (video_bitrate + audio_bitrate) * time_in_seconds / 8
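The formula above can be applied in both directions: to estimate a file size from the bit rates, or to find the video bit rate that fits a target size. A minimal Python sketch follows; the function names and sample numbers are illustrative assumptions, and muxing overhead is ignored as in the formula.

```python
def estimate_file_size_bytes(video_bitrate, audio_bitrate, seconds):
    """file_size = (video_bitrate + audio_bitrate) * time_in_seconds / 8"""
    return (video_bitrate + audio_bitrate) * seconds / 8

def video_bitrate_for_size(target_bytes, audio_bitrate, seconds):
    """Solve the same formula for the video bit rate (bits per second)."""
    return target_bytes * 8 / seconds - audio_bitrate

# One minute at 1500k video + 128k audio.
size = estimate_file_size_bytes(1_500_000, 128_000, 60)
print(f"{size / 1_000_000:.2f} MB")   # 12.21 MB

# Video bit rate that fits 10 minutes into about 50 MB with 128k audio.
print(round(video_bitrate_for_size(50_000_000, 128_000, 600)))
```

The second function is the usual way to pick a -b:v value when the output has to stay under a size budget.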

Resizing and scaling video

Resizing video

- The width and height of the output video can be set with the -s option before the output file name [equivalent to appending a scale filter at the end of the filter chain]. The resolution is given in WxH format, where W is the width in pixels and H is the height in pixels.

- If -s is given before the input, it acts as the input video_size option.

- There are also predefined names for video frame sizes, such as vga = 640x480, hd720 = 1280x720, sxga = 1280x1024, hd1080 = 1920x1080.

- Video is usually resized to a smaller resolution than the source; this is called downsampling. At smaller sizes some detail is lost, which follows from the Nyquist-Shannon sampling theorem: to reconstruct a sampled signal completely, the sampling frequency must be at least 2 times the highest frequency in the source. In practice, a small detail survives downscaling only if its size multiplied by the scale ratio still rounds to at least 1 pixel, as the worked example below shows.

- Another related option is -aspect, which sets the display aspect ratio.

# The following two commands are equivalent
ffmpeg -i input.avi -s 640x480 output.avi
ffmpeg -i input.avi -s vga output.avi

# For example, an 800x600 (SVGA) video contains details 2 pixels wide. When it is scaled down to 640x480 (VGA), the scale ratio is 0.8 and the 2 pixels are still scaled to 2 pixels:
# 640 pixels / 800 pixels = 0.8
# 2 pixels * 0.8 = 1.6 ≈ 2 pixels
# But when the video is scaled down to 160x120 (QQVGA), the detail is lost:
# 160 pixels / 800 pixels = 0.2
# 2 pixels * 0.2 = 0.4 ≈ 0 pixels
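The worked example above is plain arithmetic and can be reproduced in a few lines of Python. The helper name scaled_detail is an assumption made for this sketch.

```python
def scaled_detail(detail_px, source_width, target_width):
    """Return the scale ratio and the detail size after scaling, in whole pixels."""
    ratio = target_width / source_width
    return ratio, round(detail_px * ratio)

print(scaled_detail(2, 800, 640))  # (0.8, 2): the detail survives
print(scaled_detail(2, 800, 160))  # (0.2, 0): the detail is lost
```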

Upscaling filter

- Resizing video to a larger frame size is rare. A special filter for smoothing an enlarged source is the super2xsai filter:

# Scale a 128x96 video up to 256x192 pixels
ffmpeg -i phone_video.3gp -vf super2xsai output.mp4

Advanced scaling techniques

- To control where in the processing chain scaling happens, use the scale filter directly:

scale=width:height[:interl={1|-1}]

Some of the available variables:

- iw or in_w: input width; ih or in_h: input height
- a: aspect ratio, same as iw/ih
- sar: input sample aspect ratio, same as dar/a
- dar: input display aspect ratio, same as a*sar

# Scale the input video to half its width and height
ffmpeg -i input.mpg -vf scale=iw/2:ih/2 output.mp4
# Scale to a predefined width while keeping the proportions
ffmpeg -i input.avi -vf scale=400:400/a output.mp4
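To see which output sizes the two scale expressions above produce, the same arithmetic can be done in Python. This is a sketch for integer frame sizes; the function names and sample resolutions are illustrative assumptions.

```python
from fractions import Fraction

def scale_keep_aspect(in_w, in_h, out_w):
    """Mimic scale=out_w:out_w/a, where a = iw/ih, for integer sizes."""
    a = Fraction(in_w, in_h)
    return out_w, int(out_w / a)

def halve(in_w, in_h):
    """Mimic scale=iw/2:ih/2."""
    return in_w // 2, in_h // 2

print(scale_keep_aspect(640, 480, 400))  # (400, 300)
print(halve(1280, 720))                  # (640, 360)
```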

Cropping video

- Use the crop video filter. Syntax:

crop=ow[:oh[:x[:y[:keep_aspect]]]]

Available variables:

- x, y: the computed values of x (horizontal pixels from the top-left corner) and y (vertical pixels), evaluated for every frame; x defaults to (iw - ow)/2 and y to (ih - oh)/2
- in_w, iw: input width; in_h, ih: input height
- out_w, ow: output (cropped) width, default iw; out_h, oh: output (cropped) height, default ih
- a: aspect ratio, same as iw/ih; sar: input sample aspect ratio; dar: input display aspect ratio, equal to a*sar
- hsub, vsub: horizontal and vertical chroma subsample values; for pixel format yuv422p, hsub is 2 and vsub is 1
- n: number of the input frame, starting from 0
- pos: position of the input frame in the file, NAN if unknown
- t: timestamp in seconds, NAN if the input timestamp is unknown

- Cropping the center: as the defaults above show, if x and y are not set the crop is centered automatically. For example, the following crops the central w:h area:

ffmpeg -i input_file -vf crop=w:h output_file

- Detecting the crop area automatically: use the cropdetect filter. Syntax:

cropdetect[=limit[:round[:reset]]]

The limit parameter is a threshold: areas with a gray value below limit are considered for cropping.
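The default crop offsets x = (iw - ow)/2 and y = (ih - oh)/2 place the crop window in the center of the frame. A minimal Python sketch of that calculation follows; the function name and sample sizes are assumptions for illustration.

```python
def centered_crop(iw, ih, ow, oh):
    """Default crop offsets: x = (iw - ow)/2, y = (ih - oh)/2."""
    if ow > iw or oh > ih:
        raise ValueError("crop area must fit inside the input frame")
    return (iw - ow) // 2, (ih - oh) // 2

x, y = centered_crop(640, 480, 320, 240)
print(f"crop=320:240:{x}:{y}")  # crop=320:240:160:120
```

Writing the offsets explicitly, as in the printed filter string, gives the same result as omitting them.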

Padding video

- Use the pad filter. The syntax is:

pad=width[:height[:x[:y[:color]]]]

Example

ffplay -f lavfi -i testsrc -vf pad=380:360:30:30:pink -t 50
# Pad a video from 4:3 to 16:9
ffmpeg -i input -vf pad=ih*16/9:ih:(ow-iw)/2:0:color output
# Pad a video from 16:9 to 4:3
ffmpeg -i input -vf pad=iw:iw*3/4:0:(oh-ih)/2:color output
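The two aspect-ratio padding expressions above can be checked numerically. The Python sketch below computes the padded size and the centered offsets; the function name and the sample resolutions are illustrative assumptions.

```python
from fractions import Fraction

def pad_to_aspect(iw, ih, target):
    """Return (ow, oh, x, y) that pads a frame to the target aspect ratio, centered."""
    target = Fraction(target)
    if Fraction(iw, ih) < target:          # too narrow: add bars left and right
        ow, oh = int(ih * target), ih
    else:                                  # too wide: add bars top and bottom
        ow, oh = iw, int(iw / target)
    return ow, oh, (ow - iw) // 2, (oh - ih) // 2

print(pad_to_aspect(1440, 1080, Fraction(16, 9)))  # (1920, 1080, 240, 0)
print(pad_to_aspect(1920, 1080, Fraction(4, 3)))   # (1920, 1440, 0, 180)
```

The first call corresponds to pad=ih*16/9:ih:(ow-iw)/2:0 and the second to pad=iw:iw*3/4:0:(oh-ih)/2.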

Flipping and rotating video

- To flip horizontally, use the hflip filter. Syntax:

-vf hflip

- To flip vertically, use the vflip filter. Syntax:

-vf vflip

- To rotate, use the transpose filter. Syntax:

transpose={0, 1, 2, 3}

The meaning of each value is shown in the help output in the example below.

Example

ffplay -f lavfi -i testsrc -vf hflip

# Viewing the help for a filter

> ffmpeg -h filter=transpose

Filter transpose

Transpose input video.

slice threading supported

Inputs:

#0: default (video)

Outputs:

#0: default (video)

transpose AVOptions:

dir <int> ..FV....... set transpose direction (from 0 to 7) (default cclock_flip)

cclock_flip 0 ..FV....... rotate counter-clockwise with vertical flip

clock 1 ..FV....... rotate clockwise

cclock 2 ..FV....... rotate counter-clockwise

clock_flip 3 ..FV....... rotate clockwise with vertical flip

passthrough <int> ..FV....... do not apply transposition if the input matches the specified geometry (from 0 to INT_MAX) (default none)

none 0 ..FV....... always apply transposition

portrait 2 ..FV....... preserve portrait geometry

landscape 1 ..FV....... preserve landscape geometry

Blurring, sharpening, and denoising

- For blurring, use the boxblur filter. Syntax:

boxblur=luma_r:luma_p[:chroma_r:chroma_p[:alpha_r:alpha_p]]

The filter expects 2, 4, or 6 parameters; r = radius, p = power, that is, how many times the blur is applied.

- Another blur filter is smartblur. Syntax:

smartblur=luma_r:luma_s:luma_t[:chroma_r:chroma_s:chroma_t]

r = radius, s = strength, t = threshold

- For sharpening, use the unsharp filter. Syntax:

unsharp=l_msize_x:l_msize_y:l_amount:c_msize_x:c_msize_y:c_amount

All parameters are optional; if not set, the default is 5:5:1.0:5:5:0.0

- For noise reduction you can use the denoise3d filter, which is part of the mp filter. Syntax:

mp=denoise3d[=luma_spatial[:chroma_spatial[:luma_tmp[:chroma_tmp]]]]

- Another noise reduction filter is hqdn3d, an advanced version of denoise3d. The syntax is:

hqdn3d=[luma_spatial[:chroma_spatial[:luma_tmp[:chroma_tmp]]]]

- The -nr option can also reduce noise. Its value is an integer from 0 to 100000, where 0 is the default, for example:

ffplay -i input.avi -nr 500

Overlay

- Overlaying uses the overlay filter. The syntax is:

overlay[=x:y[:rgb={0, 1}]]

The parameters x and y are optional and default to 0. The rgb parameter is optional and its value is 0 or 1. The filter places the second input on top of the first at the specified position, for example:

ffmpeg -i input1 -i input2 -filter_complex overlay=x:y output

- Note that -filter_complex is used instead of -vf, because there are two inputs.

- To make the overlay appear from a certain moment, use -itsoffset:

ffmpeg -i video_with_timer.mp4 -itsoffset 5 -i logo.png -filter_complex overlay timer_with_logo.mp4

# The important variables of overlay are:
# main_w or W: main input width; main_h or H: main input height
# overlay_w or w: overlay input width; overlay_h or h: overlay input height
# The following command places the overlay in the top-right corner
ffmpeg -i pair.mp4 -i logo.png -filter_complex overlay=W-w pair2.mp4
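The W, H, w, and h variables make it easy to pin an overlay to any corner. The Python sketch below shows the offsets for the common positions; the function, the corner names, and the sample sizes are assumptions made for illustration.

```python
def overlay_position(W, H, w, h, corner):
    """Return (x, y) for placing a w x h overlay on a W x H main video."""
    positions = {
        "top-left": (0, 0),
        "top-right": (W - w, 0),          # same as overlay=W-w
        "bottom-left": (0, H - h),
        "bottom-right": (W - w, H - h),
        "center": ((W - w) // 2, (H - h) // 2),
    }
    return positions[corner]

print(overlay_position(1280, 720, 100, 50, "top-right"))     # (1180, 0)
print(overlay_position(1280, 720, 100, 50, "bottom-right"))  # (1180, 670)
```

On the command line the same positions would be written as expressions, for example overlay=W-w:H-h for the bottom-right corner.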

Adding text to video

- To add text, use the drawtext filter, which draws text from a text file or a string onto the video and can be adjusted with many parameters. The text is loaded from the file given by the textfile parameter or entered directly with the text parameter. The other required parameter is fontfile, which specifies the font to use. The text position is set with the x and y parameters. The syntax is:

drawtext=fontfile=font_f:text=text1[:p3=v3[:p4=v4[...]]]

p3, p4, ... stand for parameter #3, parameter #4, and so on. This filter has many parameters; they can be listed with ffmpeg -h filter=drawtext.

- Using the t variable in the position creates a moving-text effect.

ffplay -f lavfi -i color=white -vf drawtext=fontfile=/Library/Fonts/Baskerville.ttc:text=Welcome
# Horizontally moving text
ffmpeg -f lavfi -i color=orange -vf drawtext="text=hello:x=w-t*50:y=h-th:fontcolor=blue:fontsize=30" -t 50 test.mp4

Encoding and decoding

- Encoding and decoding are complex: there are many kinds of codecs and many parameters. Start by understanding the difference between file formats and codecs.

- A file format corresponds to a multimedia container; it is a packaging format. Use the following to see all supported file formats (containers), such as mp4 and mp3:

ffmpeg -formats

- A codec is the algorithm used for encoding and decoding. Use the following to see all codecs:

ffmpeg -codecs

On the command line the codec is specified with the -c or -codec option. The syntax is: -codec[:stream_specifier] codec_name

- To change only the container and keep the codec, use the -c copy, -c:a copy, or -c:v copy options:

ffmpeg -i input.avi -q 1 -c copy output.mov

- Codecs can be specified for both input and output files. If the output contains multiple streams, each stream can use a different codec. If we specify an output format without a codec, ffmpeg chooses the default codec; for example, the default codec for .avi is mpeg4, and for .mkv/.mov/.mp4 it is h264.

- Because there are so many parameters, use the following together with the ffmpeg-formats documentation to see the help:

ffmpeg -h encoder={name}

# The syntax is as follows: before an input it selects the decoder, before an output it selects the encoder
-c[:stream_specifier] codec (input/output,per-stream)
-codec[:stream_specifier] codec (input/output,per-stream)
# Several codec options may apply to one stream; the last matching -c option wins
# For example, the following command encodes the second video stream with libx264 and the 138th audio stream with libvorbis, and copies all other streams
ffmpeg -i INPUT -map 0 -c copy -c:v:1 libx264 -c:a:137 libvorbis OUTPUT
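The "last matching -c option wins" rule can be modeled in a few lines of Python. This is a simplified sketch, not FFmpeg's actual option parser: it supports only the empty specifier, a stream type, and type:index, and all names are illustrative.

```python
def resolve_codecs(streams, options):
    """streams: list of (type, index_within_type); options: list of (specifier, codec).

    A specifier is '' (all streams), 'v' (all of a type), or 'v:1' (one stream).
    The last matching option wins.
    """
    result = {}
    for stream_type, index in streams:
        chosen = None
        for spec, codec in options:
            parts = spec.split(":") if spec else []
            type_ok = not parts or parts[0] == stream_type
            index_ok = len(parts) < 2 or int(parts[1]) == index
            if type_ok and index_ok:
                chosen = codec          # later matches overwrite earlier ones
        result[f"{stream_type}:{index}"] = chosen
    return result

# Mirrors: -c copy -c:v:1 libx264 -c:a:137 libvorbis
streams = [("v", 0), ("v", 1), ("a", 0)]
options = [("", "copy"), ("v:1", "libx264"), ("a:137", "libvorbis")]
print(resolve_codecs(streams, options))
# {'v:0': 'copy', 'v:1': 'libx264', 'a:0': 'copy'}
```

Only the second video stream (v:1) picks up libx264; every other stream keeps the earlier, more general copy setting.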

Time operations

- Two styles of time value are generally accepted:

[-]HH:MM:SS[.m…] and [-]S+[.m…]

HH is hours, MM is minutes, SS or S is seconds, and m is the decimal fraction of a second.

- To set the duration of the output [cut off the end], use the -t option to limit the time, or -frames to limit the number of frames [-aframes <=> -frames:a applies to audio; -dframes and -vframes work the same way].

- To start from a certain point in the input [cut off the beginning], use the -ss (seek from start) option. Combining -ss and -t starts at ss and processes a duration of t.

- To delay an input stream, use -itsoffset together with -map.

- Use the -timestamp option to record a timestamp in the container.

- Timestamps and time bases

- Changing audio and video speed: video speed is changed with the setpts filter (set presentation timestamp), whose syntax is setpts=expression; audio speed is changed with the atempo filter.

- The asyncts audio filter can use timestamps to synchronize audio data.

ffmpeg -i music.mp3 -t 180 music_3_minutes.mp3
ffmpeg -i video.avi -vframes 15000 video_10_minutes.avi
ffmpeg -i input.avi -ss 10 output.mp4
# Save the 5th minute of video.mpg
ffmpeg -i video.mpg -ss 240 -t 60 clip_5th_minute.mpg
# The audio is 1.5 seconds ahead of the video; synchronize them. Here -c stands for -codec[:stream_specifier] codec <=> -c[:stream_specifier] codec (input/output,per-stream)
ffmpeg -i input.mov -map 0:v -map 0:a -itsoffset 1.5 -c:a copy -c:v copy output.mov
# The video is 5 seconds ahead; synchronize them
ffmpeg -i input.mov -map 0:v -itsoffset 5 -map 0:a -c:a copy -c:v copy output.mov
# Two files: delay the audio stream by 3 seconds
ffmpeg -i v.mpg -itsoffset 3 -i a.mp3 -map 0:v:0 -map 1:a:0 output.mp4
# Play the video at 3x speed
ffplay -f lavfi -i testsrc -vf setpts=PTS/3
# Play the input audio at 2x speed
ffplay -i speech.mp3 -af atempo=2
# Use timestamps to synchronize the data in music.mpg
ffmpeg -i music.mpg -af asyncts=compensate=1 -f mpegts music.ts
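Both time styles describe a number of seconds, and setpts=PTS/3 simply divides every timestamp. The Python sketch below illustrates this; parse_time is a hypothetical helper covering only the two forms shown above, not FFmpeg's own parser.

```python
def parse_time(text):
    """Convert '[-]HH:MM:SS[.m...]' or '[-]S+[.m...]' to seconds."""
    sign = -1 if text.startswith("-") else 1
    fields = text.lstrip("-").split(":")
    seconds = 0.0
    for field in fields:                 # works for S, MM:SS and HH:MM:SS
        seconds = seconds * 60 + float(field)
    return sign * seconds

print(parse_time("180"))          # 180.0
print(parse_time("00:04:00"))     # 240.0
print(parse_time("-00:00:01.5"))  # -1.5

# setpts=PTS/3 divides every presentation timestamp by 3 (3x speed).
print([pts / 3 for pts in (0, 3, 6)])  # [0.0, 1.0, 2.0]
```

So -ss 240 and -ss 00:04:00 refer to the same position in the input.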

Mathematical functions

- Many FFmpeg options take numeric arguments, and some of them accept expressions that can contain arithmetic operators, constants and mathematical functions. Expressions are typically used with audio and video filters and sources, such as aevalsrc (audio source) and boxblur/overlay/crop/drawtext/scale (video filters). Many built-in mathematical functions are available, for example:

abs(x), asin(x), eq(x, y), gte(x, y), if(expr1, expr2), min(x, y), while(cond, expr), and so on.

# Generate a 523.251 Hz tone, the note C5 (tenor high C)
ffplay -f lavfi -i aevalsrc='sin(523.251*2*PI*t)'
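Where the 523.251 Hz figure comes from can be checked with a short Python sketch. It assumes twelve-tone equal temperament with A4 tuned to 440 Hz; the function names are hypothetical and only mirror the sin(f*2*PI*t) expression passed to aevalsrc.

```python
import math

A4_HZ = 440.0  # assumed tuning reference

def note_frequency(semitones_from_a4):
    """Equal temperament: each semitone multiplies the frequency by 2**(1/12)."""
    return A4_HZ * 2 ** (semitones_from_a4 / 12)

def sine_sample(freq_hz, t):
    """Same expression as aevalsrc='sin(f*2*PI*t)', evaluated at time t in seconds."""
    return math.sin(freq_hz * 2 * math.pi * t)

c5 = note_frequency(3)       # C5 lies three semitones above A4
print(round(c5, 3))          # 523.251
print(sine_sample(c5, 0.0))  # 0.0
```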

Metadata and subtitles

- In a media file, metadata includes additional information such as artist, author, date, genre, publisher and title, and does not appear in the video frames. Subtitles are text data displayed near the bottom of the video frame. They are usually stored in a separate file, although some container formats (such as VOB) can include the subtitles.

- View metadata: use ffprobe; ffplay also displays metadata during playback.

- Create metadata: use -metadata k1=v1 -metadata k2=v2

- Save metadata to a file: -f ffmetadata; load metadata from a file into a media file: -i x -i y

- Delete metadata: -map_metadata -1

- Subtitles: there are many subtitle formats, such as ass and srt; the file extension identifies the corresponding codec (ssa/srt).

- Some filters can burn subtitles into the video stream. The syntax is subtitles=filename[:original_size], for example: ffmpeg -i video.avi -vf subtitles=titles.srt video.mp4

# View the metadata of an mp3 file

> ffprobe ~/Downloads/'Jack Johnson - Imagine.mp3'

... (part of the output omitted)

Input #0, mp3, from '~/Downloads/Jack Johnson - Imagine.mp3':

Metadata:

encoder : Lavf56.4.101

disc : 1

track : 11

artist : Jack Johnson

comment : 163 key(Don't modify):L64FU3W4YxX3ZFTmbZ+8/UnVjCnqpKdEibASol9of8KaPX//btdBYF+VVRkXkEDD/iGR355GeUXxh0IUGR/GwBNi9G4ezN6Z7cPYKcJ3G01aqRPkU1umKYjjZLXvNFMCMLrmf5mGqVQo+hTaZlpidWU9kf8oTXfXHN4cj2PKPLh7HFCdJ/oKzLj3tB/BKqQJjZ1moy59PXmgevX6IGXydNfGHhxwts+3ZlZ/1FBko

title : Imagine

album : The Mango Tree

# Add new metadata

ffmpeg -i ~/Downloads/'Jack Johnson - Imagine.mp3' -metadata "k1=v1" -metadata "k2=v2" -y ~/Downloads/test.mp3

# Save metadata to file

ffmpeg -i ~/Downloads/test.mp3 -f ffmetadata ~/Downloads/test.txt

# Load metadata from a file into a media file

ffmpeg -i ~/Downloads/test.txt -i ~/Downloads/test.mp3 ~/Downloads/test2.mp3

# Delete metadata

ffmpeg -i input.avi -map_metadata -1 output.mp4

The figure below may not be accurate; use it as a reference for the common predefined metadata keys.
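The text file written by -f ffmetadata is simple enough to generate by hand. The following Python sketch shows the general shape under the rules in the FFmpeg formats documentation: a ;FFMETADATA1 header, one key=value tag per line, and a backslash before the characters =, ;, #, \ and newline. The function names are hypothetical, and chapter or stream sections are not covered.

```python
SPECIAL = "=;#\\\n"  # characters that must be escaped with a backslash

def escape(text):
    """Prefix every special character with a backslash."""
    return "".join("\\" + ch if ch in SPECIAL else ch for ch in text)

def to_ffmetadata(tags):
    """Render a dict of global tags as the content of an ffmetadata file."""
    lines = [";FFMETADATA1"]
    for key, value in tags.items():
        lines.append(f"{escape(key)}={escape(value)}")
    return "\n".join(lines) + "\n"

print(to_ffmetadata({"k1": "v1", "k2": "a=b"}), end="")
```

The resulting text can be saved to a file and passed as the metadata input in the "load metadata" command above.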

Image processing

Although the FFmpeg tools are mainly intended for audio and video, ffmpeg can decode and encode many image formats, and many image-related tasks can be completed quickly.

- Supported formats include: Y.U.V, BMP, GIF, JPG, ... (rest omitted)

- Create an image:

  - Take a screenshot from a video: ffmpeg -i input -ss t image.type, where -ss sets the start position and t is the point in time; -ss is used this way consistently throughout ffmpeg

  - Cut a GIF from a video: simply output to a .gif file 【todo: add more practical examples】

  - Create images with the built-in video sources: color / mptestsrc / rgbtestsrc / smptebars / testsrc. For example, color takes the parameters color[=c=clr[:d=time[:r=fps[:sar=value[:s=resolution]]]]]

  - Frame extraction: ffmpeg -i clip.avi frame%4d.jpg 【todo: add more practical examples】

- Resize, crop and pad images: same as for video

- Flip, rotate and overlay images: same as for video

- Convert between image types: ffmpeg -i image.type1 image.type2

- Create a video from images:

  - From one picture: ffmpeg -loop 1 -i photo.jpg -t 10 photo.mp4

  - From multiple pictures: ffmpeg -f image2 -i img%d.jpg -r 25 video.mp4

# Screenshot
ffmpeg -i in1.mp4 -ss 3 -frames:v 1 snap.jpg

# GIF
ffmpeg -i in1.mp4 -pix_fmt rgb24 in1.gif

# Generate a picture from a built-in video source, for example a single-color picture
ffmpeg -f lavfi -i color=c=#008080:s=728x90 -frames:v 1 leaderboard.jpg

Digital audio

Digital audio is a technology used to capture, record, edit, encode and reproduce sound, which is usually encoded with pulse-code modulation (PCM). FFmpeg supports many audio formats, including AAC, MP3, Vorbis, WAV, WMA, etc.

Audio Fundamentals

- Audio quantization: because of the physiological limits of the human auditory system, the continuous values of a pressure wave can be replaced by a finite series of values. The number of bits used to store each audio value is called the audio bit depth. Common bit depths are:

  - 8 bit: used for telephones and old equipment

  - 12 bit: standard for DV (digital video), used in digital cameras, etc.

  - 14 bit: used for NICAM compression, TV stereo sound, etc.

  - 16 bit: standard for audio CD and DAT (digital audio tape)

  - 20 bit: additional standard for Super Audio CD and DVD-Audio

  - 24 bit/32 bit: omitted

- Audio sampling: quantization describes the vertical axis, sampling the horizontal axis. Common sampling frequencies are:

  - 8000 Hz: used for telephones, wireless networks, microphones, etc.

  - 11025/16000/22050/32000 Hz: omitted

  - 44100 Hz: audio CD standard, also used for MPEG-1, PAL TV, etc.

  - 48000 Hz: standard rate for professional use, also used in consumer DV, DVD, digital TV, etc.

  - 96000 Hz: standard for DVD-Audio, Blu-ray Disc, HD DVD, etc.

  - 192000/352800 Hz: omitted

- Audio file formats fall into several categories:

  - Uncompressed: AIFF (PCM)/AU/BWF/PCM (raw, without header)/WAV (PCM)

  - Lossless compression: ALAC/ALS/ATRAC (Advanced Lossless)/FLAC/WavPack/WMA (Lossless)

  - Lossy compression: AAC/AC-3/AMR/MP2, MP3/Musepack/Speex/Vorbis (OGG)
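Bit depth and sampling frequency together determine how much data uncompressed PCM audio produces. The Python sketch below works this out for the audio CD figures listed above (16 bit, 44100 Hz); the two channels and the function names are assumptions made for the example.

```python
def quantization_levels(bit_depth):
    """Number of distinct amplitude values a sample can take."""
    return 2 ** bit_depth

def pcm_bit_rate(sample_rate_hz, bit_depth, channels):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels

def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Raw payload size in bytes, without any container header."""
    return pcm_bit_rate(sample_rate_hz, bit_depth, channels) * seconds // 8

print(quantization_levels(16))             # 65536
print(pcm_bit_rate(44100, 16, 2))          # 1411200
print(pcm_size_bytes(44100, 16, 2, 180))   # 31752000
```

Three minutes of CD-quality stereo therefore take roughly 32 MB before compression, which is the gap that lossless and lossy formats aim to close.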

Audio operation

- Sound synthesis: use the built-in audio source aevalsrc to create a sound. The syntax is aevalsrc=exprs[::options], where exprs is a colon-separated list of expressions, each defining a new channel, and options is a colon-separated list of key=value pairs. (Newer FFmpeg versions separate the expressions with | and the options with a single colon, as in the stereo example at the end of this section.)

  - Available in exprs: n: the number of the evaluated sample; t: time in seconds, starting from 0; s: the sampling rate

  - Available options: c or channel_layout: channel layout, the number of channels must equal the number of expressions; d or duration: maximum duration, if not specified or negative the audio is generated until the program stops; n or nb_samples: number of samples per channel in each output frame, 1024 by default; s or sample_rate: sampling rate, 44100 Hz by default

  - Use the channel layout option to create a multichannel sound

  - A special type of stereo sound is binaural tones (beats): the two frequencies differ by about 30 Hz or less, and both tones must be below 1000 Hz

- Volume setting: use -vol [0-256], where 256 is the normal volume, or the volume filter. With a plain number, output_volume = volume * input_volume; with the dB suffix, output_volume = 10^(volume / 20) * input_volume

- Mix multiple sounds: use the amix filter, with the syntax amix=inputs=ins[:duration=dur[:dropout_transition=dt]]

- Downmix stereo to mono, or set up surround sound: use pan=layout:channel_def[:channel_def[:channel_def...]]

- Audio analysis: use the ashowinfo filter, which displays parameter information for each audio frame

- Adjust the sound for headphone listening: use the earwax filter

- Use the -map_channel option to change various audio parameters. Its syntax is: -map_channel [in_file_id.stream_spec.channel_id|-1][:out_file_id.stream_spec]

- Merge two audio streams into one multichannel stream: use the amerge filter

- Control the forwarding and buffering order of audio streams: use the astreamsync filter

# Generate the note A4, the tuning standard for pitch, by setting tone_height to 440 Hz
ffplay -f lavfi -i aevalsrc='sin(440*2*PI*t)' -t 2

# Multichannel (stereo)
ffplay -f lavfi -i aevalsrc='sin(495*2*PI*t)|cos(505*2*PI*t):c=FL+FR'

# Audio analysis
ffmpeg -report -i ~/Downloads/test.mp3 -af ashowinfo -f null /dev/null
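The dB form of the volume formula and the binaural beat in the stereo example can be checked numerically. The Python sketch below applies output_volume = 10^(volume / 20) * input_volume and takes the difference between the two channel frequencies; the function names are hypothetical.

```python
def db_to_gain(db):
    """Linear amplitude factor for a volume given in decibels."""
    return 10 ** (db / 20)

def beat_frequency(left_hz, right_hz):
    """Perceived beat of a binaural tone: the difference between both channels."""
    return abs(left_hz - right_hz)

print(round(db_to_gain(-20), 3))   # 0.1
print(round(db_to_gain(6), 3))     # 1.995
print(beat_frequency(495, 505))    # 10
```

A change of about 6 dB doubles the amplitude, and the 495 Hz / 505 Hz pair from the stereo example gives a 10 Hz beat, well inside the 30 Hz limit mentioned above.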

Codec presets

- A preset sets default values for a number of codec options, which simplifies the use of ffmpeg (there are too many options).

- The format of a preset file is shown in the example below; see the documentation for details. Usage: ffmpeg -i input -fpre mpeg2.ffpreset -q 1 MPEG2_video.mpg

- There is also the -target option. For example, -target vcd automatically sets the bitrate, codecs, buffer sizes and other parameters for the output.

# Video codec, required and unique
vcodec=flv
# Video bitrate
b:v=300k
# Group of pictures (GOP) size
g=160
# Macroblock decision algorithm
mbd=2
# aic: H.263 advanced intra coding; mv0: always try mv=<0,0>; mv4: use 4 motion vectors per macroblock
flags=+aic+mv0+mv4
# Rate-distortion optimized quantization
trellis=1
# Number of audio channels
ac=1
# Audio sampling rate
ar=22050
# Audio bitrate
b:a=56k

Interlaced video

Interlaced video standard

- Interlaced scanning is a technique invented during the development of monochrome analog TV to eliminate flicker on old CRT displays. Each video frame is divided horizontally into regular lines, which are then split into 2 fields: the first field contains the odd lines and the second field contains the even lines.

- The NTSC, PAL and SECAM TV standards are compared in the table below

| Feature | NTSC | PAL, SECAM |
|---|---|---|
| Number of scan lines | 525 | 625 |
| Visible scan lines | 483 | 576 |
| Frames per second | 30 | 25 |
| Fields per second | 60 | 50 |
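The split of a frame into two fields, and the reason the table lists twice as many fields as frames per second, can be sketched in Python. The frame is modeled as a plain list of rows and the function names are hypothetical.

```python
def split_fields(frame_rows):
    """Return (first_field, second_field) for a frame given as a list of rows."""
    first = frame_rows[0::2]    # lines 1, 3, 5, ... (odd lines)
    second = frame_rows[1::2]   # lines 2, 4, 6, ... (even lines)
    return first, second

def fields_per_second(frames_per_second):
    """Every interlaced frame is transmitted as two fields."""
    return 2 * frames_per_second

rows = [f"line {n}" for n in range(1, 7)]
first, second = split_fields(rows)
print(first)                  # ['line 1', 'line 3', 'line 5']
print(second)                 # ['line 2', 'line 4', 'line 6']
print(fields_per_second(25))  # 50
```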

Interlaced video operation

Omitted; see https://www.jianshu.com/p/fb051e1457c2

Microphones and cameras

Omitted; see https://www.jianshu.com/p/95012f6696e0

Color correction

Omitted; see https://www.jianshu.com/p/f2a94de1c26a

Advanced

- Use the concat filter to join video/audio segments. All segments must have the same number of streams of each type, for example 1 audio and 1 video, or 2 audio and 1 video. Syntax: concat=a=a_streams:v=v_streams:n=segments[:unsafe]

- Use the delogo filter to remove a logo. It hides a TV station logo by simple interpolation of the surrounding pixels. The user sets a rectangle covering the logo, and the logo usually disappears (although in some cases it becomes more visible). Syntax: delogo=x=0:y=0:w=width:h=height[:t=band:show={0,1}]

- Use the deshake filter to fix video shake. Syntax: deshake=x:y:w:h:rx:ry:edge:blocksize:contrast:search:filename

- Use the aselect/select filters to select specific frames for output. Syntax: select=expression

- Use the setdar/setsar filters to set the aspect ratio. Syntax: setdar[=r=aspect_ratio[:max=number]]; setdar[=aspect_ratio[:number]]

- Use filtergraph
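How the values handled by setdar and setsar relate can be shown with a small calculation. The Python sketch below uses the FFmpeg filter terminology, in which SAR is the sample (pixel) aspect ratio and the display aspect ratio is (width / height) * SAR. The function name and the 720x576 frame with 16:15 pixels are assumptions chosen for the example.

```python
from fractions import Fraction

def display_aspect_ratio(width, height, sar):
    """DAR = (frame width / frame height) * sample aspect ratio."""
    return Fraction(width, height) * sar

print(display_aspect_ratio(720, 576, Fraction(16, 15)))   # 4/3
print(display_aspect_ratio(1920, 1080, Fraction(1, 1)))   # 16/9
```

A frame with non-square pixels can thus be displayed at 4:3 even though its stored width and height are in a 5:4 ratio.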

Common parameters and examples

-f fmt (input/output): force the input or output file format. It can usually be omitted, because the format is detected automatically for input files and guessed from the extension for output files
-c: specify the codec
-c copy: copy the stream directly without re-encoding (faster)
-c:v: specify the video encoder
-c:a: specify the audio encoder
-i: specify the input file
-an: drop the audio stream
-vn: drop the video stream
-preset: select the encoder speed/quality preset, which affects how fast the file is generated. Available values: ultrafast, superfast, veryfast, faster, fast, medium, slow, slower, veryslow
-y: overwrite an existing output file with the same name without asking for confirmation

-c[:stream_specifier] codec (input/output,per-stream)
-codec[:stream_specifier] codec (input/output,per-stream)
Select the encoder (when used before an output file) or the decoder (when used before an input file) for one or more streams. codec is the name of a decoder/encoder, or copy (output only) to indicate that the stream data is copied directly without re-encoding

// In the output file, encode the 2nd video stream with libx264 and the 138th audio stream with libvorbis; all other streams are copied directly
ffmpeg -i INPUT -map 0 -c copy -c:v:1 libx264 -c:a:137 libvorbis OUTPUT

-t duration (input/output): limit the input/output duration. Before -i it limits how much data is read from the input; as an output option it stops writing once the output reaches duration
-ss position (input/output): before -i, seeks in the input file to position. Some formats do not support exact seeking, so ffmpeg seeks to the closest seek point before position. As an output option, ffmpeg decodes but discards the input until the timestamp reaches position
-dn (input/output): As an input option, blocks all data streams of a file from being filtered or being automatically selected or mapped for any output. See -discard option to disable streams individually. As an output option, disables data recording i.e. automatic selection or mapping of any data stream. For full manual control see the -map option.
-dframes number (output): set the number of data frames to output; an alias for -frames:d
-frames[:stream_specifier] framecount (output,per-stream): stop writing to the stream after framecount frames
-q[:stream_specifier] q (output,per-stream)
-qscale[:stream_specifier] q (output,per-stream)
Use a fixed quality scale (VBR). The meaning of q/qscale depends on the codec. If qscale is used without a stream_specifier it applies only to the video stream. q ranges from 0.01 to 255; the smaller the value, the better the quality
-filter[:stream_specifier] filtergraph (output,per-stream): create the filtergraph specified by filtergraph and apply it to the specified stream

// Video options
-vframes number (output): set the number of video frames to output; an alias for -frames:v
-r[:stream_specifier] fps (input/output,per-stream): set the frame rate (a Hz value, fraction or abbreviation)
-s[:stream_specifier] size (input/output,per-stream): set the frame size
-aspect[:stream_specifier] aspect (output,per-stream): set the display aspect ratio of the video
-vn (output): disable video output
-vcodec codec (output): set the video encoder; an alias for -codec:v

// OPTIONS FOR SETTING QUALITY
-b:v or -b:a to set bitrate // e.g., -b:v 1000K = 1000 kbit/s, -b:v 8M = 8 Mbit/s
-q:v or -q:a to set fixed-quality parameter // e.g., -q:a 2 for native AAC encoder
// Examples of encoder-specific options:
-crf to set Constant Rate Factor for libx264/libx265
-vbr to set constant quality for FDK-AAC encoder
// Different kinds of rate control: CBR/VBR/CQP.....
// Rate depends on content characteristics

// Audio options
-aframes number (output): set the number of audio frames to output; an alias for -frames:a
-ar[:stream_specifier] freq (input/output,per-stream): set the audio sampling rate. By default the output uses the same rate as the input. As an input option it only makes sense for audio capture devices and raw demuxers, and is mapped to the corresponding demuxer option. As an output option it forces the output sampling rate
-aq q (output): set the audio quality (codec-specific, VBR); an alias for -q:a
-ac[:stream_specifier] channels (input/output,per-stream): set the number of audio channels. By default the output has the same number of channels as the input. As an input option it only makes sense for audio capture devices and raw demuxers, and is mapped to the corresponding demuxer option
-an (output): disable audio output
-acodec codec (input/output): set the audio decoder/encoder; an alias for -codec:a

// Subtitle options
-scodec codec (input/output): set the subtitle codec; an alias for -codec:s

Example

// Transcoding from one codec to another (e.g. H.264 using libx264):

ffmpeg -i <input> -c:v libx264 output.mp4

// Transmuxing from one container/format to another – without re-encoding:

ffmpeg -i input.mp4 -c copy output.mkv

// Cut a video from timestamp <start> for <duration>, or until <end>:

ffmpeg -ss 00:01:50 -i <input> -t 10.5 -c copy <output>

ffmpeg -ss 2.5 -i <input> -to 10 -c copy <output>

// Use the x264 speed/quality presets with -preset

ffmpeg -i <input> -c:v libx264 -crf 23 -preset ultrafast -an output.mkv

ffmpeg -i <input> -c:v libx264 -crf 23 -preset medium -an output.mkv

ffmpeg -i <input> -c:v libx264 -crf 23 -preset veryslow -an output.mkv

// map input streams to output, e.g. to add audio to a video:

ffmpeg -i input.mp4 -i input.m4a -c copy -map 0:v:0 -map 1:a:0 output.mp4

// scale - Scale to 320×240:

ffmpeg -i <input> -vf "scale=w=320:h=240" <output>

// padding

ffmpeg -i <input> -vf "pad=1920:1080:(ow-iw)/2:(oh-ih)/2" <output>

// Simple fade-in and fade-out at a specific time for a specific duration.

ffmpeg -i <input> -filter:v "fade=t=in:st=0:d=5,fade=t=out:st=30:d=5" <output>

// Complex system for printing text on video

ffmpeg -i <input> -vf \
drawtext="text='Test Text':x=100:y=50:\
fontsize=24:fontcolor=yellow:box=1:boxcolor=red" \
<output>

// Decode three video/audio streams and append to one another:

ffmpeg -i <input1> -i <input2> -i <input3> -filter_complex \
"[0:0][0:1][1:0][1:1][2:0][2:1]concat=n=3:v=1:a=1[outv][outa]" \
-map "[outv]" -map "[outa]" <output>

// Show a watermark in the top left corner between seconds 1 and 2 only

ffmpeg -i <video> -i <watermark> -filter_complex \
"[0:v][1:v]overlay=10:10:enable='between(t,1,2)'[outv]" \
-map "[outv]" <output>
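The filtergraph string in the concat example above grows with the number of inputs, so it is convenient to generate it. This Python sketch reproduces that string for any number of segments, assuming every input has its video as stream 0 and its audio as stream 1, as in the example; the function name is hypothetical.

```python
def concat_filter(n_inputs):
    """Build the -filter_complex argument that appends n inputs (1 video + 1 audio each)."""
    # One pair of input pads per segment: [i:0] is the video, [i:1] the audio.
    pads = "".join(f"[{i}:0][{i}:1]" for i in range(n_inputs))
    return f"{pads}concat=n={n_inputs}:v=1:a=1[outv][outa]"

print(concat_filter(3))
# [0:0][0:1][1:0][1:1][2:0][2:1]concat=n=3:v=1:a=1[outv][outa]
```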

边栏推荐

- Decltype usage introduction

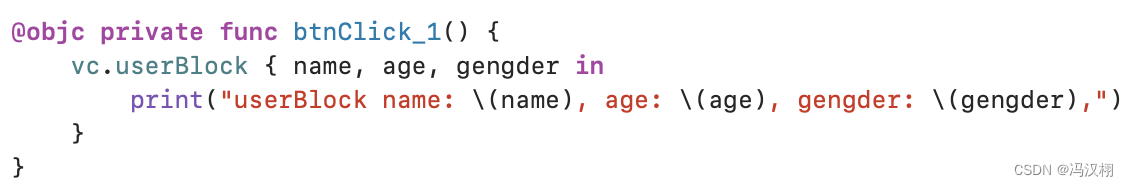

- Swift 基礎 閉包/Block的使用(源碼)

- Vscode topic recommendation

- decltype用法介绍

- Solve the problem of notebook keyboard disabling failure

- Swift 基础 Swift才有的特性

- Industrial computer anti cracking

- C语言_字符串与指针的爱恨情仇

- JS implementation to check whether an array object contains values from another array object

- Interview tutorial - multi thread knowledge sorting

猜你喜欢

Latest news of awtk: new usage of grid control

Swift 基础 闭包/Block的使用(源码)

Screenshot recommendation - snipaste

研究生英语期末考试复习

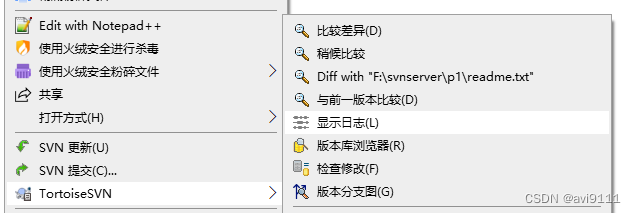

Svn actual measurement common operation record operation

Application of JDBC in performance test

宝塔面板安装php7.2安装phalcon3.3.2

Echart's experience (I): about y axis yaxis attribute

Review of postgraduate English final exam

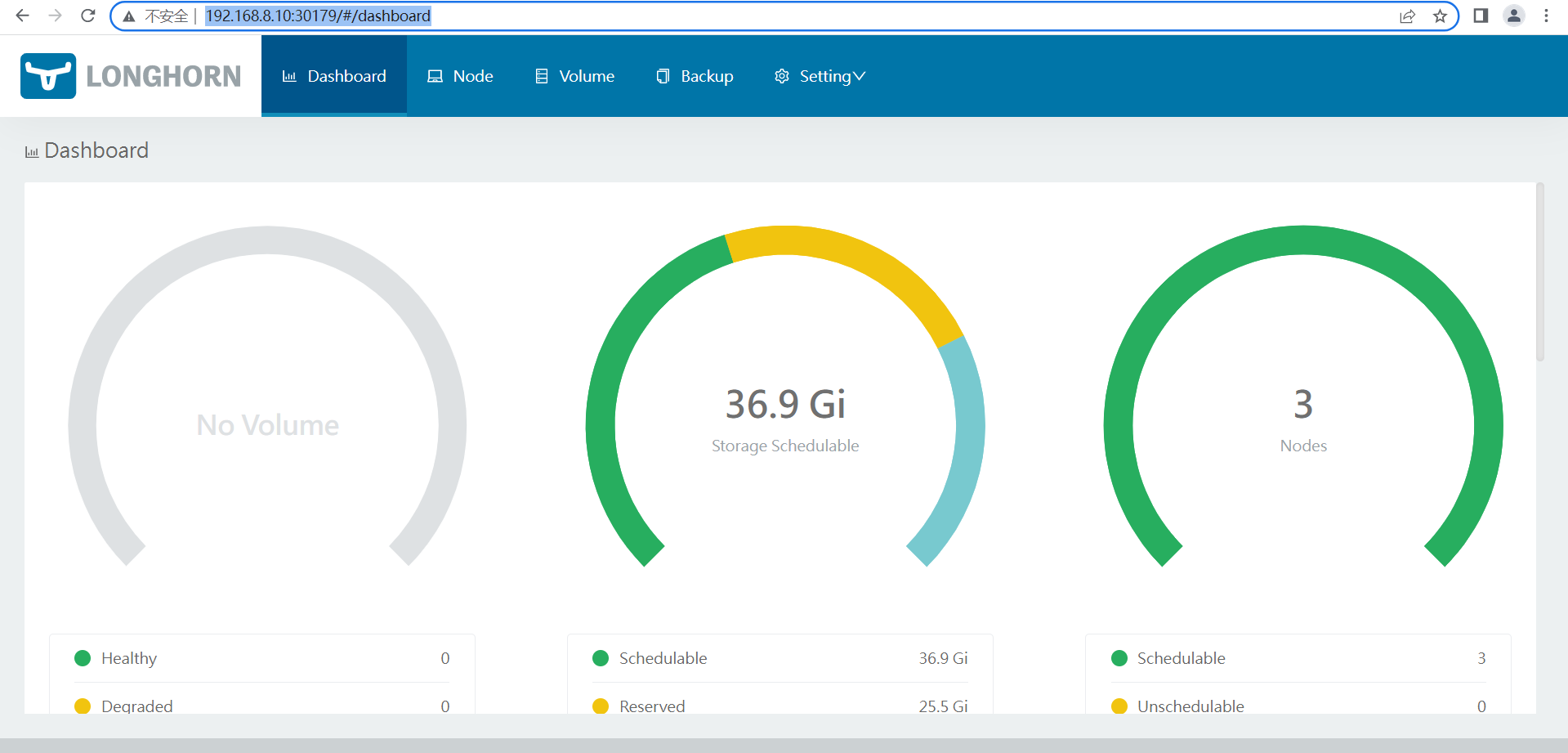

longhorn安装与使用

随机推荐

Screenshot recommendation - snipaste

你还只知道测试金字塔?

Question 1: the container that holds the most water

宝塔面板安装php7.2安装phalcon3.3.2

Live wire, neutral wire and ground wire. Do you know the function of these three wires?

Serialization of unity

Teach you how to use the reflect package to parse the structure of go - step 1: parameter type check

LINQ query (2)

On the H5 page, the Apple phone blocks the content when using fixed to locate the bottom of the tabbar

3-list introduction

1279_VMWare Player安装VMWare Tools时VSock安装失败解决

SCM stm32f103rb, BLDC DC motor controller design, schematic diagram, source code and circuit scheme

2022 PMP project management examination agile knowledge points (1)

【毕业季】你好陌生人,这是一封粉色信笺

Solve the problem of notebook keyboard disabling failure

Atguigu---15- built in instruction

基金的募集,交易与登记

Search and recommend those things

Leetcode 207: course schedule (topological sorting determines whether the loop is formed)

More appropriate development mode under epidemic situation