OpenVINO CPU acceleration survey

2022-06-23 01:13:00 【Johns】

Theory

1. Introduction

OpenVINO is an open-source toolkit for optimizing and deploying AI inference.

- Boost deep learning performance in computer vision, automatic speech recognition, natural language processing, and other common tasks

- Use models trained with popular frameworks such as TensorFlow and PyTorch

- Reduce resource demands and deploy efficiently on a range of Intel platforms, from edge to cloud

Train, optimize, deploy

2. Optimization principles

- Linear Operations Fusing (operator fusion)

- Precision Calibration: in practice this means INT8 quantization of the model. Intel's NNCF can also be used for other model compression operations.
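To make linear operation fusing concrete, here is a minimal sketch in plain Python. It is not OpenVINO's implementation; it only shows the idea of folding a per-channel scale-and-shift (the form batch normalization takes at inference time) into the preceding fully connected layer, so that two operations become one. The function names `linear`, `scale_shift`, and `fuse` and the toy numbers are illustrative assumptions.

```python
def linear(x, weights, bias):
    """Fully connected layer: y[j] = sum_i x[i] * weights[j][i] + bias[j]."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b
            for row, b in zip(weights, bias)]


def scale_shift(y, scale, shift):
    """Per-channel affine op, e.g. batch norm with frozen statistics."""
    return [s * yi + t for yi, s, t in zip(y, scale, shift)]


def fuse(weights, bias, scale, shift):
    """Fold the scale/shift into the linear layer's weights and bias."""
    fused_w = [[s * w for w in row] for row, s in zip(weights, scale)]
    fused_b = [s * b + t for b, s, t in zip(bias, scale, shift)]
    return fused_w, fused_b


# Toy values (hypothetical), chosen to be exact in floating point
weights = [[1.0, 2.0], [3.0, 4.0]]
bias = [0.5, -0.5]
scale = [2.0, 0.5]
shift = [1.0, 0.0]
x = [1.0, -1.0]

# Two separate operations versus one fused operation
two_ops = scale_shift(linear(x, weights, bias), scale, shift)
fused_w, fused_b = fuse(weights, bias, scale, shift)
one_op = linear(x, fused_w, fused_b)

print("unfused:", two_ops)
print("fused:  ", one_op)
```

Both paths give the same result, but the fused layer needs one pass over the data instead of two, which is where the speedup comes from.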

3. Overview of common OpenVINO tools

- Deep Learning Model Optimizer - A cross-platform command-line tool for importing models and preparing them for optimal execution with the Inference Engine. The Model Optimizer imports, converts, and optimizes models trained in popular frameworks such as Caffe, TensorFlow, MXNet, Kaldi, and ONNX.

- Deep Learning Inference Engine - A unified API for high-performance inference on many hardware types, including Intel CPUs, Intel integrated graphics, Intel Neural Compute Stick 2, and Intel Vision Accelerator Design with Intel Movidius vision processing units (VPUs).

- Inference Engine Samples - A set of simple console applications that demonstrate how to use the Inference Engine in your application.

- Deep Learning Workbench - A web-based graphical environment that makes it easy to use various sophisticated OpenVINO toolkit components.

- Post-Training Optimization Tool - A tool that calibrates a model and then executes it in INT8 precision.

- Additional tools - A set of tools for working with models, including the Benchmark Tool (https://docs.openvinotoolkit.org/latest/openvino_inference_engine_tools_benchmark_tool_README.html), the Cross Check Tool, and the Compile Tool.

- Open Model Zoo

  - Demos - Console applications that provide robust application templates to help you implement specific deep learning scenarios.

  - Additional tools - A set of tools for working with models, including the Accuracy Checker Utility and the Model Downloader.

  - Documentation for Pretrained Models - Documentation for the pretrained models available in the Open Model Zoo repository.

Practice

1. Environment setup

# Pull the image and start the container
docker pull openvino/ubuntu18_dev:latest
docker run -itd -p 8501:8501 -p 8500:8500 -p 8889:8889 -v "/root/openvino_notebooks:/openvino_notebooks" openvino/ubuntu18_dev:latest

# Enter the container (replace bc89fe5f98e6 with your own container ID)
docker exec -it -u root bc89fe5f98e6 /bin/bash

# Clone the example notebooks
git clone --depth=1 https://github.com/openvinotoolkit/openvino_notebooks.git

# Install Jupyter and its dependencies
cd openvino_notebooks
sudo apt-get update
sudo apt-get upgrade
sudo apt-get install python3-venv build-essential python3-dev git-all
python -m pip install --upgrade pip
pip install -r requirements.txt
python -m ipykernel install --user --name openvino_env
apt-get install vim

# Start Jupyter
jupyter lab notebooks --allow-root

2. Model conversion (using Jupyter Notebook)

import time
from pathlib import Path

import matplotlib.pyplot as plt
import numpy as np
from IPython.display import Markdown

# Construct the command for Model Optimizer
mo_command = f"""mo
                 --saved_model_dir "/openvino_notebooks/open_model_zoo_models/custom/origin_model"
                 --data_type FP32
                 --input dense_input,sparse_ids_input,sparse_wgt_input,seq_50_input
                 --input_shape [100,587],[100,53],[100,53],[100,6,50]
                 --output_dir "/openvino_notebooks/open_model_zoo_models/custom/fp32"
                 --output "Identity"
                 """
# Collapse the multi-line string into a single command line
mo_command = " ".join(mo_command.split())
print("Model Optimizer command to convert TensorFlow to OpenVINO:")
display(Markdown(f"`{mo_command}`"))

! $mo_command

3. Model quantization (using Jupyter Notebook)
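As background for this step, the sketch below shows the arithmetic behind symmetric INT8 quantization in plain Python. It is a simplified illustration rather than the algorithm the Post-Training Optimization Tool runs (which also calibrates activation ranges on sample data); the helper names `quantize` and `dequantize` and the sample weights are assumptions made for the example.

```python
def quantize(values, num_bits=8):
    """Symmetric per-tensor quantization: floats -> signed integer levels."""
    qmax = 2 ** (num_bits - 1) - 1              # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0  # float value of one integer step
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integer levels back to approximate float values."""
    return [qi * scale for qi in q]


# Hypothetical weights, for illustration only
weights = [0.02, -1.27, 0.64, 0.9]
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("integer levels:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each weight is stored in one byte instead of four, and the round-trip error stays within half a quantization step. That is the trade-off behind the `maximal_drop` setting used in the pipeline: smaller, faster models in exchange for a bounded loss of accuracy.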

import os
from pathlib import Path
from openvino.tools.pot import DataLoader
import tensorflow as tf
import math
from yaspin import yaspin


# Read data from TFRecord files
def input_fn_tfrecord(filenames, batch_size=256):
    """Build a batched input pipeline from TFRecord files."""
    reader = tf.data.TFRecordDataset(
        filenames,
        num_parallel_reads=10,
    ).shuffle(100000, reshuffle_each_iteration=True)

    features = {
        'dense_input': tf.io.FixedLenSequenceFeature([], tf.float32, allow_missing=True),
        'sparse_ids_input': tf.io.FixedLenSequenceFeature([], tf.int64, allow_missing=True),
        'sparse_wgt_input': tf.io.FixedLenSequenceFeature([], tf.float32, allow_missing=True),
        'seq_50_input': tf.io.FixedLenSequenceFeature([], tf.int64, allow_missing=True),
        'is_click': tf.io.FixedLenSequenceFeature([], tf.int64, allow_missing=True),
    }

    def _parse_example(example):
        """Parse one serialized example into fixed-shape tensors."""
        parse_data = tf.io.parse_single_example(example, features)
        return [
            tf.reshape(parse_data['dense_input'][:587], shape=[587]),
            tf.reshape(tf.cast(parse_data["sparse_ids_input"], tf.int32), shape=[53]),
            tf.reshape(parse_data["sparse_wgt_input"], shape=[53]),
            tf.reshape(tf.reshape(tf.cast(parse_data['seq_50_input'], tf.int32), [-1, 50])[:6, :], shape=[6, 50]),
            tf.reshape(parse_data['is_click'], shape=[1])]

    dataset = reader.map(_parse_example, num_parallel_calls=11)  # parse the records
    dataset = dataset.prefetch(buffer_size=batch_size)
    batch = dataset.batch(batch_size=batch_size)
    return batch

# Data preprocessing
data_file = "/openvino_notebooks/open_model_zoo_models/custom/eval_processed_data.tfrecords"
batch_size = 100
inputs_list = ['dense_input', 'sparse_ids_input', 'sparse_wgt_input', 'seq_50_input']
total_samples = sum(1 for _ in tf.compat.v1.python_io.tf_record_iterator(data_file))
n = math.ceil(float(total_samples) / batch_size)
data = []
with tf.compat.v1.Session() as sess:
    dataset = input_fn_tfrecord(data_file, batch_size)
    dataset_iterator = tf.compat.v1.data.make_one_shot_iterator(dataset)
    next_element = dataset_iterator.get_next()
    for i in range(n):
        # Fetch a fresh batch on every iteration; running the fetch once
        # before the loop would append the same batch n times.
        # Note: the final batch may hold fewer than batch_size rows.
        batch = sess.run(next_element)
        records = {
            'dense_input': batch[0],
            'sparse_ids_input': batch[1],
            'sparse_wgt_input': batch[2],
            'seq_50_input': batch[3],
            'label': batch[4],
        }
        data.append(records)

class OriginModelDataLoader(DataLoader):
    def __init__(self, data_list):
        """Data loader over pre-batched records.

        Args:
            data_list (list): batches, each a dict holding the four model
                inputs and a 'label' entry
        """
        self.data_list = data_list

    def __getitem__(self, index):
        if index >= len(self.data_list):
            raise IndexError("Index out of dataset size")
        current_item = self.data_list[index]
        label = current_item['label']
        feat_names = {'dense_input', 'sparse_ids_input', 'sparse_wgt_input', 'seq_50_input'}
        # Keep only the model inputs; the label travels in the annotation
        p2 = {key: value for key, value in current_item.items() if key in feat_names}
        return ((index, label), p2)

    def __len__(self):
        return len(self.data_list)

# Run model quantization
import addict
# Older releases exposed these classes under compression.api
from openvino.tools.pot import IEEngine, Metric
from openvino.tools.pot import load_model, save_model
from openvino.tools.pot import compress_model_weights
from openvino.tools.pot import create_pipeline

path_to_xml = "/openvino_notebooks/open_model_zoo_models/custom/fp32/saved_model.xml"
path_to_bin = "/openvino_notebooks/open_model_zoo_models/custom/fp32/saved_model.bin"
data_file = "/openvino_notebooks/open_model_zoo_models/custom/eval_processed_data.tfrecords"
batch_size = 512

# Model config specifies the model name and paths to model .xml and .bin file
model_config = addict.Dict(
    {
        "model_name": "origin_model",
        "model": path_to_xml,
        "weights": path_to_bin,
    }
)

# Engine config
engine_config = addict.Dict({"device": "CPU"})

algorithms = [
    {
        "name": "AccuracyAwareQuantization",
        "params": {
            "target_device": "CPU",
            "stat_subset_size": 300,
            "maximal_drop": 0.001,  # accuracy drop must not exceed 0.001
        },
    }
]

# Step 1: implement and create user's data loader
data_loader = OriginModelDataLoader(data)

# Step 2: load model
ir_model = load_model(model_config=model_config)

# Accuracy is a user-defined Metric subclass; its definition is not included in this post
metric = Accuracy()

# Step 3: Initialize the engine for metric calculation and statistics collection.
engine = IEEngine(config=engine_config, data_loader=data_loader, metric=metric)

# Step 4: Create a pipeline of compression algorithms and run it.
pipeline = create_pipeline(algorithms, engine)
algorithm_name = pipeline.algo_seq[0].name
with yaspin(
    text=f"Executing POT pipeline on {model_config['model']} with {algorithm_name}"
) as sp:
    start_time = time.perf_counter()
    compressed_model = pipeline.run(ir_model)
    end_time = time.perf_counter()
    sp.ok("")
print(f"Quantization finished in {end_time - start_time:.2f} seconds")

# Step 5 (Optional): Compress model weights to quantized precision
# in order to reduce the size of the final .bin file.
compress_model_weights(compressed_model)

# Step 6: Save the compressed model to the desired path.
# Set save_path to the directory where the model should be saved
compressed_model_paths = save_model(
    model=compressed_model,
    save_path="optimized_model",
    model_name="optimized_model",
)

# Step 7 (Optional): Evaluate the original and the compressed model. Print the results.
original_metric_results = pipeline.evaluate(ir_model)
if original_metric_results:
    print(f"Accuracy of the original model: {next(iter(original_metric_results.values())):.5f}")

quantized_metric_results = pipeline.evaluate(compressed_model)
if quantized_metric_results:
    print(f"Accuracy of the quantized model: {next(iter(quantized_metric_results.values())):.5f}")

Comparison before and after optimization

# Compare the model size before and after optimization
ir_path = "/openvino_notebooks/open_model_zoo_models/custom/fp32/saved_model.xml"
quantized_model_path = "/openvino_notebooks/notebooks/002-openvino-api/optimized_model/optimized_model.xml"
original_model_size = Path(ir_path).with_suffix(".bin").stat().st_size / 1024
quantized_model_size = Path(quantized_model_path).with_suffix(".bin").stat().st_size / 1024
# Size reduction in percent, computed from the measured sizes
# (the original notebook hardcoded 34231.60 and 12384.25 here)
compression_ratio = (original_model_size - quantized_model_size) / original_model_size * 100
print(f"FP32 model size: {original_model_size:.2f} KB")
print(f"INT8 model size: {quantized_model_size:.2f} KB")
print(f"Compression ratio : {compression_ratio:.4f}%")

# Model performance comparison; benchmark_app is OpenVINO's official performance testing tool
#!benchmark_app --help
model_name = "quantized_model"
benchmark_command = f"benchmark_app -m {quantized_model_path} -t 15 -d CPU -api async -hint latency"
display(Markdown(f"Benchmark command: `{benchmark_command}`"))
display(Markdown(f"Benchmarking {model_name} on CPU with async inference for 15 seconds..."))
! $benchmark_command

#!benchmark_app --help
model_path = "/openvino_notebooks/open_model_zoo_models/custom/fp32/saved_model.xml"
model_name = "origin_model"
# Same device and API flags as above, so the two runs are directly comparable
benchmark_command = f"benchmark_app -m {model_path} -t 15 -d CPU -api async -hint latency"
display(Markdown(f"Benchmark command: `{benchmark_command}`"))
display(Markdown(f"Benchmarking {model_name} on CPU with async inference for 15 seconds..."))
! $benchmark_command

4. Experimental conclusions

| Model | Size | QPS |
|---|---|---|
| origin_model | | 88.93 |
| quantized model | | 105.58 |
| Improvement | Smaller | 18.72% |

The conversion logs show that the model's structure is simple and compact and that its features are very sparse, so few nodes could be fused or quantized during conversion. As a result, the performance improvement is modest.
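The percentages can be reproduced with a few lines of arithmetic. The QPS values come from the table. The two sizes are the 34231.60 and 12384.25 figures that appear in the size-comparison code; treating them as the FP32 and INT8 `.bin` sizes in KB is an assumption, since the table itself does not list sizes. The helper name `percent_change` is illustrative.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# QPS values taken from the results table
qps_gain = percent_change(88.93, 105.58)

# Sizes assumed to be the FP32 and INT8 .bin sizes in KB (see lead-in)
size_change = percent_change(34231.60, 12384.25)

print(f"QPS gain: {qps_gain:.2f}%")
print(f"Size change: {size_change:.2f}%")
```

Under that assumption the model shrinks far more than throughput improves, which is consistent with the observation that few nodes were fused or quantized.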

边栏推荐

- 基于深度学习的视觉目标检测技术综述

- Vector 1 (classes and objects)

- Shell view help

- TiDB VS MySQL

- Read Amazon memorydb database based on redis

- Because I said: volatile is a lightweight synchronized, the interviewer asked me to go back and wait for the notice!

- three. JS simulated driving tour art exhibition hall - creating super camera controller

- 中国国际期货有限公司怎么样,是正规的期货公司吗?网上开户安全吗?

- Ansible learning summary (7) -- ansible state management related knowledge summary

- JS prevent the PC side from copying correct links

猜你喜欢

Psychological analysis of the safest spot Silver

![[initial launch] there are too many requests at once, and the database is in danger](/img/c1/807575e1340b8f8fe54197720ef575.png)

[initial launch] there are too many requests at once, and the database is in danger

Binary tree to string and string to binary tree

BGP联邦综合实验

Dig three feet to solve the data consistency problem between redis and MySQL

How to get started with machine learning?

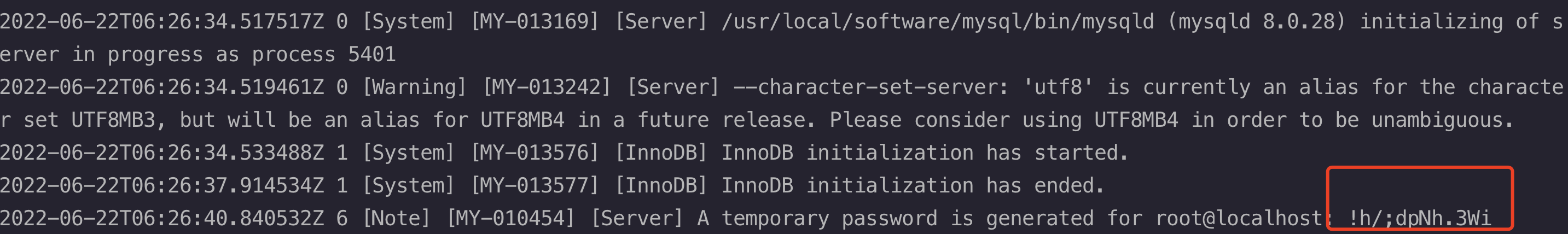

Installing MySQL for Linux

崔鹏团队:万字长文梳理「稳定学习」全景图

E-R diagram

一文读懂基于Redis的Amazon MemoryDB数据库

随机推荐

打新债到底靠不靠谱呀?是不是安全的?

Typecho imite le modèle de thème du blog Lu songsongsong / modèle de thème du blog d'information technologique

cadence SPB17.4 - allegro - 优化指定单条电气线折线连接角度 - 折线转圆弧

Dual cross domain: access allow origin header contains multiple values "*, *", but only one is allowed

Which platform is safer to buy stocks on?

Psychological analysis of the safest spot Silver

Have you stepped on these pits? Use caution when creating indexes on time type columns

Wallys/DR7915-wifi6-MT7915-MT7975-2T2R-support-OpenWRT-802.11AX-supporting-MiniPCIe

Tidb monitoring upgrade: a long way to solve panic

New progress in the construction of meituan's Flink based real-time data warehouse platform

Learn the specific technical learning experience of designing reusable software from the three levels of class, API and framework

黄金etf持仓量如何算

[sliding window] leetcode992 Subarrays with K Different Integers

Explain the startup process of opengauss multithreading architecture in detail

Ros2 summer school 2022 transfer-

[launch] redis Series 2: data persistence to improve availability

时间复杂度

cadence SPB17.4 - 中文UI设置

Vector 3 (static member)

Some thoughts about the technology of test / development programmers are very advanced, and they can't go on