当前位置:网站首页>Introduction to gradient descent method - black horse programmer machine learning handout

Introduction to gradient descent method - black horse programmer machine learning handout

2022-06-24 04:36:00 【Dark horse programmer official】

Learning goals

- Know the principle of full gradient descent algorithm

- Know the principle of random gradient descent algorithm

- Know the principle of random average gradient descent algorithm

- Know the principle of small batch gradient descent algorithm

In the previous section, we introduced the most basic implementation process of gradient descent method , Common gradient descent algorithms are :

- Full gradient descent algorithm (Full gradient descent),

- Stochastic gradient descent algorithm (Stochastic gradient descent),

- Small batch gradient descent algorithm (Mini-batch gradient descent),

- Random average gradient descent algorithm (Stochastic average gradient descent)

They are all to adjust the weight vector correctly , By calculating a gradient for each weight , To update the weights , Minimize the objective function as much as possible . The difference is that the samples are used in different ways .

1 Full gradient descent algorithm (FG)

Calculate all sample errors of the training set , Sum them and then take the average value as the objective function .

The weight vector moves in the opposite direction of its gradient , So as to reduce the current objective function by the most .

Because when you perform each update , We need to calculate all gradients over the entire data set , So the batch gradient descent method will be very slow , meanwhile , The batch gradient descent method cannot process data sets that exceed the memory capacity limit .

The batch gradient descent method also can not update the model online , That is, in the process of operation , No new samples can be added .

It is to calculate the loss function with respect to the parameters on the whole training data set θ Gradient of :

2 Stochastic gradient descent algorithm (SG)

because FG All sample errors need to be calculated for each iteration to update the weight , In practical problems, there are often hundreds of millions of training samples , Therefore, the efficiency is low , And it is easy to fall into local optimal solution , Therefore, a random gradient descent algorithm is proposed .

The objective function of each round of calculation is no longer the error of all samples , It's just a single sample error , namely Calculate the gradient of the objective function of one sample at a time to update the weight , Take another sample and repeat the process , Until the loss function value stops falling or the loss function value is less than a tolerable threshold .

This process is simple , Efficient , Generally, the convergence of update iteration to local optimal solution can be avoided . Its iterative form is

among ,x(i) Represents the eigenvalue of a training sample ,y(i) Represents the tag value of a training sample

But because of ,SG Use only one sample iteration at a time , In case of noise, it is easy to fall into local optimal solution .

3 Small batch gradient descent algorithm (mini-batch)

The small batch gradient descent algorithm is FG and SG A compromise of , To some extent, it takes into account the advantages of the above two methods .

A small sample set is randomly selected from the training sample set every time , On the extracted small sample set FG Iteratively update weights .

The number of sample points contained in the extracted small sample set is called batch_size, Usually set to 2 Power square , Better for GPU Accelerated processing .

Special , if batch_size=1, It becomes SG; if batch_size=n, It becomes FG. Its iterative form is

4 Random average gradient descent algorithm (SAG)

stay SG In the method , Although it avoids the problem of high computational cost , But for big data training ,SG The effect is often unsatisfactory , Because each round of gradient update is completely independent of the data and gradient of the previous round .

The random average gradient algorithm overcomes this problem , Maintain an old gradient in memory for each sample , Randomly select the second i Samples to update the gradient of this sample , The gradient of other samples remains unchanged , Then we get the average of all the gradients , Then the parameters are updated .

such , Each round of updating only needs to calculate the gradient of one sample , Calculating the cost is equivalent to SG, But it converges much faster .

5 Summary

- Full gradient descent algorithm (FG)【 know 】

- When doing the calculation , Calculate the average error of all samples , As my objective function

- Stochastic gradient descent algorithm (SG)【 know 】

- Select only one sample at a time for assessment

- Small batch gradient descent algorithm (mini-batch)【 know 】

- Select a part of samples for assessment

- Random average gradient descent algorithm (SAG)【 know 】

- Will maintain an average value for each sample , In the later calculation , Refer to this average

边栏推荐

- Indicator statistics: real time uvpv statistics based on flow computing Oceanus (Flink)

- Have you learned all these routines to solve bugs?

- Collagenase -- four types of crude collagenase from Worthington

- Clickhouse thread occupation troubleshooting and killing

- How to add a domain name to ECS? What are the advantages of ECS?

- web渗透测试----5、暴力破解漏洞--(9)MS-SQL密码破解

- How does ECS build websites? Is it troublesome for ECs to build websites?

- Download files and close the enhanced module security configuration to visit the website for the first time using IE browser

- 抢先报名丨新一代 HTAP 数据库如何在云上重塑?TiDB V6 线上发布会即将揭晓!

- What does VPS server mean? What is the difference between a VPS server and an ECS?

猜你喜欢

Final summary of freshman semester (supplement knowledge loopholes)

Advanced authentication of uni app [Day12]

什么是数据中台

2022年二级造价工程师备考攻略,你准备好了吗?

15+ urban road element segmentation application, this segmentation model is enough

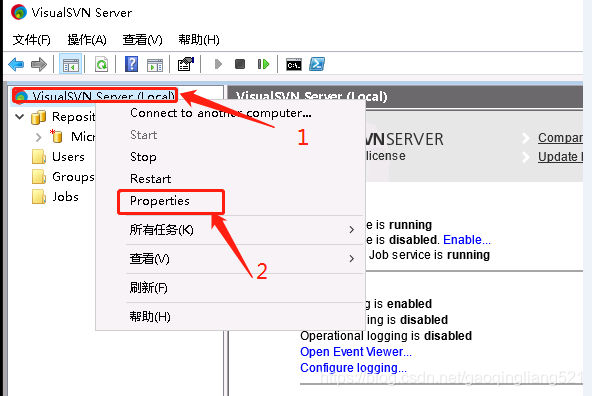

External network access SVN server (external network access SVN server deployed on the cloud)

Jointly build Euler community and share Euler ecology | join hands with Kirin software to create a digital intelligence future

Abnova荧光原位杂交(FISH)探针解决方案

Openeuler kernel technology sharing issue 20 - execution entity creation and switching

Abnova fluorescence in situ hybridization (FISH) probe solution

随机推荐

How to monitor multiple platforms simultaneously when easydss/easygbs platform runs real-time monitoring?

Through the fog: location notes of Flink crash with a multi component timeout

apipost接口断言详解

Brief introduction: how much do you know about supply chain attacks

What are the advantages of ECS? Is ECS better than VM?

Multi task video recommendation scheme, baidu engineers' actual combat experience sharing

Beauty of script │ VBS introduction interactive practice

我与物联有个约定

DP summary of ACM in recent two weeks

C语言自定义类型的介绍(结构体,枚举,联合体,位段)

Clickhouse thread occupation troubleshooting and killing

How are ECS leased? Can the ECS use VPN?

Gpt/gpt2/dialogpt detailed explanation comparison and application - text generation and dialogue

What is the difference between arm architecture, ARM7, ARM9, STM32, cortex m3 M4, 51 and AVR

How to add a domain name to ECS? What are the advantages of ECS?

ARM 架构、ARM7、ARM9、STM32、Cortex M3 M4 、51、AVR 有啥区别

ribbon

应用实践 | Apache Doris 整合 Iceberg + Flink CDC 构建实时湖仓一体的联邦查询分析架构

The results of the 2022 open source summer were announced, and 449 college students will contribute to open source projects

How to restart the ECS? What are the differences between ECS restart and normal computers?

![Advanced authentication of uni app [Day12]](/img/2e/59ad76ce8911d6d1f644322b34aaa5)