当前位置:网站首页>System design: partition or data partition

System design: partition or data partition

2022-06-24 06:59:00 【Xiaochengxin post station】

Definition

Data partition ( Also known as fragmentation ) Is a kind of large database (DB) Technology that breaks down into many smaller parts . It is to split one across multiple computers DB/ Table process , To improve application manageability 、 performance 、 Availability and load balancing .

reason

The reason for data fragmentation is , After reaching a certain scale , It is cheaper to scale horizontally by adding more machines than vertically by adding more powerful servers 、 More feasible .

One 、 Division method

You can use many different scenarios to decide how to decompose an application database into smaller databases . Here are the three most popular scenarios used by various large-scale applications .

A. Horizontal zoning

In this scheme , We put different rows in different tables . for example , If we store different locations in a table , We can confirm that the area code is less than 1000 The location of is stored in a table , The region code is greater than 1000 The location of is stored in a separate table . This is also called Range based sharding , Because we store different ranges of data in different tables .

The key problem with this approach is , If you do not carefully select the range value for slicing , The partition scheme will result in server imbalance . For example, Beijing may have more data than other regions .

B Vertical zones

In this scheme , We divide the data into tables related to specific functions and store them in their own servers . for example , If we are building an application similar to an e-commerce website — We can decide to put the user information on one computer DB Server , The merchant list is placed on another server , The product is placed on the third server .

Vertical partitioning is easy to implement , Less impact on the application . The main problem with this method is , If our application experiences additional growth , Then it may be necessary to further divide the function specific databases on different servers ( for example , A single server cannot handle 1.4 Million users to 100 All metadata queries of 100 million photos )

C Directory based partition

The loosely coupled solution to the problems mentioned in the above solution is to create a lookup service , The service understands the current partition scheme , And take it from DB Abstracted from the access code . therefore , To find the location of a particular data entity , We query to save each tuple key to its DB Mapping directory servers between servers . This loosely coupled approach means that we can perform tasks such as transferring data to the DB Tasks such as adding servers to the pool or changing the partition scheme .

Two 、 Division criteria

A. Key or hash based partitioning ( Hash partition )

Under this scheme , We apply hash functions to some of the key attributes of our stored entities ; This produces the partition number . for example , If we had 100 individual DB The server , And our ID It's a number , Each time a new record is inserted , It will increase by one . In this case , The hash function can be 'ID%100', This will provide us with the ability to store / Read the server number of the record . This approach should ensure uniform distribution of data between servers . The fundamental problem with this approach is , It effectively fixes DB Total number of servers , Because adding a new server means changing the hash function , This will require reallocation of data and service downtime . One way to solve this problem is to use consistent hashes .

B List partition

In this scheme , Each partition is assigned a list of values , So whenever we want to insert a new record , We all see which partition contains our keys , Then store it there . for example , We can decide to live in Iceland 、 The Norwegian 、 The Swedish 、 All users in Finland or Denmark will be stored in partitions in the Nordic countries .

C Cycle partition ( Hash modulus )

This is a very simple strategy , It can ensure the consistency of data distribution . about 'n' Partition ,'i' Tuples are assigned to partitions (i mod n).

D Combined zones

Under this scheme , We combine any of the above partition schemes to design a new scheme . for example , Apply the list partition scheme first , Then apply hash based partitions . Consistent hashing can be thought of as a combination of hashing and list partitioning , Where hashing reduces the key space to a size that can be listed

3、 ... and 、 Segmentation FAQs

On the shard database , There are some additional restrictions on the different operations that can be performed . Most of these limitations are due to the fact that operations that span multiple tables or multiple rows in the same table will no longer run on the same server . Here are some of the limitations and additional complexity of sharding :

A. League table query join And the use of inverse paradigms

Performing a join on a database running on a server is simple , But once a database is partitioned and distributed across multiple computers , It is often not feasible to perform joins across database fragments . Because you have to compile data from multiple servers , Such a connection will not improve performance . A common way to solve this problem is to denormalize the database , So that you can execute previously required joined queries from a single table . Of course , The service must now deal with all the dangers of denormalization , For example, the data is inconsistent .

B Citation integrity

As we can see , It is not feasible to perform cross sharding queries on a partitioned database , Similarly , Enforce data integrity constraints in a fragmented database ( Such as foreign keys ) It can be very difficult .

majority RDBMS Foreign key constraints between databases on different database servers are not supported . This means that applications that require referential integrity on a fragmented database must usually be enforced in the application code . Usually in this case , The application must run regular SQL Job to clear dangling references .

C Repartition

There may be many reasons why we have to change the fragmentation scheme :

1. Uneven data distribution , For example, there are many places in a particular postal code that cannot be put into a database partition .

2. One shard A lot of load , such as DB shard Too many user photo requests processed .

under these circumstances , Or we have to create more DB shard, Or you have to rebalance the existing shard, This means that the partitioning scheme has changed , All existing data is moved to a new location . It is very difficult to do this without causing downtime . Using a scheme similar to directory based partitioning does make the rebalancing experience more enjoyable , But the cost is to increase the complexity of the system and create a new single point of failure ( Find service / database ).

So, based on the theory of Google system design, how to operate the specific practice ? The author has experienced the above process in JD before , The data volume of each table directly affects the data storage volume and performance index according to the description complexity of the table , According to the author's average data volume of a single table at that time 400-700 There is a data skew between million , As well as a single big boost due to business growth 15 More than 100 million data leads to the need to repartition , Specific practice case reference 2018 Articles in MySQL Practice of sub database and sub table .

Reference material

grok_system_design_interview.pdf

边栏推荐

- If you want to learn programming well, don't recite the code!

- Become TD hero, a superhero who changes the world with Technology | invitation from tdengine community

- Interpreting the new features of Appstore: Customizing product pages and a/b test tools

- Do you know about Statistics?

- Free and easy-to-use screen recording and video cutting tool sharing

- Source code analysis of current limiting component uber/ratelimit

- leetcode:85. 最大矩形

- Record of waic 2021 round table conference 𞓜 cross border dialogue: current situation and future of AI and sustainable development

- 你有一个机会,这里有一个舞台

- 基于三维GIS系统的智慧水库管理应用

猜你喜欢

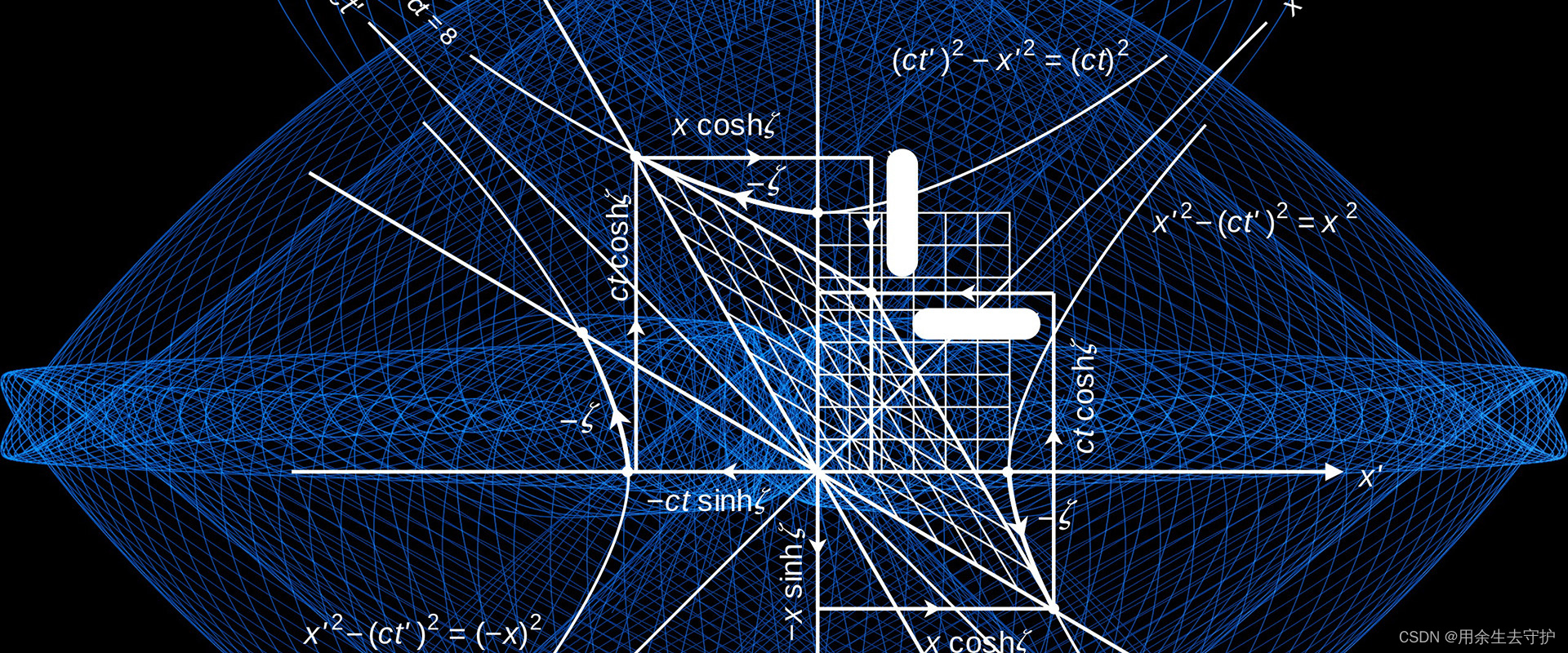

程序员使用个性壁纸

![Command ‘[‘where‘, ‘cl‘]‘ returned non-zero exit status 1.](/img/2c/d04f5dfbacb62de9cf673359791aa9.png)

Command ‘[‘where‘, ‘cl‘]‘ returned non-zero exit status 1.

File system notes

解读AI机器人产业发展的顶层设计

目标5000万日活,Pwnk欲打造下一代年轻人的“迪士尼乐园”

Programmers use personalized Wallpapers

Rockscache schematic diagram of cache operation

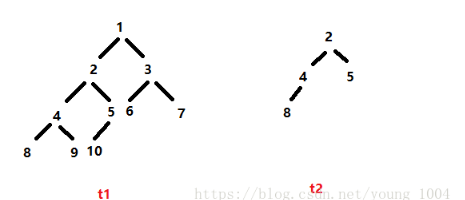

leetcode:剑指 Offer 26:判断t1中是否含有t2的全部拓扑结构

Application configuration management, basic principle analysis

虚拟文件系统

随机推荐

How long will it take for us to have praise for Shopify's market value of 100 billion yuan?

【愚公系列】2022年6月 ASP.NET Core下CellReport报表工具基本介绍和使用

[JUC series] completionfuture of executor framework

What is domain name resolution? What if the domain name cannot be resolved?

浅谈如何运营好小红书账号:利用好长尾词理论

文件系统笔记

【二叉数学习】—— 树的介绍

云监控系统 HertzBeat v1.1.0 发布,一条命令开启监控之旅!

解读AI机器人产业发展的顶层设计

On BOM and DOM (1): overview of BOM and DOM

Nine unique skills of Huawei cloud low latency Technology

When easynvs is deployed on the project site, easynvr cannot view the corresponding channel. Troubleshooting

Several methods for reinstalling the system:

RS485 serial port wiring description of smart lamp post smart gateway

How to apply 5g smart pole to smart highway

In the half year, there were 2.14 million paying users, a year-on-year increase of 62.5%, and New Oriental online launched its private domain

Domain name purchase method good domain name selection principle

Database stored procedure begin end

Do you know about Statistics?

35 year old crisis? It has become a synonym for programmers