GPT plus money (OpenAI CLIP, DALL-E)

2022-07-25 12:03:00 【Shangshanxianger】

This post is about connecting images and text; more multimodal articles can be found in the blogger's series (large-scale cross-modal pre-training portal). It covers two works published by OpenAI. CLIP matches images against text categories, while DALL·E generates images directly from a text description, and both perform very well.

CLIP

First, CLIP. Looking at the model, there are three steps: contrastive pre-training, creating a dataset classifier from label text, and using it for zero-shot prediction.

The first part, shown in the figure above, is a two-stream network for image-text matching. One branch is an image encoder (such as ResNet-50 or ViT) and the other is a text encoder (such as a Transformer). For a batch of image-text pairs, the inner products of the two sets of features form a matching matrix: each row acts as a classifier over the texts for one image, and each column acts as a classifier over the images for one text. Training maximizes the probability of the blue diagonal entries, that is, it maximizes the inner-product similarity of the matched pairs. Contrastive learning itself was covered in an earlier post by the blogger, so it is not repeated here.
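The diagonal-maximizing objective described above can be sketched as a symmetric cross-entropy over the matching matrix. This is a minimal NumPy illustration rather than OpenAI's training code: the function names are made up for this example, and the default `logit_scale` of 1/0.07 is an assumption taken from the initial temperature reported in the CLIP paper.

```python
import numpy as np

def log_softmax(x, axis):
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(image_features, text_features, logit_scale=1 / 0.07):
    # L2-normalize so that the inner product is a cosine similarity.
    image_features = image_features / np.linalg.norm(image_features, axis=-1, keepdims=True)
    text_features = text_features / np.linalg.norm(text_features, axis=-1, keepdims=True)

    # logits[i, j] = scaled similarity between image i and text j.
    logits = logit_scale * image_features @ text_features.T
    diag = np.arange(logits.shape[0])

    # Each row is an n-way classification over texts, each column over images;
    # in both cases the correct "class" is the diagonal entry.
    loss_images = -log_softmax(logits, axis=1)[diag, diag].mean()
    loss_texts = -log_softmax(logits, axis=0)[diag, diag].mean()
    return (loss_images + loss_texts) / 2

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8))
matched = clip_contrastive_loss(features, features)                 # aligned pairs
shuffled = clip_contrastive_loss(features, features[[1, 2, 3, 0]])  # mismatched pairs
```

In this toy run the aligned batch gets a lower loss than the shuffled one, which is the behavior the pre-training objective rewards.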

This step mainly uses a large amount of training data (sentence-image pairs taken directly from the internet) to learn feature representations. The next two steps are the test procedure, which works as follows:

As in training, the image to be classified is first encoded into features. Each label of the target dataset is then converted into a sentence, because CLIP's pre-training data are sentences and the single-word labels of a classification task do not fit that format. As shown in the figure above, the label dog becomes "A photo of a dog", with dog filling a slot in the template. The model scores each such sentence by its inner-product similarity with the image and predicts the label whose sentence scores highest. Because classification is phrased as matching against sentences, the approach is well suited to zero-shot classification.

CLIP also lets you define your own classifier freely, so it is easy to combine with other work. For example, DALL-E, covered later in this post, makes use of CLIP.
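The zero-shot procedure can be sketched as follows. To keep the example self-contained, `toy_text_encoder` is a hypothetical stand-in for CLIP's text encoder (it is not the real model), the "image" feature is faked as a noisy copy of a prompt embedding, and the scale of 100 and the template string are assumptions for illustration.

```python
import zlib
import numpy as np

def toy_text_encoder(prompt, dim=64):
    # Stand-in for CLIP's text encoder (NOT the real model): a deterministic
    # pseudo-random unit vector derived from the prompt string.
    rng = np.random.default_rng(zlib.crc32(prompt.encode("utf-8")))
    vector = rng.normal(size=dim)
    return vector / np.linalg.norm(vector)

def zero_shot_classify(image_feature, labels, template="A photo of a {}."):
    # Turn every label into a sentence, embed it, and score it against the image.
    prompts = [template.format(label) for label in labels]
    text_features = np.stack([toy_text_encoder(p) for p in prompts])
    image_feature = image_feature / np.linalg.norm(image_feature)
    logits = 100.0 * text_features @ image_feature
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    return labels[int(probs.argmax())], probs

labels = ["dog", "cat", "plane"]
# Fake "image" feature: the dog prompt embedding plus a little noise.
noise = 0.02 * np.random.default_rng(0).normal(size=64)
fake_dog_image = toy_text_encoder("A photo of a dog.") + noise
predicted, probs = zero_shot_classify(fake_dog_image, labels)
```

Swapping `labels` for any other list of class names gives a new classifier without retraining, which is what makes the scheme flexible.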

Here is the core logic of CLIP:

    def forward(self, image, text):
        image_features = self.encode_image(image)  # encode the image
        text_features = self.encode_text(text)     # encode the text

        # L2-normalize the features
        image_features = image_features / image_features.norm(dim=-1, keepdim=True)
        text_features = text_features / text_features.norm(dim=-1, keepdim=True)

        # inner-product (cosine) similarity as logits
        logit_scale = self.logit_scale.exp()
        logits_per_image = logit_scale * image_features @ text_features.t()
        logits_per_text = logit_scale * text_features @ image_features.t()

        # shape = [global_batch_size, global_batch_size]
        return logits_per_image, logits_per_text

Why does it work so well?

- Large dataset. Contrastive pre-training uses about 400 million image-text pairs that the authors collected from the internet, with no manual labeling required, which saves a lot of labor and improves generalization. Each image is contrasted against up to 32,768 text candidates; SimCLR's batches were already considered large, and CLIP's are larger still.

- Learning to match instead of predicting the entire text description. The model only has to tell which sentence (for example "A photo of a dog") belongs to which image, rather than generate the description word by word, which makes learning 4 to 10 times more efficient.

- Vision Transformer. The blogger has covered this before, so it is not repeated here: portal. The authors' code uses ViT, which is about 3 times more compute-efficient than a standard ResNet, so CLIP can be trained on larger datasets and burn money (train) for longer.

For details, please refer to the original paper :

blog:https://openai.com/blog/clip/

paper:https://arxiv.org/pdf/2103.00020.pdf

code:https://github.com/openai/CLIP

DALL-E

Next is the DALL-E model. CLIP mainly handles tasks such as classification and retrieval, whereas DALL-E generates very good images directly from text. The motivation is to train a single Transformer that autoregressively models text and image tokens as one data stream, so the main question is how to turn a 2D image into such a stream as well.

Again looking directly at the model, the figure above shows three stages: dVAE, Transformer and CLIP.

- Stage One. A dVAE produces a token representation for each image patch, giving a single data stream. Specifically, a 256×256 image is divided into a 32×32 grid of patches, and a trained discrete variational autoencoder (dVAE) maps each patch to an entry in a vocabulary of size 8192, so an image becomes 1024 tokens. This reduces the Transformer's context size by a factor of 192 without significantly degrading visual quality.

- Stage Two. The Transformer follows a GPT-3-style generative pre-training approach. As shown in the figure, the text is first BPE-encoded into 256 tokens (padded when shorter), the image tokens are concatenated after them, and the result is fed to a Transformer with 12 billion parameters that models the joint distribution (64 layers, 62 heads per layer, 64 dimensions per head, for a model dimension of 3968).

- Sample and ranking. Finally, images are sampled from the model, and CLIP ranks the samples to select the generated image that best matches the text.
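The numbers in the stages above can be checked with a few lines of arithmetic, together with a rough sketch of how text and image tokens end up in one stream. The constant and function names are illustrative; the text vocabulary size of 16,384 comes from the DALL-E paper rather than from this post, and the padding id and the offset scheme for image tokens are assumptions made for this example.

```python
IMAGE_SIZE = 256      # images are 256x256 RGB
GRID = 32             # the dVAE produces a 32x32 grid of tokens
IMAGE_VOCAB = 8192    # dVAE codebook size
TEXT_LEN = 256        # number of BPE text tokens after padding
TEXT_VOCAB = 16384    # assumption: BPE vocabulary size reported in the paper
HEADS, HEAD_DIM = 62, 64

image_tokens = GRID * GRID                                    # tokens per image
compression = (IMAGE_SIZE * IMAGE_SIZE * 3) // image_tokens   # context reduction
sequence_len = TEXT_LEN + image_tokens                        # full stream length
model_dim = HEADS * HEAD_DIM                                  # Transformer width

def build_stream(text_ids, image_ids, pad_id=0):
    # Pad the text to a fixed length, then append the image tokens, shifted so
    # that text and image ids do not collide in a shared vocabulary.
    if len(text_ids) > TEXT_LEN:
        raise ValueError("text is longer than the text context")
    padded_text = list(text_ids) + [pad_id] * (TEXT_LEN - len(text_ids))
    shifted_image = [TEXT_VOCAB + token for token in image_ids]
    return padded_text + shifted_image

stream = build_stream([5, 6, 7], [0] * image_tokens)
```

The factor of 192 falls out as 256 × 256 × 3 pixel values divided by 1024 tokens, and the model dimension of 3968 is 62 heads times 64 dimensions per head.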

Some tricks worth noting:

- Gumbel-Softmax. Mapping image patches to the vocabulary is a discrete operation, so the ELB (evidence lower bound) is optimized with a Gumbel-Softmax relaxation.

- 1×1 convolutions at the end of the dVAE encoder and at the beginning of the decoder.

- The output activations of the encoder and decoder are multiplied by a small constant.

- The cross-entropy losses for the text and image tokens are normalized.

- Because image modeling is the main goal, the text cross-entropy loss is multiplied by 1/8 and the image cross-entropy loss by 7/8.

- Adam is used for optimization, with exponentially weighted iterate averaging.

- Mixed-precision training, to save GPU memory and increase throughput.

- Distributed optimization.
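Two of the tricks above are easy to illustrate in isolation: the Gumbel-Softmax relaxation and the 1/8 versus 7/8 loss weighting. This is a NumPy sketch under simplifying assumptions, not the paper's implementation; the function names, the temperature value and the example loss values are made up for illustration.

```python
import numpy as np

def gumbel_softmax(logits, temperature, rng):
    # Add Gumbel(0, 1) noise to the logits, then apply a temperature-scaled
    # softmax. The result is a soft (relaxed) one-hot vector per row; in an
    # autodiff framework the same expression is differentiable in the logits.
    uniform = rng.uniform(low=1e-9, high=1.0, size=logits.shape)
    gumbel_noise = -np.log(-np.log(uniform))
    scaled = (logits + gumbel_noise) / temperature
    scaled = scaled - scaled.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scaled)
    return weights / weights.sum(axis=-1, keepdims=True)

def combined_loss(text_ce, image_ce, text_weight=1 / 8, image_weight=7 / 8):
    # Weighted sum of the per-token-averaged text and image cross-entropies.
    return text_weight * text_ce + image_weight * image_ce

rng = np.random.default_rng(0)
patch_logits = rng.normal(size=(4, 8192))  # 4 patches, 8192-entry codebook
soft_codes = gumbel_softmax(patch_logits, temperature=1.0, rng=rng)
loss = combined_loss(text_ce=2.0, image_ce=6.0)
```

Lower temperatures push each row of `soft_codes` closer to a hard one-hot choice of codebook entry, at the cost of noisier gradients.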

For details, please refer to the original paper :

blog:https://openai.com/blog/dall-e/

paper:https://arxiv.org/pdf/2102.12092.pdf

official code (only the dVAE part for now):https://github.com/openai/DALL-E

community reimplementation code:https://github.com/lucidrains/DALLE-pytorch

The reimplementation library can be called directly for training and seems to work very well. If you have enough GPUs, just pip install it:

    $ pip install dalle-pytorch

    import torch
    from dalle_pytorch import CLIP

    # configure CLIP
    clip = CLIP(
        dim_text = 512,
        dim_image = 512,
        dim_latent = 512,
        num_text_tokens = 10000,
        text_enc_depth = 6,
        text_seq_len = 256,
        text_heads = 8,
        num_visual_tokens = 512,
        visual_enc_depth = 6,
        visual_image_size = 256,
        visual_patch_size = 32,
        visual_heads = 8
    )

    # random stand-in data: 4 token sequences and 4 images
    text = torch.randint(0, 10000, (4, 256))
    images = torch.randn(4, 3, 256, 256)
    mask = torch.ones_like(text).bool()

    # train CLIP directly
    loss = clip(text, images, text_mask = mask, return_loss = True)
    loss.backward()

The next post will cover Prompt, a recently popular technique, in more detail.

边栏推荐

- brpc源码解析(六)—— 基础类socket详解

- I advise those students who have just joined the work: if you want to enter the big factory, you must master these concurrent programming knowledge! Complete learning route!! (recommended Collection)

- GPT plus money (OpenAI CLIP,DALL-E)

- Power Bi -- these skills make the report more "compelling"“

- flink sql client 连接mysql报错异常,如何解决?

- R语言ggplot2可视化:使用ggpubr包的ggviolin函数可视化小提琴图、设置add参数在小提琴内部添加抖动数据点以及均值标准差竖线(jitter and mean_sd)

- 【AI4Code】《InferCode: Self-Supervised Learning of Code Representations by Predicting Subtrees》ICSE‘21

- Classification parameter stack of JS common built-in object data types

- PHP uploads the FTP path file to the curl Base64 image on the Internet server

- Pycharm connects to the remote server SSH -u reports an error: no such file or directory

猜你喜欢

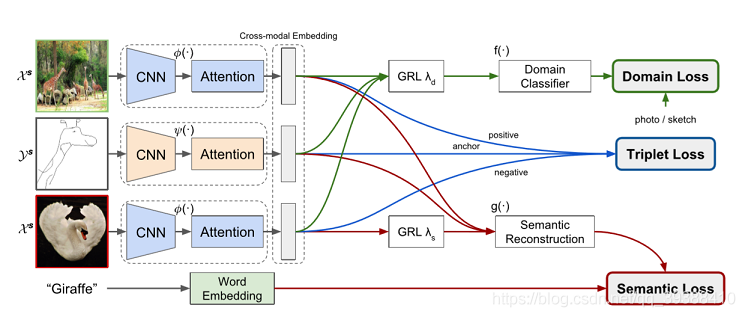

Zero-Shot Image Retrieval(零样本跨模态检索)

Hardware connection server TCP communication protocol gateway

Pycharm connects to the remote server SSH -u reports an error: no such file or directory

Start with the development of wechat official account

Oil monkey script link

brpc源码解析(八)—— 基础类EventDispatcher详解

Brpc source code analysis (VI) -- detailed explanation of basic socket

brpc源码解析(二)—— brpc收到请求的处理过程

【AI4Code】《InferCode: Self-Supervised Learning of Code Representations by Predicting Subtrees》ICSE‘21

Learning to Pre-train Graph Neural Networks(图预训练与微调差异)

随机推荐

R语言ggplot2可视化:使用ggpubr包的ggviolin函数可视化小提琴图、设置add参数在小提琴内部添加抖动数据点以及均值标准差竖线(jitter and mean_sd)

OSPF综合实验

What is the difference between session and cookie?? Xiaobai came to tell you

Web APIs (get element event basic operation element)

Transformer variants (routing transformer, linformer, big bird)

brpc源码解析(八)—— 基础类EventDispatcher详解

Brpc source code analysis (I) -- the main process of RPC service addition and server startup

Solutions to the failure of winddowns planning task execution bat to execute PHP files

【AI4Code】《GraphCodeBERT: Pre-Training Code Representations With DataFlow》 ICLR 2021

[imx6ull notes] - a preliminary exploration of the underlying driver of the kernel

JS process control

Brpc source code analysis (VII) -- worker bthread scheduling based on parkinglot

Learning to pre train graph neural networks

JS中的数组

Arrays in JS

浅谈低代码技术在物流管理中的应用与创新

JS流程控制

Zero shot image retrieval (zero sample cross modal retrieval)

LeetCode 50. Pow(x,n)

Mirror Grid