
Exporting ES data to a CSV file

2022-06-28 07:37:00 【Courageous steak】

1 Introduction

This post exports Elasticsearch (ES) data to a CSV file. Efficiency is not considered for now; only the implementation method is covered.

2 Python 3

common/util_es.py:

```python
from elasticsearch import Elasticsearch


def connect_elk():
    client = Elasticsearch(hosts='http://192.168.56.20:9200',
                           # user name and password (placeholder: use your own)
                           http_auth=("elastic", "<elastic password>"),
                           # sniff the cluster nodes before doing anything (disabled here)
                           # sniff_on_start=True,
                           # when a node does not respond, re-sniff and reconnect
                           sniff_on_connection_fail=True,
                           # refresh the node list every 60 seconds
                           sniffer_timeout=60
                           )
    return client
```
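`connect_elk()` hard-codes the host and the password. A minimal sketch that keeps the credentials out of the source instead reads them from environment variables. The variable names `ES_HOST`, `ES_USER` and `ES_PASSWORD` and the helper `es_client_kwargs` are hypothetical (not part of the original post); the remaining options simply mirror `connect_elk()`.

```python
import os
from typing import Any, Dict


def es_client_kwargs() -> Dict[str, Any]:
    """Build Elasticsearch(...) keyword arguments from the environment.

    ES_HOST, ES_USER and ES_PASSWORD are hypothetical variable names chosen
    for this sketch; the sniffing options mirror connect_elk().
    """
    return {
        "hosts": os.environ.get("ES_HOST", "http://192.168.56.20:9200"),
        # ES_PASSWORD has no default, so a missing password raises KeyError
        "http_auth": (os.environ.get("ES_USER", "elastic"),
                      os.environ["ES_PASSWORD"]),
        "sniff_on_connection_fail": True,
        "sniffer_timeout": 60,
    }
```

Usage: `client = Elasticsearch(**es_client_kwargs())`.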

The export script:

```python
import csv

# get the es client
from common.util_es import connect_elk

es = connect_elk()

# Query all data and export

index = 'blog_rate'

# an empty body matches all documents
body = {
}

# _source fields to export, in column order
item = ["r_id", "a_id"]

# body = {
#     "query": {
#         "match": {"name": "Zhang San"},
#     }
# }


def ExportCsv(index, body, item):
    # first request: opens a scroll context kept alive for 5 minutes
    query = es.search(index=index, body=body, scroll='5m', size=1000)
    # first page of the results
    results = query['hits']['hits']
    # cursor used to fetch the remaining pages
    scroll_id = query['_scroll_id']
    try:
        # scroll until a page comes back empty; hits.total is not used as the
        # loop bound because ES 7+ caps it at 10,000 by default
        while True:
            # pass scroll on every call so the search context stays alive
            page = es.scroll(scroll_id=scroll_id, scroll='5m')
            hits = page['hits']['hits']
            if not hits:
                break
            results += hits
            # always continue with the most recent scroll id
            scroll_id = page['_scroll_id']
    finally:
        # release the scroll context on the server
        es.clear_scroll(scroll_id=scroll_id)

    with open('./' + index + '.csv', 'w', newline='', encoding="utf_8_sig") as flow:
        csv_writer = csv.writer(flow)
        # header row
        csv_writer.writerow(item)
        for res in results:
            # a missing field becomes an empty cell instead of raising KeyError
            csvrow1 = [res["_source"].get(i, "") for i in item]
            csv_writer.writerow(csvrow1)
    print('done!')


ExportCsv(index, body, item)
```
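The script keeps every hit in `results` before writing the file, which is fine for small indices. A minimal sketch of a streaming variant follows, assuming the same client calls as the script (`search`, `scroll`, `clear_scroll`); the helper names `iter_all_hits` and `write_hits_to_csv` are hypothetical. Rows are written page by page, so only about one page of hits is held in memory at a time.

```python
import csv
from typing import Any, Dict, Iterable, Iterator, List


def iter_all_hits(es, index: str, body: Dict[str, Any],
                  size: int = 1000, scroll: str = "5m") -> Iterator[Dict[str, Any]]:
    """Yield every hit of a scroll search, one page at a time.

    `es` is assumed to be an elasticsearch-py client (or any object exposing
    search/scroll/clear_scroll with the same keyword arguments).
    """
    page = es.search(index=index, body=body, scroll=scroll, size=size)
    scroll_id = page["_scroll_id"]
    try:
        # stop as soon as a page comes back empty
        while page["hits"]["hits"]:
            yield from page["hits"]["hits"]
            page = es.scroll(scroll_id=scroll_id, scroll=scroll)
            # always continue with the most recent scroll id
            scroll_id = page.get("_scroll_id", scroll_id)
    finally:
        # free the server-side context, even if the consumer stops early
        es.clear_scroll(scroll_id=scroll_id)


def write_hits_to_csv(hits: Iterable[Dict[str, Any]], fields: List[str], path: str) -> int:
    """Write one CSV row per hit as hits arrive; return the number of data rows."""
    count = 0
    # utf_8_sig writes a BOM so that Excel recognises UTF-8 text
    with open(path, "w", newline="", encoding="utf_8_sig") as f:
        writer = csv.writer(f)
        writer.writerow(fields)  # header row
        for hit in hits:
            source = hit.get("_source", {})
            # a missing field becomes an empty cell
            writer.writerow([source.get(name, "") for name in fields])
            count += 1
    return count
```

Usage, with the `es`, `index`, `body` and `item` variables from the script: `write_hits_to_csv(iter_all_hits(es, index, body), item, './' + index + '.csv')`. The official Python client also ships `elasticsearch.helpers.scan`, which wraps the same search/scroll/clear-scroll loop and yields hits, so it can be passed to `write_hits_to_csv` in place of `iter_all_hits`.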

Reference:

https://blog.csdn.net/github_27244019/article/details/115351640

边栏推荐

- HJ进制转换

- kubernetes部署thanos ruler的发送重复告警的一个隐秘的坑

- Application and Optimization Practice of redis in vivo push platform

- Uninstall and reinstall the latest version of MySQL database. The test is valid

- 8 figures | analyze Eureka's first synchronization registry

- Force buckle 515 Find the maximum value in each tree row

- "Three routines" of digital collection market

- kubelet驱逐机制的源码分析

- 代码提交规范

- Is it safe for flush to open an account online

猜你喜欢

What is EC blower fan?

2021 programming language ranking summary

Application of XOR. (extract the rightmost 1 in the number, which is often used in interviews)

卸载重装最新版mysql数据库亲测有效

Section Xi. Axi of zynq_ Use of DMA

Modifying MySQL port number under Linux

以动态规划的方式求解最长回文子串

GoLand IDE and delve debug Go programs in kubernetes cluster

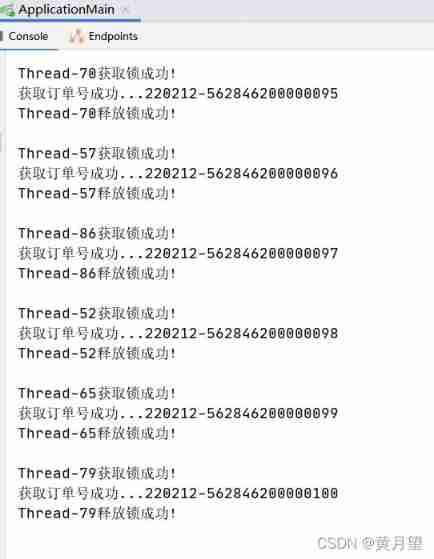

Redis implements distributed locks

Hash slot of rediscluster cluster cluster implementation principle

随机推荐

Section 5: zynq interrupt

hack the box:RouterSpace题解

Ice, protobuf, thrift -- Notes

Is it safe to open an account on Dongfang fortune

HJ进制转换

Code submission specification

Spark 离线开发框架设计与实现

2021 programming language ranking summary

A gadget can write crawlers faster

In idea, the get and set methods may be popular because the Lombok plug-in is not installed

Sword finger offer II 091 Paint the house

Installing redis on Linux

okcc呼叫中心没有电脑的坐席能不能开展工作?

Hash slot of rediscluster cluster cluster implementation principle

Solving the longest palindrome substring by dynamic programming

Static resource compression reduces bandwidth pressure and increases access speed

Rediscluster cluster mode capacity expansion node

Sentinel mechanism of redis cluster

Kubelet garbage collection (exiting containers and unused images) source code analysis

Mysql8.0和Mysql5.0访问jdbc连接