当前位置:网站首页>Notes of Teacher Li Hongyi's 2020 in-depth learning series 9

Notes of Teacher Li Hongyi's 2020 in-depth learning series 9

2022-07-24 22:59:00 【ViviranZ】

Look blind .... At least take a note

https://www.bilibili.com/video/BV1UE411G78S?from=search&

Finally, let's talk about A3C La la la !

First of all, let's review Policy Gradient, Considering discount factor and baseline, But this formula is very unstable Of , This is because in the s for a What happens later is also highly random , So I got G Of varience It's big , Although if we can repeat it enough times, we can avoid the results obtained in the example G=100 The situation of , But actually we sample Very few times , Therefore, it is natural for us to consider whether we can obtain G Instead of us sample Obtained in the process of G.

So we also review how to get this “ expect ”: Yes V Yes Q, And by definition, we know that it is indeed what we want “ expect ”.

We can know below Q The formula of is indeed the sum above , and b As we said before, the definiteness is in this state The expectations of the , So the formula in the red box means “ stay s_t take a_t And then get reward The expectations of the ” Subtract “ stay s_t Own expectations ( That is, for different action The mean value obtained by synthesizing the different results of )”、 That is to say s_t Take on a_t Of “ benefits ”( Maybe this itself state It's good , In order to prevent “ Pigs standing in the air ” Effect we want to exclude “ tuyere ” Influence - See the previous notes for details ).

But there may be a problem with this method , Namely Q and V You need two network , respectively, train, But in itself network There are errors , So the error of this result is two network The sum of is very big , And two network It's also complicated , So we just trainV, It is also because Q It can be used r and V Express .Q The expression expressed as expectation is understandable , But expect us to directly sample The result is approximate . because V It is the expectation itself, so it is expected E In fact, it is only for r, To put it bluntly, we are r( stay s To execute a To obtain the reward) The expectation of sample The result of approximately replaces . You can also imagine by looking at the blue purple formula below orange , Itself orange formula ( Just like the previous one ppt In terms of notes ) Namely “ stay s_t perform a_t Got reward Our expectations exceed those in s_t Perform various action The degree of the mean value of ”, Therefore, it is easy to know that it can be understood as “ stay s_t perform a_t Get instant reward And s_t perform a_t Arrived at s_{t+1} Of reward Expectations and s_t Difference The sum of the ”…… Hiss seems not enough for people —— That is the difference between the reward for one step and the average reward for arriving at a new place and starting point .

More specifically ——A3C This formula works best in the paper = =

Something about A2C Of tips:

1. actor and critic Parameters of can be shared ( But not completely shared , The two networks are not exactly the same ).2. each action Of output It won't make a big difference , We hope to explore extensively .

Here is A3C La ! Like Kakashi N Practice together with multiple shadow parts !

A3C Specific process : every last worker( May occupy a single CPU) First copy Parameters , Then interact with the environment update Parameters , For comparison diverse Of data, every last actor The initial point may be very different , Then each actor Separate calculation gradient, then ( The upper triangle is typo It should be the lower triangle ) hold gradient Send it back to the control center , Then overwrite the previous \theta.

Let's talk about another method :

This method , Different from the previous actor-critic Just tell you if it's good , I will also tell you which is better

The specific method is as follows :critic analogy generater What? ,GANS What is it ....? But this step is still very easy to understand

Algorithm :

More specifically , And Q-learning contrast

Find one. Q, stay s adopt Q Add a little exploration and find one a, Then get r Deposit in buffer in . Every step of sampling updates sampling updates .

Change to A3C…… More than target Q also target actor

reference:

边栏推荐

- Org.json Jsonexception: what about no value for value

- Network Security Learning (I) virtual machine

- Convert a string to an integer and don't double it

- Icassp 2022 | KS transformer for multimodal emotion recognition

- 郑慧娟:基于统一大市场的数据资产应用场景与评估方法研究

- [zero basis] SQL injection for PHP code audit

- WPF uses pathgeometry to draw the hour hand and minute hand

- The size of STM32 stack

- yolov5

- The kettle job implementation runs a kettle conversion task every 6S

猜你喜欢

【1184. 公交站间的距离】

Shardingsphere database sub database sub table introduction

ShardingSphere-数据库分库分表简介

Introduction to HLS programming

Outlook邮件创建的规则失效,可能的原因

《JUC并发编程 - 高级篇》05 -共享模型之无锁 (CAS | 原子整数 | 原子引用 | 原子数组 | 字段更新器 | 原子累加器 | Unsafe类 )

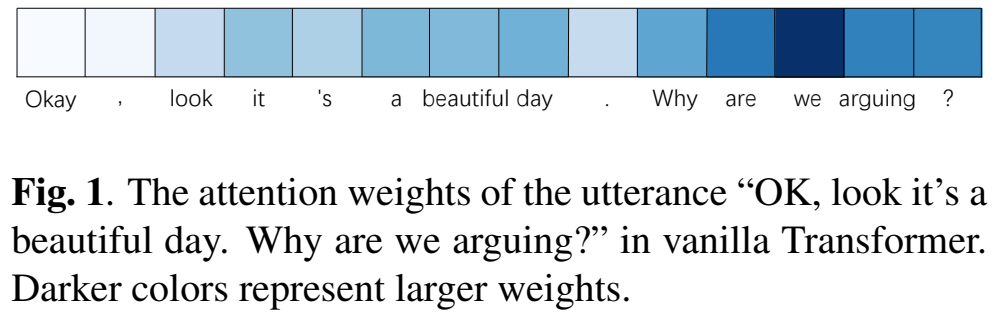

Icassp 2022 | KS transformer for multimodal emotion recognition

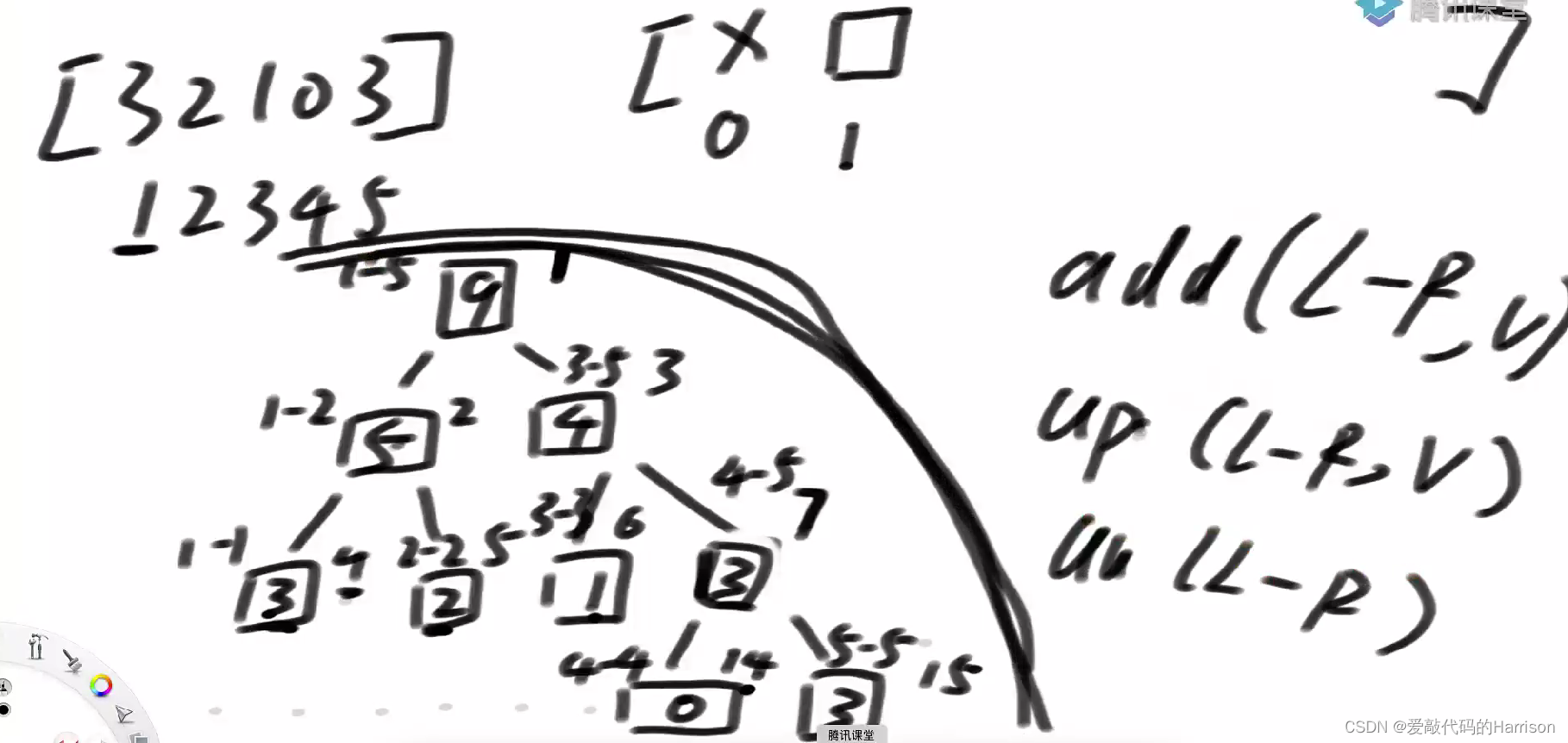

Segment tree,,

工业物联网中的时序数据

The kettle job implementation runs a kettle conversion task every 6S

随机推荐

激光雷达障碍物检测与追踪实战——cuda版欧式聚类

生成式对抗网络的效果评估

In pgplsql: = and=

TrinityCore魔兽世界服务器-注册网站

PGPLSQL中的:=和=

认识复杂度和简单排序运算

"Fundamentals of program design" Chapter 10 function and program structure 7-3 recursive realization of reverse order output integer (15 points)

Effect evaluation of generative countermeasure network

Shell调试Debug的三种方式

Network Security Learning (III) basic DOS commands

常用在线测试工具集合

【1184. 公交站间的距离】

VGA display based on FPGA

聊聊 Redis 是如何进行请求处理

First engineering practice, or first engineering thought—— An undergraduate's perception from learning oi to learning development

CA证书制作实战

On the problem that the on-board relay cannot be switched on due to insufficient power supply

About constant modifier const

Brainstorming -- using reduce method to reconstruct concat function

WPF uses pathgeometry to draw the hour hand and minute hand