
NLP Introduction + Practice: Chapter 2: Introduction to PyTorch

2022-07-24 10:31:00 【ZNineSun】


Previous chapter: 《NLP Introduction + Practice: Chapter 1: Deep Learning and Neural Networks》

Code for this chapter: https://gitee.com/ninesuntec/nlp-entry-practice/blob/master/code/2.pytorch.py

1. Introduction to PyTorch

PyTorch is a deep learning framework released by Facebook. It is popular with a wide range of users because it is easy to use and friendly.

2. PyTorch versions

Please refer to the official PyTorch website: https://pytorch.org/get-started/locally/

Detailed installation steps are given there. You can also install PyTorch in an Anaconda environment.

If your computer has no GPU, relying on the CPU for the simple computations in the early stages is no problem at all. The installation command is as follows:

3. Getting started with PyTorch

3.1 Tensors

Tensor is a general term that covers many types:

- 0th-order tensor: a scalar or constant, a 0-D tensor

- 1st-order tensor: a vector, a 1-D tensor

- 2nd-order tensor: a matrix, a 2-D tensor

- 3rd-order tensor

- …

- Nth-order tensor

We already have NumPy arrays, sequences and so on, so why do we need tensors? The PyTorch framework only understands tensors, so data has to be converted to the tensor type before PyTorch can process it.
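As a rough illustration of the orders listed above, the sketch below builds tensors of order 0 to 3 and checks their number of dimensions. The variable names are chosen only for this example.

```python
import numpy as np
import torch

scalar = torch.tensor(3.14)              # 0th-order tensor (scalar)
vector = torch.tensor([1, 2, 3])         # 1st-order tensor (vector)
matrix = torch.tensor([[1, 2], [3, 4]])  # 2nd-order tensor (matrix)
cube = torch.zeros(2, 3, 4)              # 3rd-order tensor

# dim() gives the order, shape gives the size along each dimension
for t in (scalar, vector, matrix, cube):
    print(t.dim(), tuple(t.shape))

# Data held in a NumPy array has to be converted before PyTorch can use it
from_array = torch.tensor(np.array([1.0, 2.0]))
print(type(from_array))
```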

3.2 Creating tensors in PyTorch

Before starting this section, make sure you have installed PyTorch and NumPy.

I installed them through Anaconda. If you have not, you can search Baidu yourself; there are plenty of tutorials.

- 《Configuring the Anaconda TensorFlow environment in PyCharm》

- 《Commonly used Anaconda commands》

1. Create a tensor from a Python list or sequence

import torch

import numpy as np

t1=torch.tensor([[1, -1], [1, -1]])

print(t1)

This article shows the torch and numpy imports only once. The later snippets assume they are present, so please add them yourself. The article also deliberately gives no screenshots of the results, so that everyone types the code by hand; otherwise you only skim it and gain little.

2. Create a tensor from a NumPy array

t2 = torch.tensor(np.array([[1, 2, 3], [4, 5, 6]]))

print(t2)
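One detail worth knowing here: torch.tensor copies the array's data, whereas torch.from_numpy returns a tensor that shares memory with the array. The following minimal sketch (variable names are illustrative) shows the difference.

```python
import numpy as np
import torch

arr = np.array([[1, 2, 3], [4, 5, 6]])
copied = torch.tensor(arr)      # copies the data out of the array
shared = torch.from_numpy(arr)  # shares memory with the array

arr[0, 0] = 100                 # modify the original NumPy array

print(copied[0, 0].item())  # 1: the copy is unaffected
print(shared[0, 0].item())  # 100: the shared tensor sees the change
```

Use torch.tensor when the tensor should be independent of the array, and torch.from_numpy when avoiding a copy matters.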

3. Create a tensor with the torch API

t3_0 = torch.empty(3, 4)  # create an uninitialized 3x4 tensor; it holds whatever values were already in memory

t3_1 = torch.ones([3, 4])  # create a 3x4 tensor filled with 1

t3_2 = torch.zeros([3, 4])  # create a 3x4 tensor filled with 0

t3_3 = torch.rand([3, 4])  # create a 3x4 tensor of random values drawn uniformly from [0, 1)

t3_4 = torch.randint(low=0, high=10, size=[3, 4])  # create a 3x4 tensor of random integers in [low, high)

t3_5 = torch.randn([3, 4])  # create a 3x4 tensor of random values from a normal distribution with mean 0 and variance 1
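To make the value ranges of these random constructors concrete, here is a small sketch that checks them. The seed and the sample size of 1000 are arbitrary choices for the example.

```python
import torch

torch.manual_seed(0)  # fix the seed so the run is reproducible

r = torch.rand([3, 4])                           # uniform on [0, 1)
i = torch.randint(low=0, high=10, size=[3, 4])   # integers in [0, 10)
n = torch.randn([1000])                          # standard normal samples

print(r.shape, bool((r >= 0).all()), bool((r < 1).all()))
print(i.dtype, i.min().item(), i.max().item())
# With many samples, the mean is close to 0 and the standard deviation close to 1
print(n.mean().item(), n.std().item())
```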

3.3 Common tensor methods in PyTorch

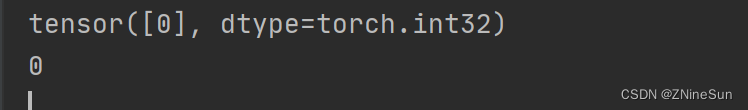

1. Get the data in a tensor (when the tensor contains only one element): tensor.item()

t4 = torch.tensor(np.arange(1))

print(t4)

print(t4.item())

2. Convert to a NumPy array

t5_1 = torch.randint(low=0, high=10, size=[3, 4])

t5_2=t5_1.numpy()

print(t5_1)

print(t5_2)
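Note that for a CPU tensor, .numpy() does not copy: the array and the tensor share the same memory. A minimal sketch of this behavior, and of using clone() when an independent array is wanted:

```python
import torch

t = torch.zeros(2, 2)
a = t.numpy()          # no copy: a and t share the same memory

t[0, 0] = 5.0          # change the tensor...
print(a[0, 0])         # ...and the array shows 5.0 as well

independent = t.clone().numpy()  # clone first to get an independent array
t[1, 1] = 7.0
print(independent[1, 1])         # 0.0: unaffected by the later change
```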

3. Get the shape: tensor.size()

t6 = torch.randint(low=0, high=10, size=[3, 4])

print(t6)

print(t6.size())

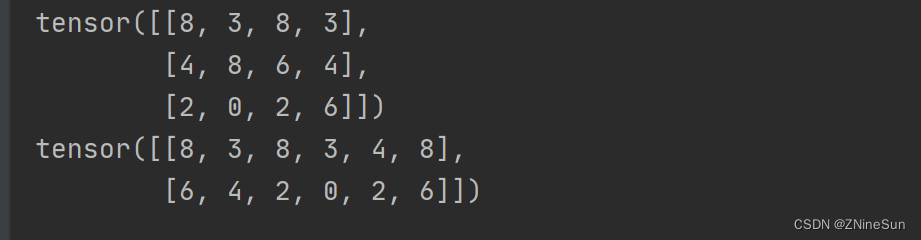

4. Change the shape: tensor.view((3,4)). Similar to reshape in NumPy. It is a shallow copy: the result shares the underlying data, and only the shape changes

t7 = torch.randint(low=0, high=10, size=[3, 4])

print(t7)

print(t7.view(2, 6))

Note: whatever the new shape is, the total number of elements must stay the same, e.g. 3*4 = 2*6
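The sketch below illustrates three points about view: the result shares data with the original, -1 lets PyTorch infer one dimension, and a shape with a different element count is rejected. The values are chosen for illustration.

```python
import torch

t = torch.arange(12).view(3, 4)   # 12 elements arranged as 3 rows, 4 columns

v = t.view(2, 6)                  # same data, seen as 2 rows, 6 columns
v[0, 0] = 100                     # writing through the view...
print(t[0, 0].item())             # ...changes the original too: 100

print(t.view(-1, 2).shape)        # -1 is inferred as 6: torch.Size([6, 2])

try:
    t.view(5, 5)                  # 25 != 12, so this is not allowed
except RuntimeError as err:
    print("view failed:", err)
```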

5. Get the number of dimensions: tensor.dim()

t8 = torch.randint(low=0, high=10, size=[3, 4])

print(t8)

print(t8.dim())

6. Get the maximum value: tensor.max()

t9 = torch.randint(low=0, high=10, size=[3, 4])

print(t9)

print(t9.max())
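Besides the overall maximum, max also accepts a dim argument, in which case it returns both the maximum values and their positions along that dimension. A small sketch with fixed values so the result is easy to verify:

```python
import torch

t = torch.tensor([[1, 9, 3],
                  [7, 2, 8]])

print(t.max())                  # tensor(9): maximum over all elements

values, indices = t.max(dim=1)  # maximum of each row
print(values)                   # tensor([9, 8])
print(indices)                  # tensor([1, 2]): column index of each row maximum
```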

7. Transpose: tensor.t()

t10 = torch.randint(low=0, high=10, size=[3, 4])

print(t10)

print(t10.t())

8. Get the value at row m, column n: tensor[m, n]

t11 = torch.randint(low=0, high=10, size=[3, 4])

print(t11)

print(t11[1, 2])  # get the value at row 2, column 3 (indices start at 0)

9. Assignment: tensor[m, n] = x sets the value at row m, column n to x

t12 = torch.randint(low=0, high=10, size=[3, 4])

print(t12)

t12[1,2]=1000

print(t12)

10. Swap two dimensions of a tensor: torch.transpose(x, 0, 1)

t13 = torch.randn(2, 3)  # 2 rows, 3 columns

print(t13)

t13=torch.transpose(t13, 0, 1)

print(t13)  # t13 now has 3 rows and 2 columns

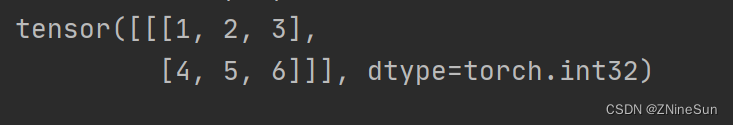

If we encounter data of this kind, what does each number in [1, 2, 3] mean?

It means that the tensor is three-dimensional, where

- 1: there is only one block of data

- 2, 3: each block of data has 2 rows and 3 columns

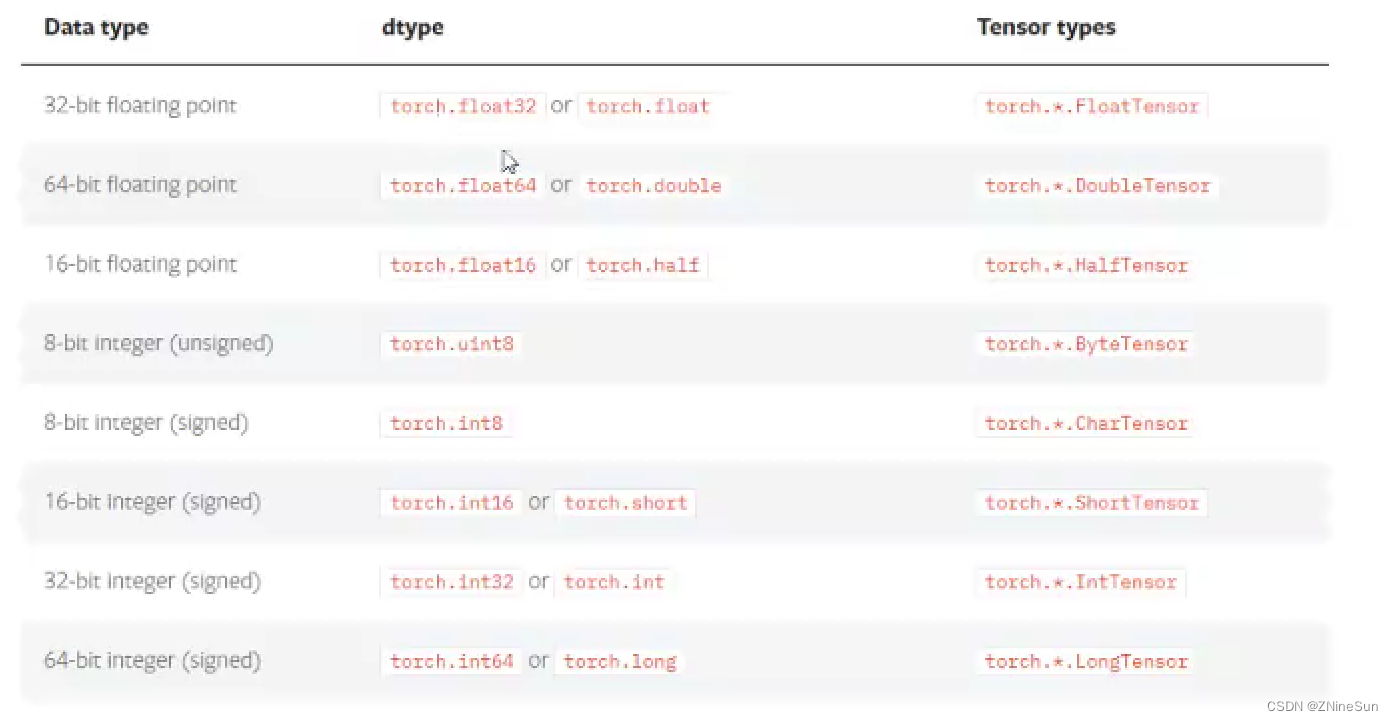
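To make the [1, 2, 3] shape concrete, the following sketch builds such a tensor, looks at the single 2x3 block inside it, and swaps its last two dimensions with torch.transpose. The values are illustrative.

```python
import torch

t = torch.arange(6).view(1, 2, 3)   # 1 block, each block 2 rows x 3 columns
print(t.size())                     # torch.Size([1, 2, 3])
print(t[0].size())                  # torch.Size([2, 3]): the single block

# Swap dimensions 1 and 2: each block becomes 3 rows x 2 columns
swapped = torch.transpose(t, 1, 2)
print(swapped.size())               # torch.Size([1, 3, 2])
print(swapped[0, 2, 1].item() == t[0, 1, 2].item())  # True: same element, indices swapped
```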

3.4 Tensor data types

1. Get a tensor's data type: x.dtype

t15 = torch.randn([3, 4])

print(t15.dtype)

2. When creating a tensor, you can also specify the data type with dtype

t16 = torch.randn([3, 4],dtype=torch.double)

print(t16.dtype)

Of course, we can also use FloatTensor, LongTensor, etc. to create tensors of the data type we need

t17 = torch.LongTensor([1, 2])

print(t17)
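The sketch below shows the data types PyTorch picks by default and a few ways to convert an existing tensor to another type. It assumes the default floating-point type has not been changed from float32.

```python
import numpy as np
import torch

ints = torch.tensor([1, 2])                    # Python ints   -> torch.int64
floats = torch.tensor([1.0, 2.0])              # Python floats -> torch.float32
from_np = torch.tensor(np.array([1.0, 2.0]))   # NumPy float64 -> torch.float64
print(ints.dtype, floats.dtype, from_np.dtype)

# Converting an existing tensor returns a new tensor of the requested type
as_float = ints.float()               # to torch.float32
as_double = ints.to(torch.float64)    # to torch.float64 (same as .double())
as_long = floats.long()               # to torch.int64
print(as_float.dtype, as_double.dtype, as_long.dtype)
```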

3.5 Other tensor operations

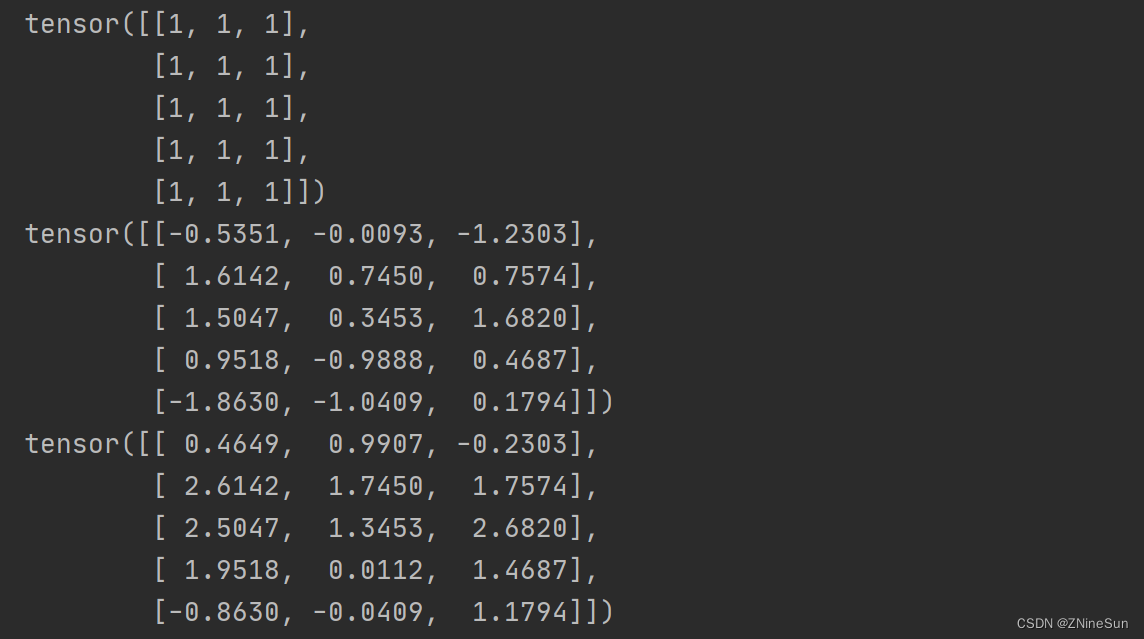

1. Adding a tensor to a tensor

t18 = t17.new_ones(5, 3, dtype=torch.float)

t18_1 = torch.randn(5, 3)

print(t18)

print(t18_1)

print(t18 + t18_1)

print(torch.add(t18, t18_1))

print(t18.add(t18_1))

t18.add_(t18_1)  # methods ending in an underscore modify t18 in place

print(t18)
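Because the example above uses random values, the difference between add and add_ is easy to miss. Here is a minimal sketch with fixed values (names are illustrative) that makes it visible.

```python
import torch

a = torch.ones(2, 2)
b = torch.full((2, 2), 2.0)

c = a.add(b)   # returns a new tensor; a is left untouched
unchanged = torch.equal(a, torch.ones(2, 2))
print(unchanged)   # True

a.add_(b)      # trailing underscore: a itself is overwritten with a + b
print(a)       # every element is now 3.0
```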

2. Operations between a tensor and a number

t19 = torch.randn(3, 3)

print("t19:", t19)

print("t19+10=", t19 + 10)
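When a tensor is combined with a number, the number is applied to every element. The same broadcasting idea extends to a smaller tensor such as a single row. A short sketch with fixed values:

```python
import torch

t = torch.tensor([[1.0, 2.0],
                  [3.0, 4.0]])

plus_ten = t + 10    # 10 is added to every element
doubled = t * 2      # every element is multiplied by 2
squared = t ** 2     # every element is squared
print(plus_ten, doubled, squared, sep="\n")

row = torch.tensor([10.0, 20.0])
with_row = t + row   # the row is broadcast across each row of t
print(with_row)
```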

3. CUDA tensors

CUDA (Compute Unified Device Architecture) is a computing platform introduced by NVIDIA.

CUDA™ is NVIDIA's general-purpose parallel computing architecture, which enables GPUs to solve complex computational problems.

The torch.cuda module adds support for CUDA tensors. Tensors are used in the same way on the CPU and on the GPU.

The .to method moves a tensor to another device (for instance, from the CPU to the GPU)

if torch.cuda.is_available():

    device = torch.device("cuda")  # CUDA device object

    y = torch.ones_like(t19, device=device)  # create a tensor directly on the CUDA device

    x = t19.to(device)  # move t19 to the CUDA device

    z = x + y

    print(z)

    print(z.to("cpu", torch.double))  # .to can also change the data type at the same time

else:

    print("Your device does not support GPU operations")
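A common way to write code that runs on either device is to choose the device once and pass it everywhere. The sketch below is one such pattern, not the only one; it falls back to the CPU when no GPU is available.

```python
import torch

# Pick the GPU when available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.ones(3, 3, device=device)   # created directly on the chosen device
y = torch.randn(3, 3).to(device)      # created on the CPU, then moved
z = x + y                             # both operands must be on the same device
print(z.device)

# A CUDA tensor must be moved back to the CPU before converting to NumPy
result = z.to("cpu").numpy()
print(result.shape)
```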
