当前位置:网站首页>The performance of the new Tokio scheduler is improved by 10 times

The performance of the new Tokio scheduler is improved by 10 times

2022-06-23 02:51:00 【to listen attentively】

The optimization of the scheduler is carried out around the following aspects :

- new std::future Mission system

- Better queue algorithm

- Optimize messaging patterns

- The improved “ Task stealing ” Algorithm

- Reduce cross thread synchronization

- Reduce memory allocation

- Reduce the atomic reference count

1 How the scheduler works

When thread concurrency is involved ,CPU The cache consistency mechanism of will work , Therefore, cross thread synchronization should be avoided as much as possible .

- Single line + Multiprocessor

- advantage : Implement a simple ; Tasks are fairly scheduled .

- shortcoming : All the processors are at the head of the queue , The time it takes to actually execute a task is much longer than the time it takes to eject the task from the queue .rust Asynchronous tasks are short and time consuming , The overhead of contention queue is large .

- Multiprocessor + Multi task queue

- Using multiple single threaded schedulers , Each processor has its own task queue , Synchronization problems can be completely avoided .

- rust In the task model of , Any thread can submit tasks to the queue , You still need thread safety .

- Either the task queue of each processor supports thread safe insertion .

- Either there are two queues per processor : Synchronous queue and asynchronous queue .

- advantage : Synchronization is almost completely avoided , Higher performance .

- shortcoming : The processor may experience severe load imbalance .

- “ Task stealing ” Scheduler

- Each processor has its own task queue . When a processor is idle , Some tasks can be stolen from other processors at the same level , When the theft fails, it will sleep .

- advantage : Avoid synchronization overhead ; Avoid task load imbalance .

- shortcoming : complex , Task queues must support “ steal ”, And requires some cross processor synchronization . If the whole process is not executed correctly ,“ steal ” Your expenses may outweigh your benefits .

- Each processor has its own task queue . When a processor is idle , Some tasks can be stolen from other processors at the same level , When the theft fails, it will sleep .

- summary

- Minimize synchronous operations .

- “ Task stealing ” It is the preferred algorithm for general scheduler .

- The processors are basically independent of each other , but “ steal ” Operation requires some synchronous operation .

2 new Tokio Scheduler

- The new mission system

- std Contains new std::future Mission system , Lighter and more flexible than previous versions .

- Waker Smaller structure , Reduced replication overhead , It also allows more critical data to be put into cache rows .

- Better task queues

- Use a fixed size for each queue . When the queue is full , The task will be pushed to a global 、 Multi user 、 In a multi producer queue . The processor needs to check the global queue , But the frequency is much lower than the local queue .

- advantage : Avoid the overhead of expanding local queues . The capacity expansion of the double ended queue is relatively heavy .

- details : The local queue uses a fixed size single producer 、 Multi consumer queue , It requires very little synchronization to work properly .

- Use a fixed size for each queue . When the queue is full , The task will be pushed to a global 、 Multi user 、 In a multi producer queue . The processor needs to check the global queue , But the frequency is much lower than the local queue .

- Optimize messaging patterns

- When a task becomes runnable , Stored in “ The next task ” In groove , Instead of adding to the end of the task queue . The processor checks the slot before checking the task queue .

- advantage : In the case of messaging , The recipient of the message is immediately scheduled , A high probability of hitting CPU Cache .

- When a task becomes runnable , Stored in “ The next task ” In groove , Instead of adding to the end of the task queue . The processor checks the slot before checking the task queue .

- Task stealing

- When the processor's run queue is empty , The processor will try to steal tasks from a peer processor at random , If not found , Try the next peer processor .

- shortcoming : Many processors complete the processing of the run queue at approximately the same time . Will cause all processors to attempt to steal at the same time , Cause contention . Although random selection of initial nodes can reduce contention , But it's still bad .

- improve : Limit the number of processors that concurrently perform stealing operations . The processor state attempted to steal is “ Searching ”. Control the number of concurrency by using atomic counters : The processor increments the atomic counter before starting the search , Decrements the atomic counter when exiting the search state .

- When the processor's run queue is empty , The processor will try to steal tasks from a peer processor at random , If not found , Try the next peer processor .

- Reduce cross thread synchronization

- Another key part of the task stealing scheduler is peer notification . The processor notifies the peer processor when a new task is observed , If the peer processor receiving the notification is in the sleep state, it will wake up and steal the task .

- shortcoming : Too many notifications can cause crowd panic problems .

- improve : When no processor is in the search state , To be notified . When a processor in search state finds a new task , It will exit the search state first , Then notify the next processor . The processor in search state will not receive any notification . The notification processor will steal half the tasks in the batch , Then notify the other processor . The third processor is awakened , Find tasks from the first two processors and steal half of them , So as to quickly achieve responsible balance .

- Another key part of the task stealing scheduler is peer notification . The processor notifies the peer processor when a new task is observed , If the peer processor receiving the notification is in the sleep state, it will wake up and steal the task .

- Reduce memory allocation

- Allocate memory only once for each task .

- Reduce the atomic reference count

- Each wakeup has a reference count to the task handle , Wake up the task , Will call task Of clone Method , Increase the atomic count , Then put the reference in the run queue . When the processor finishes executing the task , It will delete the reference , Reduce the atomic count . These atomic operations are cheap but add up .

- improve : Provide weke Method to take ownership directly , Instead of getting references . The scheduler needs to maintain a list of outstanding tasks .

- difficult : Make sure that the scheduler does not delete any tasks from its list before the task ends .

- improve : Provide weke Method to take ownership directly , Instead of getting references . The scheduler needs to maintain a list of outstanding tasks .

- Each wakeup has a reference count to the task handle , Wake up the task , Will call task Of clone Method , Increase the atomic count , Then put the reference in the run queue . When the processor finishes executing the task , It will delete the reference , Reduce the atomic count . These atomic operations are cheap but add up .

3 Use Loom Fearless concurrency

Loom Is a tool for testing concurrent code .Loom Will run multiple use cases , It also enumerates the behaviors that may be encountered in a multi-threaded environment , And verify memory access 、 Whether memory allocation and release are correct .

Reference resources

边栏推荐

- Three ways to get class

- Solution to the problem of easycvr switching MySQL database traffic statistics cannot be displayed

- Win11 client remote ECS black screen

- Small knowledge points of asset

- Get the structure of the class through reflection, little chestnut

- Reading redis source code (III) initialization and event cycle

- RI Geng series: potential memory leak caused by abuse of mutable in Google Pb structure

- Redis source code reading (I) general overview

- Scanning technology (getting started with web security 06)

- 862. triple sorting

猜你喜欢

![Buuctf misc-[actf freshman competition 2020]outline](/img/a4/ac9d14a69e0759d1e7c65740415bf7.jpg)

Buuctf misc-[actf freshman competition 2020]outline

Performance test -- Jenkins environment construction for 15jmeter performance test

8. greed

Microservice Optimization: internal communication of microservices using grpc

Mongodb aggregate query implements multi table associated query, type conversion, and returns specified parameters.

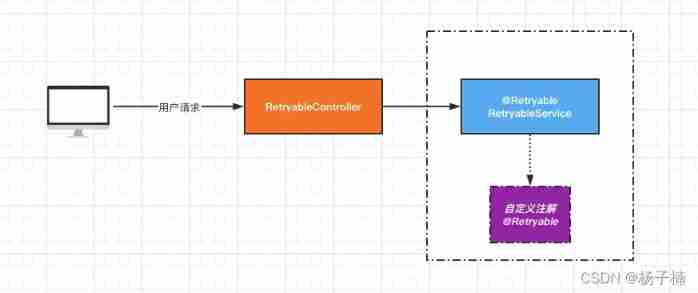

My good brother gave me a difficult problem: retry mechanism

5. concept of ruler method

Vulnhub DC-5

Performance testing -- Interpretation and practice of 16 enterprise level project framework

Soft exam information system project manager_ Information system comprehensive testing and management - Senior Information System Project Manager of soft test 027

随机推荐

Hypervisor Necromancy; Recover kernel protector (1)

Exploit format string vulnerability in CDE

No error is reported when using the Gorm framework to create a table, but the data cannot be inserted successfully

Reading redis source code (V) master-slave replication and sentinel mechanism

Use Sakura FRP intranet penetration service to build your own website / game server

Zoom/skype/ nailing / live broadcast / conference / online video real-time subtitle generation and translation, simultaneous interpretation

Practice and exploration of vivo live broadcast application technology

Reading redis source code (III) initialization and event cycle

Related concepts of TTF, TOF, woff and woff2

Source code analysis | activity setcontentview I don't flash

Vs code remote SSH configuration

Docker builds redis3 master-slave cluster and expands the capacity

Microservice Optimization: internal communication of microservices using grpc

How to generate DataMatrix code in batch through TXT file

Summary of easy-to-use MySQL interview questions (Part 1)

EDI project cases of customers in medical device industry

Special exercise split line-----------------------------

C language series - Section 4 - arrays

How to locate memory leaks

About the use of mock framework