
Common Stream operations and an exploration of how they work

2022-06-27 07:31:00 【Illusory private school】


Stream: common operations and principles

What is a Stream?

A Stream is an advanced iterator. It is not a data structure and does not store data. It implements internal iteration, which, compared with ordinary external iteration, supports parallel evaluation (efficient out of the box, whereas external iteration has to define its own thread pool to get multithreaded processing), lazy evaluation (intermediate operations do not run until a terminal operation is called), and short-circuiting (the result is returned as soon as it is known, without waiting for the whole source to be processed).

- "Stream" literally means a flow. The streams we meet first when learning Java are IO streams, which are more like the Nongfu Spring slogan, "We are only porters of nature": they move a file from one place to another without adding, deleting or modifying its contents. A Stream does more. It treats the data to be processed as a flow travelling through a pipe, and the data is processed at each node of the pipeline, for example by filtering, sorting or conversion;

- The data we need to process usually comes from a Collection, an array, and so on;

- Stream is a new feature of Java 8. For the other new features of Java 8, see the article 《Java8 New features in action》;

- One of the big changes in Java 8 is the addition of functional programming, and Stream plays the leading role in it. For what functional programming is, see the Zhihu article 《What is functional programming?》;

- Because it is functional in style, Stream is usually used together with Lambda expressions.
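As a minimal sketch of the "data flowing through a pipe" idea above, the following pipeline filters, sorts and converts a list of names. The class name `StreamBasics`, the method name and the sample data are made up for illustration; only the `java.util.stream` calls are real API.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class StreamBasics {
    // Keeps names longer than 3 characters, sorts them, and upper-cases them.
    static List<String> longNamesUpperCased(List<String> names) {
        return names.stream()
                .filter(n -> n.length() > 3)    // filtering
                .sorted()                       // sorting
                .map(String::toUpperCase)       // conversion
                .collect(Collectors.toList());  // terminal operation that triggers the work
    }

    public static void main(String[] args) {
        // prints [ALICE, CAROL]
        System.out.println(longNamesUpperCased(Arrays.asList("Tom", "Alice", "Bob", "Carol")));
    }
}
```

Each step is a Lambda expression or method reference handed to the pipeline; the source list itself is never modified.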

How is Stream used?

Operation categories

All Stream operations fall into two categories: intermediate operations and terminal operations.

**Intermediate operations:** an intermediate operation is only recorded as a marker; the actual computation is triggered only by a terminal operation.

- **Stateless:** the processing of an element is not affected by the elements before it;

- **Stateful:** a stateful intermediate operation cannot know its final result until all elements have been processed. Sorting is an example: the order cannot be determined until every element has been read.

**Terminal operations:** as the name suggests, these are the operations that produce the result of the computation.

- **Short-circuiting:** can return a result without processing all elements;

- **Non-short-circuiting:** must process all elements to get the final result.
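The categories above can be observed by counting how many source elements a pipeline actually pulls through. This is only a sketch: the class `OperationKinds` and its method names are hypothetical, and `peek` is used purely as a probe on a sequential stream.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

final class OperationKinds {
    // Short-circuiting terminal operation: stops at the first element greater than 2.
    static int visitedByAnyMatch(List<Integer> numbers) {
        AtomicInteger visited = new AtomicInteger();
        numbers.stream().peek(n -> visited.incrementAndGet()).anyMatch(n -> n > 2);
        return visited.get();
    }

    // Non-short-circuiting terminal operation: sum() has to see every element.
    static int visitedBySum(List<Integer> numbers) {
        AtomicInteger visited = new AtomicInteger();
        numbers.stream().peek(n -> visited.incrementAndGet()).mapToInt(Integer::intValue).sum();
        return visited.get();
    }

    // Stateful intermediate operation: sorted() must read everything upstream
    // before it can emit anything, even though findFirst() short-circuits.
    static int visitedBySortedFindFirst(List<Integer> numbers) {
        AtomicInteger visited = new AtomicInteger();
        numbers.stream().peek(n -> visited.incrementAndGet()).sorted().findFirst();
        return visited.get();
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5);
        System.out.println(visitedByAnyMatch(numbers));        // 3
        System.out.println(visitedBySum(numbers));             // 5
        System.out.println(visitedBySortedFindFirst(numbers)); // 5
    }
}
```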

I have seen some articles define collect as an intermediate operation. Most introductions to Stream that I have read treat collect as a terminal operation, which also matches how we normally use it, so please treat any claim that collect is an intermediate operation as a mistake.

Common operations

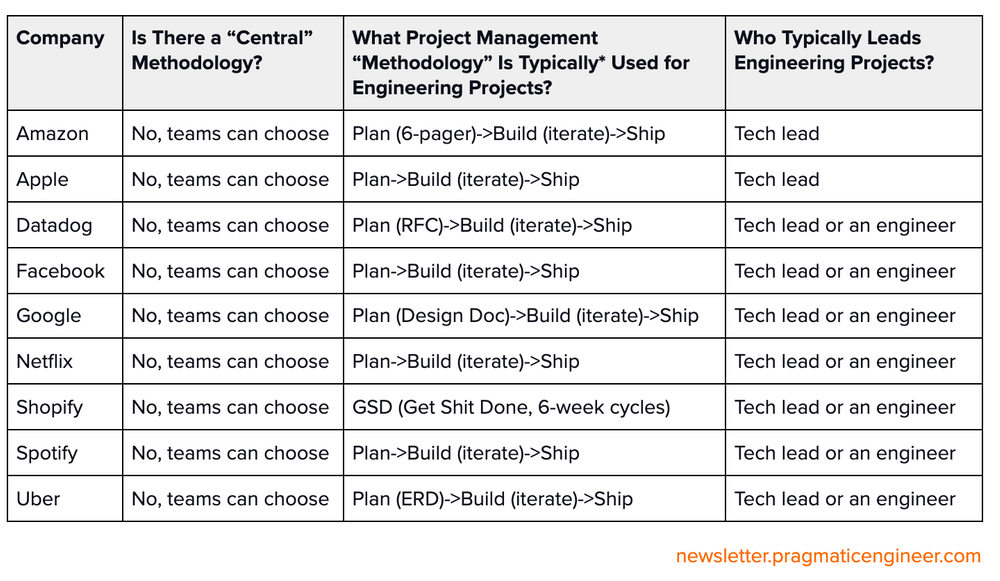

The following two figures give simple use cases for the common Stream operations. The original flow charts come from 《Java8 New characteristics》.

This post does not go into detail on the common operations, because there are already many articles of that kind online. These are the ones I read most often:

Why can I get off work on time

- The author goes by "drinks water when unhappy". The title does not quite match the content, but the content is solid, mostly application examples.

Use Stream to optimize old code: instantly clean and elegant!

- The author is JavaGuide. It briefly covers some of the operations.

Java8 stream sorting and custom comparators, very practical!

- The author is Taro Source Code. It mainly gives examples of sorting the collection types we use every day: List, Set and Map.

Java: intersection and union of two Lists

- An article I came across while working with collections; it serves as a supplement.

Why use Stream?

Declarative processing of data

The first reason, in my view, is that Stream lets us process data declaratively: operations such as filter and sorted are already written, and we just use them. With an ordinary for loop we have to write out inside the loop how to filter and so on before we finally get the result we want, which is the imperative style.

By comparison, the declarative style makes our code cleaner and more concise.
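To make the contrast concrete, here is the same task written both ways: keep the even numbers and square them. The class and method names are invented for this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class DeclarativeVsImperative {
    // Imperative: we spell out how to iterate, test and accumulate.
    static List<Integer> evenSquaresLoop(List<Integer> xs) {
        List<Integer> result = new ArrayList<>();
        for (Integer x : xs) {
            if (x % 2 == 0) {
                result.add(x * x);
            }
        }
        return result;
    }

    // Declarative: we state what we want and let the Stream do the iteration.
    static List<Integer> evenSquaresStream(List<Integer> xs) {
        return xs.stream()
                .filter(x -> x % 2 == 0)
                .map(x -> x * x)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> xs = Arrays.asList(1, 2, 3, 4);
        System.out.println(evenSquaresLoop(xs));   // [4, 16]
        System.out.println(evenSquaresStream(xs)); // [4, 16]
    }
}
```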

Compared with the for loop

As for efficiency compared with the for loop, I think the picture is roughly as described below, though I have not found anything online that proves any one view correct. Common opinions include "Stream sacrifices efficiency for concise code", "Stream's advantage is parallel processing", and "Stream is about as efficient as for, and I prefer Stream for the sake of conciseness".

I think the claim that Stream sacrifices efficiency for conciseness is questionable and cannot be generalized. What is undeniable is that functional programming gives concise code and multi-core-friendly parallel processing.

- Stream's execution efficiency differs across data structures.

- Stream's execution efficiency also differs across data sources.

- For traversing simple numbers (a list of ints), an ordinary for loop is indeed faster than a serial Stream (by 1.5 to 2.5 times). A Stream can, however, run in parallel and exploit multiple CPU cores, so a parallel stream is faster than the for loop.

- For traversing a list of objects, the ordinary for loop has no advantage over a serial Stream, let alone a parallel one.

The measured performance of Stream versus the for loop varies a lot, and can even reverse, across scenarios, data structures and hardware. Overall, though:

- Parallel Stream >> ordinary for loop ~= serial Stream (why two greater-than signs? Think it over.)

- The larger the data volume, the better Stream performs.

- A parallel Stream usually makes good use of multiple CPU cores: the more cores, the faster parallel stream computation is.

- With up to 10,000 elements, for is faster than foreach and stream. At around 100,000 elements, stream is fastest, followed by foreach, with for last. At 1,000,000 elements, parallelStream, which processes the data in parallel, is the fastest, ahead of foreach and for.
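The three variants being compared look roughly like this. The sketch only shows that they compute the same value; it makes no timing claim, since meaningful numbers need a benchmark harness such as JMH and depend on the machine. The class name `SumThreeWays` is hypothetical.

```java
import java.util.stream.LongStream;

final class SumThreeWays {
    // Ordinary for loop.
    static long sumLoop(long n) {
        long sum = 0;
        for (long i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    // Serial stream.
    static long sumSerial(long n) {
        return LongStream.rangeClosed(1, n).sum();
    }

    // Parallel stream: the range is split and summed on the common fork-join pool.
    static long sumParallel(long n) {
        return LongStream.rangeClosed(1, n).parallel().sum();
    }

    public static void main(String[] args) {
        long n = 1_000_000L;
        System.out.println(sumLoop(n));     // 500000500000
        System.out.println(sumSerial(n));   // 500000500000
        System.out.println(sumParallel(n)); // 500000500000
    }
}
```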

Processing collection data

Stream can be seen as Java 8's abstraction for working with collections, letting us handle the data in a collection in a SQL-like way. I often use Stream for traversal; compared with the nested for loops we used to write, it makes the code more concise, more intuitive and easier to read. Of course, a loop is just a loop, while a Stream processes data as a flow. To see how the iteration is actually done, we have to look at how Stream works.

Lazy evaluation

Lazy computing is also called lazy evaluation or delayed evaluation. It is very common in functional programming: a value is not computed right away, only when it is actually needed.

In Stream, this corresponds to intermediate operations. For example, if we apply a Stream operation such as filter to a List but call no terminal operation, we simply get back a Stream and nothing has been filtered yet. We can picture it as in the following figure.
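A small experiment shows the laziness described above: the predicate passed to filter is not invoked until a terminal operation runs. The class name `LazyDemo` and the counter are assumptions made for this sketch.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Collectors;
import java.util.stream.Stream;

final class LazyDemo {
    // Returns {predicate calls before the terminal operation, predicate calls after it}.
    static int[] predicateCalls(List<Integer> xs) {
        AtomicInteger calls = new AtomicInteger();
        Stream<Integer> pending = xs.stream().filter(n -> {
            calls.incrementAndGet();
            return n % 2 == 0;
        });
        int before = calls.get();              // filter() only recorded the operation
        pending.collect(Collectors.toList());  // the terminal operation runs the pipeline
        int after = calls.get();
        return new int[] { before, after };
    }

    public static void main(String[] args) {
        int[] calls = predicateCalls(Arrays.asList(1, 2, 3, 4));
        System.out.println(calls[0] + " -> " + calls[1]); // 0 -> 4
    }
}
```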

Differences from Collection

From an implementation perspective, Stream and Collection also differ in many ways:

- No storage. A stream is not a data structure that stores elements; it only conveys the data of a source.

- Functional in nature. An operation on a stream produces a result and does not modify the source. For example, filter produces a new, filtered stream; it does not remove elements from the source.

- Laziness-seeking. Many stream operations, such as filter and map, are executed lazily. Intermediate operations are always lazy.

- Possibly unbounded. A collection is always bounded (it has a finite number of elements), but a stream need not be. Short-circuiting operations such as limit(n) and findFirst() let computations on an unbounded stream complete in finite time.

- Consumable. The elements of a stream can be visited only once during the life of the stream. To visit them again, a new Stream must be generated from the source.
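Three of these differences are easy to check in a few lines: an unbounded stream cut off by limit, a source left untouched by filter, and a stream that cannot be consumed twice. The class and method names below are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

final class StreamVsCollection {
    // Unbounded: iterate() never ends on its own; limit(n) makes it finite.
    static List<Integer> firstPowersOfTwo(int n) {
        return Stream.iterate(1, x -> x * 2).limit(n).collect(Collectors.toList());
    }

    // Functional: filtering produces a new result and leaves the source as it was.
    static int sourceSizeAfterFilter() {
        List<Integer> source = new ArrayList<>(Arrays.asList(1, 2, 3, 4));
        source.stream().filter(n -> n > 2).collect(Collectors.toList());
        return source.size();
    }

    // Consumable: a second terminal operation on the same stream is rejected.
    static boolean secondUseFails() {
        Stream<String> stream = Stream.of("a", "b");
        stream.forEach(s -> { });
        try {
            stream.forEach(s -> { });
            return false;
        } catch (IllegalStateException alreadyConsumed) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(firstPowersOfTwo(5));     // [1, 2, 4, 8, 16]
        System.out.println(sourceSizeAfterFilter()); // 4
        System.out.println(secondUseFails());        // true
    }
}
```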

How Stream works

We might assume that Stream performs one full iteration for every function we call. That is not the case; a Stream implemented that way would be very inefficient.

We can see how it really iterates by reading the source code. Internally Stream is implemented as a pipeline. The basic idea is to do as much work as possible on each element as it passes along the pipeline, thus avoiding multiple iterations.

In other words, Stream only records intermediate operations as they are called. When the user calls a terminal operation, the recorded operations are executed along the pipeline in a single iteration. Following this idea, several questions need answering:

- How are the user's operations recorded?

- How are operations stacked?

- How are the stacked operations executed?
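Before looking at the source, the single-pass behaviour can be observed from the outside by logging every callback. In this sketch (hypothetical class `SinglePassDemo`, sequential stream), each element travels through filter, map and forEach before the next element is touched.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class SinglePassDemo {
    // Records the order in which the callbacks of a sequential pipeline are invoked.
    static List<String> trace(List<Integer> xs) {
        List<String> log = new ArrayList<>();
        xs.stream()
                .filter(n -> { log.add("filter " + n); return n % 2 == 1; })
                .map(n -> { log.add("map " + n); return n * 10; })
                .forEach(n -> log.add("forEach " + n));
        return log;
    }

    public static void main(String[] args) {
        // prints [filter 1, map 1, forEach 10, filter 2, filter 3, map 3, forEach 30]
        System.out.println(trace(Arrays.asList(1, 2, 3)));
    }
}
```

If every operation iterated over the whole source on its own, the log would show all the filter calls first, then all the map calls.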

Answering the key questions

From the above we know that a complete Stream operation is a triple of <data source, operation, callback function>.

We also need to know the inheritance relationships among the Stream-related classes and interfaces, shown in the figure below:

- The figure shows that everything except the primitive types, that is, reference types, is represented by ReferencePipeline.

- The three classes parallel to ReferencePipeline (IntPipeline, LongPipeline and DoublePipeline) are specializations for the primitive types.

1. How are operations recorded?

- First, the JDK source code uses the term stage to identify an operation.

- Second, a Stream operation usually takes a callback function (a Lambda expression).

As the figure above shows, calling stream() creates a Head instance that represents the head of the pipeline, which is the first stage. Calling filter() creates an intermediate operation instance of StatelessOp (stateless), calling map() creates another StatelessOp, and finally calling sorted() creates an intermediate operation instance of StatefulOp (stateful). Calling other operations likewise produces a ReferencePipeline instance. This chain of calls forms a doubly linked list in which each stage records the previous stage, its own operation and the callback function.

Source code:

1. Calling stream() creates the Head instance

2. Calling an intermediate operation such as filter or map

- These intermediate and terminal operations all live in the ReferencePipeline class, the abstract base class for an intermediate pipeline stage or pipeline source stage over elements of a given reference type.

The code wraps the callback function mapper in a Sink. Because Stream.map() is a stateless intermediate operation, map() returns a StatelessOp inner-class object (a new Stream), and calling opWrapSink() on this new Stream yields a Sink.

This Sink is how the operations are stacked, as discussed below.

2. How are operations stacked?

We now know that Stream records operations as stages, but a stage only stores its own operation. It does not know what the next stage does or what it needs.

So some kind of protocol is needed to connect the stages.

The JDK uses the Sink interface for this. Sink defines four methods, begin(), end(), cancellationRequested() and accept(), described in the table below.

| Method | Purpose |
|---|---|
| void begin(long size) | Called before traversal starts, to tell the Sink to get ready. |
| void end() | Called after all elements have been traversed, to tell the Sink that no more elements are coming. |
| boolean cancellationRequested() | Whether the operation can stop now, so that short-circuiting operations can finish as early as possible. |
| void accept(T t) | Called for each element during traversal; accepts an element and processes it. A stage wraps its own operation and callback in this method, and the previous stage only needs to call the current stage's accept(T t). |
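The protocol in the table can be sketched with a stripped-down sink. `MiniSink`, `Chained`, `filterSink` and `mapSink` below are hypothetical names for illustration; they mirror the shape of the JDK's package-private `Sink` but are not the JDK code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

final class MiniSinkDemo {
    /** Hypothetical, simplified stand-in for java.util.stream.Sink. */
    interface MiniSink<T> extends Consumer<T> {
        default void begin(long size) {}
        default void end() {}
        default boolean cancellationRequested() { return false; }
    }

    /** Holds the next sink and forwards the three control methods to it. */
    abstract static class Chained<T, R> implements MiniSink<T> {
        protected final MiniSink<R> downstream;
        Chained(MiniSink<R> next) { this.downstream = next; }
        @Override public void begin(long size) { downstream.begin(size); }
        @Override public void end() { downstream.end(); }
        @Override public boolean cancellationRequested() { return downstream.cancellationRequested(); }
    }

    static <T> MiniSink<T> filterSink(Predicate<T> keep, MiniSink<T> next) {
        return new Chained<T, T>(next) {
            @Override public void begin(long size) { downstream.begin(-1); } // size unknown after filtering
            @Override public void accept(T t) { if (keep.test(t)) downstream.accept(t); }
        };
    }

    static <T, R> MiniSink<T> mapSink(Function<T, R> mapper, MiniSink<R> next) {
        return new Chained<T, R>(next) {
            @Override public void accept(T t) { downstream.accept(mapper.apply(t)); }
        };
    }

    // Builds filter -> map -> collect by hand and drives it with begin/accept/end.
    static List<Integer> lengthsOfWordsStartingWithA(List<String> source) {
        List<Integer> out = new ArrayList<>();
        MiniSink<Integer> terminal = out::add;
        MiniSink<String> mapStage = mapSink(String::length, terminal);
        MiniSink<String> head = filterSink(s -> s.startsWith("A"), mapStage);

        head.begin(source.size());
        for (String s : source) {
            if (head.cancellationRequested()) break;
            head.accept(s);
        }
        head.end();
        return out;
    }

    public static void main(String[] args) {
        // prints [5, 7]
        System.out.println(lengthsOfWordsStartingWithA(Arrays.asList("Apple", "Banana", "Avocado")));
    }
}
```

Each stage only knows its immediate downstream sink, which is the whole point of the protocol.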

Javadoc of the Sink interface:

An extension of Consumer used to conduct values through the stages of a stream pipeline, with additional methods to manage size information, control flow, and so on. Before calling accept() on a Sink for the first time, you must first call begin() to inform it that data is coming (optionally telling the sink how much data is coming), and after all data has been sent, you must call end(). After calling end(), you should not call accept() without calling begin() again. Sink also offers a mechanism by which the sink can cooperatively signal that it does not wish to receive any more data (the cancellationRequested() method), which a source can poll before sending more data to the Sink.

A sink may be in one of two states: an initial state and an active state. It starts out in the initial state; begin() moves it to the active state, and end() moves it back to the initial state, where it can be reused. Data-accepting methods (such as accept()) are only valid in the active state.

API note:

A stream pipeline consists of a source, zero or more intermediate stages (such as filtering or mapping), and a terminal stage (such as reduction or for-each). For concreteness, consider the pipeline:

    int longestStringLengthStartingWithA
        = strings.stream()
                 .filter(s -> s.startsWith("A"))
                 .mapToInt(String::length)
                 .max();

Here, we have three stages: filtering, mapping, and reducing. The filtering stage consumes strings and emits a subset of those strings; the mapping stage consumes strings and emits ints; the reduction stage consumes those ints and computes the maximal value.

A Sink instance is used to represent each stage of this pipeline, whether the stage accepts objects, ints, longs, or doubles. Sink has entry points for accept(Object), accept(int), and so on, so that we do not need a specialized interface for each primitive specialization. (It might be called a "kitchen sink" for this omnivorous tendency.) The entry point to the pipeline is the Sink for the filtering stage, which sends some elements "downstream" into the Sink for the mapping stage, which in turn sends integral values downstream into the Sink for the reduction stage. The Sink implementation associated with a given stage is expected to know the data type of the next stage and call the correct accept method on its downstream Sink. Similarly, each stage must implement the correct accept method corresponding to the data type it accepts.

The specialized subtypes such as Sink.OfInt override accept(Object) to call the appropriate primitive specialization of accept, implement the appropriate primitive specialization of Consumer, and re-abstract the appropriate primitive specialization of accept.

The chaining subtypes such as Sink.ChainedInt not only implement Sink.OfInt, but also maintain a downstream field that represents the downstream Sink, and implement begin(), end() and cancellationRequested() to delegate to the downstream Sink. Most implementations of intermediate operations use these chaining wrappers. For example, the mapping stage in the above example would look like:

    IntSink is = new Sink.ChainedReference<U>(sink) {
        public void accept(U u) {
            downstream.accept(mapper.applyAsInt(u));
        }
    };

Here, we implement Sink.ChainedReference, meaning that we expect to receive elements of type U as input, and we pass the downstream sink to the constructor. Because the next stage expects to receive integers, we must call the accept(int) method when emitting values downstream. The accept() method applies the mapping function from U to int and passes the resulting value to the downstream Sink.

    interface Sink<T> extends Consumer<T> {}

From the call to ReferencePipeline.map() in the figure above, we can see that creating a ReferencePipeline instance requires overriding opWrapSink to produce the corresponding Sink instance. Reading the source also shows that the common operations create a ChainedReference instance.

With this protocol in place, adjacent stages can call each other easily. Every stage wraps its own operation in a Sink, and the previous stage only needs to call the next stage's accept() method without knowing what happens inside.

For stateful operations, the Sink's begin() and end() methods must be implemented as well. For example, Stream.sorted() is a stateful intermediate operation: its Sink.begin() may create a container to hold the results, accept() adds elements to the container, and end() sorts the container.

For short-circuiting operations, Sink.cancellationRequested() must be implemented. For example, Stream.findFirst() is a short-circuiting operation: as soon as one element is found, cancellationRequested() should return true so that the caller can finish the search as early as possible. The four Sink methods often cooperate to complete a computation.

In fact, the essence of the Stream API's internal implementation is how to override these four Sink methods.
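The two cases just described, a stateful sorting sink and a short-circuiting find-first sink, can be sketched as follows. All types here (`MiniSink`, `SortingSink`, `FindFirstSink`) are hypothetical simplifications written for this post, not the JDK's own classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.function.Consumer;

final class StatefulSinkDemo {
    /** Hypothetical, simplified stand-in for java.util.stream.Sink. */
    interface MiniSink<T> extends Consumer<T> {
        default void begin(long size) {}
        default void end() {}
        default boolean cancellationRequested() { return false; }
    }

    /** Stateful stage: buffers in accept(), sorts in end(), then feeds the next sink. */
    static final class SortingSink<T> implements MiniSink<T> {
        private final Comparator<? super T> comparator;
        private final MiniSink<T> downstream;
        private List<T> buffer;

        SortingSink(Comparator<? super T> comparator, MiniSink<T> downstream) {
            this.comparator = comparator;
            this.downstream = downstream;
        }
        @Override public void begin(long size) { buffer = new ArrayList<>(); }
        @Override public void accept(T t) { buffer.add(t); }
        @Override public void end() {
            buffer.sort(comparator);
            downstream.begin(buffer.size());
            for (T t : buffer) {
                if (downstream.cancellationRequested()) break; // honour short-circuiting
                downstream.accept(t);
            }
            downstream.end();
            buffer = null;
        }
    }

    /** Short-circuiting terminal stage: keeps the first element, then asks to stop. */
    static final class FindFirstSink<T> implements MiniSink<T> {
        private boolean hasValue;
        private T value;
        int accepted; // how many elements actually reached this sink

        @Override public void accept(T t) {
            accepted++;
            if (!hasValue) { hasValue = true; value = t; }
        }
        @Override public boolean cancellationRequested() { return hasValue; }
        T get() { return value; }
    }

    // Returns {smallest element, number of elements that reached the find-first sink}.
    static int[] smallestAndAcceptCount(List<Integer> source) {
        FindFirstSink<Integer> terminal = new FindFirstSink<>();
        MiniSink<Integer> head = new SortingSink<>(Comparator.<Integer>naturalOrder(), terminal);
        head.begin(source.size());
        for (Integer x : source) head.accept(x);
        head.end();
        return new int[] { terminal.get(), terminal.accepted };
    }

    public static void main(String[] args) {
        int[] r = smallestAndAcceptCount(Arrays.asList(3, 1, 2));
        System.out.println(r[0] + " after " + r[1] + " accept call(s)"); // 1 after 1 accept call(s)
    }
}
```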

3. How are the stacked operations executed?

Sink neatly wraps each step of a Stream and provides the [process -> forward] pattern for stacking operations. The gears are now meshed; the last step is to start them turning. What starts the chain? As you may have guessed, the driving force is the terminal operation: once a terminal operation is called, execution of the whole pipeline is triggered.

No other operation can follow a terminal operation, so a terminal operation does not create a new pipeline stage; intuitively, the pipeline's linked list is not extended any further. The terminal operation creates a Sink that wraps its own operation. This is the last Sink in the pipeline; it only processes data and does not pass results to a downstream Sink (there is none). In the [process -> forward] model, the terminal operation's Sink is the exit of the call chain.

Now let's look at how an upstream Sink finds its downstream Sink.

One option would be to put a Sink field in PipelineHelper, find the downstream stage in the pipeline and read its Sink field.

But the designers of the Stream library did not do that. Instead they defined the method

Sink AbstractPipeline.opWrapSink(int flags, Sink downstream) to obtain the Sink. It returns a new Sink object that contains the operation represented by the current stage and can pass results to downstream. Why create a new object instead of returning a Sink field?

Because opWrapSink() can combine the current operation with the downstream Sink (the downstream parameter above) into a new Sink. Imagine starting from the last stage of the pipeline and repeatedly calling opWrapSink() on the previous stage until the start (excluding stage0, which is the data source and holds no operation). The result is a single Sink representing all operations in the pipeline. In code:

class PipelineHelper

class AbstractPipeline extends PipelineHelper

- wrapSink collects all operations from start to end and wraps them in a single Sink, which copyInto then executes; this is equivalent to executing the whole pipeline.

- Execution logic: first call wrappedSink.begin() to tell the Sink that data is coming, then call spliterator.forEachRemaining() to iterate over the data, and finally call wrappedSink.end() to tell the Sink that data processing is finished.
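Putting the pieces together, the sketch below imitates the record, wrap and execute cycle with a tiny linked list of stages. `Stage`, `Head`, `MiniSink` and `chained` are hypothetical names; the backward loop in `wrapSink` follows the same idea as `AbstractPipeline.wrapSink`, and `toList` plays the role of a terminal operation followed by `copyInto`. Short-circuiting is left out to keep it short.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

final class MiniPipelineDemo {
    interface MiniSink<T> extends Consumer<T> {
        default void begin(long size) {}
        default void end() {}
    }

    // Builds a sink that runs the given action and forwards begin/end downstream.
    static <T> MiniSink<T> chained(MiniSink<?> down, Consumer<T> action) {
        return new MiniSink<T>() {
            @Override public void begin(long size) { down.begin(-1); } // size not tracked in this sketch
            @Override public void end() { down.end(); }
            @Override public void accept(T t) { action.accept(t); }
        };
    }

    /** A stage records the previous stage and knows how to wrap a downstream sink. */
    abstract static class Stage<IN, OUT> {
        final Stage<?, IN> previous; // null for the head
        final int depth;             // 0 for the head

        Stage(Stage<?, IN> previous) {
            this.previous = previous;
            this.depth = previous == null ? 0 : previous.depth + 1;
        }

        abstract MiniSink<IN> opWrapSink(MiniSink<OUT> downstream);

        Stage<OUT, OUT> filter(Predicate<OUT> p) {
            return new Stage<OUT, OUT>(this) {
                @Override MiniSink<OUT> opWrapSink(MiniSink<OUT> down) {
                    return chained(down, t -> { if (p.test(t)) down.accept(t); });
                }
            };
        }

        <R> Stage<OUT, R> map(Function<OUT, R> f) {
            return new Stage<OUT, R>(this) {
                @Override MiniSink<OUT> opWrapSink(MiniSink<R> down) {
                    return chained(down, t -> down.accept(f.apply(t)));
                }
            };
        }

        /** Walks from the last stage back to the head, wrapping one sink per stage. */
        @SuppressWarnings({"unchecked", "rawtypes"})
        <S> MiniSink<S> wrapSink(MiniSink<OUT> terminal) {
            MiniSink sink = terminal;
            for (Stage p = this; p.depth > 0; p = p.previous) {
                sink = p.opWrapSink(sink);
            }
            return (MiniSink<S>) sink;
        }

        /** Terminal operation: wrap everything, then push the source through once. */
        @SuppressWarnings({"unchecked", "rawtypes"})
        List<OUT> toList() {
            List<OUT> result = new ArrayList<>();
            MiniSink<OUT> terminal = result::add;
            MiniSink<Object> wrapped = wrapSink(terminal);
            Stage head = this;
            while (head.depth > 0) head = head.previous;
            List<Object> data = ((Head) head).source;
            wrapped.begin(data.size());
            data.forEach(wrapped);
            wrapped.end();
            return result;
        }
    }

    /** Stage 0: holds the data source and no operation. */
    static final class Head<T> extends Stage<T, T> {
        final List<T> source;
        Head(List<T> source) { super(null); this.source = source; }
        @Override MiniSink<T> opWrapSink(MiniSink<T> downstream) {
            throw new IllegalStateException("the head holds no operation");
        }
    }

    static List<Integer> demo(List<String> words) {
        return new Head<>(words).filter(s -> s.startsWith("A")).map(String::length).toList();
    }

    public static void main(String[] args) {
        System.out.println(demo(Arrays.asList("Apple", "Banana", "Avocado"))); // [5, 7]
    }
}
```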

4. Where is the result stored?

The table below lists the Stream terminal operations that return a result, grouped by return type:

| Return type | Terminal operations |
|---|---|
| boolean | anyMatch() allMatch() noneMatch() |
| Optional | findFirst() findAny() |
| Reduction result | reduce() collect() |
| Array | toArray() |

- For operations that return a boolean or an Optional (a container that holds a single value), only one value is returned, so it is enough to record that value in the corresponding Sink and return it when execution ends.

- For reduction operations, the final result is placed in the container specified by the caller (the container type is specified by the [collector](https://blog.csdn.net/CarpenterLee/p/5-Streams API(II).md# The collector )). collect(), reduce(), max() and min() are all reduction operations. Although max() and min() also return an Optional, underneath they are implemented by calling [reduce()](https://blog.csdn.net/CarpenterLee/p/5-Streams API(II).md# Versatile reduce).

- For operations that return an array, the result ends up in an array, of course. But before the array is returned, the results are actually stored in a data structure called Node. Node is a multi-way tree: elements are stored in the leaves, and one leaf can hold several elements. This design makes parallel execution easier.
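From the caller's side, the return types in the table look like this. The class `TerminalResults` and its method names are made up for the example; the stream calls are standard API.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

final class TerminalResults {
    // boolean result
    static boolean hasNegative(List<Integer> xs) {
        return xs.stream().anyMatch(n -> n < 0);
    }

    // Optional result: empty when nothing matches
    static Optional<Integer> firstEven(List<Integer> xs) {
        return xs.stream().filter(n -> n % 2 == 0).findFirst();
    }

    // Reduction result
    static int sum(List<Integer> xs) {
        return xs.stream().reduce(0, Integer::sum);
    }

    // max() is a reduction too, but it reports its result as an Optional
    static Optional<Integer> max(List<Integer> xs) {
        return xs.stream().max(Integer::compare);
    }

    // Array result
    static Integer[] asArray(List<Integer> xs) {
        return xs.stream().toArray(Integer[]::new);
    }

    public static void main(String[] args) {
        List<Integer> xs = Arrays.asList(3, 4, 5);
        System.out.println(hasNegative(xs));               // false
        System.out.println(firstEven(xs));                 // Optional[4]
        System.out.println(sum(xs));                       // 12
        System.out.println(max(xs));                       // Optional[5]
        System.out.println(Arrays.toString(asArray(xs)));  // [3, 4, 5]
    }
}
```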

Reference article :

Java 8 Stream Principle analysis

In depth understanding of Java Stream Assembly line

Java 8 Stream explore a mystery

Java8 Stream In depth analysis of principle

Recap

    // Example: List a = b.stream().map(M::getId).collect(Collectors.toList());  (M is the element class of b)

    // 1. First, stream() is called. Its source:
    public interface Collection<E> extends Iterable<E> {
        default Stream<E> stream() {
            return StreamSupport.stream(spliterator(), false);
        }
    }

    // 2. Step into StreamSupport
    // 3. A ReferencePipeline.Head is created to record this operation as the head of the pipeline
    public final class StreamSupport {
        public static <T> Stream<T> stream(Spliterator<T> spliterator, boolean parallel) {
            Objects.requireNonNull(spliterator);
            return new ReferencePipeline.Head<>(spliterator,
                                                StreamOpFlag.fromCharacteristics(spliterator),
                                                parallel);
        }
    }

    // 4. The intermediate operation map() is called; the intermediate and terminal operations all live in this class
    // 5. map is a StatelessOp (stateless operation); that class inherits from AbstractPipeline and overrides opWrapSink
    // 6. The Sink interface links adjacent stages directly, which is how the recorded operations are stacked
    abstract class ReferencePipeline<P_IN, P_OUT>
            extends AbstractPipeline<P_IN, P_OUT, Stream<P_OUT>>
            implements Stream<P_OUT> {
        @Override
        @SuppressWarnings("unchecked")
        public final <R> Stream<R> map(Function<? super P_OUT, ? extends R> mapper) {
            Objects.requireNonNull(mapper);
            return new StatelessOp<P_OUT, R>(this, StreamShape.REFERENCE,
                                         StreamOpFlag.NOT_SORTED | StreamOpFlag.NOT_DISTINCT) {
                @Override
                Sink<P_OUT> opWrapSink(int flags, Sink<R> sink) {
                    return new Sink.ChainedReference<P_OUT, R>(sink) {
                        @Override
                        public void accept(P_OUT u) {
                            downstream.accept(mapper.apply(u));
                        }
                    };
                }
            };
        }
    }

    // 7. wrapSink in PipelineHelper wraps the recorded operations, from the first to the last, into a single Sink
    abstract class PipelineHelper<P_OUT> {
        abstract <P_IN> Sink<P_IN> wrapSink(Sink<P_OUT> sink);
    }

    abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>
            extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {
        @Override
        @SuppressWarnings("unchecked")
        final <P_IN> Sink<P_IN> wrapSink(Sink<E_OUT> sink) {
            Objects.requireNonNull(sink);
            // Walk backwards from the last stage, wrapping the sink once per stage
            for ( @SuppressWarnings("rawtypes") AbstractPipeline p=AbstractPipeline.this; p.depth > 0; p=p.previousStage) {
                sink = p.opWrapSink(p.previousStage.combinedFlags, sink);
            }
            return (Sink<P_IN>) sink;
        }
    }

    // 8. copyInto in PipelineHelper executes the stages
    abstract class PipelineHelper<P_OUT> {
        abstract <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator);
    }

    abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>
            extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {
        @Override
        final <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator) {
            Objects.requireNonNull(wrappedSink);
            if (!StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags())) {
                // No short-circuiting operation: begin, push every element, end
                wrappedSink.begin(spliterator.getExactSizeIfKnown());
                spliterator.forEachRemaining(wrappedSink);
                wrappedSink.end();
            }
            else {
                copyIntoWithCancel(wrappedSink, spliterator);
            }
        }
    }

    // 9. Finally, the Stream's result is obtained according to the kind of terminal operation

Closing thoughts

I have left these personal remarks to the end. Putting them in the foreword felt wrong, since they are not the point of the article.

I have not blogged for a while and need to reflect on that. The conclusion is that people tend toward laziness: I stopped summarizing what I had learned over a period of time. Writing a post also means sorting out my own thinking and checking that what I write is correct, so each post takes longer. Bit by bit I slackened off. The biggest problem is that my knowledge has become more and more fragmented: I seem to be learning all the time, yet I also forget faster, and as a result I cannot apply what I have learned correctly in practice.

Last time I said I would summarize design patterns. But material at that level of abstraction is hard to distill into something useful without both theory and practice. And since these posts are public, I am not the only reader, and I do not want beginners like me to be misled by them.

Stream is something I use a lot, so I wrote this summary.

If there are any errors in the text, please point them out; I welcome your advice.
