An industry first: a MOS evaluation model for subjective video quality experience that runs on mobile devices

2022-06-28 03:05:00 【Zhiyuan community】

In real-time interactive scenarios, video quality is a key indicator of the audience experience. Evaluating video quality in real time, however, has long been a difficult problem in the industry: the user's subjective experience of video quality is an unknown that has to be made knowable.

The unknown is often what most needs to be conquered. Agora has been continuously exploring video quality evaluation methods suited to real-time interaction. After sustained research by its video algorithm experts, Agora has officially launched the industry's first MOS evaluation model for subjective video quality experience that can run on mobile devices. Using advanced deep learning algorithms, it performs no-reference evaluation of the subjective MOS (mean opinion score) of video quality in real-time interactive scenarios. We call this evaluation system Agora VQA (Video Quality Assessment).

Agora VQA is a set of objective metrics for evaluating subjective video quality experience. Before Agora VQA was launched, the industry already had two ways to evaluate video quality. The first is objective video quality assessment, which is mainly used in streaming media playback and evaluates quality according to how much information the original reference video provides. The second is subjective video quality assessment. The traditional method relies on people watching videos and scoring them. It reflects viewers' perception of video quality fairly directly, but it is time-consuming, labor-intensive, costly, and subject to individual bias.

Both traditional methods are difficult to apply to real-time interactive scenarios. To solve this, Agora built a large-scale database of subjective video quality ratings and, on that basis, trained the industry's first VQA model that can run directly on mobile devices. The model uses deep learning to estimate the subjective MOS of video quality at the receiving end of a real-time interactive session. It removes the heavy dependence of traditional subjective quality assessment on human raters, greatly improves the efficiency of video quality evaluation, and makes real-time video quality assessment possible.

Put simply, we built a database of subjective video quality ratings, designed a model using deep learning, trained it on a large number of video-MOS pairs, and finally applied it to real-time interactive scenarios to approximate subjective video quality MOS accurately. There are two difficulties: 1. how to collect the dataset, that is, how to quantify people's subjective evaluation of video quality; 2. how to build the model so that it can run on any receiving device and evaluate the received image quality in real time.

Collecting a professional, rigorous, and reliable video quality dataset

Agora first built a subjective image quality evaluation database, set up a scoring system with reference to ITU standards to collect raters' subjective scores, and then cleaned the data to obtain the subjective-experience MOS of each video.

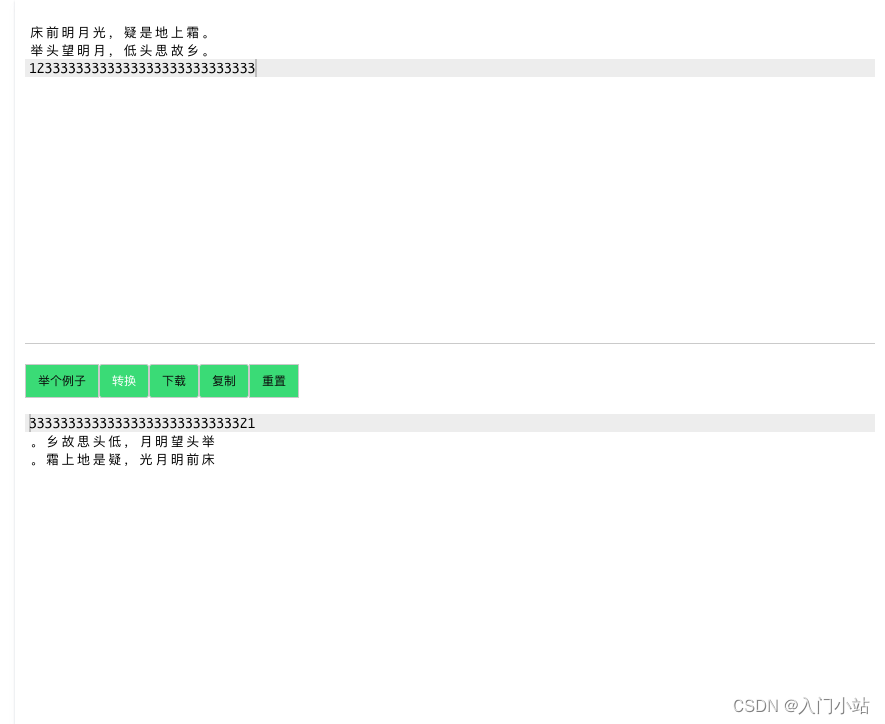

To ensure that the dataset is professional, rigorous, and reliable, Agora started at the material curation stage. Video content was drawn from diverse sources to avoid visual fatigue among raters. The material was also distributed as evenly as possible across image quality ranges, avoiding too many videos in some ranges and too few in others, which would skew the average of the subsequent scores. The following figure shows the scoring distribution we collected for one batch of videos:

Second, to better match real-time interactive scenarios, the dataset was designed to cover a wide variety of scene-specific impairment and distortion types, including: heavy noise in low light, motion blur, screen corruption, blocking artifacts, motion blur caused by camera shake, hue, saturation, highlights, and noise. Scores range from 1 to 5. Every 0.5 points forms one image quality band, and scores within a band are precise to 0.1, so the granularity is finer and each band corresponds to a detailed standard.
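The scoring scale and the balance check described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and treating each band as a half-open interval such as [1.0, 1.5), with 5.0 folded into the top band, is an assumption that the article does not spell out.

```python
from collections import Counter

def quality_band(score):
    """Map a 1.0-5.0 score (0.1 precision) to a 0.5-wide band index 0..7.

    Assumption: bands are [1.0, 1.5), [1.5, 2.0), ..., [4.5, 5.0],
    with the maximum score 5.0 folded into the last band.
    """
    tenths = round(score * 10)      # work in integer tenths to avoid float drift
    if not 10 <= tenths <= 50:
        raise ValueError("score must be between 1.0 and 5.0")
    return min((tenths - 10) // 5, 7)

def band_histogram(scores):
    """Count how many clips fall into each quality band."""
    return Counter(quality_band(s) for s in scores)

# Hypothetical scores for a handful of clips.
example_scores = [1.0, 1.4, 1.5, 2.9, 3.3, 4.6, 5.0]
histogram = band_histogram(example_scores)
```

A strongly uneven histogram would suggest that more material is needed in the sparse bands before scoring continues.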

Finally, in the data cleaning stage, we followed ITU standards and assembled a panel of at least 15 raters. We first compute the correlation between each rater and the overall mean, exclude raters with low correlation, and then average the scores of the remaining raters to obtain the final subjective-experience MOS of each video. Raters differ in where they draw the absolute line between "good" and "bad" and in how sensitive they are to quality impairments, but their judgments of "better" and "worse" still converge.
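The cleaning steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not Agora's actual pipeline: the function names are hypothetical, Pearson correlation is assumed as the correlation measure, and the `min_corr=0.75` threshold is an assumed value that the article does not give. The example uses four raters for brevity, whereas the article describes a panel of at least 15.

```python
from math import sqrt

def pearson(x, y):
    """Pearson linear correlation between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0                      # constant scores carry no ranking signal
    return cov / sqrt(var_x * var_y)

def screen_and_compute_mos(ratings, min_corr=0.75):
    """ratings maps rater id -> list of scores, one per video, same order.

    min_corr is an assumed threshold, not a value from the article.
    Returns (per-video MOS, sorted ids of the raters that were kept).
    """
    n_videos = len(next(iter(ratings.values())))
    # Step 1: overall mean score per video across all raters.
    overall = [sum(r[i] for r in ratings.values()) / len(ratings)
               for i in range(n_videos)]
    # Step 2: drop raters who correlate poorly with the overall mean.
    kept = {rid: r for rid, r in ratings.items()
            if pearson(r, overall) >= min_corr}
    if not kept:
        raise ValueError("no rater passed the correlation screen")
    # Step 3: MOS is the mean over the remaining raters.
    mos = [sum(r[i] for r in kept.values()) / len(kept)
           for i in range(n_videos)]
    return mos, sorted(kept)

ratings = {
    "a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "b": [1.5, 2.0, 3.5, 4.0, 4.5],
    "c": [1.0, 2.5, 3.0, 4.5, 5.0],
    "d": [5.0, 1.0, 4.0, 2.0, 3.0],   # inconsistent rater
}
mos, kept_raters = screen_and_compute_mos(ratings)
```

In this example rater "d" barely correlates with the panel mean and is excluded, so the MOS is the average of raters "a", "b", and "c".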

Building a mobile-ready MOS evaluation model for subjective video quality experience

After collecting the data, the next step is to use deep learning to build a MOS evaluation model for subjective video experience on top of the database, so that the model can replace manual scoring. In real-time interactive scenarios the receiver cannot obtain a lossless reference video. Agora's approach therefore defines objective VQA as a no-reference evaluation tool that operates at the receiver's decoded resolution and uses deep learning to monitor the quality of the decoded video.

● Academically rigorous model design: While training the deep learning model, we drew on many academic papers (see the references at the end of the article). For example, in non-end-to-end training, features are first extracted from the original video. We found that sampling in the spatial domain has the greatest impact on performance, whereas sampling in the temporal domain gives results that correlate best with the MOS of the original video (reference [1]). In addition, it is not only spatial features that affect the quality experience. Distortion in the temporal domain also has an impact, in particular a temporal hysteresis effect (reference [2]). This effect corresponds to two behaviors: subjective experience drops immediately when video quality falls, but recovers only slowly when video quality improves. Agora's modeling takes this phenomenon into account.

● Ultra-small mobile model with 99.1% fewer parameters: Because many real-time interactive scenarios run on mobile devices, Agora designed an ultra-small model that is easier to deploy on mobile. Compared with the large model, it has 99.1% fewer parameters and requires 99.4% less computation. Even low-end phones can run it without strain and survey video quality on the device. We also implemented an innovative compression method for deep learning models. Starting from the lightweight version and preserving the model's prediction correlation, it further reduces parameters by 59% and computation by 49.2%. As a general method, it can be extended to simplify models for other deep learning tasks.

● Performance better than publicly available large models from academia: On the one hand, the prediction correlation of the small Agora VQA model is comparable to that of large models published by academia, and even slightly better than some of them. We compared the Agora VQA model with four video quality evaluation models published by academia, IQA, BRISQUE, V-BLINDS, and VSFA, on two large public datasets, KoNViD-1k and LIVE-VQC. The experimental results are shown below:

On the other hand, Agora VQA has a large computational advantage over academia's deep learning based large models. We compared the parameter count and computation of Agora VQA with those of the VSFA model, and both are far lower for Agora VQA. This advantage makes it possible for Agora VQA to evaluate video call experience directly on the device, saving a great deal of computing resources while still providing a guaranteed level of accuracy.

● Good generalization ability: In deep learning, generalization refers to how well an algorithm adapts to new samples. In short, a model that generalizes well performs well on the training set of known data and also gives reasonable results on a test set of unseen data. In the early stage, the Agora VQA model was refined mainly on data from internal video conferencing tools and education scenarios, yet in later tests on entertainment scenarios the correlation of its results exceeded 84%. Good generalization lays a solid foundation for Agora VQA to become a video quality evaluation standard recognized by the industry.

● Better suited to RTE real-time interactive scenarios: The VQA algorithms currently offered by comparable companies are mainly used in non-real-time streaming media playback. Because of the limitations of their evaluation methods, the final results often differ noticeably from users' real subjective scores. The Agora VQA model can be applied to many real-time interactive scenarios, and the subjective video quality scores it produces are highly consistent with users' real perceptual experience. In addition, the video data does not need to be uploaded to a server. The model runs directly on the device in real time, which saves resources and effectively avoids data privacy problems for customers.
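Claims about prediction correlation, such as those above, are commonly quantified with the Pearson linear correlation coefficient (PLCC) and the Spearman rank-order correlation coefficient (SROCC) between predicted scores and human MOS. The article does not state which coefficient was used, so the sketch below is an assumption about the evaluation protocol, and the scores in it are made-up illustrative values rather than experimental results.

```python
from math import sqrt

def pearson(x, y):
    """PLCC: linear correlation between predictions and MOS."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def spearman(x, y):
    """SROCC: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Illustrative values only: human MOS and model predictions for five clips.
human_mos = [1.2, 2.5, 3.1, 4.0, 4.6]
predicted = [1.5, 3.4, 2.2, 3.9, 4.8]   # clips 2 and 3 are ranked in the wrong order

plcc = pearson(human_mos, predicted)
srocc = spearman(human_mos, predicted)
```

SROCC only looks at ordering, so it rewards a model that ranks clips the way viewers do even if its absolute scores are offset. PLCC additionally measures how linear the relationship is.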

■ Agora VQA demo: dynamically predicting video quality

From XLA to VQA: the evolution from QoS to QoE metrics

In real-time interaction, QoS (quality of service) mainly reflects the performance and quality of the audio and video technology service, while QoE (quality of experience) represents users' subjective perception of the quality and performance of the real-time interactive service. Agora previously launched the XLA experience quality standard for real-time interaction. It contains four indicators: 5-second login success rate, 600 ms video freeze rate, 200 ms audio freeze rate, and network latency below 400 ms. The monthly compliance rate of each indicator must exceed 99.5%. These four XLA indicators mainly reflect the quality of service (QoS) of real-time audio and video. Agora VQA reflects users' subjective quality of experience (QoE) with video quality more directly, and it signals that quality evaluation metrics for real-time interaction are evolving from QoS to QoE.

For enterprise customers and developers, Agora VQA also delivers value in several ways:

1. Helping enterprises avoid pitfalls when choosing a provider: When selecting a real-time audio and video service provider, many enterprises and developers rely on the subjective impression from a few demo calls, or on a simple integration test, as their selection criterion. Agora VQA gives enterprises a quantifiable evaluation standard for choosing a provider and a clearer view of how users subjectively experience the provider's audio and video quality.

2. Helping ToB enterprises provide video quality evaluation tools to their customers: Enterprises that offer video conferencing, collaboration, training, and various industry video systems can use Agora VQA to quantify video quality effectively and to show the image quality of their own products and services more intuitively and quantitatively.

3. Helping optimize product experience: Agora VQA turns users' previously unknown subjective experience of real-time interaction into something knowable, which is a great help to customers in evaluating product experience and detecting faults. Only with a fuller understanding of both objective service quality indicators and subjective user experience quality can the product experience be further optimized and the user experience ultimately improved.

Future outlook

Agora VQA still has a long way to go. For example, the VQA datasets used for model training consist mostly of video clips between 4 and 10 seconds long, whereas in a real call the recency effect must be taken into account. Simply tracking and reporting clip scores linearly may not fit the user's overall subjective impression accurately. Next, we plan to consider clarity, smoothness, interaction latency, audio-video synchronization, and other factors together to form a time-varying experience evaluation method.
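To illustrate why a plain average over clip scores can miss the viewer's overall impression, the sketch below applies a simple asymmetric smoothing that mimics the temporal hysteresis effect described earlier: perceived quality follows a drop immediately but recovers only gradually. It is a deliberately simplified illustration, not the model from reference [2] and not Agora's method. The function name and the `rise_rate` parameter are hypothetical.

```python
def hysteresis_track(clip_scores, rise_rate=0.5):
    """Return a perceived-quality trace for a sequence of per-clip scores.

    Drops are followed at once; improvements close only a fraction
    (rise_rate, an assumed value) of the remaining gap per clip.
    """
    if not 0 < rise_rate <= 1:
        raise ValueError("rise_rate must be in (0, 1]")
    perceived = []
    current = None
    for score in clip_scores:
        if current is None or score <= current:
            current = score                                   # quality fell: experience falls immediately
        else:
            current = current + rise_rate * (score - current)  # quality rose: slow recovery
        perceived.append(current)
    return perceived

# Hypothetical per-clip MOS predictions with one short quality dip.
clip_scores = [4.0, 4.0, 2.0, 4.0, 4.0]
trace = hysteresis_track(clip_scores)

linear_mean = sum(clip_scores) / len(clip_scores)
perceived_mean = sum(trace) / len(trace)
```

With these inputs the perceived trace stays depressed for two clips after the dip, so its mean is lower than the plain average of the clip scores.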

Agora VQA is also expected to be open-sourced in the future. We hope to work with industry vendors and developers to keep VQA evolving and eventually form a subjective video quality experience standard recognized across the RTE industry.

Agora VQA is currently being refined iteratively in internal systems and will be opened up gradually. We plan to integrate an online evaluation function into the SDK and to release offline evaluation tools at the same time. If you would like to learn more about Agora VQA or try it, you can click the text below to leave a message, and we will follow up with you.

References:

[1] Z. Ying, M. Mandal, D. Ghadiyaram and A. Bovik, "Patch-VQ: ‘Patching Up’ the Video Quality Problem," 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 2021, pp. 14014-14024.

[2] K. Seshadrinathan and A. C. Bovik, "Temporal hysteresis model of time varying subjective video quality," 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 2011, pp. 1153-1156.
