当前位置:网站首页>Nfnet: extension of NF RESNET without BN's 4096 super batch size training | 21 year paper

Nfnet: extension of NF RESNET without BN's 4096 super batch size training | 21 year paper

2022-06-23 15:34:00 【VincentLee】

The paper holds that Batch Normalization It is not a necessary structure of the network , On the contrary, it will bring many problems , So I started to study Normalizer-Free The Internet , It is hoped that it can not only have considerable performance, but also support large-scale training . The paper proposes ACG Gradient clipping method to assist training , It can effectively prevent gradient explosion , Also based on NF-ResNet Your thoughts will SE-ResNet Transform into NFNet series , have access to 4096 It's huge batch size Training , Performance goes beyond Efficient series

source : Xiaofei's algorithm Engineering Notes official account

The paper : High-Performance Large-Scale Image Recognition Without Normalization

- Address of thesis :https://arxiv.org/abs/2102.06171

- Paper code :https://github.com/deepmind/deepmind-research/tree/master/nfnets

Introduction

at present , Most models of computer vision benefit from depth residual networks and batch normalization, These two innovations can help train deeper networks , It achieves high accuracy in training set and test set . especially batch normalization, It can not only smooth the loss curve , Use greater learning rates and batch size Training , And regularization . However ,batch normalization Not perfect ,batch normalization There are three shortcomings in practice :

- It costs a lot of calculation , Memory consumption is high .

- There is a discrepancy in the use of training and reasoning , And bring in extra super parameters .

- Broke the training set minibatch Independence .

among , The third problem is the most serious , This will cause a series of negative problems . First ,batch normalization This makes it difficult for the model to reproduce the accuracy on different devices , And distributed training often has problems . secondly ,batch normalization It cannot be used in tasks requiring independent training samples for each round , Such as GAN and NLP Mission . Last ,batch normalization Yes batch size Very sensitive , stay batch size Low performance , Limited model size on limited devices .

therefore , Even though batch normalization It has a very powerful effect , Some researchers are still looking for a simple alternative , Not only does it need to be fairly accurate , It can also be used in a wide range of tasks . at present , Most of the alternatives focus on suppressing the weight of the residual branch , For example, a learnable scalar with zero initial value is introduced at the end of the residual branch . But these methods are not accurate enough , It just can't be used for large-scale training , The accuracy is always inferior to EfficientNets.

thus , The paper is mainly based on the previous substitution batch normalization The job of , Try to solve the core problem , The main contributions of this paper are as follows :

- Put forward Adaptive Gradient Clipping(AGC), In dimensions , Based on the proportion of Weight Norm and gradient norm, gradient clipping is carried out . take AGC Used for training Normalizer-Free The Internet , Use larger batch size And stronger data enhancements .

- Design Normalizer-Free ResNets series , Name it NFNets, stay ImageNet Up to SOTA, among NFNet-F1 And EfficientNet-B7 The precision is equal , Fast training 8.7 times , maximal NFNet Accessible 86.5%top-1 Accuracy rate .

- Experimental proof , stay 3 After pre training on the private data set of 100 million tags , And then ImageNet on finetune, The accuracy is comparable to batch normalization The network should be high , The best model achieves 89.2%top-1 Accuracy rate .

Understanding Batch Normalization

This paper discusses batch normalization Several advantages of , Let's talk about it briefly :

- downscale the residual branch:batch normalization It limits the weight of the residual branch , Deflect the signal skip path Direct transmission , Help train ultra deep networks .

- eliminate mean-shift: The activation function is asymmetric and its mean value is non-zero , The eigenvalues activated at the initial stage of training will become larger and positive ,batch normalization Just can eliminate this problem .

- regularizing effect: because batch normalization Training is done with minibatch Statistics , It's equivalent to the present batch Noise is introduced , Play the role of regularization , Can prevent over fitting , Improve accuracy .

- allows efficient large-batch training:batch normalization Can smooth loss curve , Greater learning rates and bach size Training .

Towards Removing Batch Normalization

The research of this paper is based on the author's previous Normalizer-Free ResNets(NF-ResNets) Expand ,NF-ResNets Getting rid of normalization There is still quite good training and test accuracy after the layer .NF-ResNets The core of is to adopt $h{i+1}=h_i+\alpha f_i(h_i/\beta_i)$ Formal residual block,$h_i$ For the first time $i$ Input of residual blocks ,$f_i$ For the first time $i$ individual residual block Residual branch of .$f_i$ Special initialization is required , It has the function of keeping the variance constant , namely $Var(f_i(z))=Var(z)$.$\alpha=0.2$ Used to control the variance variation ,$\beta_i=\sqrt{Var(h_i)}$ by $h_i$ Standard deviation . after NF-ResNet Of residual block After processing , The variance of the output becomes $Var(h{i+1})=Var(h_i)+\alpha^2$.

Besides ,NF-ResNet Another core of is Scaled Weight Standardization, Used to solve the problems caused by the activation layer mean-shift The phenomenon , Reinitialize the convolution layer with the following weights :

among ,$\mui=(1/B)\sum_jW{ij}$ and $\sigma^2i=(1/N)\sum_j(W{ij}-\mu_i)^2$ Is a line corresponding to the convolution kernel (fan-in) The mean and variance of . in addition , The output of the nonlinear activation function needs to be multiplied by a specific scalar $\gamma$, The combination of the two ensures that the variance does not change .

Previously published articles also have NF-ResNet A detailed interpretation of , Those who are interested can go and have a look .

Adaptive Gradient Clipping for Efficient Large-Batch Training

Gradient tailoring can help training use a greater learning rate , It can also accelerate convergence , Especially when the loss curve is not ideal or large batch size Training scenario . therefore , The paper thinks that gradient clipping can help NF-ResNet Large adaptation batch size Training scene . For gradient vectors $G=\partial L/\partial\theta$, The standard gradient clipping is :

Crop threshold $\lambda$ It is a super parameter that needs debugging . Based on experience , Although gradient tailoring can help training with larger batch size, But the effect of the model on the threshold $\lambda$ The setting of is very sensitive , Depending on the depth of the model 、batch size And learning rate . therefore , The paper puts forward a more convenient Adaptive Gradient Clipping(AGC).

Definition $W^l\in\mathbb{R}^{N\times M}$ and $G^l\in\mathbb{R}^{N\times M}$ by $l$ Layer weight matrix and gradient matrix ,$|\cdot|_F$ by F- norm ,ACG The algorithm uses the ratio between gradient norm and weight norm $\frac{|G^l|_F}{|W^l|_F}$ For dynamic gradient clipping . In practice , It is found that according to convolution kernel (unit-wise) The effect of gradient clipping is better than that of the whole convolution kernel , Final ACG Algorithm for :

Crop threshold $\lambda$ Is a super parameter , Set up $|W_i|^{*}_F=max(|W_i|_F, \epsilon=10^{-3})$, Avoid zero initialization , The parameter always cuts the gradient to zero . With the help of AGC Algorithm ,NF-ResNets You can use bigger batch size(4096) Training , You can also use more complex data enhancements . The most optimal $\lambda$ Consider the optimizer 、 Learning rate and batch size, Discover through practice , The bigger batch size The smaller $\lambda$, such as batch size=4096 Use $\lambda=0.01$.

ACG The algorithm is similar to the optimizer normalization , such as LARS.LARS The norm of the weight update value is fixed as the ratio of the weight norm $\Delta w=\gamma \eta \frac{|w^l|}{|\nabla L(w^l)|} * \nabla L(w^l_t)$, Thus ignoring the magnitude of the gradient , Only the gradient direction is preserved , It can alleviate the phenomenon of gradient explosion and gradient disappearance .ACG The algorithm can be considered as a relaxed version of the optimizer normalization , The maximum gradient is constrained based on the weight norm , But it does not constrain the lower bound of the gradient or ignore the magnitude of the gradient . The paper also tries ACG and LARS Use it together , I found that the performance decreased instead .

Normalizer-Free Architectures with Improved Accuracy and Training Speed

The paper takes GELU Activation of SE-ResNeXt-D Model as Normalizer-Free The foundation of the Internet , In addition to training, join ACG Outside , The main improvements are as follows :

- take $3\times 3$ Convolution becomes grouping convolution , The dimensions of each group are fixed as 128, The number of groups is determined by the input dimension of the convolution . Smaller grouping dimensions can reduce the amount of theoretical calculation , However, the reduction of computing density leads to the inability to make good use of the advantages of device dense computing , It doesn't actually bring more acceleration .

- ResNet Deep expansion of ( from resnNet50 Extended to ResNet200) Mainly focused on stage2 and stage3, and stage1 and stage4 keep 3 individual block In the form of . Such an approach is not optimal , Because regardless of low-level features or high-level features , Need enough space to learn . therefore , The paper first formulates the minimum F0 The Internet stage Of block The number of $1,2,6,3$, Subsequent larger networks are expanded in multiples on this basis .

- ResNet Of stage Dimension for $256,512,1024,2048$, After testing , Change it to $256,512,1536,1536$,stage3 Adopt larger capacity , Because it's deep enough , Need more capacity to collect features , and stage4 The main reason for not increasing the depth is to maintain the training speed .

- take NF-ResNet Of bottleneck residual block Applied to the SE-ResNeXt And modify , In addition to the original foundation, a $3\times 3$ Convolution , There is only a small increase in the amount of calculation .

- Build a scaling strategy to produce models of different computing resources , It is found that width expansion has little effect on network gain , So only the scaling of depth and input resolution is considered . As I said before , Deep expansion of the basic network in the form of multiples , Scale resolution at the same time , Make its training and testing speed reach half of the previous level .

- When the network volume increases , Strengthen regularization strength . It is found through experiments that , adjustment weight decay and stochastic depth rate( The training process makes some random block The residual branch of is invalid ) There are no big gains , So by increasing dropout Of drop rate To achieve the purpose of regularization . Due to lack of network BN Display regularization of , So this step is very important , Prevent over fitting .

According to the above modification , It is concluded that the NFNet The parameters of the series are shown in table 1 Shown . There is a global pooling layer at the end of the network , So the resolution of training and testing can be different .

Experiment

contrast AGC In different batch size The next effect , as well as $\lambda$ And batch size The relationship between .

stay ImageNet Compare the performance of networks of different sizes .

be based on ImageNet Of 10 epoch Pre training weights , Conduct NF-ResNet Transform and Fine-tuning, The performance is shown in the table 4 Shown .

Conclusion

The paper holds that Batch Normalization It is not a necessary structure of the network , On the contrary, it will bring many problems , So I started to study Normalizer-Free The Internet , It is hoped that it can not only have considerable performance, but also support large-scale training . The paper proposes ACG Gradient clipping method to assist training , It can effectively prevent gradient explosion , Also based on NF-ResNet Your thoughts will SE-ResNet Transform into NFNet series , have access to 4096 It's huge batch size Training , Performance goes beyond Efficient series .

边栏推荐

- php 二维数组插入

- 基因检测,如何帮助患者对抗疾病?

- Redis缓存三大异常的处理方案梳理总结

- Error creating bean with name xxx Factory method ‘sqlSessionFactory‘ threw exception; nested excepti

- The work and development steps that must be done in the early stage of the development of the source code of the live broadcasting room

- How can genetic testing help patients fight disease?

- 这届文娱人,将副业做成了主业

- [cloud based co creation] intelligent supply chain plan: improve the decision-making level of the supply chain and help enterprises reduce costs and increase efficiency

- Print memory station information

- 百萬獎金等你來拿,首届中國元宇宙創新應用大賽聯合創業黑馬火熱招募中!

猜你喜欢

How to solve the problem that iterative semi supervised training is difficult to implement in ASR training? RTC dev Meetup

变压器只能转换交流电,那直流电怎么转换呢?

JS traversal array (using the foreach () method)

Sfod: passive domain adaptation and upgrade optimization, making the detection model easier to adapt to new data (attached with paper Download)

《墨者学院——SQL手工注入漏洞测试(MySQL数据库)》

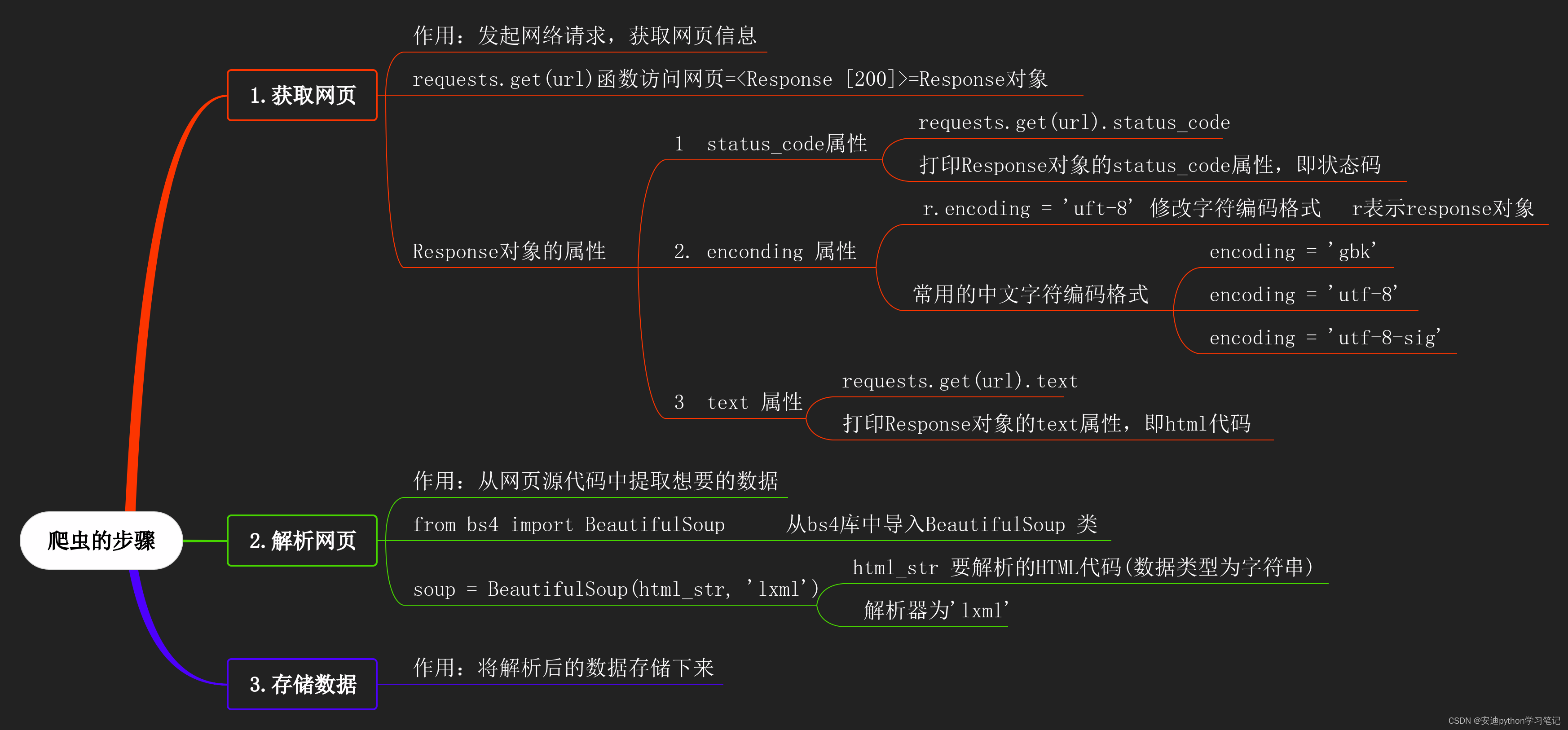

12 BeautifulSoup类的初始化

The idea and method of MySQL master-slave only synchronizing some libraries or tables

SQL注入漏洞(原理篇)

Half wave loss equal thickness and equal inclination interference

JS garbage collection

随机推荐

RF Analyzer Demo搭建

golang 重要知识:mutex

JS garbage collection

【Pyside2】 pyside2的窗口在maya置顶(笔记)

Pop() element in JS

小米为何深陷芯片泥潭?

山东:美食“隐藏款”,消费“扫地僧”

Important knowledge of golang: sync Cond mechanism

聚合生态,使能安全运营,华为云安全云脑智护业务安全

After nine years at the helm, the founding CEO of Allen Institute retired with honor! He predicted that Chinese AI would lead the world

Jsr303 data verification

Usestate vs useref and usereducer: similarities, differences and use cases

golang 重要知识:sync.Once 讲解

快速排序的简单理解

进销存软件排行榜前十名!

Convert JSON file of labelme to coco dataset format

百萬獎金等你來拿,首届中國元宇宙創新應用大賽聯合創業黑馬火熱招募中!

Important knowledge of golang: detailed explanation of context

【云驻共创】制造业企业如何建设“条码工厂”

Horizon development board commissioning