
Hue: Fixing the Error Raised After Creating a New Account

2022-06-27 12:37:00 【51CTO】

```
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO conf.Configuration: resource-types.xml not found
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (32768 MB per container)
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO yarn.Client: Will allocate AM container, with 2048 MB memory including 1024 MB overhead
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO yarn.Client: Setting up container launch context for our AM
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO yarn.Client: Setting up the launch environment for our AM container
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO yarn.Client: Preparing resources for our AM container
22/06/26 19:03:45 INFO client.SparkClientImpl: Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=wangyx, access=WRITE, inode="/user/wangyx":mapred:mapred:drwxr-xr-x
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:400)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:256)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:194)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1855)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1839)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1798)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirMkdirOp.mkdirs(FSDirMkdirOp.java:61)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3101)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1123)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:696)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at java.security.AccessController.doPrivileged(Native Method)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at javax.security.auth.Subject.doAs(Subject.java:422)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)
22/06/26 19:03:45 INFO client.SparkClientImpl: 
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:121)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:88)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2341)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2315)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1248)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1245)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:1262)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:1237)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:2209)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:673)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.yarn.Client.prepareLocalResources(Client.scala:428)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.yarn.Client.createContainerLaunchContext(Client.scala:868)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:183)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.yarn.Client.run(Client.scala:1143)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1606)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:851)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:926)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:935)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
22/06/26 19:03:45 INFO client.SparkClientImpl: Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=wangyx, access=WRITE, inode="/user/wangyx":mapred:mapred:drwxr-xr-x
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:400)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:256)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:194)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1855)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1839)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1798)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSDirMkdirOp.mkdirs(FSDirMkdirOp.java:61)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3101)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1123)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:696)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at java.security.AccessController.doPrivileged(Native Method)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at javax.security.auth.Subject.doAs(Subject.java:422)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)
22/06/26 19:03:45 INFO client.SparkClientImpl: 
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1499)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Client.call(Client.java:1445)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.Client.call(Client.java:1355)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at com.sun.proxy.$Proxy9.mkdirs(Unknown Source)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:640)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at java.lang.reflect.Method.invoke(Method.java:498)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at com.sun.proxy.$Proxy10.mkdirs(Unknown Source)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2339)
22/06/26 19:03:45 INFO client.SparkClientImpl: 	... 20 more
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO util.ShutdownHookManager: Shutdown hook called
22/06/26 19:03:45 INFO client.SparkClientImpl: 22/06/26 19:03:45 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-ca9cf15c-8a40-40e1-83ee-e6457fa4577e
22/06/26 19:03:46 ERROR client.SparkClientImpl: Error while waiting for Remote Spark Driver to connect back to HiveServer2.
java.util.concurrent.ExecutionException: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
	at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41)
	at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103)
	at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90)
	at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104)
	at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100)
	at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77)
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131)
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132)
	at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131)
	at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122)
	at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)
	at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)
	at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200)
	at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843)
	at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563)
	at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339)
	at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1328)
	at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)
	at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:187)
	at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:409)
	at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:836)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:772)
	at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:313)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:227)
Caused by: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
	at org.apache.hive.spark.client.SparkClientImpl$2.run(SparkClientImpl.java:495)
	at java.lang.Thread.run(Thread.java:748)
22/06/26 19:03:46 ERROR spark.SparkTask: Failed to execute Spark task "Stage-1"
org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session 281661e4-07fd-4d6a-84c2-f3bd2a37c0b5_0: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.getHiveException(SparkSessionImpl.java:286)
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:135)
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132)
	at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131)
	at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122)
	at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)
	at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)
	at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200)
	at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843)
	at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563)
	at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339)
	at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1328)
	at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)
	at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:187)
	at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:409)
	at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:836)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:772)
	at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:313)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:227)
Caused by: java.lang.RuntimeException: Error while waiting for Remote Spark Driver to connect back to HiveServer2.
	at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:124)
	at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90)
	at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104)
	at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100)
	at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77)
	at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131)
	... 22 more
Caused by: java.util.concurrent.ExecutionException: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
	at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41)
	at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103)
	... 27 more
Caused by: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
	at org.apache.hive.spark.client.SparkClientImpl$2.run(SparkClientImpl.java:495)
	at java.lang.Thread.run(Thread.java:748)
FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session 281661e4-07fd-4d6a-84c2-f3bd2a37c0b5_0: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
22/06/26 19:03:46 ERROR ql.Driver: FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session 281661e4-07fd-4d6a-84c2-f3bd2a37c0b5_0: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?
22/06/26 19:03:46 INFO ql.Driver: Completed executing command(queryId=wangyx_20220626190342_4084f851-bd19-441f-9198-7de25138fef4); Time taken: 3.59 seconds
22/06/26 19:03:46 INFO conf.HiveConf: Using the default value passed in for log id: 281661e4-07fd-4d6a-84c2-f3bd2a37c0b5
```


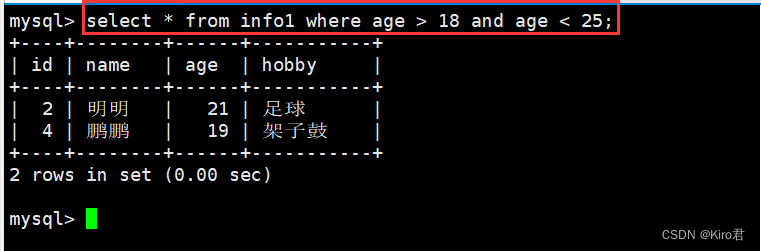

22/06/26 19:03:45 INFO client.SparkClientImpl: Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=wangyx, access=WRITE, inode="/user/wangyx":mapred:mapred:drwxr-xr-x

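The root cause is an HDFS permission error. User `wangyx` needs WRITE access to `/user/wangyx`, but that directory is owned by `mapred:mapred` with mode `drwxr-xr-x`. Because `spark-submit` cannot create its staging files there, Hive ultimately reports only "spark-submit process failed with exit code 1". The fix is as follows: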
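The error line above can be read as a POSIX-style permission check: HDFS selects exactly one permission class (owner, then group, then other) and tests that class's `w` bit. The following is a minimal Python sketch of that reasoning under a simplified model. It ignores HDFS ACLs, the HDFS superuser, and how group membership is actually resolved, and `parse_inode`, `can_write`, and the `user_groups` argument are hypothetical names used only for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Inode:
    path: str
    owner: str
    group: str
    mode: str  # e.g. "drwxr-xr-x"


# Matches the inode field of the error, e.g. inode="/user/wangyx":mapred:mapred:drwxr-xr-x
INODE_RE = re.compile(r'inode="([^"]+)":([^:]+):([^:]+):([-dlrwxstST]{10})')


def parse_inode(message: str) -> Inode:
    """Extract path, owner, group and mode from an AccessControlException message."""
    m = INODE_RE.search(message)
    if m is None:
        raise ValueError("no inode=... field found in message")
    return Inode(*m.groups())


def can_write(user: str, user_groups: set, inode: Inode) -> bool:
    """Simplified check (no ACLs, no superuser): pick ONE class, then test its 'w' bit."""
    bits = inode.mode[1:]  # drop the file-type character ('d' for a directory)
    if user == inode.owner:
        cls = bits[0:3]
    elif inode.group in user_groups:
        cls = bits[3:6]
    else:
        cls = bits[6:9]
    return cls[1] == "w"


msg = ('Permission denied: user=wangyx, access=WRITE, '
       'inode="/user/wangyx":mapred:mapred:drwxr-xr-x')
inode = parse_inode(msg)
print(inode.owner, inode.mode)  # mapred drwxr-xr-x
print(can_write("wangyx", {"wangyx"}, inode))  # False: wangyx falls into "other" (r-x)
```

Under this model, `wangyx` is neither the owner nor in the `mapred` group, so only the `r-x` "other" bits apply and the write is refused. Any fix must therefore give `wangyx` a class that has `w` on `/user/wangyx`, for example by making `wangyx` the directory's owner.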
