Convolutional neural network model -- GoogLeNet network structure and code implementation

2022-07-23 16:42:00 【1 + 1= Wang】

GoogLeNet network overview

Original GoogLeNet paper: Going Deeper with Convolutions: https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Szegedy_Going_Deeper_With_2015_CVPR_paper.pdf

GoogLeNet was proposed in 2014 by Christian Szegedy et al. and introduced a new deep learning architecture.

The main innovations of the GoogLeNet network are:

It introduces the Inception structure, which convolves the input at several kernel sizes in parallel and then merges the results;

It uses 1x1 convolutions for dimension reduction and channel mapping;

It adds two auxiliary classifiers to help training;

An auxiliary classifier classifies from the output of an intermediate layer; during training its loss is added to the main loss with a smaller weight, and it is not used at inference.

It applies average pooling before the final classifier instead of large fully connected layers, which greatly reduces the number of parameters.
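To make the saving from 1x1 dimension reduction concrete, here is a small sketch in plain Python. The helper `conv_params` is a hypothetical name introduced for illustration; the channel numbers (192 in, 16 reduced, 32 out) are those of the 5x5 branch of the Inception3a module used later in this article.

```python
def conv_params(in_channels, out_channels, kernel_size, bias=True):
    """Number of learnable parameters of a 2D convolution with a square kernel."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


# 5x5 convolution applied directly to the 192-channel input
direct = conv_params(192, 32, 5)

# 1x1 reduction to 16 channels, followed by the 5x5 convolution
reduced = conv_params(192, 16, 1) + conv_params(16, 32, 5)

print(direct, reduced, round(direct / reduced, 2))
```

Under these assumptions the reduced branch needs roughly a tenth of the parameters of the direct 5x5 convolution.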

GoogLeNet Network structure

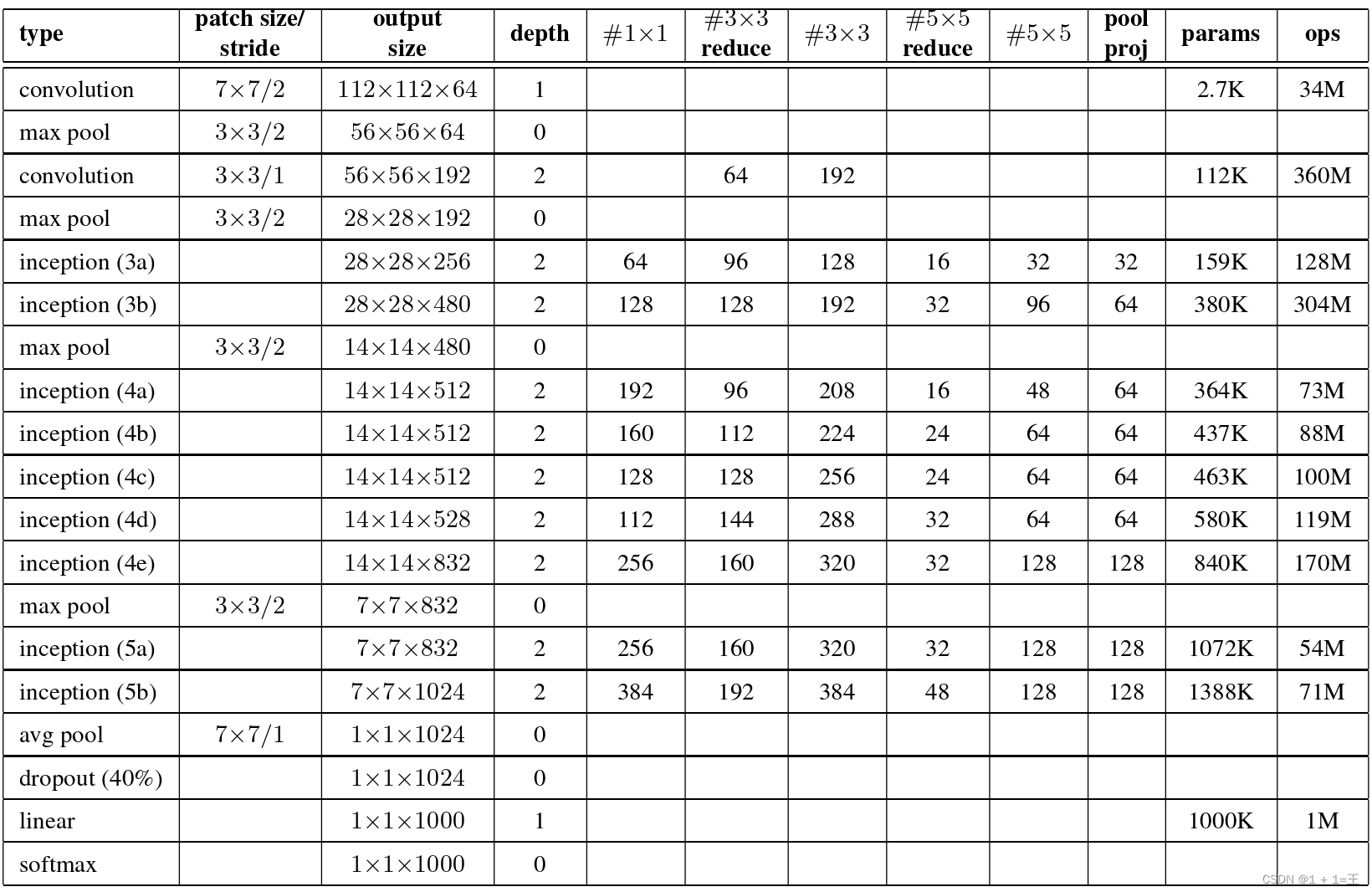

GoogLeNet The complete network structure of is as follows :

Next, we will explain it layer by layer and combine it with code analysis

Layers before Inception

Before entering the Inception structure, the GoogLeNet network first stacks two convolutions (in fact three, since there is also a 1x1 convolution) and two max pooling layers.

# input(3,224,224)
self.front = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),    # output(64,112,112)
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),   # output(64,56,56)
    nn.Conv2d(64, 64, kernel_size=1),                        # output(64,56,56)
    nn.ReLU(inplace=True),                                   # the paper applies ReLU after every convolution
    nn.Conv2d(64, 192, kernel_size=3, stride=1, padding=1),  # output(192,56,56)
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),   # output(192,28,28)
)
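The shape comments in the block above can be checked with the usual output-size formulas. The sketch below is plain Python; `conv_out` and `pool_out_ceil` are hypothetical helper names, and the layer hyperparameters are copied from the `self.front` definition.

```python
import math


def conv_out(size, kernel_size, stride=1, padding=0):
    """Output size of a convolution along one spatial dimension (floor rounding)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def pool_out_ceil(size, kernel_size, stride, padding=0):
    """Output size of a pooling layer with ceil_mode=True along one dimension."""
    out = math.ceil((size + 2 * padding - kernel_size) / stride) + 1
    # the last window must start inside the input or its left padding
    if (out - 1) * stride >= size + padding:
        out -= 1
    return out


layers = [
    ("conv 7x7", conv_out, dict(kernel_size=7, stride=2, padding=3)),
    ("max pool", pool_out_ceil, dict(kernel_size=3, stride=2)),
    ("conv 1x1", conv_out, dict(kernel_size=1)),
    ("conv 3x3", conv_out, dict(kernel_size=3, padding=1)),
    ("max pool", pool_out_ceil, dict(kernel_size=3, stride=2)),
]

trace = [224]  # spatial size of the input image
for name, fn, kwargs in layers:
    trace.append(fn(trace[-1], **kwargs))

print(trace)
```

With `ceil_mode=True` the two pooling layers halve 112 to 56 and 56 to 28 exactly; with floor rounding they would give 55 and 27 instead.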

Inception structure

An Inception module changes only the number of channels of the feature map, not its spatial size.

The Inception structure is relatively complex, so we define a separate class to build it; the number of channels in each layer is controlled by its parameters.

class Inception(nn.Module):
    '''
    in_channels: number of input channels
    out1x1: number of output channels of branch 1
    in3x3: number of input channels of the 3x3 convolution in branch 2
    out3x3: number of output channels of the 3x3 convolution in branch 2
    in5x5: number of input channels of the 5x5 convolution in branch 3
    out5x5: number of output channels of the 5x5 convolution in branch 3
    pool_proj: number of output channels of branch 4 (1x1 convolution after max pooling)
    '''
    def __init__(self, in_channels, out1x1, in3x3, out3x3, in5x5, out5x5, pool_proj):
        super(Inception, self).__init__()
        # branch 1: 1x1 convolution
        self.branch1 = nn.Sequential(
            nn.Conv2d(in_channels, out1x1, kernel_size=1),
            nn.ReLU(inplace=True)
        )
        # branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch2 = nn.Sequential(
            nn.Conv2d(in_channels, in3x3, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in3x3, out3x3, kernel_size=3, padding=1),
            nn.ReLU(inplace=True)
        )
        # branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_channels, in5x5, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in5x5, out5x5, kernel_size=5, padding=2),
            nn.ReLU(inplace=True)
        )
        # branch 4: 3x3 max pooling followed by a 1x1 projection
        self.branch4 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
            nn.Conv2d(in_channels, pool_proj, kernel_size=1),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        branch4 = self.branch4(x)
        outputs = [branch1, branch2, branch3, branch4]
        return torch.cat(outputs, 1)  # concatenate along the channel dimension
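Why the four branches can be concatenated is easy to verify by hand: every kernel is paired with a padding that keeps the spatial size, so only the channel counts add up. The following plain-Python sketch checks this for a 28x28 input; `same_size` and `inception_out_channels` are hypothetical helpers, and the channel numbers are those of `Inception(192, 64, 96, 128, 16, 32, 32)` from this article.

```python
def same_size(size, kernel_size, padding, stride=1):
    """True if a conv/pool layer with these settings keeps the spatial size."""
    out = (size + 2 * padding - kernel_size) // stride + 1
    return out == size


def inception_out_channels(out1x1, out3x3, out5x5, pool_proj):
    """Channels after concatenating the four branch outputs."""
    return out1x1 + out3x3 + out5x5 + pool_proj


# (kernel_size, padding) of the size-critical layer in each branch
branch_layers = {
    "1x1 conv": (1, 0),
    "3x3 conv": (3, 1),
    "5x5 conv": (5, 2),
    "3x3 max pool": (3, 1),
}

preserved = {name: same_size(28, k, p) for name, (k, p) in branch_layers.items()}
channels = inception_out_channels(64, 128, 32, 32)

print(preserved, channels)
```

Note that `in3x3` and `in5x5` do not appear in the sum: they are internal widths of the reduction layers and never reach the concatenated output.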

Inception3a module

# input(192,28,28)

self.inception3a = Inception(192, 64, 96, 128, 16, 32, 32) # output(256,28,28)

Inception3b + MaxPool

# input(256,28,28)

self.inception3b = Inception(256, 128, 128, 192, 32, 96, 64) # output(480,28,28)

self.maxpool3 = nn.MaxPool2d(3, stride=2, ceil_mode=True) # output(480,14,14)

Inception4a

# input(480,14,14)

self.inception4a = Inception(480, 192, 96, 208, 16, 48, 64) # output(512,14,14)

Inception4b

# input(512,14,14)

self.inception4b = Inception(512, 160, 112, 224, 24, 64, 64) # output(512,14,14)

Inception4c

# input(512,14,14)

self.inception4c = Inception(512, 128, 128, 256, 24, 64, 64) # output(512,14,14)

Inception4d

# input(512,14,14)

self.inception4d = Inception(512, 112, 144, 288, 32, 64, 64) # output(528,14,14)

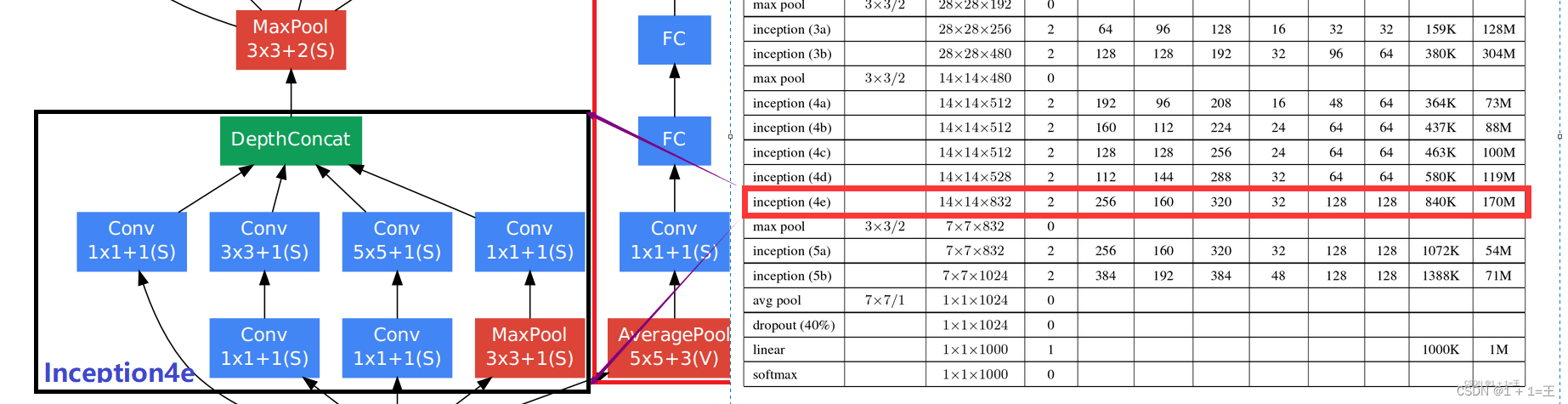

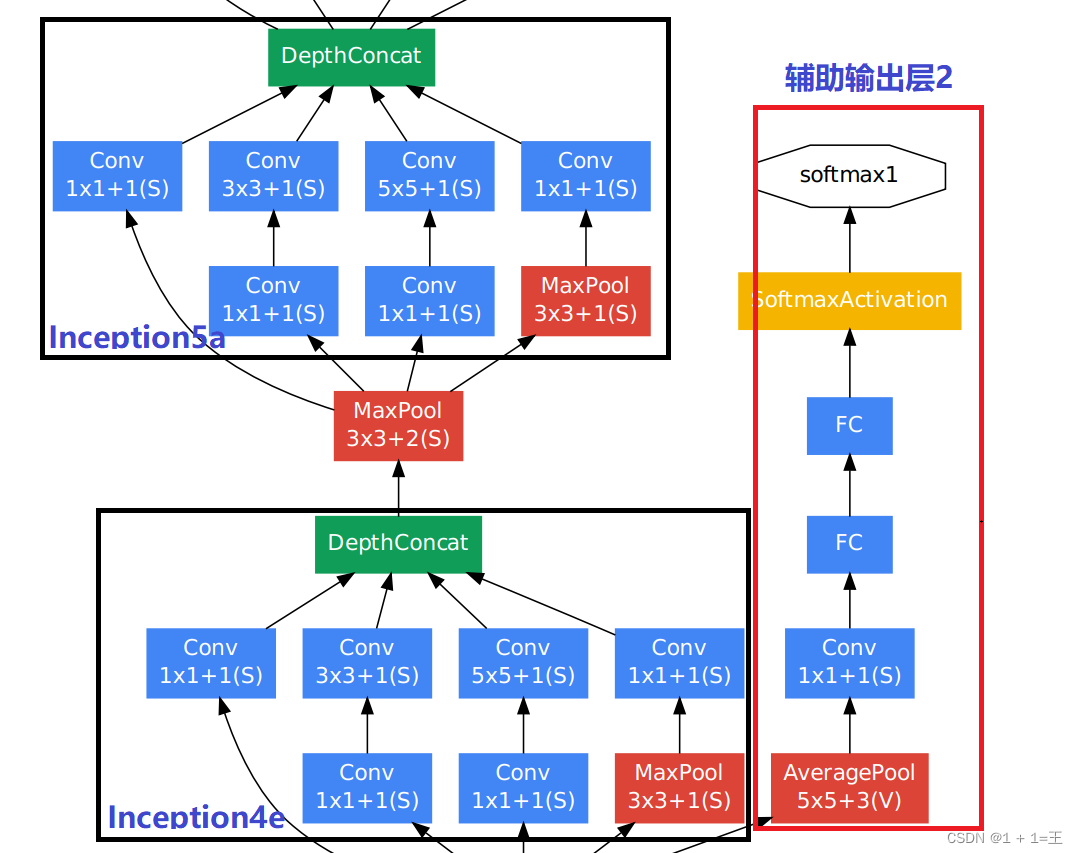

Inception4e+MaxPool

# input(528,14,14)

self.inception4e = Inception(528, 256, 160, 320, 32, 128, 128) # output(832,14,14)

self.maxpool4 = nn.MaxPool2d(3, stride=2, ceil_mode=True) # output(832,7,7)

Inception5a

# input(832,7,7)

self.inception5a = Inception(832, 256, 160, 320, 32, 128, 128) # output(832,7,7)

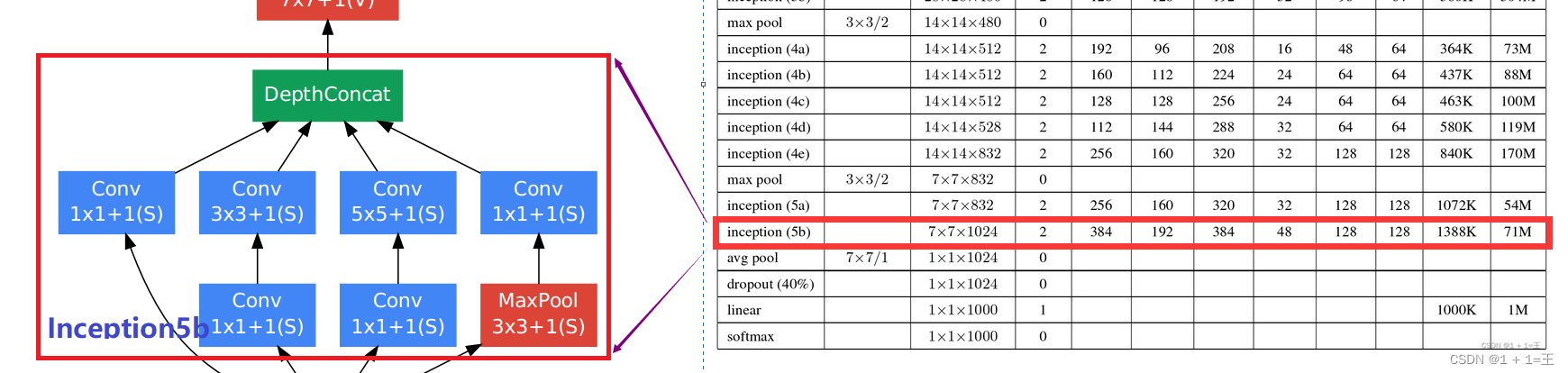

Inception5b

# input(832,7,7)

self.inception5b = Inception(832, 384, 192, 384, 48, 128, 128) # output(1024,7,7)
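Since each module's `in_channels` must equal the previous module's concatenated output, the whole stack can be sanity-checked without building the network. The sketch below is plain Python; `check_chain` is a hypothetical helper, and the tuples repeat the arguments passed to `Inception` in the article's complete listing. The max pooling layers between stages are omitted because they do not change the channel count.

```python
# (name, in_channels, out1x1, in3x3, out3x3, in5x5, out5x5, pool_proj)
CONFIGS = [
    ("inception3a", 192, 64, 96, 128, 16, 32, 32),
    ("inception3b", 256, 128, 128, 192, 32, 96, 64),
    ("inception4a", 480, 192, 96, 208, 16, 48, 64),
    ("inception4b", 512, 160, 112, 224, 24, 64, 64),
    ("inception4c", 512, 128, 128, 256, 24, 64, 64),
    ("inception4d", 512, 112, 144, 288, 32, 64, 64),
    ("inception4e", 528, 256, 160, 320, 32, 128, 128),
    ("inception5a", 832, 256, 160, 320, 32, 128, 128),
    ("inception5b", 832, 384, 192, 384, 48, 128, 128),
]


def check_chain(configs, first_in=192):
    """Return each module's output channels; raise if the chain is inconsistent."""
    expected_in = first_in
    outputs = {}
    for name, in_c, out1x1, _in3x3, out3x3, _in5x5, out5x5, pool_proj in configs:
        if in_c != expected_in:
            raise ValueError(f"{name}: declared {in_c} input channels, receives {expected_in}")
        expected_in = out1x1 + out3x3 + out5x5 + pool_proj
        outputs[name] = expected_in
    return outputs


outputs = check_chain(CONFIGS)
print(outputs)
```

A check like this catches copy-and-paste mistakes in the argument lists before any tensor is allocated.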

Layers after Inception

Auxiliary classification module

In addition to the backbone described above, GoogLeNet provides two auxiliary classification modules. Each one classifies from the output of an intermediate layer, and during training its loss is added to the final loss with a smaller weight (0.3).

As with the Inception module, we define a separate class to build the auxiliary classification module.

class AccClassify(nn.Module):
    # in_channels: number of input channels
    # num_classes: number of classes
    def __init__(self, in_channels, num_classes):
        super(AccClassify, self).__init__()  # required before registering submodules
        self.avgpool = nn.AvgPool2d(kernel_size=5, stride=3)
        self.conv = nn.Conv2d(in_channels, 128, kernel_size=1)  # output[batch, 128, 4, 4]
        self.relu = nn.ReLU(inplace=True)
        self.fc1 = nn.Linear(2048, 1024)  # 2048 = 128 * 4 * 4
        self.fc2 = nn.Linear(1024, num_classes)

    def forward(self, x):
        x = self.avgpool(x)
        x = self.conv(x)
        x = self.relu(x)
        x = torch.flatten(x, 1)
        x = F.dropout(x, 0.5, training=self.training)
        x = F.relu(self.fc1(x), inplace=True)
        x = F.dropout(x, 0.5, training=self.training)
        x = self.fc2(x)
        return x
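The input width of the first fully connected layer, 2048, follows from the pooling arithmetic on the 14x14 feature map that both auxiliary classifiers receive. A minimal plain-Python sketch, with `avgpool_out` as a hypothetical helper name:

```python
def avgpool_out(size, kernel_size, stride):
    """Output size of an unpadded average pooling layer (floor rounding)."""
    return (size - kernel_size) // stride + 1


side = avgpool_out(14, kernel_size=5, stride=3)  # spatial size after AvgPool2d(5, stride=3)
flat_features = 128 * side * side                # 128 channels after the 1x1 convolution

print(side, flat_features)
```

If the module were attached to a feature map of a different size, the `nn.Linear(2048, 1024)` layer would have to be resized accordingly.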

Auxiliary classification module 1

The first auxiliary classifier is attached after Inception4a: the output of Inception4a goes through average pooling, a 1x1 convolution, and fully connected layers to produce a classification result.

self.acc_classify1 = AccClassify(512,num_classes)

Auxiliary classification module 2

self.acc_classify2 = AccClassify(528,num_classes)
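During training the network therefore produces three sets of logits: the main output and the two auxiliary outputs. One way to combine them, sketched here in plain Python so that it runs without PyTorch, is to add the auxiliary cross-entropy losses to the main loss with weight 0.3. The function names `cross_entropy` and `googlenet_loss` and the example logits are assumptions made for illustration; in a real training loop the same sum would typically be formed from `nn.CrossEntropyLoss` values.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy of a single sample, using the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target]


def googlenet_loss(main_logits, aux1_logits, aux2_logits, target, aux_weight=0.3):
    """Main loss plus the two auxiliary losses scaled by aux_weight."""
    main = cross_entropy(main_logits, target)
    aux = cross_entropy(aux1_logits, target) + cross_entropy(aux2_logits, target)
    return main + aux_weight * aux


# made-up logits for a 3-class problem, true class 0
loss = googlenet_loss([2.0, 0.5, 0.1], [1.0, 0.8, 0.2], [1.5, 0.3, 0.4], target=0)
print(loss)
```

At inference time only the main output is used, so the auxiliary terms affect the gradients during training but not the predictions.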

Overall network structure

Complete PyTorch implementation

""" #-*-coding:utf-8-*- # @author: wangyu a beginner programmer, striving to be the strongest. # @date: 2022/7/5 18:37 """

import torch.nn as nn

import torch

import torch.nn.functional as F

class GoogLeNet(nn.Module):
    def __init__(self, num_classes=1000, aux_logits=True):
        super(GoogLeNet, self).__init__()
        self.aux_logits = aux_logits

        # input(3,224,224)
        self.front = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),    # output(64,112,112)
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),   # output(64,56,56)
            nn.Conv2d(64, 64, kernel_size=1),                        # output(64,56,56)
            nn.ReLU(inplace=True),                                   # the paper applies ReLU after every convolution
            nn.Conv2d(64, 192, kernel_size=3, stride=1, padding=1),  # output(192,56,56)
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),   # output(192,28,28)
        )

        # input(192,28,28)
        self.inception3a = Inception(192, 64, 96, 128, 16, 32, 32)       # output(64+128+32+32=256,28,28)
        self.inception3b = Inception(256, 128, 128, 192, 32, 96, 64)     # output(480,28,28)
        self.maxpool3 = nn.MaxPool2d(3, stride=2, ceil_mode=True)        # output(480,14,14)

        self.inception4a = Inception(480, 192, 96, 208, 16, 48, 64)      # output(512,14,14)
        self.inception4b = Inception(512, 160, 112, 224, 24, 64, 64)     # output(512,14,14)
        self.inception4c = Inception(512, 128, 128, 256, 24, 64, 64)     # output(512,14,14)
        self.inception4d = Inception(512, 112, 144, 288, 32, 64, 64)     # output(528,14,14)
        self.inception4e = Inception(528, 256, 160, 320, 32, 128, 128)   # output(832,14,14)
        self.maxpool4 = nn.MaxPool2d(3, stride=2, ceil_mode=True)        # output(832,7,7)

        self.inception5a = Inception(832, 256, 160, 320, 32, 128, 128)   # output(832,7,7)
        self.inception5b = Inception(832, 384, 192, 384, 48, 128, 128)   # output(1024,7,7)

        # the auxiliary classifiers are created only when requested
        if self.aux_logits:
            self.acc_classify1 = AccClassify(512, num_classes)  # attached after inception4a
            self.acc_classify2 = AccClassify(528, num_classes)  # attached after inception4d

        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output(1024,1,1)
        self.dropout = nn.Dropout(0.4)
        self.fc = nn.Linear(1024, num_classes)

    def forward(self, x):
        # input(3,224,224)
        x = self.front(x)        # output(192,28,28)
        x = self.inception3a(x)  # output(256,28,28)
        x = self.inception3b(x)
        x = self.maxpool3(x)

        x = self.inception4a(x)
        # the auxiliary outputs are computed only in training mode
        if self.training and self.aux_logits:
            classify1 = self.acc_classify1(x)

        x = self.inception4b(x)
        x = self.inception4c(x)
        x = self.inception4d(x)
        if self.training and self.aux_logits:
            classify2 = self.acc_classify2(x)

        x = self.inception4e(x)
        x = self.maxpool4(x)
        x = self.inception5a(x)
        x = self.inception5b(x)

        x = self.avgpool(x)
        x = torch.flatten(x, 1)  # flatten everything except the batch dimension
        x = self.dropout(x)
        x = self.fc(x)

        if self.training and self.aux_logits:
            return x, classify1, classify2
        return x

class Inception(nn.Module):
    '''
    in_channels: number of input channels
    out1x1: number of output channels of branch 1
    in3x3: number of input channels of the 3x3 convolution in branch 2
    out3x3: number of output channels of the 3x3 convolution in branch 2
    in5x5: number of input channels of the 5x5 convolution in branch 3
    out5x5: number of output channels of the 5x5 convolution in branch 3
    pool_proj: number of output channels of branch 4 (1x1 convolution after max pooling)
    '''
    def __init__(self, in_channels, out1x1, in3x3, out3x3, in5x5, out5x5, pool_proj):
        super(Inception, self).__init__()
        # branch 1: 1x1 convolution
        self.branch1 = nn.Sequential(
            nn.Conv2d(in_channels, out1x1, kernel_size=1),
            nn.ReLU(inplace=True)
        )
        # branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch2 = nn.Sequential(
            nn.Conv2d(in_channels, in3x3, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in3x3, out3x3, kernel_size=3, padding=1),
            nn.ReLU(inplace=True)
        )
        # branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_channels, in5x5, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in5x5, out5x5, kernel_size=5, padding=2),
            nn.ReLU(inplace=True)
        )
        # branch 4: 3x3 max pooling followed by a 1x1 projection
        self.branch4 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
            nn.Conv2d(in_channels, pool_proj, kernel_size=1),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        branch4 = self.branch4(x)
        outputs = [branch1, branch2, branch3, branch4]
        return torch.cat(outputs, 1)  # concatenate along the channel dimension

class AccClassify(nn.Module):
    def __init__(self, in_channels, num_classes):
        super(AccClassify, self).__init__()  # required before registering submodules
        self.avgpool = nn.AvgPool2d(kernel_size=5, stride=3)
        self.conv = nn.Conv2d(in_channels, 128, kernel_size=1)  # output[batch, 128, 4, 4]
        self.relu = nn.ReLU(inplace=True)
        self.fc1 = nn.Linear(2048, 1024)  # 2048 = 128 * 4 * 4
        self.fc2 = nn.Linear(1024, num_classes)

    def forward(self, x):
        x = self.avgpool(x)
        x = self.conv(x)
        x = self.relu(x)
        x = torch.flatten(x, 1)
        x = F.dropout(x, 0.5, training=self.training)
        x = F.relu(self.fc1(x), inplace=True)
        x = F.dropout(x, 0.5, training=self.training)
        x = self.fc2(x)
        return x

# print(GoogLeNet())
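The effect of the global average pooling in front of `self.fc` can be estimated with a short calculation. The sketch below is plain Python; `linear_params` is a hypothetical helper, and the alternative of flattening the 7x7x1024 feature map directly into a fully connected layer is an assumption made only for comparison, not something the code above does.

```python
def linear_params(in_features, out_features, bias=True):
    """Number of learnable parameters of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)


num_classes = 1000

# as in the listing: AdaptiveAvgPool2d((1, 1)) reduces (1024,7,7) to 1024 features
with_avgpool = linear_params(1024, num_classes)

# hypothetical alternative: flatten (1024,7,7) and classify directly
without_avgpool = linear_params(1024 * 7 * 7, num_classes)

print(with_avgpool, without_avgpool)
```

Under this assumption the pooled head needs about one million parameters, against about fifty million for the flattened variant.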

chart

边栏推荐

- 为什么使用opengaussjdbc的时候老是出现FATAL?(标签-数据库|关键词-user)

- Ali Er Mian: when does MySQL use table locks and row locks?

- The working principle of PLL. For example, how can our 8MHz crystal oscillator make MCU work at 48mhz or 72mhz

- 距离IoU损失:包围盒回归更快更好的学习(Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression)

- fio性能测试工具

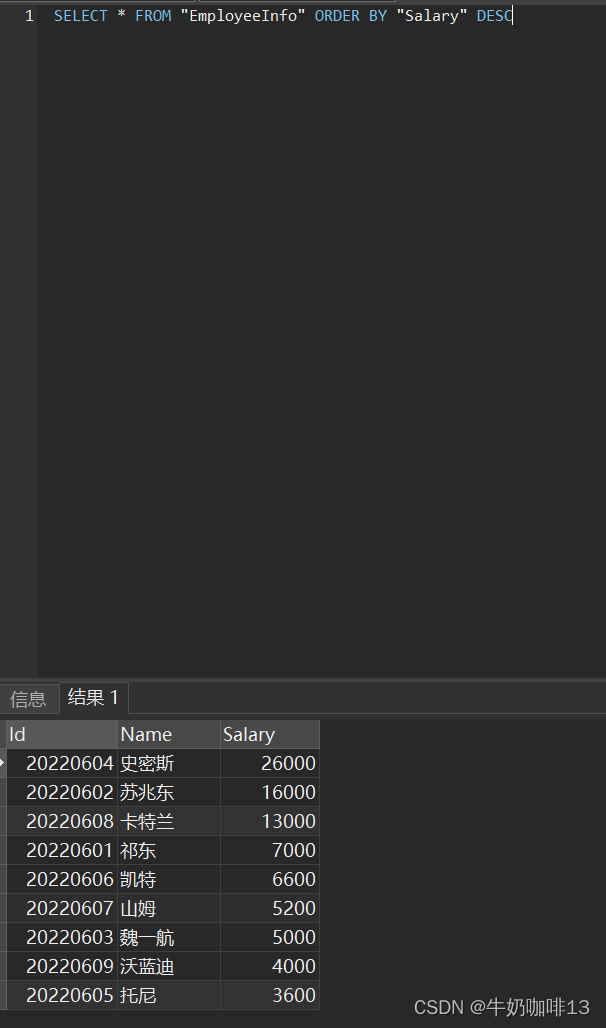

- Backup and restore of database

- 距7月30日PMP考试不足10天,应该做点什么?

- Flutter 组件的生命周期、State 管理及局部重绘 | 开发者说·DTalk

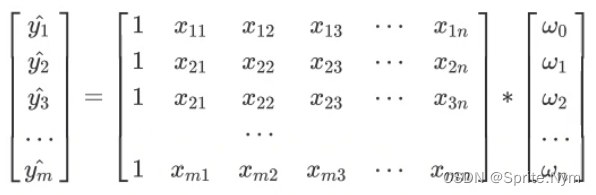

- 【笔记】线性回归

- FreeRTOS个人笔记-挂起/解挂任务

猜你喜欢

Introduction to Huawei's new version of datacom certification

UiPath Studio Enterprise 22.4 Crack

【笔记】线性回归

华为新版Datacom认证介绍

Oracle中实现删除指定查询条件的所有数据

阿里二面:MySQL 啥时候用表锁,啥时候用行锁?

阿里平头哥CPU技术生态负责人陈炜:平头哥的发展之路

C#入门系列(二十八) -- LINQ的查询语法

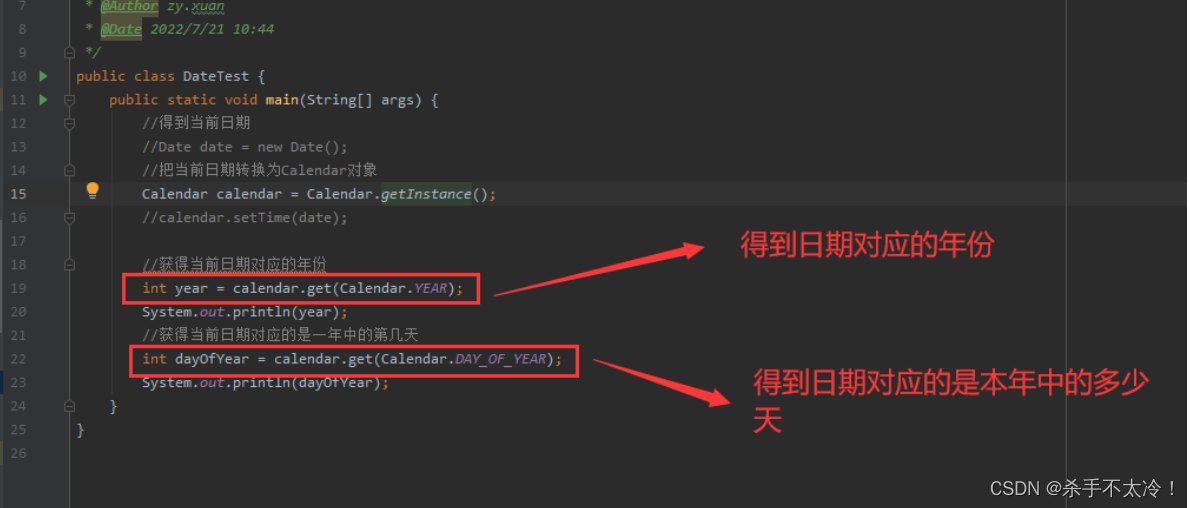

Calendar calendar class

Liupeng, vice president of copu: China's open source has approached or reached the world's advanced level in some areas

随机推荐

Calendar calendar class

go语言多返回值以及返回错误类型

博客表情大全

【Error】TypeError: expected str, bytes or os.PathLike object, not int

V self built n_ Deployment and use

COPU副主席刘澎:中国开源在局部领域已接近或达到世界先进水平

MySQL multi table query_ Cartesian product_ Inner connection_ Implicit connection

32位单片机GPIO端口电路结构以及输出模式

大屏可视化的适配方案

中国化NFT?NFR横空出世

CNCF基金会总经理Priyanka Sharma:一文读懂CNCF运作机制

Squid 代理服务之透明代理服务器架构搭建

Oralce中实现将指定列的指定内容替换为想要的内容

软件体系结构

实时疫情数据可视化分析

Life cycle, state management and local redrawing of fluent components | developers say · dtalk

Niuke-top101-bm36

一款非常棒的开源微社区轻论坛类源码

(已解决)idea编译Gradle项目提示 错误找不到符号

Complete knapsack explanation of dynamic programming knapsack problem