
Python + Scrapy: crawling Fang Tianxia

2020-11-08 08:04:00 【osc_x4ot1joy】

Scrapy explained

The common XPath expressions for selecting nodes are listed below.

| Expression | Description |
|---|---|
| extract | Scrapy selector method (not XPath syntax): converts the extracted content to Unicode strings and returns a list |
| / | Selects from the root node |
| // | Selects matching nodes anywhere in the document below the current node, regardless of their position |
| . | The current node |
| @ | Selects an attribute |
| * | Any element node |
| @* | Any attribute node |
| node() | Any type of node |
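As a rough illustration of the expressions in the table, the sketch below runs a few of them with Python's standard-library ElementTree, which supports only a subset of XPath (Scrapy's selectors accept full XPath 1.0, including text() and @attribute results). The markup in SAMPLE is hypothetical and much simpler than the real page.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; not copied from the real site.
SAMPLE = """
<div class="page">
  <ul>
    <li><a class="active" title="first">1</a></li>
    <li><a class="last" title="end">2</a></li>
  </ul>
</div>
"""

root = ET.fromstring(SAMPLE)

# ".//a": every <a> below the current node, at any depth
links = root.findall(".//a")

# "[@class='active']": keep only nodes whose attribute has this value
active = root.find(".//a[@class='active']")

# "*": any direct child element of the current node
children = root.findall("*")

link_texts = [a.text for a in links]
active_title = active.get("title")
child_tags = [c.tag for c in children]

print(link_texts)
print(active_title)
print(child_tags)
```

In a Scrapy callback the equivalent queries would go through response.xpath(...), with extract() turning the matches into a list of strings.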

Crawling Fang Tianxia: preparation

Analysis

1. Website URL: https://sh.newhouse.fang.com/house/s/.

2. Determine what data to crawl: 1) page URL: page. 2) Listing name: name. 3) Price: price. 4) Address: address. 5) Phone number: tel.

3. Analyze the web page.

After opening the URL, we can see the data we need, and also that the results are paginated.

Inspecting the page elements shows that all the data we need sits inside a single ul tag.

Expanding one of the li tags shows that the name we need is under an a tag. The text is surrounded by stray whitespace such as line feeds, which needs special handling.
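One possible way to handle that whitespace is sketched below. The helper names clean_text and clean_all are made up for illustration, and the approach differs slightly from the spider code in this article: it collapses whitespace runs to a single space rather than deleting every space.

```python
def clean_text(raw):
    """Collapse runs of whitespace (spaces, tabs, line breaks) and trim both ends."""
    return " ".join(raw.split())


def clean_all(values):
    """Clean every extracted string and drop the ones that end up empty."""
    cleaned = [clean_text(v) for v in values]
    return [v for v in cleaned if v]


# Hypothetical extracted values, shaped like the output of extract()
names = clean_all(["\n\t  Sample Garden \r\n", "\n"])
print(names)
```

Keeping a single space between words preserves names that contain spaces; switching to "".join(raw.split()) would reproduce the remove-everything behavior instead.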

The price we need is under a span tag (for example, 55000). Be careful: some listings are sold out and show no price, so watch out for this pitfall.
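A minimal sketch of guarding against the missing price: extract() returns an empty list when nothing matches, so a small helper can substitute a placeholder. The name first_or_default and the "N/A" placeholder are assumptions made for this example.

```python
def first_or_default(values, default="N/A"):
    """Return the first non-empty string in values, or default if there is none."""
    for value in values:
        text = value.strip()
        if text:
            return text
    return default


# A listing with a price, and a sold-out listing whose query matched nothing
print(first_or_default([" 55000 "]))
print(first_or_default([]))
```

Storing a placeholder keeps every item the same shape, which makes later export or filtering simpler than dealing with empty lists.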

The address and tel can be located in the same way.

The pagination element shows that the a tag of the current page has class="active". On the first page, the text of that a tag is 1, meaning page one.

Crawling Fang Tianxia: implementation steps

First, create a new Scrapy project

1) Switch to the project folder and enter scrapy startproject hotel in the terminal. hotel is the project name used in this demo; you can customize it as needed.

2) Configure the fields in items.py as needed. The analysis calls for five fields: page, name, price, address, and tel. The configuration code is as follows:

    import scrapy


    class HotelItem(scrapy.Item):
        # These fields must match the keys assigned in the spider
        page = scrapy.Field()
        name = scrapy.Field()
        price = scrapy.Field()
        address = scrapy.Field()
        tel = scrapy.Field()

3) Create the spider. Switch to the spiders folder and enter scrapy genspider house sh.newhouse.fang.com in the terminal. house is the spider name for this project and can be customized; sh.newhouse.fang.com is the domain the crawl is restricted to.

This creates the file house.py in the spiders folder.

The code and its explanation are as follows:

    import scrapy

    from ..items import HotelItem


    class HouseSpider(scrapy.Spider):
        name = 'house'
        # Restrict crawling to this domain
        allowed_domains = ['sh.newhouse.fang.com']
        # Entry page of the crawl
        start_urls = ['https://sh.newhouse.fang.com/house/s/']

        def start_requests(self):
            for url in self.start_urls:
                # Pass the callback as a function reference, without parentheses
                yield scrapy.Request(url=url, callback=self.parse)

        def parse(self, response):
            # Page number shown on the highlighted pagination link
            currentpage = response.xpath('//a[@class="active"]/text()').extract_first()
            # Text of the link with class "last", used to detect the final page
            lastpage = response.xpath('//a[@class="last"]/text()').extract_first()
            # URL of the next page; int() replaces the unsafe eval() call
            next_url = None
            if currentpage and currentpage.strip().isdigit():
                next_url = ('https://sh.newhouse.fang.com/house/s/b9'
                            + str(int(currentpage) + 1)
                            + '/?ctm=1.sh.xf_search.page.2')
            # One nlc_details block per listing. "//" searches the whole document
            # regardless of position; "/" steps down to a direct child.
            for each in response.xpath('//div[@class="nl_con clearfix"]/ul/li/div[@class="clearfix"]/div[@class="nlc_details"]'):
                item = HotelItem()
                # Start each query with ".//" so it stays inside the current listing;
                # a bare "//" would match every listing on the page.
                name = each.xpath('.//div[@class="house_value clearfix"]/div[@class="nlcd_name"]/a/text()').extract()
                price = each.xpath('.//div[@class="nhouse_price"]/span/text()').extract()
                address = each.xpath('.//div[@class="relative_message clearfix"]/div[@class="address"]/a/@title').extract()
                tel = each.xpath('.//div[@class="relative_message clearfix"]/div[@class="tel"]/p/text()').extract()
                # The keys must match the fields declared in items.py
                item['name'] = [n.replace(' ', '').replace('\n', '').replace('\t', '').replace('\r', '') for n in name]
                item['price'] = price
                item['address'] = address
                item['tel'] = tel
                item['page'] = [next_url]
                # Yield the item so Scrapy can process and export it
                yield item
            # On the last page, the link with class "last" reads "首页" (home page).
            # Otherwise keep crawling the next page until all data is collected.
            if next_url and lastpage and lastpage.strip() != '首页':
                yield scrapy.Request(url=next_url, callback=self.parse)
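The pagination rule can also be pulled out into a small pure function, which is easier to test than logic buried in the callback. The sketch below assumes the URL pattern used in the spider; the function name next_page_url and the home_label default ("首页", assumed to be the site's label for the home page link) are illustrative, not taken from the site's documentation.

```python
# URL pattern taken from the spider code; {page} is the page number
PAGE_URL = "https://sh.newhouse.fang.com/house/s/b9{page}/?ctm=1.sh.xf_search.page.2"


def next_page_url(current_text, last_text, home_label="首页"):
    """Return the URL of the next page, or None when crawling should stop.

    current_text: text of the a tag with class "active" (the current page number)
    last_text:    text of the a tag with class "last"
    """
    if current_text is None or last_text is None:
        return None
    # On the final page the "last" link is assumed to show the home-page label
    if last_text.strip() == home_label:
        return None
    try:
        current = int(current_text.strip())
    except ValueError:
        return None
    return PAGE_URL.format(page=current + 1)


print(next_page_url("1", "last page"))
print(next_page_url("5", "首页"))
```

Using int() with a guarded conversion avoids eval(), which would execute whatever text the page happens to contain.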

4) Run the spider from the spiders folder by entering scrapy crawl house in the terminal.

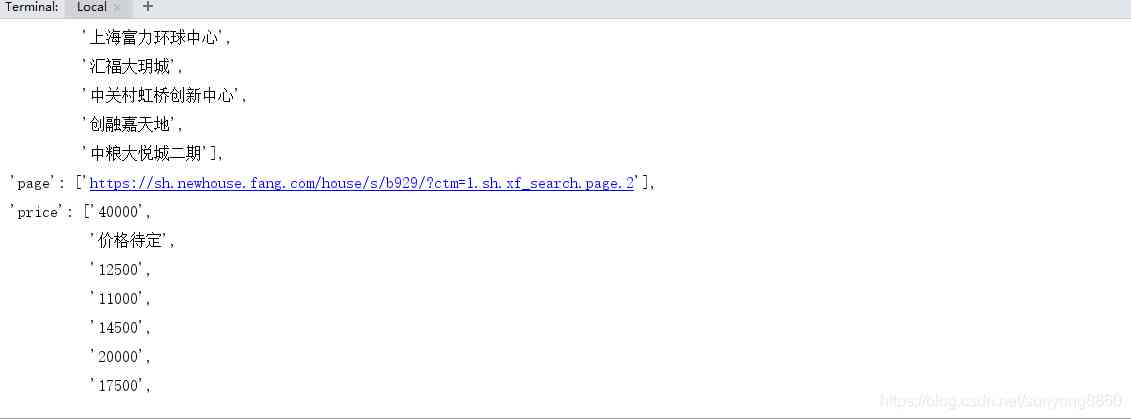

The results are shown in the following figure

The overall project structure is shown on the right. The tts folder is where I store txt data files on my side; this project does not need it.
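If the scraped data should be saved rather than only printed, one option is a Scrapy item pipeline. The sketch below is a plain class with the open_spider, close_spider, and process_item hooks that Scrapy calls on pipelines; the class name JsonLinesPipeline and the output file houses.jl are assumptions. It only receives data if the spider yields its items, and it has to be enabled through the ITEM_PIPELINES setting in settings.py.

```python
import json


class JsonLinesPipeline:
    """Write each scraped item to a file as one JSON object per line."""

    def __init__(self, path="houses.jl"):
        # Hypothetical output path; adjust to your own project layout
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider starts
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        # Called once when the spider finishes
        if self.file:
            self.file.close()

    def process_item(self, item, spider):
        # ensure_ascii=False keeps Chinese text readable in the output file
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item
```

Assuming the class lives in the project's pipelines.py, an entry such as ITEM_PIPELINES = {'hotel.pipelines.JsonLinesPipeline': 300} would enable it; the module path depends on your project name.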

If you find any errors, please contact me on WeChat: sunyong8860

On the road of Python crawling

Copyright notice

This article was written by [osc_x4ot1joy]. Please include a link to the original when reposting. Thank you.
