Ambari (VIII): Integrating Impala with Ambari (personally tested and working)

2022-06-28 07:46:00 【New objects first】

Integrating Impala with Ambari (personally tested and working)

Contents

I. Add Impala to Ambari's stack version management

II. Initialize Impala

- 2.1 Create the Impala package source

- 2.2 Restart ambari-server

- 2.3 Initialize Impala

- 2.4 Modify the HDFS configuration files

- 2.5 Start the Impala service

- 2.6 Copy the HBase jars into the Impala directory

- 2.7 Modify the /etc/default/bigtop-utils configuration

- 2.8 Verify the Impala integration

III. Problems encountered during Impala integration

I. Add Impala to Ambari's stack version management

Run the following commands to add the Impala service definition to the Ambari stack version in use:

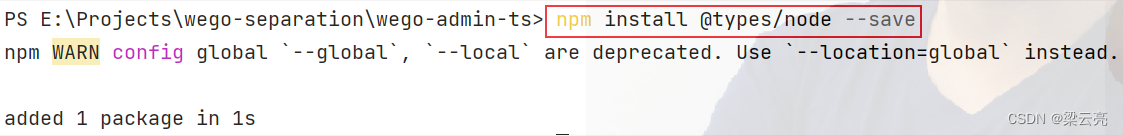

VERSION=`hdp-select status hadoop-client | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/'`

sudo git clone https://github.com/cas-bigdatalab/ambari-impala-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/IMPALA
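To see what the `sed` expression in the VERSION assignment above extracts, the sketch below applies it to a sample status line. The sample string `hadoop-client - 2.6.5.0-292` is an assumed example, not output captured from the author's cluster; the helper name `extract_hdp_version` is hypothetical.

```shell
# Extract the major.minor stack version from an `hdp-select status` line.
# extract_hdp_version is a hypothetical helper name used only for this sketch.
extract_hdp_version() {
    sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/'
}

# Assumed sample line; on a real node it comes from: hdp-select status hadoop-client
sample='hadoop-client - 2.6.5.0-292'
VERSION=$(printf '%s\n' "$sample" | extract_hdp_version)
echo "$VERSION"   # prints 2.6
```

The resulting value (here `2.6`) selects the stack directory, so the service is cloned into `.../stacks/HDP/2.6/services/IMPALA`. Note that the pattern only matches single-digit major and minor numbers.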

To integrate by compiling and packaging Ambari from source instead, place this service code in the Ambari source tree at

{ambari-source-dir}/ambari-server/src/main/resources/stacks/HDP/$VERSION/services/IMPALA

After compiling and packaging, the corresponding files are generated under

/var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/IMPALA

II. Initialize Impala

Note: if anything goes wrong during this process, check the error logs under /var/log/impala/.

2.1 Create the Impala package source

There are two ways to set up the package source:

Download from the official CDH repository

This method is already configured in IMPALA/package/scripts/impala-catalog.py, so no manual steps are needed.

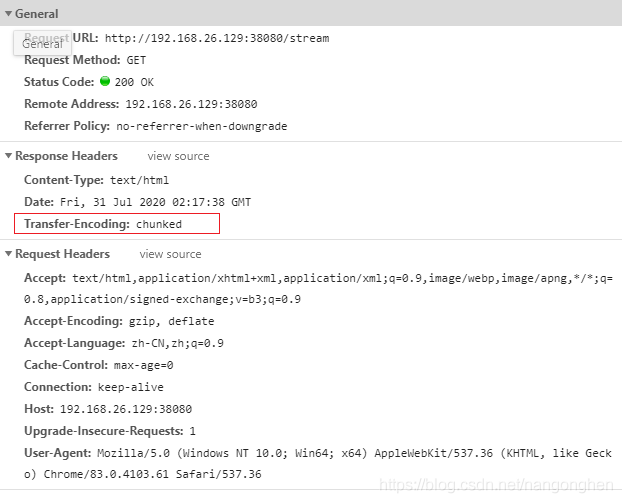

Note: confirm that the operating system named in the repository URL matches your own. If it does not, edit the URL accordingly and verify the modified link in a browser.

Advantage: no manual configuration is required, so it is simple to set up.

Disadvantage: the Impala RPM files have to be downloaded from the official site, which is slow and unreliable, so the installation may fail.

Download from a local repository

Note: with this approach, comment out the lines of code shown above for the official download method.

Download the compressed CDH package archive.

After downloading, unpack the archive into /var/www/html/ on a Linux server.

Open http://{ip}/cdh in a browser.

If the repository contents are displayed, the local source is configured correctly.

If the page does not load, check that the httpd service is installed and running:

yum install httpd

service httpd start

On the ambari-server node, add a file named impala.repo to the /etc/yum.repos.d/ directory with the following contents:

[cloudera-cdh5]

# Packages for Cloudera's Distribution for Hadoop, Version 5, on RedHat or CentOS 6 x86_64

name=Cloudera's Distribution for Hadoop, Version 5

baseurl=http://{ip}/cdh/5.14.0

gpgkey=http://{ip}/cdh/RPM-GPG-KEY-cloudera

gpgcheck=0

Then copy this file to every agent node on which Impala will be installed.
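The repository file can also be generated from a script rather than edited by hand. The following is a minimal sketch: the function name `write_impala_repo` is hypothetical, `192.0.2.10` is a placeholder address, and the demo writes to a temporary file instead of /etc/yum.repos.d/.

```shell
# Write an impala.repo file pointing at a local CDH mirror.
# $1 = IP or hostname of the HTTP server, $2 = output path.
# write_impala_repo is a hypothetical helper, not part of Ambari or CDH.
write_impala_repo() {
    repo_host=$1
    out=$2
    cat > "$out" <<EOF
[cloudera-cdh5]
# Packages for Cloudera's Distribution for Hadoop, Version 5, on RedHat or CentOS 6 x86_64
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://${repo_host}/cdh/5.14.0
gpgkey=http://${repo_host}/cdh/RPM-GPG-KEY-cloudera
gpgcheck=0
EOF
}

# Demo against a temporary file; 192.0.2.10 is a placeholder address.
tmp_repo=$(mktemp)
write_impala_repo 192.0.2.10 "$tmp_repo"
cat "$tmp_repo"
```

On a real cluster the output path would be /etc/yum.repos.d/impala.repo, and the file could then be pushed to each agent with something like `scp /etc/yum.repos.d/impala.repo root@agent-host:/etc/yum.repos.d/`, where `agent-host` stands in for your own hostnames.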

2.2 Restart ambari-server

Before restarting ambari-server, read Part III (Problems encountered during Impala integration).

# Run the following command to restart ambari-server

ambari-server restart

2.3 Initialize Impala

After a successful restart, install the Impala service from the Ambari web UI:

Actions --> Add Service --> select Impala --> Next

Recommended layout: install Impala_Catalog_Service and Impala_State_Store on the ambari-server node, and Impala_Daemons on the agent nodes.

Note: if you chose the official repository in step 2.1, this step may be slow and may fail. Retry a few times if necessary.

2.4 Modify the HDFS configuration files

In the Ambari main UI, go to HDFS --> Configs --> Advanced.

Under Custom core-site, add the following parameters:

<property>

<name>dfs.client.read.shortcircuit</name>

<value>true</value>

</property>

<property>

<name>dfs.client.read.shortcircuit.skip.checksum</name>

<value>false</value>

</property>

<property>

<name>dfs.datanode.hdfs-blocks-metadata.enabled</name>

<value>true</value>

</property>

Under Custom hdfs-site, add the following parameters:

<property>

<name>dfs.datanode.hdfs-blocks-metadata.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.block.local-path-access.user</name>

<value>impala</value>

</property>

<property>

<name>dfs.client.file-block-storage-locations.timeout.millis</name>

<value>60000</value>

</property>

After saving the changes, restart HDFS and its dependent components as prompted by the Ambari UI.
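Once HDFS has restarted, one way to confirm that the new parameters reached a node is to query them with `hdfs getconf -confKey`, which reads the client configuration on the host where it runs. The sketch below loops over the keys added above; the function name `check_hdfs_keys` is hypothetical, and the script only prints a notice when no `hdfs` client is installed.

```shell
# Print the effective value of each property added in this step.
# check_hdfs_keys is a hypothetical helper name used only for this sketch.
check_hdfs_keys() {
    for key in \
        dfs.client.read.shortcircuit \
        dfs.client.read.shortcircuit.skip.checksum \
        dfs.datanode.hdfs-blocks-metadata.enabled \
        dfs.block.local-path-access.user \
        dfs.client.file-block-storage-locations.timeout.millis
    do
        if command -v hdfs >/dev/null 2>&1; then
            # An empty value means the key is not set in this host's configuration.
            printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key" 2>/dev/null)"
        else
            printf '%s = (hdfs client not found on this host)\n' "$key"
        fi
    done
}

check_hdfs_keys
```

Running this on each Impala_Daemons node should show `true`, `false`, `true`, `impala`, and `60000` respectively if the configuration was deployed as described.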

Confirm that the /etc/impala/conf directory on each Impala node contains the following files:

core-site.xml

hdfs-site.xml

hive-site.xml

If any are missing, copy them over from /etc/hadoop/conf/:

cp /etc/hadoop/conf/*.xml /etc/impala/conf

# If the current node has no such files under /etc/hadoop/conf, copy them from another node with scp instead
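The presence check described above can be scripted so that it is easy to repeat on every node. This is a small sketch; the function name `missing_impala_confs` is hypothetical, and the demo runs against a temporary directory rather than /etc/impala/conf.

```shell
# List which of the three required client configs are missing from a directory.
# missing_impala_confs is a hypothetical helper name used only for this sketch.
missing_impala_confs() {
    conf_dir=$1
    for f in core-site.xml hdfs-site.xml hive-site.xml; do
        [ -f "$conf_dir/$f" ] || echo "$f"
    done
}

# Demo: a directory that deliberately lacks hive-site.xml.
demo_conf=$(mktemp -d)
touch "$demo_conf/core-site.xml" "$demo_conf/hdfs-site.xml"
missing_impala_confs "$demo_conf"   # prints hive-site.xml
```

On a real node, `missing_impala_confs /etc/impala/conf` prints nothing when all three files are in place.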

2.5 Start the Impala service

Start the Impala service from the Ambari UI.

A few minutes after startup, the Impala_Daemons nodes will go down. This happens because some configuration is still incomplete, which the following steps address.

2.6 Copy the HBase jars into the Impala directory

Copy the HBase jars to /usr/lib/impala/lib and create symlinks for them (run this on the Impala_Daemons nodes).

Note: choose the HBase version that matches your CDH version, otherwise version incompatibilities may occur. The version numbers below are from my environment; substitute those of your own CDH release.

cp /usr/lib/hbase/lib/hbase-*.jar /usr/lib/impala/lib/

On my node, /usr/lib/impala/lib already contained four HBase jars (hbase-annotations.jar, hbase-client.jar, hbase-common.jar, hbase-protocol.jar), so there was no need to create symlinks for those again.

# Create the required symlinks (the relative names assume the commands are run inside /usr/lib/impala/lib)

ln -s hbase-examples-1.2.0-cdh5.14.0.jar hbase-examples.jar

ln -s hbase-external-blockcache-1.2.0-cdh5.14.0.jar hbase-external-blockcache.jar

ln -s hbase-hadoop2-compat-1.2.0-cdh5.14.0.jar hbase-hadoop2-compat.jar

ln -s hbase-hadoop-compat-1.2.0-cdh5.14.0.jar hbase-hadoop-compat.jar

ln -s hbase-it-1.2.0-cdh5.14.0.jar hbase-it.jar

ln -s hbase-prefix-tree-1.2.0-cdh5.14.0.jar hbase-prefix-tree.jar

ln -s hbase-procedure-1.2.0-cdh5.14.0.jar hbase-procedure.jar

ln -s hbase-resource-bundle-1.2.0-cdh5.14.0.jar hbase-resource-bundle.jar

ln -s hbase-rest-1.2.0-cdh5.14.0.jar hbase-rest.jar

ln -s hbase-rsgroup-1.2.0-cdh5.14.0.jar hbase-rsgroup.jar

ln -s hbase-server-1.2.0-cdh5.14.0.jar hbase-server.jar

ln -s hbase-shell-1.2.0-cdh5.14.0.jar hbase-shell.jar

ln -s hbase-spark-1.2.0-cdh5.14.0.jar hbase-spark.jar

ln -s hbase-thrift-1.2.0-cdh5.14.0.jar hbase-thrift.jar
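The list of `ln -s` commands above follows a single pattern: strip the version suffix from each jar name. A loop can produce the same links for whatever CDH version is installed. This is a minimal sketch, assuming all versioned jars share one suffix; the function name `link_hbase_jars` is hypothetical, and the demo uses a temporary directory with empty placeholder files.

```shell
# Create version-less symlinks (hbase-foo.jar -> hbase-foo-<suffix>.jar) for every
# versioned HBase jar in a directory, leaving existing files or links untouched.
# link_hbase_jars is a hypothetical helper name used only for this sketch.
link_hbase_jars() {
    lib_dir=$1
    suffix=$2   # for example 1.2.0-cdh5.14.0
    (
        cd "$lib_dir" || exit 1
        for jar in hbase-*-"$suffix".jar; do
            [ -e "$jar" ] || continue              # the glob matched nothing
            link=${jar%-"$suffix".jar}.jar         # drop the version suffix
            [ -e "$link" ] || [ -L "$link" ] || ln -s "$jar" "$link"
        done
    )
}

# Demo with empty placeholder jars in a temporary directory.
demo_lib=$(mktemp -d)
touch "$demo_lib/hbase-server-1.2.0-cdh5.14.0.jar" "$demo_lib/hbase-rest-1.2.0-cdh5.14.0.jar"
link_hbase_jars "$demo_lib" 1.2.0-cdh5.14.0
ls -l "$demo_lib"
```

On a real node the call would be `link_hbase_jars /usr/lib/impala/lib 1.2.0-cdh5.14.0`, with the suffix adjusted to your CDH release. Because existing names are skipped, jars that are already present without a version suffix are not replaced.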

Why this is needed: if the HBase jars are not copied to /usr/lib/impala/lib, or the symlinks are not created correctly so that the jars are not recognized, impalad fails with an error like the following:

F0510 04:53:11.157616 25119 impalad-main.cc:64] NoClassDefFoundError: org/apache/hadoop/hbase/client/Scan

CAUSED BY: ClassNotFoundException: org.apache.hadoop.hbase.client.Scan

2.7 Modify the /etc/default/bigtop-utils configuration

Set JAVA_HOME in this file.
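As a sketch of what that change can look like, the helper below replaces any active `export JAVA_HOME=` line in a defaults file with a new one. It assumes the file uses shell `export` syntax; the function name `set_java_home` and the JDK path `/usr/java/default` are hypothetical, and the demo edits a temporary file rather than /etc/default/bigtop-utils.

```shell
# Set (or replace) the active JAVA_HOME export in a shell-style defaults file.
# set_java_home is a hypothetical helper name used only for this sketch.
set_java_home() {
    file=$1
    java_home=$2
    # Keep every line except an existing uncommented JAVA_HOME export.
    grep -v '^export JAVA_HOME=' "$file" > "$file.tmp" || true
    echo "export JAVA_HOME=$java_home" >> "$file.tmp"
    # Write back in place so the original file's permissions are kept.
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Demo on a temporary file; /usr/java/default is a placeholder JDK path.
demo_defaults=$(mktemp)
printf '%s\n' '# export JAVA_HOME' 'export JAVA_HOME=/old/jdk' > "$demo_defaults"
set_java_home "$demo_defaults" /usr/java/default
cat "$demo_defaults"
```

Use the JDK path that your cluster actually runs on, and apply the change on every node that hosts an Impala component.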

2.8 Verify the Impala integration

In the Ambari UI, the Impala components should now be integrated and running normally:

Impala_State_Store is running normally

Impala_Catalog_Service is running normally

Impala_Daemons is running normally

Run impala-shell on an Impala_Daemons node to confirm that queries work.

III. Problems encountered during Impala integration

3.1 Impala initialization fails with "Host key verification failed"

The key part of the error output is as follows:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/2.6/services/IMPALA/package/scripts/impala-daemon.py", line 38, in <module>

...

resource_management.core.exceptions.ExecutionFailed: Execution of 'scp -r [email protected]{hostname}:/etc/hadoop/conf/core-site.xml /var/lib/ambari-agent/tmp' returned 1. Host key verification failed.

Cause: an SSH problem. The current host and the host it copies from have not been set up for mutual SSH trust.

Solution: configure passwordless SSH login between the hosts.

Alternatively:

Comment out line 52 of impala_base.py

Comment out line 14 of impala_daemon.py

# self.configureHDFS(env)

Note: after the initialization script finishes, run the following command:

cp /etc/hadoop/conf/*.xml /etc/impala/conf

# If the current node has no such files under /etc/hadoop/conf, copy them from another node with scp instead

Make sure the following configuration files are present on each node where Impala is installed:

core-site.xml

hdfs-site.xml

hive-site.xml

边栏推荐

- Hash slot of rediscluster cluster cluster implementation principle

- pip 更新到最新的版本

- PLC -- Notes

- Localization SoC development plan

- Makefile

- Section VII starting principle and configuration of zynq

- Soft exam -- software designer -- afternoon question data flow diagram DFD

- Section 9: dual core startup of zynq

- XML serialization backward compatible

- Installing redis on Linux

猜你喜欢

Path alias specified in vite2.9

flex布局

![[ thanos源码分析系列 ]thanos query组件源码简析](/img/e4/2a87ef0d5cee0cc1c1e1b91b6fd4af.png)

[ thanos源码分析系列 ]thanos query组件源码简析

安全培训是员工最大的福利!2022新员工入职安全培训全员篇

Kubelet garbage collection (exiting containers and unused images) source code analysis

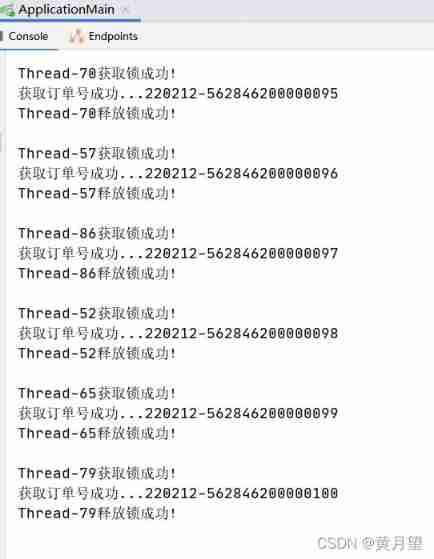

Redis implements distributed locks

什么是EC鼓风机(ec blower fan)?

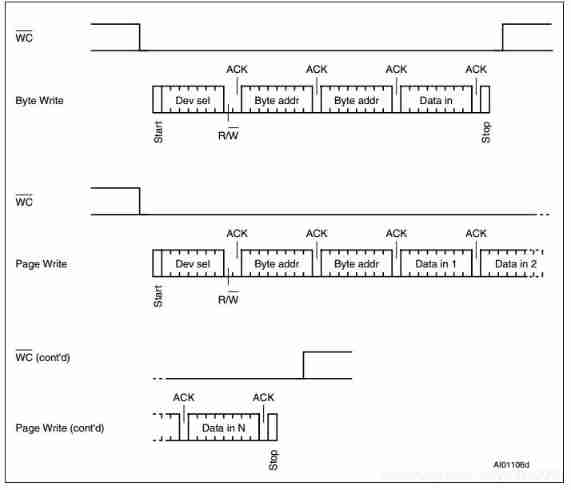

ZYNQ_ IIC read / write m24m01 record board status

golang gin框架进行分块传输

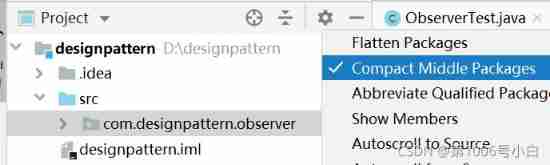

Idea package together, using compact middle packages to solve &

随机推荐

Hash slot of rediscluster cluster cluster implementation principle

Is it safe for flush to open an account online

R language ggmap

Co process, asyncio, asynchronous programming

Section 5: zynq interrupt

ABAP skill tree

Real time database - Notes

Sentinel mechanism of redis cluster

7-2 Finnish wooden chess structure Sorting

GPIO configuration of SOC

Redis one master multi slave cluster setup

7-1 understand everything

以动态规划的方式求解最长回文子串

HJ明明的随机数

HJ字符串排序

Es data export CSV file

股票炒股注册开户靠谱吗?安全吗?

HJ score ranking

Cloud native (to be updated)

Installing redis on Linux